Tsjarly

- Jul 24, 2024

- Joined Oct 3, 2023

- 1 best answer

Hi @Tsjarly

No SW workaround, unfortunately. Since the 9782 has a bandpass filter at 940nm, sunlight will disturb it. That is to be expected and can only be resolved by placing an IR-cut filter over the sensors. But this will disable active stereo; you still have ToF, which should be enough.

Thanks,

Jaka

Hi @Tsjarly,

Not yet, but we plan to support it. We're not sure whether stereo postprocessing filters can be applied to ToF depth. These filters were designed for stereo vision and work on disparity (not depth). But it's worth a try. We also found this paper, but we haven't tested it yet: https://www.researchgate.net/publication/256434960_Denoising_Strategies_for_Time-of-Flight_Data

Hi @Tsjarly

Looks like a HW issue, not directly fixable. Could you please write to support@luxonis.com so we can replace it?

Thanks and sorry for the inconvenience,

Jaka

Hi @Tsjarly

This one: luxonis/depthai-python/blob/main/examples/calibration/calibration_dump.py

Thanks,

Jaka

@SamiUddin @Tsjarly We have made an initial script for on-host alignment, which is more accurate than the previous one (that I posted above). Could you give it a try (feedback welcome)?
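For readers unfamiliar with what on-host alignment involves, here is a minimal sketch of the general technique. It is not the Luxonis script mentioned above. It assumes a pinhole model without distortion, and the function name `align_depth_to_rgb` and its parameters (`K_tof`, `K_rgb`, `R`, `t`) are hypothetical: each valid depth pixel is back-projected to 3D, moved into the RGB camera frame, and projected into the RGB image.

```python
import numpy as np

def align_depth_to_rgb(depth, K_tof, K_rgb, R, t, rgb_shape):
    """Reproject a ToF depth map into the RGB camera's image plane.

    depth     : (H, W) depth in metres, 0 marks invalid pixels
    K_tof     : 3x3 intrinsics of the ToF camera (assumed undistorted)
    K_rgb     : 3x3 intrinsics of the RGB camera (assumed undistorted)
    R, t      : rotation (3x3) and translation (3,) from ToF to RGB frame
    rgb_shape : (height, width) of the RGB image
    """
    v, u = np.nonzero(depth > 0)
    z = depth[v, u].astype(np.float64)

    # Back-project ToF pixels to 3D points in the ToF camera frame.
    x = (u - K_tof[0, 2]) * z / K_tof[0, 0]
    y = (v - K_tof[1, 2]) * z / K_tof[1, 1]
    pts = np.stack([x, y, z], axis=0)

    # Move the points into the RGB camera frame.
    pts_rgb = R @ pts + np.asarray(t, dtype=np.float64).reshape(3, 1)
    in_front = pts_rgb[2] > 0
    pts_rgb = pts_rgb[:, in_front]
    z_rgb = pts_rgb[2]

    # Project into the RGB image and keep pixels that land inside it.
    u2 = np.round(K_rgb[0, 0] * pts_rgb[0] / z_rgb + K_rgb[0, 2]).astype(int)
    v2 = np.round(K_rgb[1, 1] * pts_rgb[1] / z_rgb + K_rgb[1, 2]).astype(int)
    height, width = rgb_shape
    inside = (u2 >= 0) & (u2 < width) & (v2 >= 0) & (v2 < height)
    u2, v2, z_rgb = u2[inside], v2[inside], z_rgb[inside]

    # When several points hit the same pixel, keep the nearest one.
    aligned = np.full((height, width), np.inf)
    np.minimum.at(aligned, (v2, u2), z_rgb)
    aligned[np.isinf(aligned)] = 0.0
    return aligned
```

The intrinsics and extrinsics would come from the device calibration. Holes left by the forward projection are not filled in this sketch.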

Hi @Tsjarly and @SamiUddin,

The plan is to release it together with SR PoE (cam that has ToF), so in 2 weeks. We might also write a python script that demos the alignment on the host, but I can't promise that atm.

Thanks, Erik

Hi @Tsjarly,

Currently not supported, but we will soon expose a DepthAlign node, which will be used for ToF alignment as well. For now, you can align it on the host; demo here:

luxonis/depthai-python 94b3177

Hi @Tsjarly

Tsjarly Is there a particular reason why the Q matrix isn't exposed directly?

Not sure, tbh.

jakaskerl if you find a way to calculate c_x' yourself.

c_x' should also be accessible from the calibration. It's the first row, last column of the second intrinsic matrix. So the whole matrix should be accessible, just not via the API, but manually through the calibration on the EEPROM.
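As a rough illustration of how those values fit together, the sketch below builds a Q matrix by hand. It assumes the layout documented for OpenCV's `stereoRectify` with a horizontal stereo pair, and it assumes the inputs are rectified values. The helper names `build_q` and `disparity_to_point` and the numbers in the usage note are hypothetical.

```python
import numpy as np

def build_q(f, cx, cy, cx_prime, baseline):
    """Assemble a 4x4 disparity-to-depth matrix (OpenCV stereoRectify layout).

    f        : rectified focal length in pixels
    cx, cy   : principal point of the first (reference) camera in pixels
    cx_prime : principal point x of the second camera in pixels (c_x')
    baseline : distance between the cameras, in the desired output unit
    """
    # In this convention Tx is the x-translation to the second camera,
    # which is negative for a left-reference horizontal pair.
    tx = -baseline
    return np.array([
        [1.0, 0.0, 0.0, -cx],
        [0.0, 1.0, 0.0, -cy],
        [0.0, 0.0, 0.0, f],
        [0.0, 0.0, -1.0 / tx, (cx - cx_prime) / tx],
    ])

def disparity_to_point(q, u, v, disparity):
    """Reproject one pixel (u, v) with its disparity to a 3D point."""
    x, y, z, w = q @ np.array([u, v, disparity, 1.0])
    return np.array([x, y, z]) / w
```

For example, `disparity_to_point(build_q(800.0, 640.0, 400.0, 640.0, 0.075), 640, 400, 10.0)` evaluates the principal-point pixel at a disparity of 10 pixels, with made-up intrinsics and a 7.5cm baseline.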

Thanks,

Jaka

Hi @Tsjarly,

I also updated the docs, which describe this in detail: https://docs.luxonis.com/projects/hardware/en/latest/pages/guides/depth_accuracy/#accuracy-oscillation

calibrate.py is (almost) the same; the main difference was the factory calibration process, so if you have already self-calibrated your cameras, it wouldn't change.

Hi @Tsjarly ,

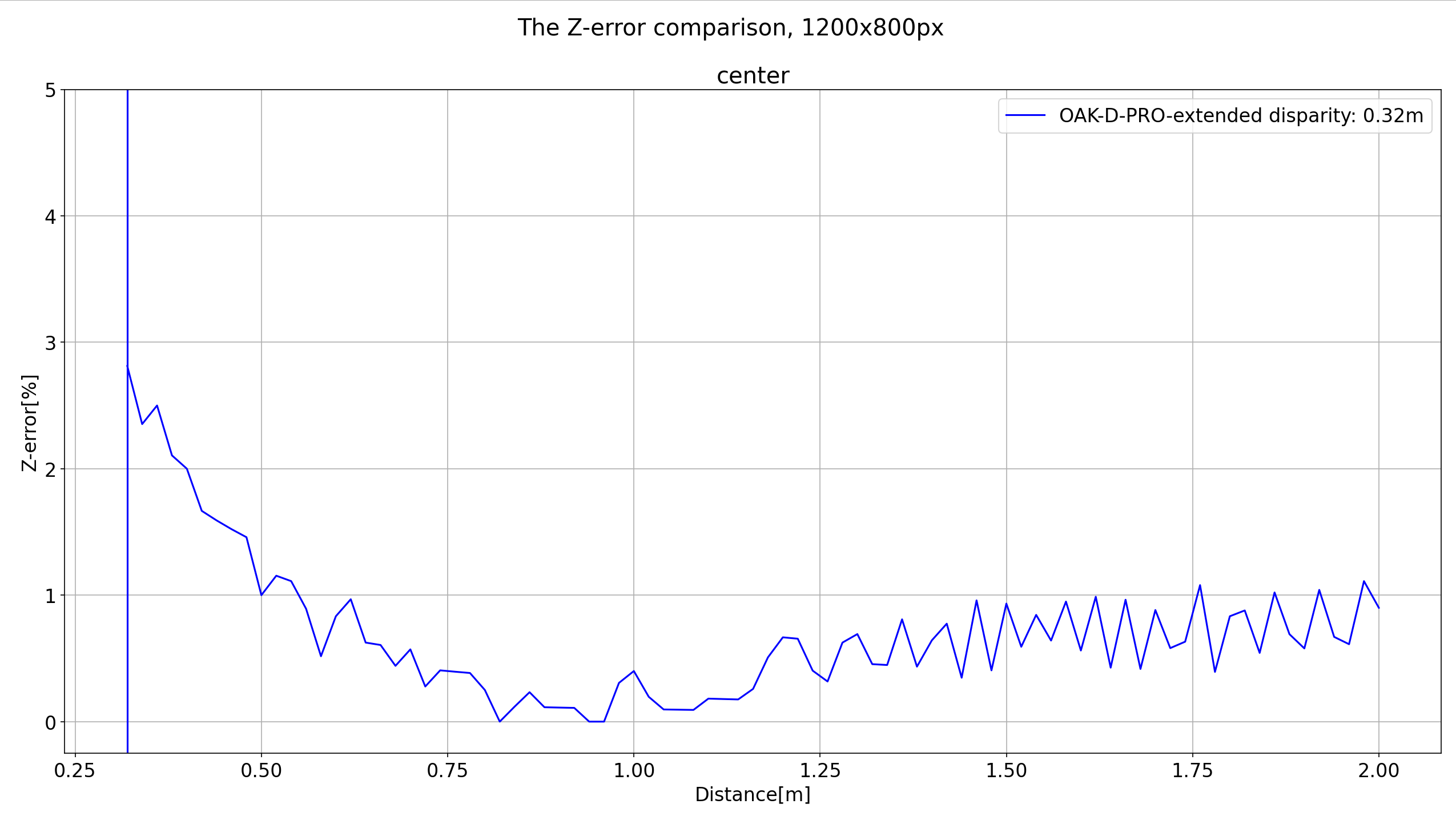

The graph you screenshotted is from the first unit that came off the new calibration rig. After that, we tested 10 factory-calibrated cameras (calibrated with the new rig we set up at the factory) and took the median depth accuracy as what we advertise as our depth accuracy (instead of just taking the best one).

We also tested 10 cameras that were calibrated with the previous system; the average error was about 20% at 4m, and now it's well below 1/5 of that (median error is 2% below 4m).

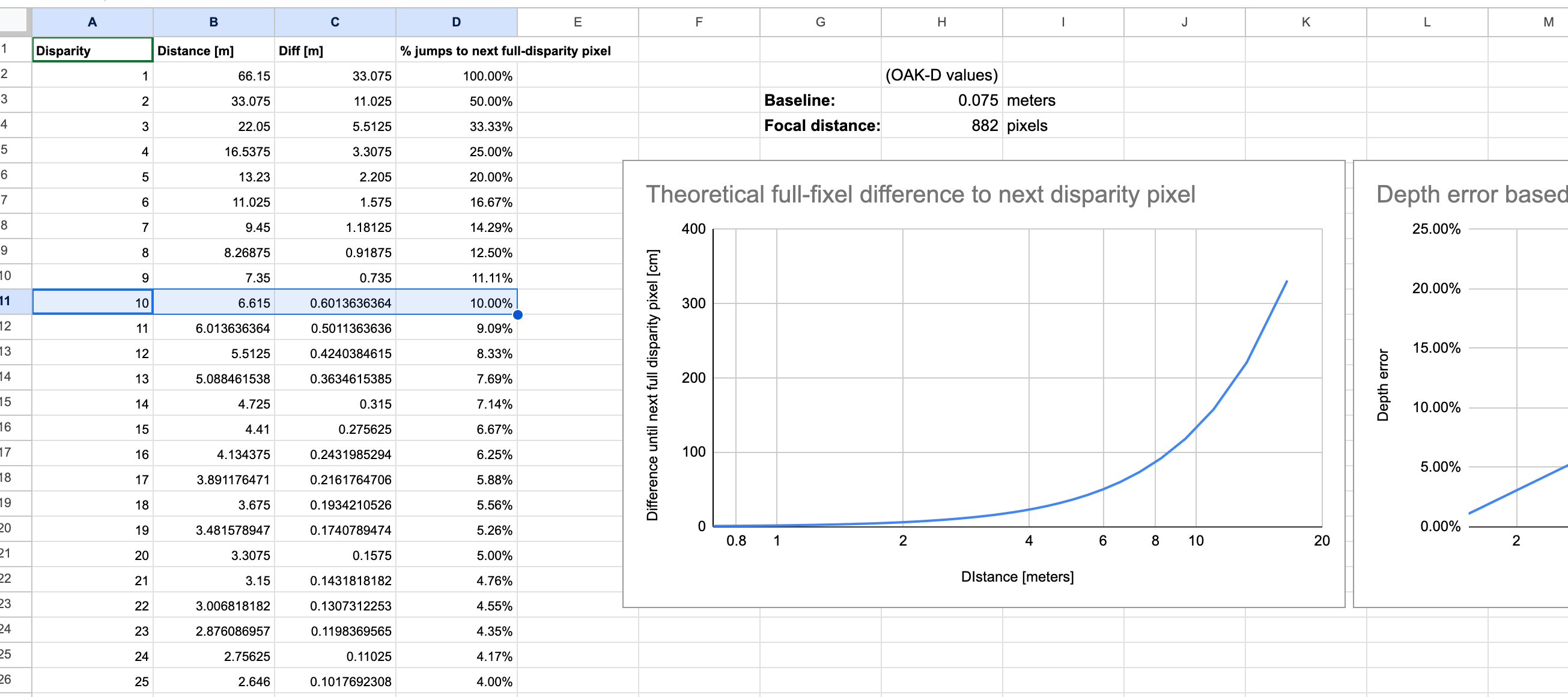

Regarding the oscillation effect: I'll be writing docs about it. Essentially, it's a disparity-pixel effect. An "error valley" (where accuracy is spot-on) at 6.6m would likely equate to 10 disparity pixels, while the valley at 7.5m would be 9 pixels of disparity (a 9-pixel difference between the left and right images, which corresponds to 7.5m depth).

Here are the theoretical calculations for an OAK-D (they will vary from camera to camera due to differences in focal length):
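A rough sketch of the relation behind these valleys, not the original figures: depth is focal length times baseline divided by disparity, so whole-pixel disparities map to a discrete set of depths. The focal length (882.5 px) and baseline (7.5cm) below are assumed values for an OAK-D at 1280-pixel width, and the function names are hypothetical. With these assumptions, 10 pixels of disparity corresponds to about 6.6m.

```python
FOCAL_PX = 882.5    # assumed focal length in pixels (varies per camera)
BASELINE_M = 0.075  # assumed stereo baseline in metres

def depth_from_disparity(disparity_px, focal_px=FOCAL_PX, baseline_m=BASELINE_M):
    """Depth in metres for a given disparity in pixels."""
    return focal_px * baseline_m / disparity_px

def quantized_depth(true_depth_m, focal_px=FOCAL_PX, baseline_m=BASELINE_M):
    """Depth reported if disparity is rounded to whole pixels (no subpixel)."""
    ideal_disparity = focal_px * baseline_m / true_depth_m
    measured_disparity = max(1, round(ideal_disparity))
    return focal_px * baseline_m / measured_disparity

if __name__ == "__main__":
    # Depths at which the rounding error vanishes ("valleys").
    for d in range(8, 12):
        print(f"{d} px -> {depth_from_disparity(d):.2f} m")
    # Between valleys, the reported depth snaps to the nearest valley.
    for z in (6.6, 6.8, 7.0, 7.2):
        print(f"true {z:.1f} m -> reported {quantized_depth(z):.2f} m")
```

Subpixel disparity makes the steps finer, but the same pattern would remain at the subpixel step size.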

purely disparity to depth computation

This as well, but the major thing is camera intrinsic estimation and distortion model estimation (especially for wide-FOV cameras). So the disparity itself will already come out different.

Thoughts?

Thanks, Erik

Hi @Tsjarly

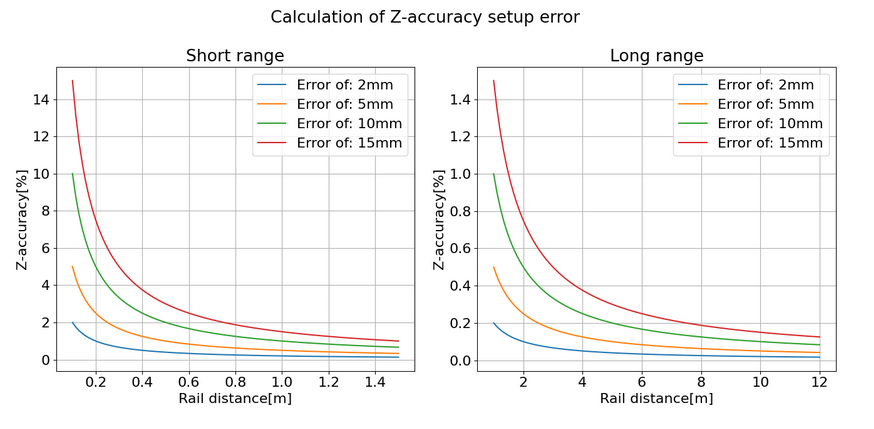

It's more accurate since there are more reference points for depth calculation. As you can see in the graphs, depth accuracy improves at closer distances, up to the point where either the stereo baseline is too wide to allow overlap between the two cameras, OR the rail we use for testing is not accurate enough.

Thanks,

Jaka