jakaskerl

Thank you for your help. Calibration has helped and the values are now correct, so I have moved on to working with point clouds. I have written my own script that uses the ToF and color camera nodes: the ToF node captures depth, and the color camera is used to display the PCD in color. Here are the relevant parts of my code, in the same order as they appear in my script:

# Create ToF node

**tof = pipeline.create(dai.node.ToF)**

**tofConfig = tof.initialConfig.get()**

**tofConfig.enableOpticalCorrection = True**

**tofConfig.enablePhaseShuffleTemporalFilter = True**

**tofConfig.phaseUnwrappingLevel = 5**

**tofConfig.phaseUnwrapErrorThreshold = 300**

**tof.initialConfig.set(tofConfig)**

# Camera intrinsic parameters (ensure I am using the correct calibration values)

fx = 494.35192765 # Update with my calibrated value

fy = 499.48351759 # Update with my calibrated value

cx = 321.84779556 # Update with my calibrated value

cy = 218.30442303 # Update with my calibrated value

**intrinsic = o3d.camera.PinholeCameraIntrinsic(width=640, height=480, fx=fx, fy=fy, cx=cx, cy=cy)**
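
For context, here is a minimal sketch of how pinhole intrinsics like these map a depth pixel to a 3D point. It is plain Python written only for illustration, not Open3D's actual implementation, and `backproject` is a hypothetical helper name. It assumes depth is already in meters and ignores lens distortion.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Map pixel (u, v) with depth in meters to a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Calibrated values from the post above
fx, fy = 494.35192765, 499.48351759
cx, cy = 321.84779556, 218.30442303

# A pixel at the principal point lands on the optical axis
center = backproject(cx, cy, 1.0, fx, fy, cx, cy)

# The top-left pixel at 1 m depth lands to the left of and above the axis
corner = backproject(0, 0, 1.0, fx, fy, cx, cy)

print(center)
print(corner)
```

Since these values are tied to a specific image resolution (640x480 in the `PinholeCameraIntrinsic` call above), the depth and color frames passed to Open3D need to match that resolution, or the intrinsics need to be rescaled accordingly.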

This is how I generate the colored point cloud:

# Convert depth image to Open3D format

**depth_o3d = o3d.geometry.Image(depth_map)**

**color_o3d = o3d.geometry.Image(cv2.cvtColor(color_frame_resized, cv2.COLOR_BGR2RGB))**

# Generate and save colored point cloud

**rgbd_image = o3d.geometry.RGBDImage.create_from_color_and_depth(**

**color_o3d, depth_o3d, depth_scale=1000.0, depth_trunc=3.0, convert_rgb_to_intensity=False**

**)**

**color_pcd = o3d.geometry.PointCloud.create_from_rgbd_image(rgbd_image, intrinsic)**

**color_pcd_filename = os.path.join(output_directory, f'color_pcd_{capture_count}.pcd')**

**o3d.io.write_point_cloud(color_pcd_filename, color_pcd)**
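
As a rough illustration of what `depth_scale=1000.0` and `depth_trunc=3.0` are doing in the call above: the raw depth is divided by the scale (millimeters to meters here), and anything beyond the truncation distance is set to 0. As far as I understand, zero-depth pixels then produce no point in the cloud. The sketch below is plain Python, `scale_and_truncate` is a hypothetical helper, and the handling of values exactly at the truncation distance is an assumption.

```python
def scale_and_truncate(depth_raw, depth_scale=1000.0, depth_trunc=3.0):
    """Convert a raw depth value to meters and zero it beyond depth_trunc."""
    depth_m = depth_raw / depth_scale
    # Assumption: values at or beyond the limit are zeroed
    if depth_m >= depth_trunc:
        return 0.0
    return depth_m


# Hypothetical raw ToF readings in millimeters
samples_mm = [500, 1500, 2999, 4200]
result = [scale_and_truncate(d) for d in samples_mm]
print(result)
```

With these settings, anything the ToF sensor reports beyond 3 m is dropped, so points that appear far behind the scene in the viewer are not coming from depths above that limit.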

I capture the images and save them to a file path. Then I run a Python script that uses Open3D.

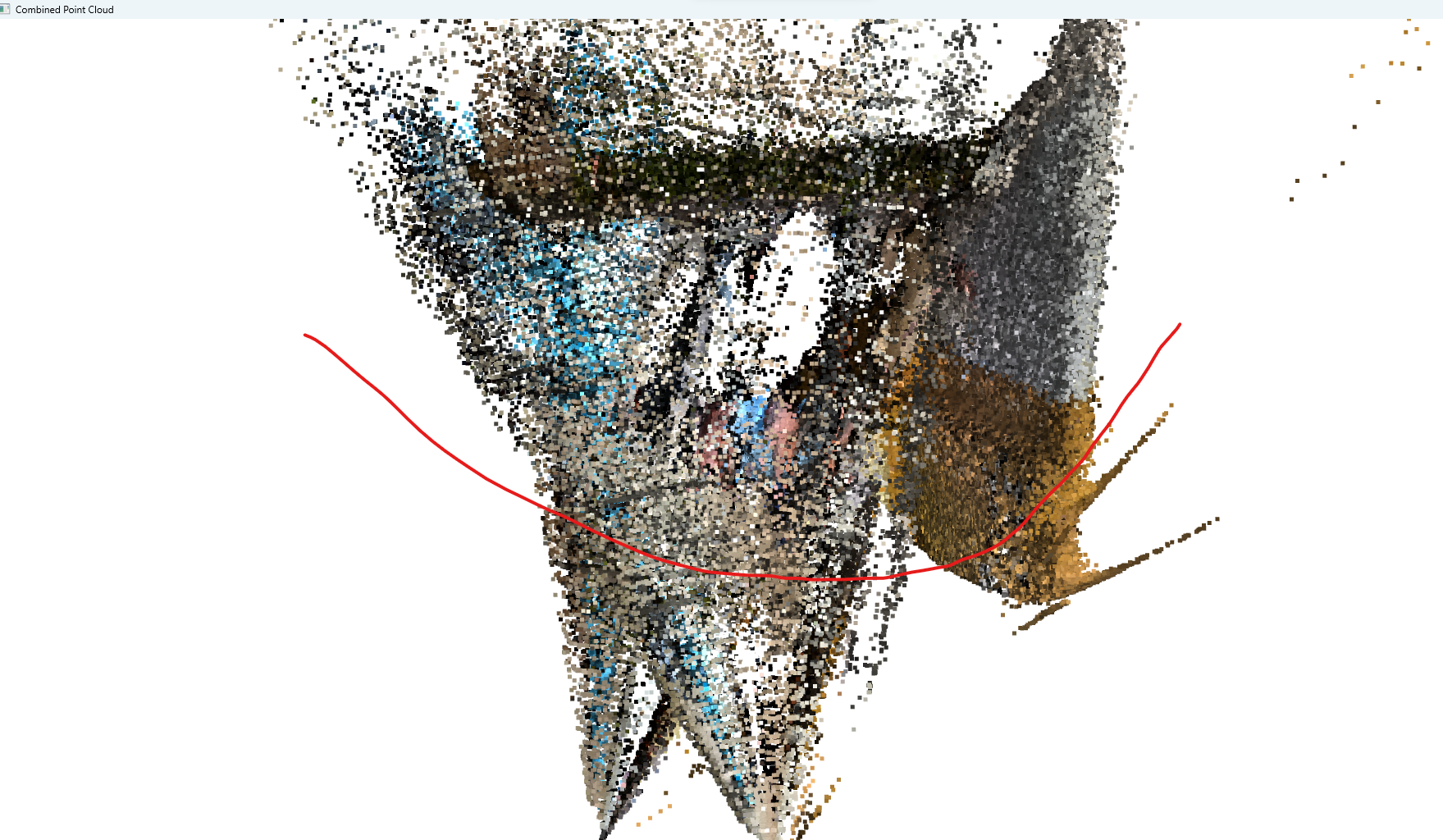

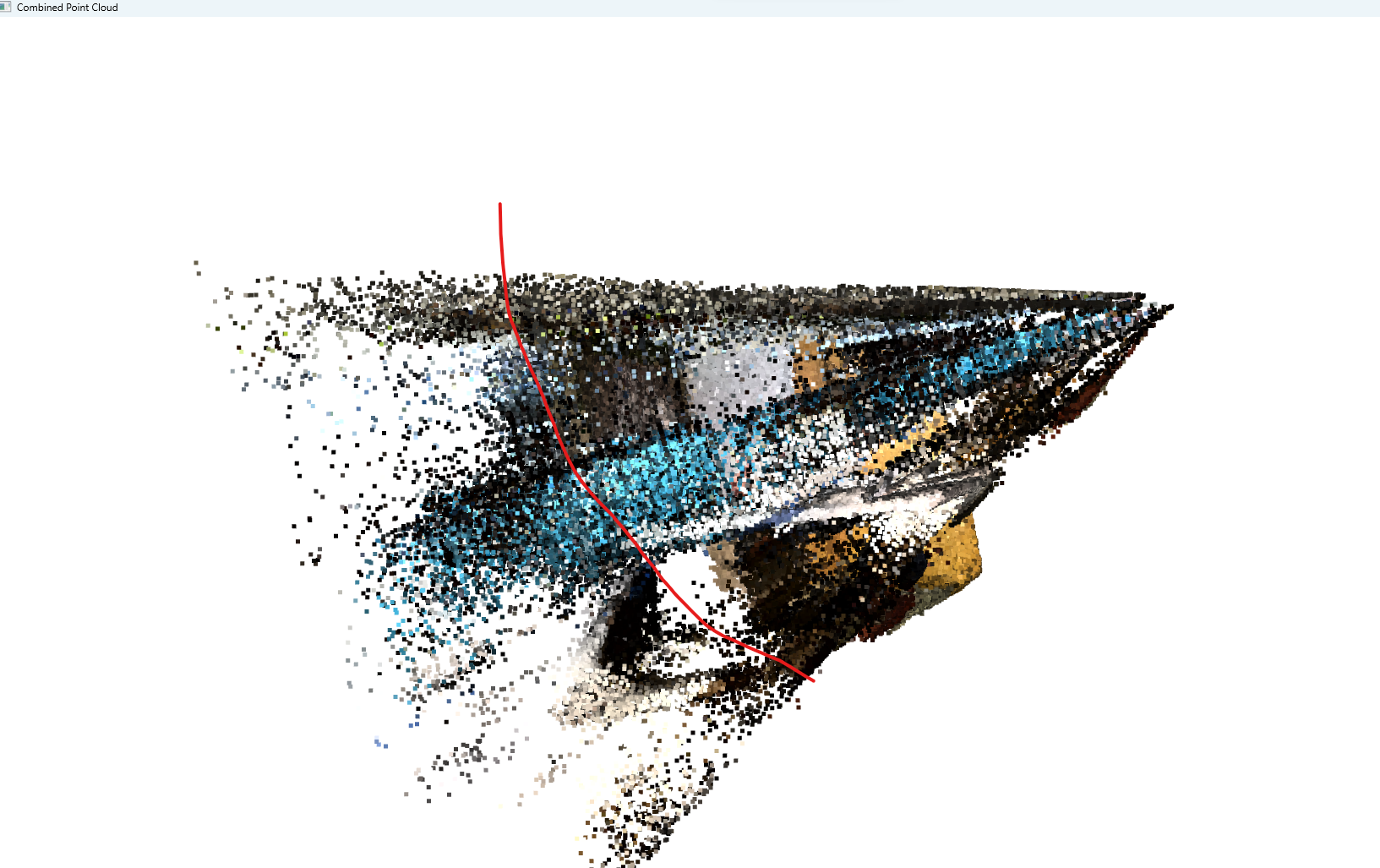

It loads the point cloud from that file path and visualizes it. My question is still about the sparseness. I have tried downsampling, ICP and global registration, changing the voxel size, and much more, but I am still getting unnecessary noise when I view the PCD. Yes, it looks better with calibration, but I am a tad lost as to why it is still doing this:

Anything before the red line should not be there. It seems to be displaying the camera position and just distributing the points. Does the FOV also have an effect on this? In the second picture, anything after the red line should not be there. The points are trying to piece everything together but seem to be having trouble doing so.
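
To show what I mean by downsampling with a voxel size, here is a minimal sketch of the idea in plain Python: points are grouped into cubic cells and each cell is replaced by the average of its points. `voxel_downsample` is a hypothetical helper written for illustration; it is not Open3D's `voxel_down_sample`, and the sample points are made up.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same voxel with their centroid."""
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [
        tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for pts in buckets.values()
    ]


# Three nearby surface points plus one isolated stray point (made-up data)
points = [
    (0.01, 0.01, 1.01),
    (0.02, 0.01, 1.02),
    (0.03, 0.02, 1.03),
    (0.52, 0.41, 0.23),
]
down = voxel_downsample(points, voxel_size=0.05)
print(len(down), down)
```

In this sketch the three nearby points collapse into one, but the isolated point survives unchanged because it sits alone in its own voxel. That would be consistent with what I am seeing: changing the voxel size thins the cloud but does not remove the stray points.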