Ever wished your robot had the vision of an eagle? Well, meet the OAK-D Long Range, a smart 3D camera that's bringing super sight to projects worldwide. Let’s dive into how it achieves this, especially with its 15cm stereo baseline.

The 15cm Stereo Baseline: A Further Perspective

With a 15cm stereo baseline, the OAK-D Long Range can perceive further objects more accurately. In the Configuring Stereo Depth documentation, we mentioned that baseline distance (linearly) correlates with accuracy, so if we increase the baseline distance by 2x, you will get 2x less error at the same distance.

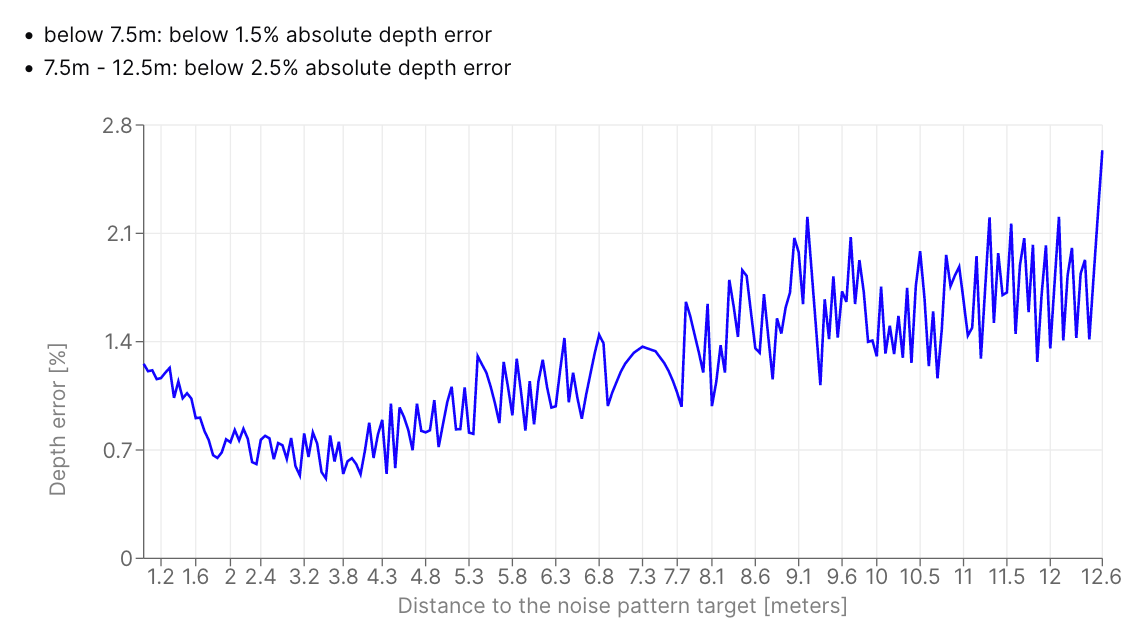

Depth Accuracy

On our Depth Accuracy documentation page, we have more in-depth (pun intended) information about each device's accuracy, along with graphs and explanations on why the accuracy graphs are the way they are. Here's the depth accuracy (with relation to the distance) of our OAK-D Long Range:

This accuracy was obtained with the default lens with 82° horizontal FOV. We'd get better long-range accuracy if we reduced FOV (a different lens or a central-cropping frame instead of ISP downscaling).

Pointclouds!

Graphs are great, but they're useless if the camera isn't applicable in real-world applications. We have recorded some footage on a vibration-heavy tractor (a 30 years old IMT 549), and unfiltered pointclouds look quite decent.

Using rerun.io (well, our flavour of it) we visualized RGB-D frames we got directly from the device and there was no on-host postprocessing (khm.. like RealSense™ cameras operate). So we'd get even smoother surfaces if we added host-compute-intensive pointcloud processing.

Reproduce: The Code

We're using depthai-sdk for the pipeline construction and replaying, and the depthai-viewer for visualization. We could also use native Rerun (here's an example with Rerun), but pointclouds wouldn't be colorized. Before running the code you'll need to install the requirements:

python3 -mpip install depthai-sdk==1.14 depthai-viewer==0.2.2

After installing the requirements and connecting an OAK device to your computer (which does stereo reconstruction), you can run the following code:

from depthai_sdk import OakCamera

import depthai as dai

import depthai_viewer as viewer

import subprocess

import sys

import cv2

# Download and run the tractor recording

with OakCamera(replay="tractor-oak-d-lr") as oak:

# Run & initialize the depthai_viewer

try:

subprocess.Popen([sys.executable, "-m", "depthai_viewer", "--viewer-mode"], stdout=subprocess.PIPE, stderr=subprocess.PIPE)

except subprocess.TimeoutExpired:

pass

viewer.init("Depthai Viewer")

viewer.connect()

oak.replay.set_fps(10)

cam_b = oak.create_camera('CAM_B')

cam_c = oak.create_camera('CAM_C')

# Downscale the 1920x1200 frames to 1280x800

oak.replay.resize('cam_c', (1280, 800))

oak.replay.resize('cam_b', (1280, 800))

nn = oak.create_nn('mobilenet-ssd', cam_c)

stereo = oak.create_stereo(left=cam_b, right=cam_c)

stereo.node.setOutputSize(640, 400)

stereo.config_stereo(confidence=215, lr_check=True, extended=True, subpixel=True, subpixel_bits=5)

stereo.config_stereo(align=cam_c)

stereo.node.setDepthAlign(dai.CameraBoardSocket.CAM_C)

# On-device post processing for stereo depth

config = stereo.node.initialConfig.get()

stereo.node.setPostProcessingHardwareResources(4, 4)

config.postProcessing.speckleFilter.enable = True

config.postProcessing.speckleFilter.speckleRange = 50

config.postProcessing.temporalFilter.enable = False

config.postProcessing.spatialFilter.enable = True

config.postProcessing.spatialFilter.holeFillingRadius = 1

config.postProcessing.spatialFilter.numIterations = 1

config.postProcessing.thresholdFilter.minRange = 400

config.postProcessing.thresholdFilter.maxRange = 15000

config.postProcessing.brightnessFilter.maxBrightness = 255

stereo.node.initialConfig.set(config)

q = oak.queue([

stereo.out.depth.set_name('depth'),

stereo.out.rectified_right.set_name('rr'),

nn.out.main.set_name('nn'),

]).configure_syncing(enable_sync=True, threshold_ms=250).get_queue()

# oak.show_graph()

oak.start()

calibData = oak.device.readCalibration()

intrinsics = calibData.getCameraIntrinsics(dai.CameraBoardSocket.CAM_C, dai.Size2f(640, 400))

viewer.log_pinhole("CAM_C/transform/Color", child_from_parent=intrinsics, width=640, height=400, timeless=True)

viewer.log_rigid3("CAM_C/transform", child_from_parent=([0, 0, 0], [1, 0, 0, 0]), xyz="RDF", timeless=True)

while oak.running():

packets = q.get()

depth = packets['depth']

viewer.log_image("Right", packets['rr'].frame)

color = packets['nn'].frame

color = cv2.pyrDown(color) # Downscale to match 640x400 depth frame

viewer.log_image("CAM_C/transform/Color/Image", color[:, :, ::-1]) # BGR to RGB

viewer.log_depth_image("CAM_C/transform/Color/Depth", depth.frame, meter=1e3)

oak.poll()

Note that on the first run, depthai-sdk will download the tractor-oak-d-lr recording, which is about 300MB:

Let us know your thoughts in the comments!