
Hi,

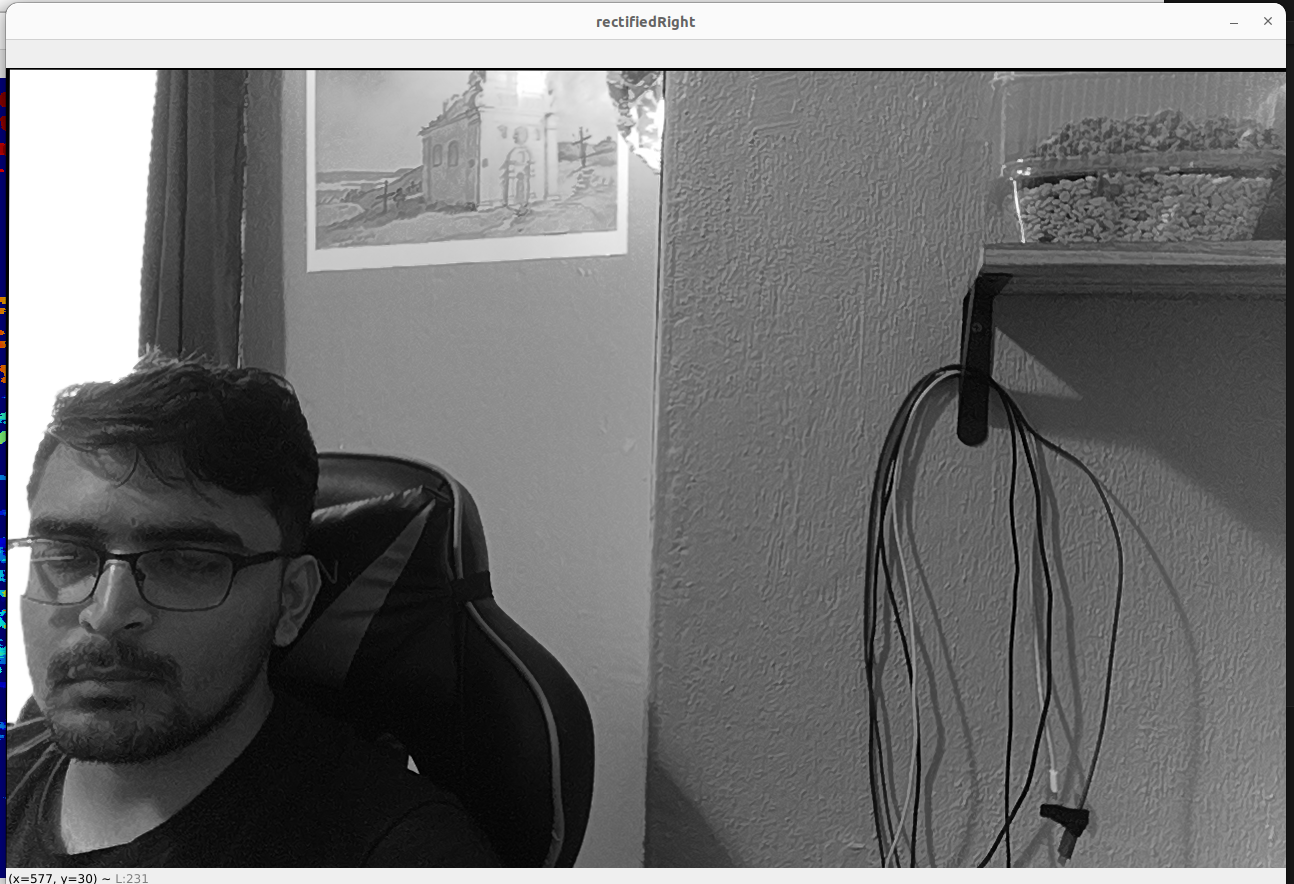

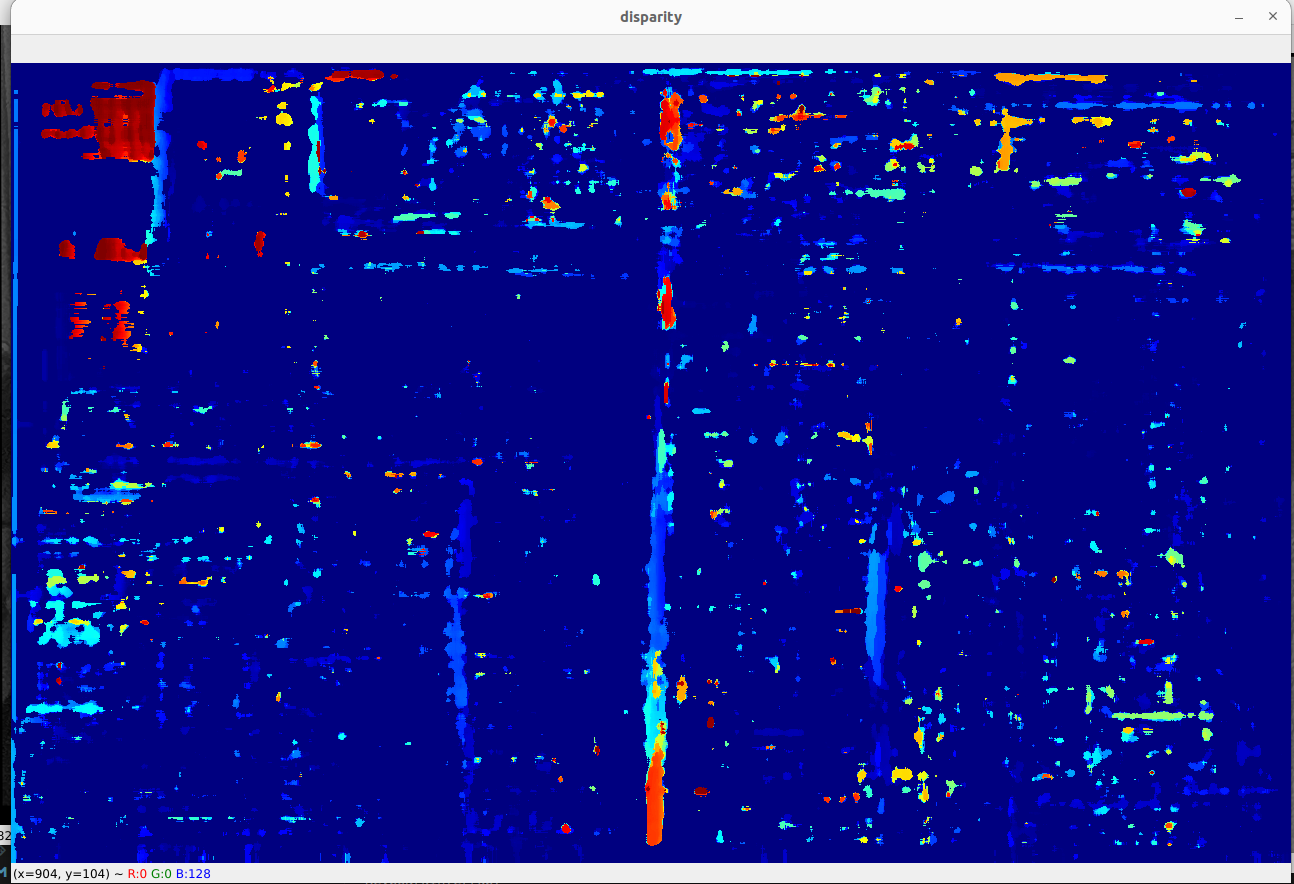

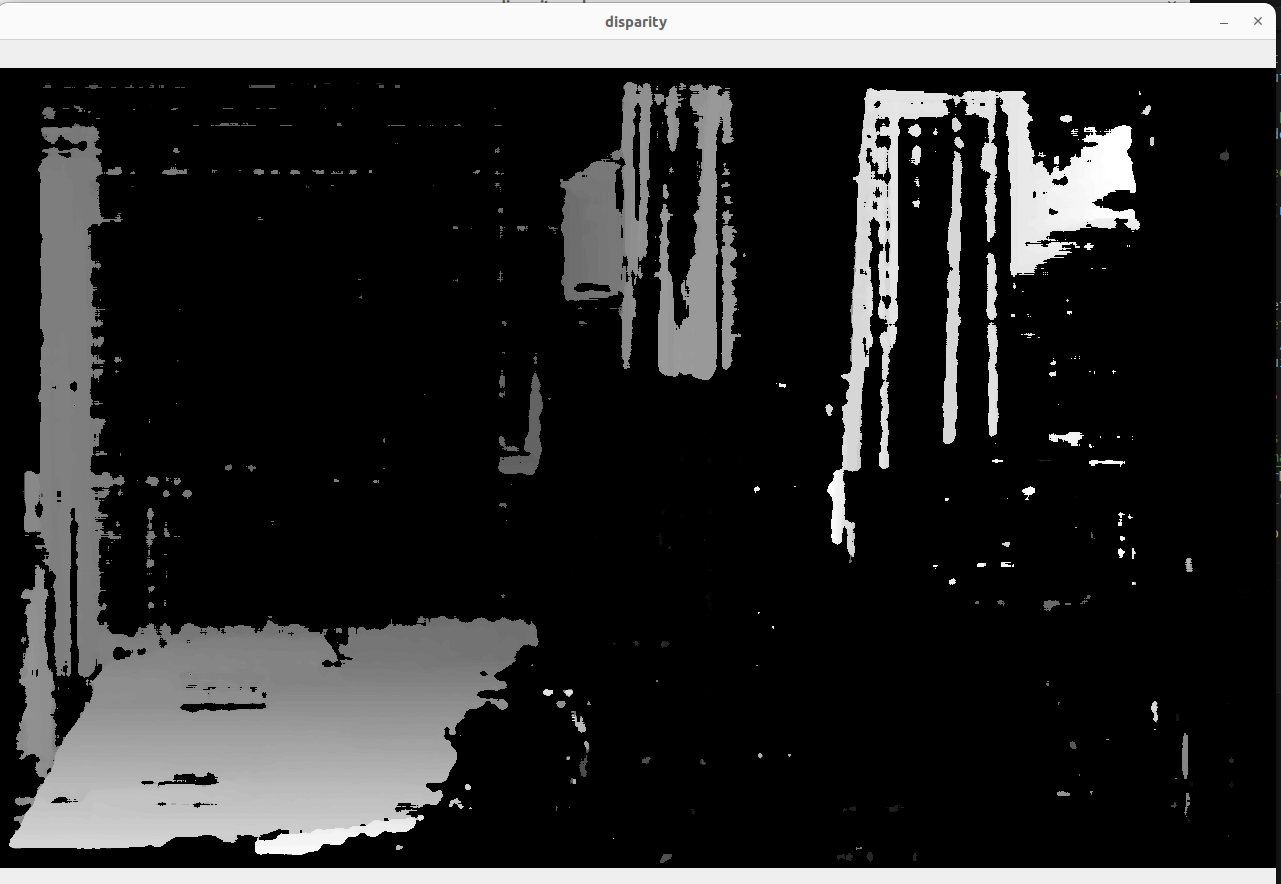

I have a setup of two OV9282 cameras on an OAK-FFC-3P. I tried calibrating the cameras with `calibrate.py`, and the results below do not include any distortion coefficients. Also, after I pressed Esc at the end, no calibration results were saved to a file. My questions are: why is the calibration result incomplete, with no distortion coefficients and no rectification matrix? Is there no distortion in these cameras? And why is the script not saving the calibration data?

```
shivam157@ubuntu:~/depthai$ python3 calibrate.py -s 3.8 --board OAK-FFC-3P -nx 13 -ny 7 -dbg
Using dataset path: dataset
Starting image processing
<------------Calibrating right ------------>
INTRINSIC CALIBRATION
Reprojection error of right: 0.1450288774499517
<------------Calibrating left ------------>
INTRINSIC CALIBRATION
Reprojection error of left: 0.18671330931332783
<-------------Extrinsics calibration of right and left ------------>
Reprojection error is 0.1806323559925981
<-------------Epipolar error of right and left ------------>
Original intrinsics ....
L [[909.05184795   0.         644.25179619]
 [  0.         909.33572655 383.70089729]
 [  0.           0.           1.        ]]
R: [[906.07261965   0.         625.2752514 ]
 [  0.         906.30579217 399.46458918]
 [  0.           0.           1.        ]]
Intrinsics from the getOptimalNewCameraMatrix/Original ....
L: [[906.07261965   0.         625.2752514 ]
 [  0.         906.30579217 399.46458918]
 [  0.           0.           1.        ]]
R: [[906.07261965   0.         625.2752514 ]
 [  0.         906.30579217 399.46458918]
 [  0.           0.           1.        ]]
Average Epipolar Error is : 0.1324884918914444
Displaying Stereo Pair for visual inspection. Press the [ESC] key to exit.
Reprojection error threshold -> 1.1111111111111112
right Reprojection Error: 0.145029
/home/shivam157/depthai/calibrate.py:1029: DeprecationWarning: Conversion of an array with ndim > 0 to a scalar is deprecated, and will error in future. Ensure you extract a single element from your array before performing this operation. (Deprecated NumPy 1.25.)
  calibration_handler.setDistortionCoefficients(stringToCam[camera], cam_info['dist_coeff'])
/home/shivam157/depthai/calibrate.py:1068: DeprecationWarning: Conversion of an array with ndim > 0 to a scalar is deprecated, and will error in future. Ensure you extract a single element from your array before performing this operation. (Deprecated NumPy 1.25.)
  calibration_handler.setCameraExtrinsics(stringToCam[camera], stringToCam[cam_info['extrinsics']['to_cam']], cam_info['extrinsics']['rotation_matrix'], cam_info['extrinsics']['translation'], specTranslation)
Reprojection error threshold -> 1.1111111111111112
left Reprojection Error: 0.186713
[]
py: DONE.
shivam157@ubuntu:~/depthai$
```

The following is the board file:

    {
        "board_config":
        {
            "name": "OAK-FFC-3P",
            "revision": "R1M0E1",
            "cameras":{
                "CAM_C": {
                    "name": "right",
                    "hfov": 71.86,
                    "type": "mono",
                    "extrinsics": {
                        "to_cam": "CAM_B",
                        "specTranslation": {
                            "x": 14.5,
                            "y": 0,
                            "z": 0
                        },
                        "rotation":{
                            "r": 0,
                            "p": 0,
                            "y": 0
                        }
                    }
                },
                "CAM_B": {
                    "name": "left",
                    "hfov": 71.86,
                    "type": "mono"
                }
            },
            "stereo_config":{
                "left_cam": "CAM_B",
                "right_cam": "CAM_C"
            }
        }}
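
As a rough cross-check of the board file against the log, the `hfov` value can be compared with the field of view implied by the calibrated focal lengths. The sketch below uses the ideal pinhole relation `hfov = 2 * atan(width / (2 * fx))`. It assumes an image width of 1280 px, which the log does not state (it is only suggested by the principal point near x = 640), and it ignores lens distortion, so treat the result as approximate. `hfov_degrees` is a hypothetical helper, not part of `calibrate.py`.

```python
import math


def hfov_degrees(fx: float, width_px: int) -> float:
    """Horizontal field of view (degrees) of an ideal pinhole camera."""
    return math.degrees(2.0 * math.atan(width_px / (2.0 * fx)))


# fx values copied from the "Original intrinsics" matrices in the log.
FX_LEFT = 909.05184795
FX_RIGHT = 906.07261965

# Assumption: 1280 px image width (not stated anywhere in the log).
ASSUMED_WIDTH_PX = 1280

# hfov declared for both cameras in the board file.
BOARD_HFOV_DEG = 71.86

for name, fx in (("left", FX_LEFT), ("right", FX_RIGHT)):
    implied = hfov_degrees(fx, ASSUMED_WIDTH_PX)
    print(f"{name}: implied hfov {implied:.2f} deg, board file {BOARD_HFOV_DEG} deg")
```

Under these assumptions both cameras come out a little over 70 degrees, which is in the same range as the 71.86 in the board file, so the intrinsics themselves look plausible.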

I think this is related to the NumPy version, but I am not sure whether OpenCV 4.9 will still work if I downgrade NumPy. The warnings point at the lines in `calibrate.py` that set the distortion coefficients and the extrinsic parameters. What should I do?
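
For context, my understanding is that this NumPy warning appears when an array with one or more dimensions (for example a single row of a column-shaped array) is implicitly converted to a Python scalar. The sketch below shows one way to turn such arrays into plain Python floats before passing them on. The helper names and the sample values are made up for illustration, and I have not confirmed that this is what `calibrate.py` needs or that it explains why no file is saved.

```python
import numpy as np


def to_float_list(values):
    """Flatten any array-like into a flat list of Python floats."""
    return np.asarray(values, dtype=float).ravel().tolist()


def to_nested_list(matrix):
    """Convert a 2-D array-like into a nested list of Python floats."""
    return np.asarray(matrix, dtype=float).tolist()


# Made-up example: distortion coefficients stored as a column vector.
# Each row here is itself a 1-element array, not a scalar.
dist_coeff = np.array([[0.1], [-0.2], [0.0], [0.0], [0.05]])

flat = to_float_list(dist_coeff)
print(flat)                       # flat list of plain floats
print(type(flat[0]).__name__)     # float

# Made-up example: a 3x3 rotation matrix as nested lists of floats.
rotation = to_nested_list(np.eye(3))
print(rotation)
```

Since the message is a `DeprecationWarning` and not an exception, the calls on lines 1029 and 1068 should still have run on this NumPy version, so the missing output file may have a different cause.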