CUSTOM OCR TRAINING

Dear Erik:

Yes~ you are right.

The attached picture shows text-recognition-0012 results, which you couldn't see in the Google video:

As we know, the EAST-based OCR runs at 1080p resolution and detects all of the text on the whole sheet of paper.

However, our customer wants to set their own ROI, be able to adjust its size, and finally run OCR only inside that ROI.

For example, like Cognex OCR (ROI shown in red):

We have three ideas:

1. Use cv2.IMREAD_UNCHANGED to crop the picture, then display it with imshow.
2. Change the aspect ratio (stretch the image).
3. Apply letterboxing to the image.

Ideas 2 and 3 follow this tutorial:

https://docs.luxonis.com/projects/api/en/latest/tutorials/maximize_fov/

We would also consider using SpatialLocationCalculator to create the ROI and combine it with text recognition:

https://docs.luxonis.com/projects/api/en/latest/components/nodes/spatial_location_calculator/
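Ideas 2 and 3 differ in how the ROI is fitted to the recognition network's input (text-recognition-0012 takes a 120x32 image): stretching resizes each axis by its own factor, while letterboxing scales both axes by the same factor and pads the rest. The letterbox arithmetic, as a plain-Python sketch (the function name is hypothetical):

```python
def letterbox_params(src_w, src_h, dst_w, dst_h):
    """Fit a src_w x src_h ROI into a dst_w x dst_h input without distortion.

    Returns (new_w, new_h, pad_left, pad_top): the size the ROI is scaled to
    and the padding placed before it; the remaining area is padding too.
    """
    scale = min(dst_w / src_w, dst_h / src_h)  # same factor on both axes
    new_w = int(round(src_w * scale))
    new_h = int(round(src_h * scale))
    pad_left = (dst_w - new_w) // 2
    pad_top = (dst_h - new_h) // 2
    return new_w, new_h, pad_left, pad_top

# A 400x200 ROI into a 120x32 input: scaled to 64x32, 28 px padding on the left
print(letterbox_params(400, 200, 120, 32))  # (64, 32, 28, 0)
```

Stretching would instead resize the same 400x200 ROI straight to 120x32, scaling x by 0.3 and y by 0.16, which squashes the characters horizontally less than vertically; whether that hurts recognition has to be tested on real samples.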

Could you advise which method best matches our goal?

All the best~~

Hey @king, so if I understand correctly, you do not need actual text detection, only text recognition? And you want to set a custom ROI?

If that is so, you could use our ImageManip node: set the ROI to a rectangle by providing its points, then resize the crop so that it has the correct shape for the recognition network's input. Then link this ImageManip node to a NeuralNetwork node that performs text recognition, as in the OCR example.
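The recognition network's output still has to be decoded on the host. As far as I can tell from gen2-ocr, the text-recognition-0012 output is reshaped to (30, 1, 37): 30 time steps, each with scores for the 37 symbols `0123456789abcdefghijklmnopqrstuvwxyz#`, where `#` is the CTC blank, and it is decoded greedily. A pure-Python sketch of that greedy decode, assuming that layout (the function names are hypothetical):

```python
# Assumed alphabet, as in gen2-ocr; '#' is treated as the CTC blank
ALPHABET = "0123456789abcdefghijklmnopqrstuvwxyz#"

def ctc_greedy_decode(scores, alphabet=ALPHABET, blank="#"):
    """Greedy CTC decode of one text line.

    scores: T time steps, each a sequence of len(alphabet) class scores
    (e.g. the (30, 1, 37) output reshaped to 30 x 37).
    Picks the best class per step, collapses repeats, then drops blanks.
    """
    chars = []
    prev = None
    for step in scores:
        idx = max(range(len(step)), key=lambda i: step[i])
        if idx != prev and alphabet[idx] != blank:
            chars.append(alphabet[idx])
        prev = idx
    return "".join(chars)

def one_hot(ch):
    """Fake score vector with all weight on one symbol (for the demo only)."""
    return [1.0 if c == ch else 0.0 for c in ALPHABET]

print(ctc_greedy_decode([one_hot(c) for c in "ss#ett"]))  # set
print(ctc_greedy_decode([one_hot(c) for c in "a#a"]))     # aa
```

The blank between the two `a` steps is what lets a real double letter survive the repeat-collapsing step.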

So you could try editing the part of the OCR example that builds the crop regions from detections, so that it uses a custom-defined ROI instead of ROIs detected on the whole image. This means you could skip the first NN node and keep the rest the same.
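`ImageManipConfig.setCropRect(xmin, ymin, xmax, ymax)` takes normalized coordinates (later in this thread it is called with `(0, 0.5, 1, 1)`). A small helper to turn a customer-adjustable pixel ROI into those values; the name is hypothetical, and on the device the result would be passed as `cfg.setCropRect(*rect)`, followed by `cfg.setResize(120, 32)` as gen2-ocr does before text-recognition-0012:

```python
def roi_to_crop_rect(x, y, w, h, frame_w, frame_h):
    """Convert a pixel ROI (top-left x, y, width w, height h) into the normalized
    (xmin, ymin, xmax, ymax) values that ImageManipConfig.setCropRect() takes.

    The ROI is clamped to the frame, so a user-resized ROI that is dragged
    partly outside the image still yields a valid crop.
    """
    xmin = min(max(x, 0), frame_w)
    ymin = min(max(y, 0), frame_h)
    xmax = min(max(x + w, xmin), frame_w)
    ymax = min(max(y + h, ymin), frame_h)
    return xmin / frame_w, ymin / frame_h, xmax / frame_w, ymax / frame_h

# A 960x540 ROI in the middle of a 1920x1080 frame
print(roi_to_crop_rect(480, 270, 960, 540, 1920, 1080))  # (0.25, 0.25, 0.75, 0.75)
```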

Does this make sense?

Dear Matija:

Thanks for your suggestion about the custom ROI~ Our target is not only text recognition, but also setting an ROI and running OCR inside it.

We will try your ImageManip node approach and combine it with text recognition first. Hopefully it gives us good results.

All the best~~

Hey, you could manually increase the values on these two lines so that a larger area is cropped: https://github.com/luxonis/depthai-experiments/blob/master/gen2-ocr/main.py#L241-L242.

I found out that rotated crops are slightly inaccurate, but this should be fixed by the ImageManip refactor, which will be released soon. For now, you can try increasing the width and height manually in the lines above, like this:

```python
rr.size.width = int(rotated_rect[1][0] * 1.1)
rr.size.height = int(rotated_rect[1][1] * 1.1)
```

This will increase the width and height by 10%.
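To try different factors for width and height independently, the two lines can be wrapped in a small helper. A sketch, assuming `rotated_rect` is the OpenCV-style `((cx, cy), (w, h), angle)` tuple used in gen2-ocr; the helper name is hypothetical:

```python
def enlarge_rotated_rect(rotated_rect, fx=1.1, fy=1.1):
    """Scale an OpenCV-style rotated rect ((cx, cy), (w, h), angle) about its center.

    Center and angle are unchanged; width and height are multiplied by fx and fy
    and truncated with int(), like the two lines above.
    """
    (cx, cy), (w, h), angle = rotated_rect
    return (cx, cy), (int(w * fx), int(h * fy)), angle

rect = ((512.0, 300.0), (200.0, 40.0), -5.0)
print(enlarge_rotated_rect(rect))           # ((512.0, 300.0), (220, 44), -5.0)
print(enlarge_rotated_rect(rect, fx=1.05))  # ((512.0, 300.0), (210, 44), -5.0)
```

The scaled size can then be copied into the `dai.RotatedRect`, e.g. `rr.size.width, rr.size.height = enlarge_rotated_rect(rotated_rect)[1]`.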

Best,

Matija

Dear Luxonis partners:

We have an OCR case, shared on Google Drive, with the picture below:

We want to read each of the labels SET, CAN and RES, but recognition still fails because it is affected by the + and - patterns next to them.

We have tried every method you suggested before (including enlarging rr.size.width and rr.size.height), but we still can't meet our customer's needs.

For this case, our customer has a request for 10 pcs of OAK-1, provided we can solve the issue. Maybe we could create background pictures with some color code in gen2-ocr and combine that with a modified EAST model, but we don't know how to modify it yet~~

If you have a good solution for our case, we can discuss cooperation in more detail~

All the best~~

@king, if you are limited to these three words only, you could match them with some string distance metric, or simply check whether the word is contained in the result. SET is contained in SSET, so SSET likely refers to SET; similarly, CAN is in ICAN and RES is in RESE.
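A minimal sketch of both matching strategies in plain Python: first the substring check, then a fallback to the closest keyword by Levenshtein edit distance. The keyword list, the `max_distance=1` threshold and the function names are assumptions for illustration:

```python
KEYWORDS = ("SET", "CAN", "RES")

def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions or substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]

def match_keyword(text, keywords=KEYWORDS, max_distance=1):
    """Map a raw OCR result to one of the expected keywords, or None."""
    text = text.upper()  # OCR output may be lowercase
    # 1) Substring check: "SSET" contains "SET", "ICAN" contains "CAN"
    for kw in keywords:
        if kw in text:
            return kw
    # 2) Fall back to the closest keyword if it is within max_distance edits
    best = min(keywords, key=lambda kw: levenshtein(text, kw))
    return best if levenshtein(text, best) <= max_distance else None

for raw in ("sset", "ican", "rese", "sei", "xyz"):
    print(raw, "->", match_keyword(raw))
```

Results that match nothing within the threshold come back as `None`, which can be treated as an NG read instead of being forced onto a keyword.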

If you are looking at other words as well, it's going to be hard to eliminate this + sign. You could try decreasing the factor by which you increase the width.

With the ImageManip refactor that we are working on, I think rotated rectangles should be cropped more accurately, but don't hold me to it. I'll report results once I'm able to test it. Until then, I suggest using one of the two approaches I mentioned above.

Best,

Matija

Dear Matija:

Thanks for your support with the ROI OCR upgrade for this case. We sincerely hope it will solve the problem. We would also like to know how long it will take until we can get the upgraded results.

All the best~~


Dear all:

We have another issue, with the autofocus function of the OAK-1, as shown in the picture below:

The OAK-1 is fixed at a working distance (WD) of 150 mm and should focus only on the "A123" text. We imported autofocus.py into gen2-ocr.

We treat reading A123 as the OK condition and save OK pictures. The OK pictures are sharp, but sometimes we also get blurry images with NG pictures. We tried modifying the parameters of autofocus.py, but the images are still blurry. Could you please advise how to modify the parameters of autofocus.py so that it only produces sharp images? Here is the code:

```python
import depthai as dai
import cv2

# Screen adjust for "left" "right" "up" "down"
# Step size ('W','A','S','D' controls)
STEP_SIZE = 8

# Create pipeline
pipeline = dai.Pipeline()

# Define sources and outputs
camRgb = pipeline.create(dai.node.ColorCamera)
videoEncoder = pipeline.create(dai.node.VideoEncoder)
stillEncoder = pipeline.create(dai.node.VideoEncoder)
controlIn = pipeline.create(dai.node.XLinkIn)
configIn = pipeline.create(dai.node.XLinkIn)
videoMjpegOut = pipeline.create(dai.node.XLinkOut)
stillMjpegOut = pipeline.create(dai.node.XLinkOut)
previewOut = pipeline.create(dai.node.XLinkOut)

controlIn.setStreamName('control')
configIn.setStreamName('config')
videoMjpegOut.setStreamName('video')
stillMjpegOut.setStreamName('still')
previewOut.setStreamName('preview')

# Properties
camRgb.setVideoSize(640, 360)
camRgb.setPreviewSize(300, 300)
videoEncoder.setDefaultProfilePreset(camRgb.getFps(), dai.VideoEncoderProperties.Profile.MJPEG)
stillEncoder.setDefaultProfilePreset(1, dai.VideoEncoderProperties.Profile.MJPEG)

# Linking
camRgb.video.link(videoEncoder.input)
camRgb.still.link(stillEncoder.input)
camRgb.preview.link(previewOut.input)
controlIn.out.link(camRgb.inputControl)
configIn.out.link(camRgb.inputConfig)
videoEncoder.bitstream.link(videoMjpegOut.input)
stillEncoder.bitstream.link(stillMjpegOut.input)

# Connect to device and start pipeline
with dai.Device(pipeline) as device:
    # Get data queues
    controlQueue = device.getInputQueue('control')
    configQueue = device.getInputQueue('config')
    previewQueue = device.getOutputQueue('preview')
    videoQueue = device.getOutputQueue('video')
    stillQueue = device.getOutputQueue('still')

    # Max cropX & cropY (normalized to the sensor resolution)
    maxCropX = (camRgb.getResolutionWidth() - camRgb.getVideoWidth()) / camRgb.getResolutionWidth()
    maxCropY = (camRgb.getResolutionHeight() - camRgb.getVideoHeight()) / camRgb.getResolutionHeight()

    # Default crop
    cropX = 0
    cropY = 0
    sendCamConfig = True

    while True:
        previewFrames = previewQueue.tryGetAll()
        for previewFrame in previewFrames:
            cv2.imshow('preview', previewFrame.getData().reshape(previewFrame.getHeight(), previewFrame.getWidth(), 3))

        videoFrames = videoQueue.tryGetAll()
        for videoFrame in videoFrames:
            # Decode JPEG
            frame = cv2.imdecode(videoFrame.getData(), cv2.IMREAD_UNCHANGED)
            # Display
            cv2.imshow('video', frame)

            # Send new cfg to camera
            if sendCamConfig:
                cfg = dai.ImageManipConfig()
                cfg.setCropRect(cropX, cropY, 0, 0)
                configQueue.send(cfg)
                print('Sending new crop - x: ', cropX, ' y: ', cropY)
                sendCamConfig = False

        # Update screen (1 ms polling rate)
        key = cv2.waitKey(1)
        if key == ord('q'):
            break
        elif key == ord('t'):
            print("Autofocus trigger (and disable continuous)")
            ctrl = dai.CameraControl()
            ctrl.setAutoFocusMode(dai.CameraControl.AutoFocusMode.AUTO)
            ctrl.setAutoFocusTrigger()
            controlQueue.send(ctrl)
        elif key in [ord('w'), ord('a'), ord('s'), ord('d')]:
            if key == ord('a'):
                cropX = cropX - (maxCropX / camRgb.getResolutionWidth()) * STEP_SIZE
                if cropX < 0: cropX = maxCropX
            elif key == ord('d'):
                cropX = cropX + (maxCropX / camRgb.getResolutionWidth()) * STEP_SIZE
                if cropX > maxCropX: cropX = 0
            elif key == ord('w'):
                cropY = cropY - (maxCropY / camRgb.getResolutionHeight()) * STEP_SIZE
                if cropY < 0: cropY = maxCropY
            elif key == ord('s'):
                cropY = cropY + (maxCropY / camRgb.getResolutionHeight()) * STEP_SIZE
                if cropY > maxCropY: cropY = 0
            sendCamConfig = True
```

All the best~~

Hello king,

If the object is always 150 mm away from the camera, I would suggest manually specifying the lens position instead of having autofocus enabled. You can achieve that like this:

```python
camRgb = pipeline.create(dai.node.ColorCamera)
# Fixed lens position instead of autofocus
camRgb.initialControl.setManualFocus(90)
```

You can change the value anywhere between 0 and 255. To find the best value, you can go through all focus values with this example (pressing , and . changes the lens position).
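Rather than judging the sweep by eye, one option (not from the thread) is to score a frame at each lens position with a simple sharpness metric and keep the best position. A NumPy sketch using the variance of a 4-neighbour Laplacian, a common focus measure; the function names are hypothetical, and `gray` is assumed to be a 2-D grayscale crop around the "A123" text:

```python
import numpy as np

def sharpness(gray):
    """Focus measure: variance of a 4-neighbour Laplacian (higher = sharper)."""
    g = np.asarray(gray, dtype=np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())

def best_lens_position(scores):
    """scores: dict {lens_position (0-255): sharpness}. Returns the sharpest position."""
    return max(scores, key=scores.get)

# Synthetic check: a fine checkerboard is "sharp", a flat gray patch is not
checker = (np.indices((32, 32)).sum(axis=0) % 2) * 255
flat = np.full((32, 32), 128)
print(sharpness(checker) > sharpness(flat))  # True
```

On the device, each position would be set by sending a `dai.CameraControl()` with `setManualFocus(pos)` through the control queue; it is probably wise to skip a few frames after each change so the lens settles before scoring. The winning position can then be fixed with `initialControl.setManualFocus()` as shown above.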

Thanks, Erik

Dear Erik:

Thanks for your feedback on the autofocus function~ We are trying this parameter to check whether it solves the issue.

All the best~~

Hello dear,

I am new to Luxonis and DepthAI; my first project is based on an OAK-D PoE camera and OCR.

I am currently playing with the experimental gen2-ocr example, which works like a charm.

But I am struggling with ROI management.

Currently, the code uses a 1024x1024 video frame and a 256x256 preview on a 12 MP camera.

I am trying to add a crop that targets a specific 1024x1024 zone.

The camera is in a work area where I don't want to capture people (they don't want to be seen… lol).

I tried many ImageManip tests without getting a working result, and I am just starting out in this domain, so it is not easy.

Is there an easy way to modify the code to use 2048x1024?

And to add an ROI?

I already ran into some limitations: 2048x2048x3 doesn't work, but 2048x1024x3 should.

After that, remapping the detections back is the painful part, because the scale factor is no longer x4 on both axes…
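The remapping can be done with a separate scale per axis plus the crop offset. A sketch, assuming detections are points in the 256x256 preview that was resized from a crop at `crop_origin` of size `crop_size` in the full frame (the function name is hypothetical):

```python
def preview_to_frame(points, crop_origin, crop_size, preview_size=(256, 256)):
    """Map (x, y) points from NN-preview pixels to full-frame pixels.

    crop_origin: (x0, y0) of the cropped region in the full frame.
    crop_size:   (w, h) of the cropped region that was resized to preview_size.
    The scale is computed per axis, so a 2048x1024 crop gives x8 horizontally
    and x4 vertically.
    """
    sx = crop_size[0] / preview_size[0]
    sy = crop_size[1] / preview_size[1]
    x0, y0 = crop_origin
    return [(x0 + x * sx, y0 + y * sy) for x, y in points]

# Bottom-centered 2048x1024 crop of a 4056x3040 frame starts at (1004, 2016)
print(preview_to_frame([(0, 0), (128, 128), (256, 256)], (1004, 2016), (2048, 1024)))
# [(1004.0, 2016.0), (2028.0, 2528.0), (3052.0, 3040.0)]
```

With unequal x/y scales, a rotated rectangle's width and height can't simply be multiplied by one factor, so it is safer to map its four corners (e.g. from `cv2.boxPoints`) and refit a rotated rect with `cv2.minAreaRect` on the mapped points.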

If someone has made improvements to it, please don't hesitate to share.

Thank you

erik

Hello Erik, yes, of course, I'll prepare that.

To give more context before the code: the project is outdoors, near a train-washing station.

We want to read the train number when a train arrives for a wash.

But the camera is parallel to the rails, so I only need the bottom of the image, though as wide as possible to be sure that I can read the number.

Unfortunately I can't post pictures from the camera here, but you can imagine the subject roughly like this.

I'm preparing some code explanations to go with it.

Thank you

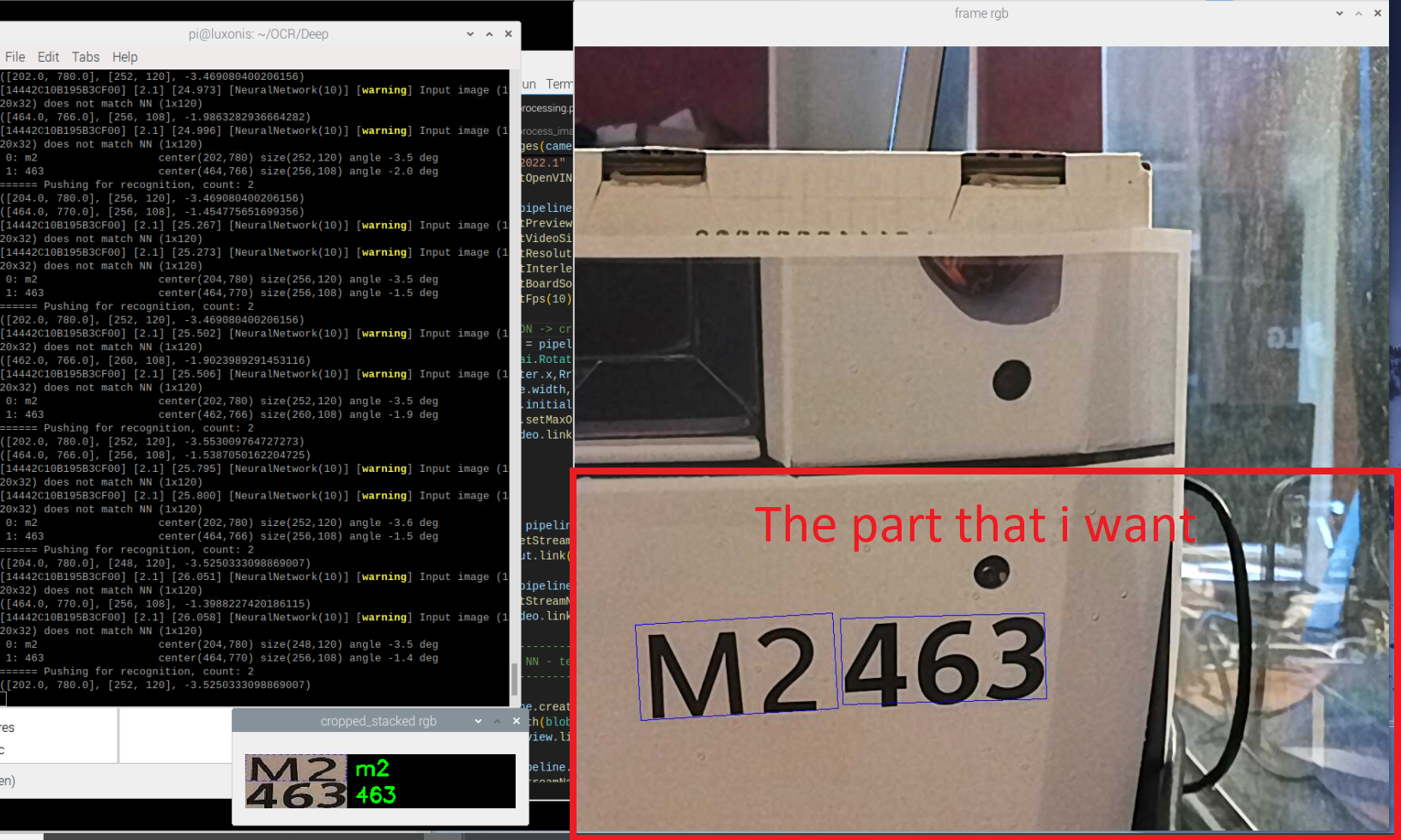

This is a screenshot from my OAK-D CM4 PoE camera.

I want to crop the original image from the camera to keep only the bottom of the image.

This is from the original code:

```python
import blobconverter
import depthai as dai

pipeline = dai.Pipeline()
#version = "2021.2"
#pipeline.setOpenVINOVersion(version=dai.OpenVINO.Version.VERSION_2021_2)
version = "2022.1"
pipeline.setOpenVINOVersion(version=dai.OpenVINO.Version.VERSION_2022_1)

colorCam = pipeline.create(dai.node.ColorCamera)
colorCam.setPreviewSize(256, 256)
colorCam.setVideoSize(1024, 1024) # 4 times larger in both axes
colorCam.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)
colorCam.setInterleaved(False)
colorCam.setBoardSocket(dai.CameraBoardSocket.RGB)
colorCam.setFps(10)

controlIn = pipeline.create(dai.node.XLinkIn)
controlIn.setStreamName('control')
controlIn.out.link(colorCam.inputControl)

cam_xout = pipeline.create(dai.node.XLinkOut)
cam_xout.setStreamName('video')
colorCam.video.link(cam_xout.input)

# ---------------------------------------
# 1st stage NN - text-detection
# ---------------------------------------
nn = pipeline.create(dai.node.NeuralNetwork)
nn.setBlobPath(blobconverter.from_zoo(name="east_text_detection_256x256",zoo_type="depthai",shaves=6, version=version))
colorCam.preview.link(nn.input)

nn_xout = pipeline.create(dai.node.XLinkOut)
nn_xout.setStreamName('detections')
nn.out.link(nn_xout.input)

# ---------------------------------------
# 2nd stage NN - text-recognition-0012
# ---------------------------------------
manip = pipeline.create(dai.node.ImageManip)
manip.setWaitForConfigInput(True)

manip_img = pipeline.create(dai.node.XLinkIn)
manip_img.setStreamName('manip_img')
manip_img.out.link(manip.inputImage)

manip_cfg = pipeline.create(dai.node.XLinkIn)
manip_cfg.setStreamName('manip_cfg')
manip_cfg.out.link(manip.inputConfig)

manip_xout = pipeline.create(dai.node.XLinkOut)
manip_xout.setStreamName('manip_out')

nn2 = pipeline.create(dai.node.NeuralNetwork)
nn2.setBlobPath(blobconverter.from_zoo(name="text-recognition-0012", shaves=6, version=version))
nn2.setNumInferenceThreads(2)
manip.out.link(nn2.input)
manip.out.link(manip_xout.input)

nn2_xout = pipeline.create(dai.node.XLinkOut)
nn2_xout.setStreamName("recognitions")
nn2.out.link(nn2_xout.input)
```

So I am trying to adapt it with two ImageManip nodes to use a different ROI:

```python
import blobconverter
import depthai as dai

pipeline = dai.Pipeline()
#version = "2021.2"
#pipeline.setOpenVINOVersion(version=dai.OpenVINO.Version.VERSION_2021_2)
version = "2022.1"
pipeline.setOpenVINOVersion(version=dai.OpenVINO.Version.VERSION_2022_1)

colorCam = pipeline.create(dai.node.ColorCamera)
colorCam.setPreviewSize(256, 256)
colorCam.setVideoSize(2048, 2048) # 8 times the 256x256 preview in both axes
colorCam.setResolution(dai.ColorCameraProperties.SensorResolution.THE_12_MP)
colorCam.setInterleaved(False)
colorCam.setBoardSocket(dai.CameraBoardSocket.RGB)
colorCam.setFps(10)

#MODIFICATION -> creation of the main video Imagemanip
manip_video = pipeline.create(dai.node.ImageManip)
RrVideo = dai.RotatedRect()
# Pixel coordinates (normalizedCoords=False) in the 2048x2048 video frame.
# NOTE: with center x=0, the left half of this 1024x1024 rect falls outside
# the frame, and center y=1024 is the vertical middle, not the bottom.
RrVideo.center.x,RrVideo.center.y = 0,1024
RrVideo.size.width,RrVideo.size.height = 1024,1024
manip_video.initialConfig.setCropRotatedRect(RrVideo,False)
manip_video.setMaxOutputFrameSize(1024*1024*3)
colorCam.video.link(manip_video.inputImage)

#MODIFICATION -> creation of the preview video Imagemanip
manip_preview= pipeline.create(dai.node.ImageManip)
manip_preview.setResize(256,256)
# The detection NN expects planar BGR; otherwise the output likely stays NV12 like the video stream
manip_preview.initialConfig.setFrameType(dai.ImgFrame.Type.BGR888p)
manip_preview.setMaxOutputFrameSize(256*256*3)
manip_video.out.link(manip_preview.inputImage)

controlIn = pipeline.create(dai.node.XLinkIn)
controlIn.setStreamName('control')
controlIn.out.link(colorCam.inputControl)

cam_xout = pipeline.create(dai.node.XLinkOut)
cam_xout.setStreamName('video')
#MODIFICATION -> link to manip_video output
#colorCam.video.link(cam_xout.input)
manip_video.out.link(cam_xout.input)

# ---------------------------------------
# 1st stage NN - text-detection
# ---------------------------------------
nn = pipeline.create(dai.node.NeuralNetwork)
nn.setBlobPath(blobconverter.from_zoo(name="east_text_detection_256x256",zoo_type="depthai",shaves=6, version=version))
#MODIFICATION -> link the preview to text detection
#colorCam.preview.link(nn.input)
manip_preview.out.link(nn.input)

nn_xout = pipeline.create(dai.node.XLinkOut)
nn_xout.setStreamName('detections')
nn.out.link(nn_xout.input)

# ---------------------------------------
# 2nd stage NN - text-recognition-0012
# ---------------------------------------
manip = pipeline.create(dai.node.ImageManip)
manip.setWaitForConfigInput(True)

manip_img = pipeline.create(dai.node.XLinkIn)
manip_img.setStreamName('manip_img')
manip_img.out.link(manip.inputImage)

manip_cfg = pipeline.create(dai.node.XLinkIn)
manip_cfg.setStreamName('manip_cfg')
manip_cfg.out.link(manip.inputConfig)

manip_xout = pipeline.create(dai.node.XLinkOut)
manip_xout.setStreamName('manip_out')

nn2 = pipeline.create(dai.node.NeuralNetwork)
nn2.setBlobPath(blobconverter.from_zoo(name="text-recognition-0012", shaves=6, version=version))
nn2.setNumInferenceThreads(2)
manip.out.link(nn2.input)
manip.out.link(manip_xout.input)

nn2_xout = pipeline.create(dai.node.XLinkOut)
nn2_xout.setStreamName("recognitions")
nn2.out.link(nn2_xout.input)
```

But as I said, I am starting from scratch with DepthAI, so I may have missed some fundamental parts…

Regards

Actually, the area labeled "the part that I want" in the image doesn't reflect my code, and doesn't reflect exactly what I want either.

In 12 MP mode, I would like to keep a 2048x1024 region at the bottom. That is exactly what I want to do, but first I need some light, lol.
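For a 2048x1024 region at the bottom of the 12 MP (4056x3040) frame, the normalized rectangle for `ImageManipConfig.setCropRect()` and the buffer size for `setMaxOutputFrameSize()` can be computed as below. A sketch: it assumes the crop is horizontally centered (an arbitrary choice) and that ImageManip receives the full-resolution frame; the function names and the frame-type table are hypothetical helpers, not DepthAI API:

```python
# Bytes per pixel for a few frame types (NV12 is 4:2:0, i.e. 1.5 bytes/pixel)
BYTES_PER_PIXEL = {"BGR888p": 3, "NV12": 1.5, "GRAY8": 1}

def bottom_center_crop(crop_w, crop_h, frame_w=4056, frame_h=3040):
    """Normalized (xmin, ymin, xmax, ymax) of a crop_w x crop_h region that is
    horizontally centered and touches the bottom edge of the frame."""
    x0 = (frame_w - crop_w) / 2
    y0 = frame_h - crop_h
    return (x0 / frame_w, y0 / frame_h, (x0 + crop_w) / frame_w, 1.0)

def max_frame_size(w, h, frame_type="BGR888p"):
    """Bytes needed for one w x h frame of the given type."""
    return int(w * h * BYTES_PER_PIXEL[frame_type])

print(bottom_center_crop(2048, 1024))    # crop spans x 1004..3052, y 2016..3040
print(max_frame_size(2048, 1024))        # 6291456
print(max_frame_size(2048, 1024, "NV12"))  # 3145728
```

The same origin (1004, 2016) and size (2048, 1024) are what a detection-remapping step would need to translate preview coordinates back into the full frame.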


Hi @DavidP

You can use ImageManip to crop the bottom of the frame:

```python
#!/usr/bin/env python3
import cv2
import depthai as dai

# Create pipeline
pipeline = dai.Pipeline()

camRgb = pipeline.create(dai.node.ColorCamera)
camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_12_MP) # 4056x3040

# Size of the cropped output: full width, bottom half of the frame
width = 4056
height = 1520

crop_manip = pipeline.create(dai.node.ImageManip)
# Normalized (xmin, ymin, xmax, ymax): keep the bottom half of the frame
crop_manip.initialConfig.setCropRect(0, 0.5, 1, 1)
# NV12 uses 1.5 bytes per pixel
crop_manip.setMaxOutputFrameSize(int(width * height * 1.5))
crop_manip.setFrameType(dai.RawImgFrame.Type.NV12)
camRgb.isp.link(crop_manip.inputImage)

xout2 = pipeline.create(dai.node.XLinkOut)
xout2.setStreamName('crop')
crop_manip.out.link(xout2.input)

with dai.Device(pipeline) as device:
    # Output queue will be used to get the cropped frames from the output defined above
    q1 = device.getOutputQueue(name="crop", maxSize=4, blocking=False)

    while True:
        if q1.has():
            cv2.imshow("Bottom Tile", q1.get().getCvFrame())
        if cv2.waitKey(1) == ord('q'):
            break
```