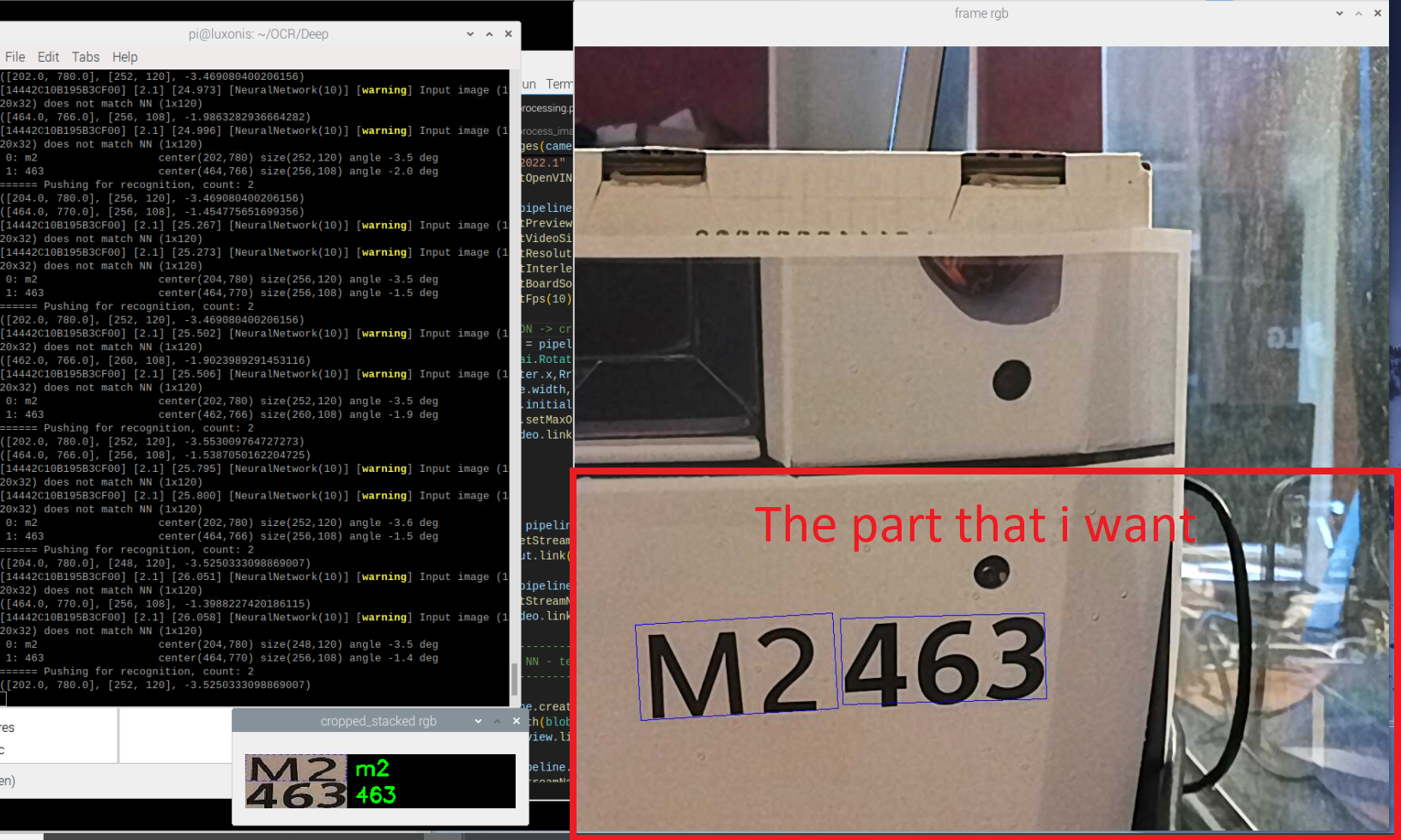

This is a screenshot from my OAK-D CM4 PoE camera.

I want to crop the original image from the camera so that only the bottom part of it is kept.
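A rotated-rect crop (what `dai.RotatedRect` with `setCropRotatedRect` uses in the modified code further down) is positioned by its center and size, not by its top-left corner. So "keep the bottom" means the center must sit half a crop-height above the bottom edge. The pure-Python sketch below computes that center and size. It assumes an unrotated rect and a frame measured in pixels. `CropRect`, `bottom_crop` and `to_normalized` are hypothetical helper names, not part of DepthAI.

```python
from dataclasses import dataclass


@dataclass
class CropRect:
    """An unrotated crop described like a RotatedRect: center point plus size."""
    center_x: float
    center_y: float
    width: float
    height: float


def bottom_crop(frame_w: int, frame_h: int, crop_w: int, crop_h: int) -> CropRect:
    """Crop of crop_w x crop_h pixels, centred horizontally and touching the bottom edge."""
    if crop_w > frame_w or crop_h > frame_h:
        raise ValueError("crop must fit inside the frame")
    # The rect is positioned by its center, not its top-left corner,
    # so the center sits half a crop-height above the bottom edge.
    return CropRect(
        center_x=frame_w / 2,
        center_y=frame_h - crop_h / 2,
        width=float(crop_w),
        height=float(crop_h),
    )


def to_normalized(rect: CropRect, frame_w: int, frame_h: int) -> CropRect:
    """Same rect expressed as fractions of the frame size (0..1)."""
    return CropRect(
        rect.center_x / frame_w,
        rect.center_y / frame_h,
        rect.width / frame_w,
        rect.height / frame_h,
    )


if __name__ == "__main__":
    px = bottom_crop(2048, 2048, 1024, 1024)
    print(px)  # CropRect(center_x=1024.0, center_y=1536.0, width=1024.0, height=1024.0)
    print(to_normalized(px, 2048, 2048))  # CropRect(center_x=0.5, center_y=0.75, width=0.5, height=0.5)
```

The pixel values would go into `RotatedRect.center` / `RotatedRect.size` with `normalizedCoords=False`. The normalized form would be used with `normalizedCoords=True`.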

This is the original code:

```python
import blobconverter
import depthai as dai

pipeline = dai.Pipeline()

#version = "2021.2"
#pipeline.setOpenVINOVersion(version=dai.OpenVINO.Version.VERSION_2021_2)
version = "2022.1"
pipeline.setOpenVINOVersion(version=dai.OpenVINO.Version.VERSION_2022_1)

colorCam = pipeline.create(dai.node.ColorCamera)
colorCam.setPreviewSize(256, 256)
colorCam.setVideoSize(1024, 1024) # 4 times larger in both axes
colorCam.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)
colorCam.setInterleaved(False)
colorCam.setBoardSocket(dai.CameraBoardSocket.RGB)
colorCam.setFps(10)

controlIn = pipeline.create(dai.node.XLinkIn)
controlIn.setStreamName('control')
controlIn.out.link(colorCam.inputControl)

cam_xout = pipeline.create(dai.node.XLinkOut)
cam_xout.setStreamName('video')
colorCam.video.link(cam_xout.input)

# ---------------------------------------
# 1st stage NN - text-detection
# ---------------------------------------

nn = pipeline.create(dai.node.NeuralNetwork)
nn.setBlobPath(blobconverter.from_zoo(name="east_text_detection_256x256",zoo_type="depthai",shaves=6, version=version))
colorCam.preview.link(nn.input)

nn_xout = pipeline.create(dai.node.XLinkOut)
nn_xout.setStreamName('detections')
nn.out.link(nn_xout.input)

# ---------------------------------------
# 2nd stage NN - text-recognition-0012
# ---------------------------------------

manip = pipeline.create(dai.node.ImageManip)
manip.setWaitForConfigInput(True)

manip_img = pipeline.create(dai.node.XLinkIn)
manip_img.setStreamName('manip_img')
manip_img.out.link(manip.inputImage)

manip_cfg = pipeline.create(dai.node.XLinkIn)
manip_cfg.setStreamName('manip_cfg')
manip_cfg.out.link(manip.inputConfig)

manip_xout = pipeline.create(dai.node.XLinkOut)
manip_xout.setStreamName('manip_out')

nn2 = pipeline.create(dai.node.NeuralNetwork)
nn2.setBlobPath(blobconverter.from_zoo(name="text-recognition-0012", shaves=6, version=version))
nn2.setNumInferenceThreads(2)
manip.out.link(nn2.input)
manip.out.link(manip_xout.input)

nn2_xout = pipeline.create(dai.node.XLinkOut)
nn2_xout.setStreamName("recognitions")
nn2.out.link(nn2_xout.input)
```

So I am trying to adapt it with two ImageManip nodes, so that the networks are linked to a different ROI:

```python
import blobconverter
import depthai as dai

pipeline = dai.Pipeline()

#version = "2021.2"
#pipeline.setOpenVINOVersion(version=dai.OpenVINO.Version.VERSION_2021_2)
version = "2022.1"
pipeline.setOpenVINOVersion(version=dai.OpenVINO.Version.VERSION_2022_1)

colorCam = pipeline.create(dai.node.ColorCamera)
colorCam.setPreviewSize(256, 256)
colorCam.setVideoSize(2048, 2048) # 8x the 256x256 preview; the 1024x1024 crop below is 4x
colorCam.setResolution(dai.ColorCameraProperties.SensorResolution.THE_12_MP)
colorCam.setInterleaved(False)
colorCam.setBoardSocket(dai.CameraBoardSocket.RGB)
colorCam.setFps(10)

#MODIFICATION -> creation of the main video ImageManip
manip_video = pipeline.create(dai.node.ImageManip)
RrVideo = dai.RotatedRect()
RrVideo.center.x, RrVideo.center.y = 0, 1024  # center of the crop rect, in pixels
RrVideo.size.width, RrVideo.size.height = 1024, 1024
manip_video.initialConfig.setCropRotatedRect(RrVideo, False)  # False = pixel (not normalized) coordinates
manip_video.setMaxOutputFrameSize(1024*1024*3)
colorCam.video.link(manip_video.inputImage)

#MODIFICATION -> creation of the preview video ImageManip
manip_preview = pipeline.create(dai.node.ImageManip)
manip_preview.setResize(256, 256)
manip_preview.setMaxOutputFrameSize(256*256*3)
manip_video.out.link(manip_preview.inputImage)

controlIn = pipeline.create(dai.node.XLinkIn)
controlIn.setStreamName('control')
controlIn.out.link(colorCam.inputControl)

cam_xout = pipeline.create(dai.node.XLinkOut)
cam_xout.setStreamName('video')

#MODIFICATION -> link to manip_video output
#colorCam.video.link(cam_xout.input)
manip_video.out.link(cam_xout.input)

# ---------------------------------------
# 1st stage NN - text-detection
# ---------------------------------------

nn = pipeline.create(dai.node.NeuralNetwork)
nn.setBlobPath(blobconverter.from_zoo(name="east_text_detection_256x256",zoo_type="depthai",shaves=6, version=version))

#MODIFICATION -> link the preview to text detection
#colorCam.preview.link(nn.input)
manip_preview.out.link(nn.input)

nn_xout = pipeline.create(dai.node.XLinkOut)
nn_xout.setStreamName('detections')
nn.out.link(nn_xout.input)

# ---------------------------------------
# 2nd stage NN - text-recognition-0012
# ---------------------------------------

manip = pipeline.create(dai.node.ImageManip)
manip.setWaitForConfigInput(True)

manip_img = pipeline.create(dai.node.XLinkIn)
manip_img.setStreamName('manip_img')
manip_img.out.link(manip.inputImage)

manip_cfg = pipeline.create(dai.node.XLinkIn)
manip_cfg.setStreamName('manip_cfg')
manip_cfg.out.link(manip.inputConfig)

manip_xout = pipeline.create(dai.node.XLinkOut)
manip_xout.setStreamName('manip_out')

nn2 = pipeline.create(dai.node.NeuralNetwork)
nn2.setBlobPath(blobconverter.from_zoo(name="text-recognition-0012", shaves=6, version=version))
nn2.setNumInferenceThreads(2)
manip.out.link(nn2.input)
manip.out.link(manip_xout.input)

nn2_xout = pipeline.create(dai.node.XLinkOut)
nn2_xout.setStreamName("recognitions")
nn2.out.link(nn2_xout.input)
```
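A few sanity checks on the two ImageManip nodes, based on my reading of the DepthAI docs (to be verified):

- **Crop position.** `RrVideo.center` is the center of the crop. With `center = (0, 1024)` and a 1024×1024 size, the rect would span x = -512..512. It would lie half outside the 2048×2048 frame and would not be at the bottom.
- **Frame type.** `colorCam.video` outputs NV12 frames, while the text-detection network most likely expects planar BGR. `manip_preview` may therefore need something like `manip_preview.initialConfig.setFrameType(dai.ImgFrame.Type.BGR888p)`.
- **Output size.** `setMaxOutputFrameSize` must be large enough for whatever output type is produced.

The plain-Python sketch below (no depthai needed) checks the rect bounds and frame sizes. It also maps a point in the 256×256 preview back to full-frame pixels through the crop, assuming the preview is a plain resize of the crop. All helper names and the frame-type labels in `BYTES_PER_PIXEL` are hypothetical.

```python
# Plain-Python checks for the ImageManip settings (no depthai required).
# All helper names here are hypothetical, not part of the DepthAI API.

BYTES_PER_PIXEL = {"NV12": 1.5, "BGR888p": 3, "BGR888i": 3}


def crop_bounds(center_x, center_y, width, height):
    """(x0, y0, x1, y1) of an unrotated rect given by its center and size."""
    return (center_x - width / 2, center_y - height / 2,
            center_x + width / 2, center_y + height / 2)


def inside_frame(bounds, frame_w, frame_h):
    """True if the rect lies completely inside a frame_w x frame_h image."""
    x0, y0, x1, y1 = bounds
    return x0 >= 0 and y0 >= 0 and x1 <= frame_w and y1 <= frame_h


def frame_bytes(width, height, frame_type):
    """Size in bytes of one frame of the given type (NV12 = 1.5 bytes/pixel)."""
    return int(width * height * BYTES_PER_PIXEL[frame_type])


def preview_to_full(x, y, preview_size, bounds):
    """Map a point from the resized preview back to full-frame pixels,
    assuming the preview is a plain resize of the crop."""
    x0, y0, x1, y1 = bounds
    scale_x = (x1 - x0) / preview_size[0]
    scale_y = (y1 - y0) / preview_size[1]
    return (x0 + x * scale_x, y0 + y * scale_y)


if __name__ == "__main__":
    current = crop_bounds(0, 1024, 1024, 1024)    # values from the modified code
    bottom = crop_bounds(1024, 1536, 1024, 1024)  # centred, touching the bottom
    print(current, inside_frame(current, 2048, 2048))  # (-512.0, 512.0, 512.0, 1536.0) False
    print(bottom, inside_frame(bottom, 2048, 2048))    # (512.0, 1024.0, 1536.0, 2048.0) True
    print(frame_bytes(1024, 1024, "NV12"), frame_bytes(256, 256, "BGR888p"))  # 1572864 196608
    print(preview_to_full(128, 128, (256, 256), bottom))  # (1024.0, 1536.0)
```

Since the `video` stream now carries the 1024×1024 crop rather than the full frame, the existing 4× scaling from preview to video coordinates should still hold relative to that crop. The crop offset only matters when coordinates in the full 2048×2048 frame are needed.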

But as I said, I am just starting out with DepthAI, so I may have missed some fundamental parts…

Regards