Hi,

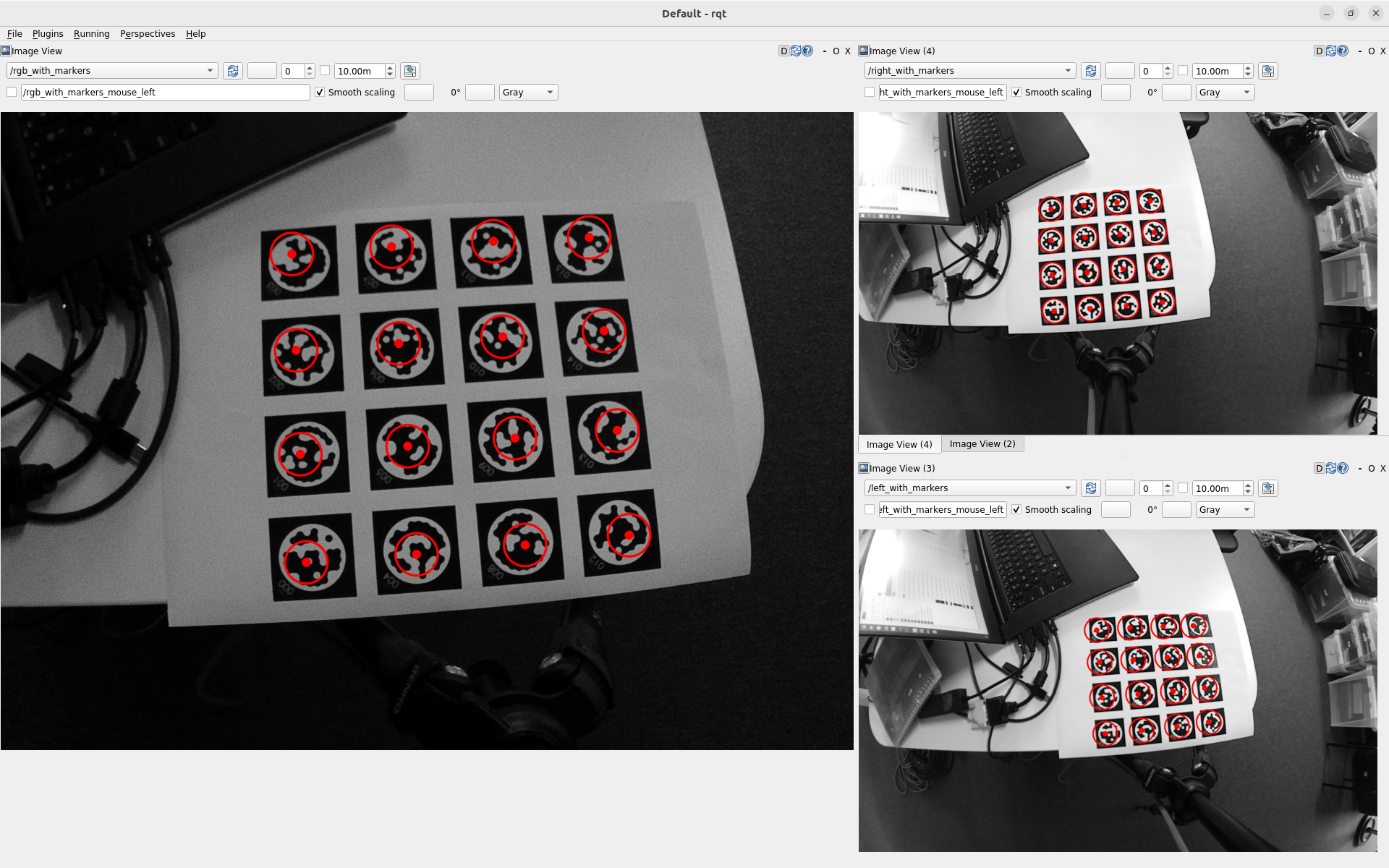

we're running an OAK-D Pro Wide PoE (P/N: A00574, D/C: 5023) and are unsure whether we're applying the factory calibration (published as a CameraInfo ROS message) correctly. We created a small test setup consisting of the camera looking on a 4-by-4 STag marker grid, run marker detection and reprojection, and the expected result is that the red circle's centers coincide with marker origins, however, as you can see, that is not the case, except of the right eye's image:

The setup is as follows:

- A

stag_ros node detects markers in the right image and publishes their poses.

- A custom ROS node subscribes to all images and the marker poses, and projects the 3D poses onto the 2D images. It takes into account the baseline published on TF (copied manually into the script) and the

R, K and D matrices.

For the right image, the marker observations align perfectly, indicating that the applied projection is exactly the inverse of what stag_ros does. (Actually, stag_ros doesn't take into account the camera's R matrix, so upon receipt of the marker poses, we rotate all points by the transpose of the right camera's R matrix.)

Here's the current projection code:

void projectMarkersToImageCoordinates(

const std::vector<stag_ros::STagMarker> markers,

const sensor_msgs::CameraInfo & camera_info,

const cv::Point3d & camera_position,

std::vector<cv::Point2d> & uvs) {

// Ensure output is empty

uvs.clear();

// Nothing to project => exit

if (markers.empty()) return;

// Prepare input

std::vector<cv::Point3d> object_points;

for (const stag_ros::STagMarker& m : markers) {

object_points.push_back(cv::Point3d(

m.pose.position.x - camera_position.x,

m.pose.position.y - camera_position.y,

m.pose.position.z - camera_position.z));

}

cv::Mat r(3, 3, CV_64F);

for (int i = 0; i < 9; ++i) r.at<double>(i) = camera_info.R[i];

cv::Mat rvec;

cv::Rodrigues(r, rvec);

cv::Mat tvec = cv::Mat::zeros(cv::Size(3, 1), CV_64F);

cv::Mat k(3, 3, CV_64F);

for (int i = 0; i < 9; ++i) k.at<double>(i) = camera_info.K[i];

// Assume distortion model follows OpenCV format

cv::Mat d(camera_info.D.size(), 1, CV_64F);

for (int i = 0; i < camera_info.D.size(); ++i) d.at<double>(i) = camera_info.D[i];

// The actual 3D => 2D projection

cv::projectPoints(object_points, rvec, tvec, k, d, uvs);

}

I attempted to add the full source file, however, the forum responded that the file type is not supported.

The above code is classic OpenCV, and it corresponds to what the existing stag_ros node does, so I guess it's close to correct. But perhaps we're still missing something.

Could you please share your thoughts on whether we're interpreting and using the calibration correctly? If anything is unclear, please let know.