
Back when I was tasked with building interactive voice response (IVR) systems with speech recognition, it was often said that humans understand only about 50% of words when they do not know the context.

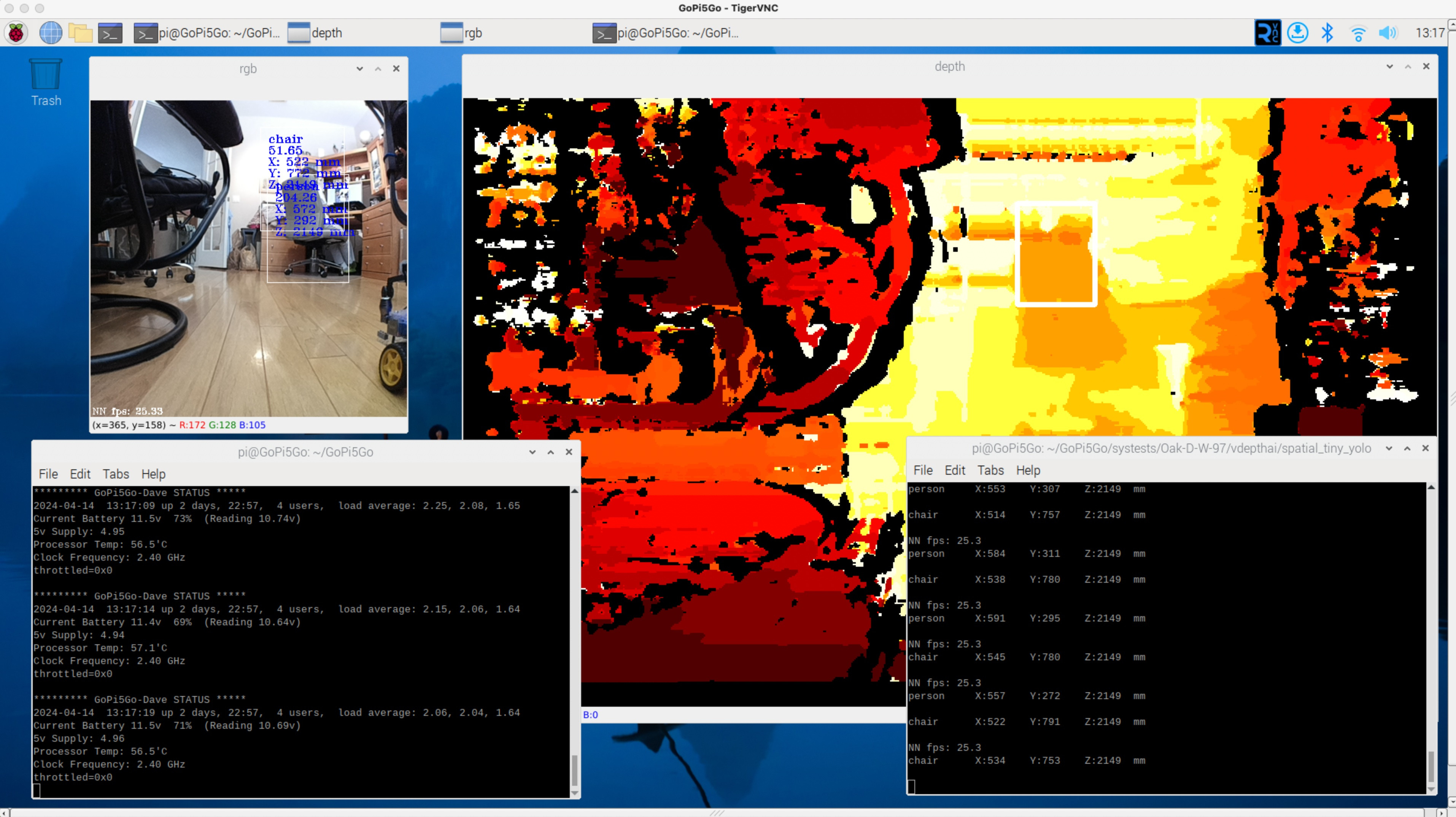

In dreaming of my Raspberry Pi 5-powered autonomous robot Dave understanding what he sees, I have been planning to (eventually) use transfer learning to enhance visual neural nets with objects he has "discovered". However, the name "Grok Vision" in the announcement by Elon Musk's xAI reminded me that integrating context into the neural net would likely improve object recognition significantly.
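To make the intuition concrete, here is a minimal sketch of one generic way context could be combined with a detector's output: Bayesian rescoring, assuming the image evidence and the scene context are conditionally independent given the object class. Then p(class | image, context) ∝ p(class | image) · p(class | context) / p(class). The function name `rescore_with_context` and all the probabilities below are hypothetical, chosen for illustration; this is not how Grok Vision, YOLOv4, or any Oak pipeline actually works.

```python
def rescore_with_context(detector_probs, context_prior, class_prior):
    """Combine detector class probabilities with a scene-context prior.

    Assumes (hypothetically) that:
      detector_probs ~ p(class | image), e.g. a detector's softmax scores
      context_prior  ~ p(class | scene context), e.g. "we are in a kitchen"
      class_prior    ~ p(class) over the detector's training data
    Under conditional independence of image and context given the class,
    the combined score is p(c | image) * p(c | context) / p(c), renormalized.
    """
    scores = {
        c: detector_probs[c] * context_prior[c] / class_prior[c]
        for c in detector_probs
    }
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}


if __name__ == "__main__":
    # Hypothetical numbers: the detector alone slightly prefers "vase".
    detector = {"cup": 0.40, "vase": 0.45, "bowl": 0.15}
    # Hypothetical kitchen context: cups are far more likely than vases.
    kitchen = {"cup": 0.6, "vase": 0.1, "bowl": 0.3}
    uniform = {c: 1 / 3 for c in detector}

    rescored = rescore_with_context(detector, kitchen, uniform)
    print(max(detector, key=detector.get))  # detector-only choice
    print(max(rescored, key=rescored.get))  # context-aware choice
```

With these made-up numbers the detector alone picks "vase", while the kitchen context flips the top choice to "cup". A real system would need to learn the context prior, for example from co-occurring objects or the robot's location on a map.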

Since the YOLOv4 object recognition program running on the Oak camera uses only 8% of the RPi5's CPU, and the Oak-D-W-97 camera can run the recognition at 30 FPS (without image transfers), there should be spare capacity for the next generation of vision recognition algorithms.

I believe RTAB-Map uses a basic form of context, derived from image features and pose, to improve visual localization.

Is there a context-aware object recognition blob I can run on my Oak camera?