Hello everybody!

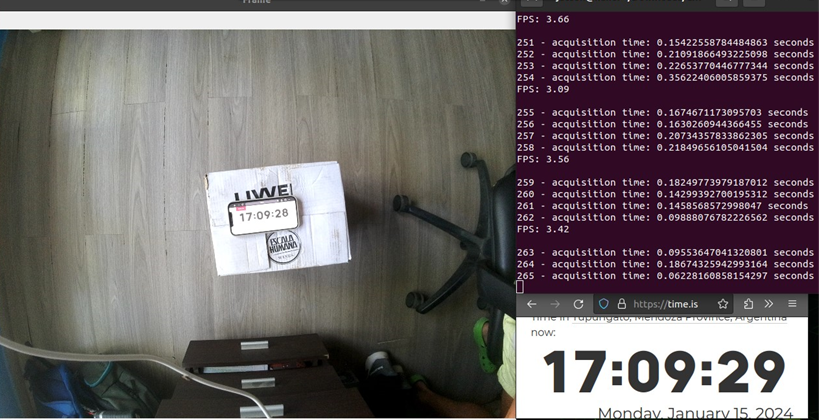

I am using two IMX378 cameras connected to an OAK-FFC 4P module. I need to reduce the latency between image capture and on-screen display, which is currently more than one second. The video is also very choppy, because it does not run at the FPS I set. The image above shows the delay and the measured FPS.

I don't know whether this is a hardware limitation, and therefore a delay I have to live with, or whether there is a way or technique to improve this code.
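To tell a hardware limit apart from a host-side problem, it helps to measure the capture-to-host latency directly instead of reading it off a screenshot. Below is a minimal plain-Python sketch. It assumes, following the DepthAI latency-measurement docs, that `ImgFrame.getTimestamp()` (host-synced capture time) and `dai.Clock.now()` (current host time) both return `datetime.timedelta` values on the same clock. `latency_ms` is a hypothetical helper name, and the timestamps in the example are made up.

```python
from datetime import timedelta


def latency_ms(capture_ts: timedelta, now_ts: timedelta) -> float:
    """Return capture-to-host latency in milliseconds.

    Hypothetical helper. Both arguments must be timedeltas on the same clock;
    with DepthAI they would typically be frame.getTimestamp() and dai.Clock.now().
    """
    return (now_ts - capture_ts).total_seconds() * 1000.0


# Made-up example: frame captured at t = 10.000 s, received at t = 11.250 s
print(latency_ms(timedelta(seconds=10.0), timedelta(seconds=11.25)))  # 1250.0
```

Calling this right after `queue.get()` (before `getCvFrame()` and `imshow`) isolates the device and XLink part of the delay from the host-side conversion and display.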

If I don't use `cam1.setIspScale(1,2)`, the delay is about 4 seconds.
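Since the delay changes so much with `setIspScale(1,2)`, a rough estimate of how much raw data each configuration sends to the host may help. This is a back-of-the-envelope sketch, not a measurement. It assumes `THE_12_MP` gives 4056×3040 on the IMX378, that the `isp` output is YUV420 at 1.5 bytes per pixel, and that both `isp` streams go over XLink (the pipeline links both, even though the loop only reads `cam1`). `stream_mb_per_s` is a hypothetical helper.

```python
# Rough raw-bandwidth estimate for the ISP streams sent over XLink.
# Assumptions (not measured): 4056x3040 sensor output, YUV420 at 1.5 B/pixel.
SENSOR_W, SENSOR_H = 4056, 3040
BYTES_PER_PIXEL = 1.5  # full-res Y plane + quarter-res U and V planes


def stream_mb_per_s(scale_num: int, scale_den: int, fps: float, cameras: int) -> float:
    """Hypothetical helper: raw bandwidth in MB/s (1 MB = 1e6 bytes)."""
    w = SENSOR_W * scale_num // scale_den  # ISP output width after scaling
    h = SENSOR_H * scale_num // scale_den  # ISP output height after scaling
    frame_bytes = w * h * BYTES_PER_PIXEL
    return frame_bytes * fps * cameras / 1e6


for num, den in [(1, 1), (1, 2)]:
    rate = stream_mb_per_s(num, den, fps=10, cameras=2)
    print(f"ispScale({num},{den}): {rate:.1f} MB/s")
# ispScale(1,1): 369.9 MB/s
# ispScale(1,2): 92.5 MB/s
```

For comparison, USB 2.0 signals at 480 Mbit/s (60 MB/s before protocol overhead) and USB 3.x Gen 1 at 5 Gbit/s. If the estimate is close to what the actual link sustains, frames would be expected to back up or be dropped somewhere between the sensor and the screen.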

```python
import time

import cv2
import depthai as dai


def getFrame(queue):
    # Block until a frame arrives, then convert it to an OpenCV (numpy) image
    frame = queue.get()
    return frame.getCvFrame()


if __name__ == '__main__':
    fps = 10
    frame_count = 0

    # Define a pipeline
    pipeline = dai.Pipeline()

    # DEFINE SOURCES AND OUTPUTS
    cam1 = pipeline.create(dai.node.ColorCamera)
    cam2 = pipeline.create(dai.node.ColorCamera)

    cam1.setBoardSocket(dai.CameraBoardSocket.CAM_A)  # 4-lane MIPI IMX378
    cam2.setBoardSocket(dai.CameraBoardSocket.CAM_D)  # 4-lane MIPI IMX378

    cam1.setResolution(dai.ColorCameraProperties.SensorResolution.THE_12_MP)
    cam2.setResolution(dai.ColorCameraProperties.SensorResolution.THE_12_MP)

    # Downscale the ISP output to 1/2 in each dimension
    cam1.setIspScale(1, 2)
    cam2.setIspScale(1, 2)

    cam1.setFps(fps)
    cam2.setFps(fps)

    # Set output XLink
    outcam1 = pipeline.create(dai.node.XLinkOut)
    outcam2 = pipeline.create(dai.node.XLinkOut)
    outcam1.setStreamName("cam1")
    outcam2.setStreamName("cam2")

    # LINKING
    cam1.isp.link(outcam1.input)
    cam2.isp.link(outcam2.input)

    # Device side: keep only the newest frame and drop older ones
    outcam1.input.setBlocking(False)
    outcam2.input.setBlocking(False)
    outcam1.input.setQueueSize(1)
    outcam2.input.setQueueSize(1)

    # Open the device and start the pipeline in one step
    with dai.Device(pipeline) as device:
        # Host-side queues (named q_cam* so they don't shadow the camera nodes)
        q_cam1 = device.getOutputQueue(name="cam1", maxSize=1, blocking=False)
        q_cam2 = device.getOutputQueue(name="cam2", maxSize=1, blocking=False)

        n = 0
        start_time = time.time()  # must exist before the first elapsed-time check

        while True:
            frame_count += 1
            n += 1

            elapsed_time = time.time() - start_time
            if elapsed_time > 1.0:
                measured_fps = frame_count / elapsed_time
                print(f"FPS: {measured_fps:.2f}\n")
                frame_count = 0
                start_time = time.time()

            start_adq = time.time()  # Start the timer
            Frame1 = getFrame(q_cam1)
            # Frame2 = getFrame(q_cam2)
            end_adq = time.time()  # Stop the timer

            tiempo_adquisicion = end_adq - start_adq
            print(n, f"- acquisition time: {tiempo_adquisicion} seconds")

            # Display the current frame in the window
            cv2.imshow("Frame1", Frame1)
            # cv2.imshow("Frame2", Frame2)

            # Check for keyboard input (after imshow, so the window refreshes)
            key = cv2.waitKey(1) & 0xFF
            if key == ord('q'):
                break
```
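The frame-rate measurement in the loop can be factored into a small helper so it doesn't get tangled with the acquisition timing. This is a minimal sketch: `FpsCounter` is a hypothetical class, not part of DepthAI or OpenCV. It measures the rate at which the host loop consumes frames, which can be lower than the value passed to `setFps()` when non-blocking, size-1 queues drop older frames. The clock is injectable so the logic can be checked without a camera.

```python
import itertools
import time
from typing import Callable, Optional


class FpsCounter:
    """Hypothetical helper: reports the frame rate once per `interval` seconds."""

    def __init__(self, interval: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.interval = interval
        self.clock = clock
        self.count = 0
        self.start = clock()

    def tick(self) -> Optional[float]:
        """Count one frame; return FPS when an interval has elapsed, else None."""
        self.count += 1
        now = self.clock()  # read the clock once per tick
        elapsed = now - self.start
        if elapsed < self.interval:
            return None
        fps = self.count / elapsed
        self.count = 0
        self.start = now  # next window starts exactly where this one ended
        return fps


# Demo with a fake clock that advances 0.125 s per reading (8 frames per second)
ticks = itertools.count(1)
counter = FpsCounter(interval=1.0, clock=lambda: next(ticks) * 0.125)
for _ in range(20):
    fps = counter.tick()
    if fps is not None:
        print(f"FPS: {fps:.2f}")  # prints "FPS: 8.00" twice
```

`time.monotonic` is used as the default clock because, unlike `time.time`, it is not affected by system clock adjustments.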

Thank you very much