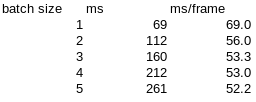

erik, I tried 6 SHAVE cores and 2 inference threads at batch sizes 1-5, but I wasn't able to figure out how to actually run batched inference. Even after flattening an [n,3,256,192] tensor and attaching it to a Buffer, I still only get back [1, 133, 384] and [1, 133, 512] results. The average round-trip inference time ranged from 69 to 261 ms (averaged over 40 frames).

It appears that batch size 2 would be an excellent latency/throughput trade-off, though only if I can figure out how to actually get batched results back from batched inference. I looked through other posts but didn't see any similar issues.
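For anyone wanting to redo the latency/throughput comparison, here is a rough sketch of the arithmetic. It assumes each round trip returns results for the whole batch (which is exactly what isn't happening yet), and pairing 69 ms with batch size 1 and 261 ms with batch size 5 is my assumption about the endpoints of the measured range. `throughput_fps` is just a helper name made up for this example.

```python
def throughput_fps(batch_size: int, round_trip_ms: float) -> float:
    """Frames per second if one round trip yields results for batch_size frames."""
    if batch_size < 1 or round_trip_ms <= 0:
        raise ValueError("batch_size must be >= 1 and round_trip_ms must be > 0")
    return batch_size * 1000.0 / round_trip_ms


# Assumed pairing of the measured range endpoints with batch sizes 1 and 5.
fps_batch_1 = throughput_fps(1, 69.0)
fps_batch_5 = throughput_fps(5, 261.0)

print(f"batch 1: {fps_batch_1:.1f} fps, batch 5: {fps_batch_5:.1f} fps")
```

Plugging in the measured latency for each batch size gives the throughput side of the trade-off; latency per frame is just the round-trip time itself.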

Edit: I think I'll just change the graph to have 2 inputs, similar to this, each [1,3,256,192], and then Concat them into a [2,3,256,192] tensor within the graph so that I can link the inputs directly to ImgManip nodes.
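To sanity-check the shapes before touching the model, here is a small NumPy sketch of what a Concat along axis 0 should produce. It only imitates the tensor math on the host, not the on-device graph, and the random arrays are stand-ins for two preprocessed frames. It also shows that flattening the batched tensor (row-major) is the same as laying the two flattened frames back to back, which is the layout I'd expect a flattened [n,3,256,192] buffer to have.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-ins for two preprocessed frames, each shaped like one model input.
frame_a = rng.random((1, 3, 256, 192), dtype=np.float32)
frame_b = rng.random((1, 3, 256, 192), dtype=np.float32)

# Host-side equivalent of a Concat along the batch axis (axis 0).
batched = np.concatenate([frame_a, frame_b], axis=0)

# Row-major flattening keeps each frame contiguous, one after the other.
flat = batched.reshape(-1)
per_frame = 3 * 256 * 192

print(batched.shape, flat.size, per_frame)
```

If the on-device Concat behaves the same way, the outputs should come back with a leading dimension of 2 instead of 1.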