At Luxonis, our mission is easy to say but much harder to achieve: “Robotic vision, made simple.” With our powerful DepthAI API ecosystem, coupled with our range of OAK cameras, we provide the means for robots, computers, and machines to perceive the world as people do.

What does that look like? And how do we do it? These are the questions we’ll explore in our “Beginner’s Guide to Robotic Vision” blog series.

If you’re already an experienced Luxonis user, you may want to skip ahead to the more technical details in our DepthAI Documentation pages, but if you’re new to the space, you’re in exactly the right place.

In this article and the ones that follow it, we’ll unpack the essential ideas, terms, and applications that form the core of robotic vision. We’ll start by addressing three primary umbrella topics: Artificial Intelligence (AI), Machine Learning (ML), and Computer Vision (CV). Then we’ll take a closer look at some of the applications and use-cases that flow from them.

Throughout, our focus will be much more on the what and the why of robotic vision, as opposed to getting into the weeds with the how. So, for those looking for help with code, troubleshooting, or customization, did we mention DepthAI Documentation? You can also always reach us on Discord, or join in on the discussions of our incredible community. But for those looking to learn more about the basics of what we do at Luxonis, these articles are for you.

With our direction clear, let’s get started. First up, let’s talk about AI, or more specifically, Spatial AI.

Why not just say “AI”? Why “Spatial AI”? For a very simple reason: true perception requires a sense of space. We don’t work with AI in the abstract or confined to a computer; we work with it to provide vision to devices that would otherwise have none. And for that vision to have any meaning, it must be given context. It would be simple enough to hook up a video feed to a robot and call it a day, but that’s not what we’re talking about here. A video feed alone won’t allow a robot to derive meaning from the world around it. What we want is for this new input to let our robot engage with, recognize, and learn from the information this new sense provides. And the first step in that process is spatial.

What is an object? Does that object have a name? How far away is that object from me? These are all questions people can answer long before they even have the words to do so. These kinds of spatial understandings are all just innate parts of the human experience. But not so for robots; it’s up to us to define what’s significant for them.

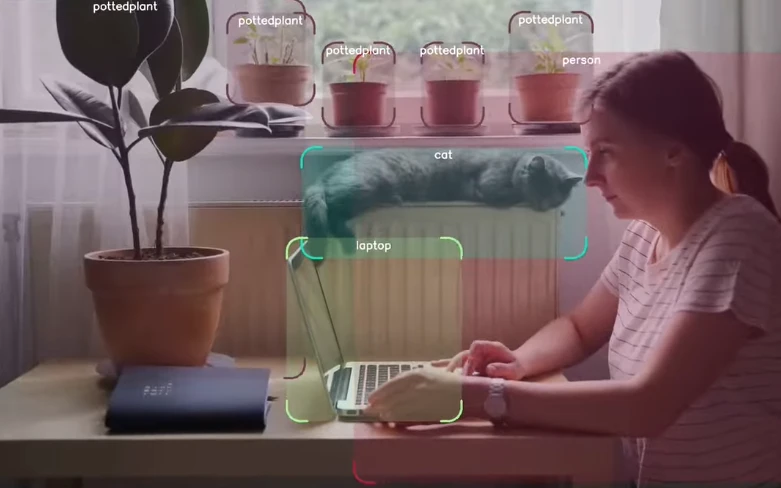

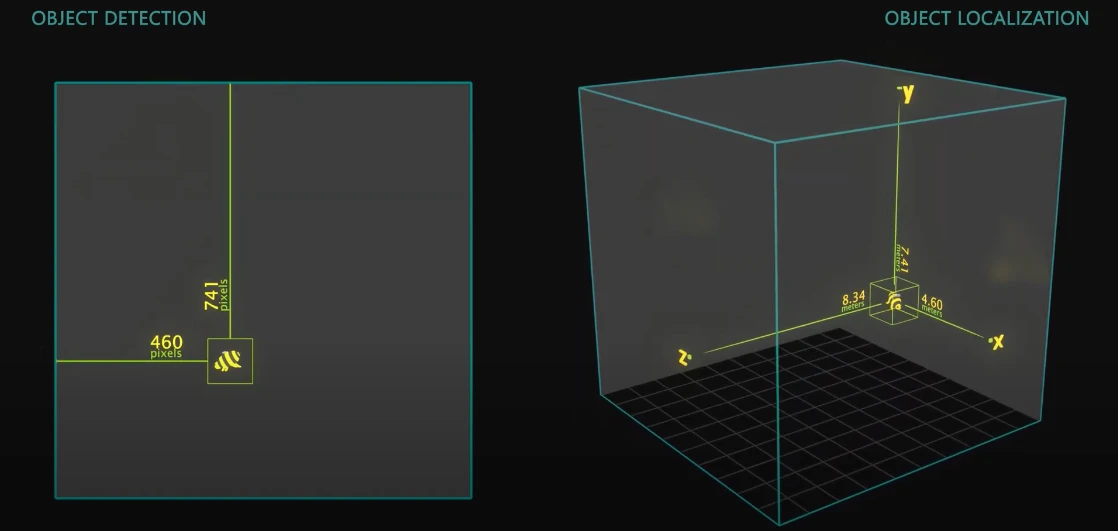

One of the basic building blocks of Spatial AI is object detection. With this technique, rectangular regions of interest called “bounding boxes” are placed around objects so the robot can understand where they are in its field of view. By running inference on the color and/or mono frames, a trained detector places a bounding box around the pixels that make up the object and assigns it X (horizontal) and Y (vertical) coordinates. This 2D version of detection is a basic but essential first step of Spatial AI.
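To make the X and Y idea concrete, here is a minimal Python sketch that turns a single detection into pixel coordinates. It assumes that the detector reports each box as normalized 0 to 1 values (xmin, ymin, xmax, ymax), which is a common convention. The `Detection` class and the helper functions are hypothetical illustrations and are not part of the DepthAI API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical 2D detection: a label plus a box in normalized [0, 1] coordinates."""
    label: str
    confidence: float
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def to_pixel_box(det: Detection, width: int, height: int) -> tuple[int, int, int, int]:
    """Scale the normalized box to pixel coordinates in a width x height frame."""
    return (
        round(det.xmin * width),
        round(det.ymin * height),
        round(det.xmax * width),
        round(det.ymax * height),
    )


def box_center(box: tuple[int, int, int, int]) -> tuple[float, float]:
    """Return the (X, Y) pixel coordinates of the box center."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


if __name__ == "__main__":
    det = Detection("apple", 0.91, 0.25, 0.40, 0.50, 0.80)
    box = to_pixel_box(det, width=640, height=400)
    print(box, box_center(box))  # (160, 160, 320, 320) (240.0, 240.0)
```

The result is still only a position on the image plane. Nothing in it says how far away the apple is.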

But 2D object detection on its own is nothing special. To achieve true Spatial AI, we need to go 3D, which means we need to sense depth. Object detection in 3D, also referred to as “object localization,” therefore adds a third, “Z” dimension. With it, a robot knows not only where something is on a flat plane (or screen) but also how near or far away it is. Once depth is added and the full spatial location of each object is known, a whole new world opens up. With this complete form of Spatial AI, many additional techniques come into play, allowing robots to classify and categorize objects and then use that understanding to tackle a wide range of use-cases.
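Adding depth can be sketched in the same way. The example below is a simplified illustration of the general idea, not a description of how DepthAI computes spatial coordinates on-device. It takes the median of the valid depth readings inside a bounding box as Z. It then back-projects a pixel, typically the box center, into X and Y using a standard pinhole camera model. The depth map (in millimetres, with 0 meaning “no reading”) and the intrinsics `fx`, `fy`, `cx`, and `cy` are hypothetical values. In practice they would come from a depth sensor and its calibration.

```python
from __future__ import annotations

import statistics


def roi_depth_mm(depth: list[list[int]], box: tuple[int, int, int, int]) -> float | None:
    """Median of the valid (non-zero) depth values inside box = (x1, y1, x2, y2).

    x2 and y2 are exclusive. The median is less sensitive than the mean to
    background pixels that fall inside the box.
    """
    x1, y1, x2, y2 = box
    values = [depth[y][x] for y in range(y1, y2) for x in range(x1, x2) if depth[y][x] > 0]
    return statistics.median(values) if values else None


def pixel_to_3d(u: float, v: float, z_mm: float,
                fx: float, fy: float, cx: float, cy: float) -> tuple[float, float, float]:
    """Back-project pixel (u, v) at depth z_mm into camera-frame X, Y, Z (pinhole model)."""
    x_mm = (u - cx) * z_mm / fx
    y_mm = (v - cy) * z_mm / fy
    return (x_mm, y_mm, z_mm)


if __name__ == "__main__":
    # Tiny hypothetical 4x4 depth map in millimetres; 0 means "no reading".
    depth = [
        [0, 0, 0, 0],
        [0, 2000, 2100, 0],
        [0, 0, 1900, 0],
        [0, 0, 0, 0],
    ]
    z = roi_depth_mm(depth, (1, 1, 3, 3))
    print(z)  # 2000
    # Hypothetical intrinsics for a 640x400 frame; the box center is at pixel (420, 200).
    print(pixel_to_3d(420, 200, z, fx=500.0, fy=500.0, cx=320.0, cy=200.0))  # (400.0, 0.0, 2000)
```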

To start, let’s talk about 3D landmark detection. Landmark detection is the method of applying object localization to a pre-defined model. For example, landmark detection could be applied to models of human pose estimation or hand pose estimation. In these cases, the on-device neural network identifies the key structures and joints that make up the human body or hand, respectively, assigns them coordinates, and links them together, as seen in the following example.
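Once each landmark has coordinates, linking the landmarks together comes down to a fixed list of connections, or skeleton. This minimal sketch uses a hypothetical four-point subset of hand landmarks with made-up 3D coordinates in millimetres. Real hand and pose models define their own landmark sets and topologies.

```python
import math

# Hypothetical subset of hand landmarks: name -> (X, Y, Z) in millimetres.
landmarks = {
    "wrist": (0.0, 0.0, 400.0),
    "index_base": (30.0, -40.0, 400.0),
    "index_tip": (30.0, -110.0, 400.0),
    "thumb_tip": (-30.0, -40.0, 400.0),
}

# Hypothetical skeleton: which landmarks are linked to each other.
skeleton = [
    ("wrist", "index_base"),
    ("index_base", "index_tip"),
    ("wrist", "thumb_tip"),
]


def link_lengths(points: dict, links: list) -> dict:
    """Euclidean 3D length of every linked pair, e.g. to sanity-check a detected pose."""
    return {(a, b): math.dist(points[a], points[b]) for a, b in links}


if __name__ == "__main__":
    for (a, b), length in link_lengths(landmarks, skeleton).items():
        print(f"{a} -> {b}: {length:.1f} mm")
```

Because every landmark has a Z value, these lengths are real-world distances rather than pixel distances. That makes them comparable as a hand moves closer to or farther from the camera.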

By creating this association between landmarks, more complex inference can be done, such as performing a visual search to detect pedestrians walking across the street, or reading the movements of someone’s hands to interpret American Sign Language.

Spatial AI can also be applied in a broader sense. Instead of picking out specific landmarks, it can be used to identify areas of coverage. This process is known as “segmentation,” and it takes two main forms: semantic segmentation and instance segmentation. In both cases, the system is first trained to distinguish objects that belong to a specified group from objects outside that group. Every pixel of an object in the group is then shaded (colored) to mark it as included. Pixels of anything outside the group are shaded differently to mark them as excluded.
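In code, a segmentation result is typically a mask with one class id per pixel. This sketch assumes a hypothetical two-class mask (0 = excluded, 1 = “apple”) stored as nested lists. It shades each pixel by its class and measures how much of the frame the included group covers.

```python
from __future__ import annotations

# Hypothetical class ids and the RGB color used to shade each one.
EXCLUDED, APPLE = 0, 1
PALETTE = {EXCLUDED: (0, 0, 0), APPLE: (255, 0, 0)}


def colorize(mask: list[list[int]]) -> list[list[tuple[int, int, int]]]:
    """Shade every pixel according to its class id."""
    return [[PALETTE[class_id] for class_id in row] for row in mask]


def coverage(mask: list[list[int]], class_id: int) -> float:
    """Fraction of pixels in the mask assigned to class_id."""
    total = sum(len(row) for row in mask)
    hits = sum(1 for row in mask for c in row if c == class_id)
    return hits / total


if __name__ == "__main__":
    # A tiny 3x4 semantic mask: every apple pixel shares the same class id.
    mask = [
        [0, 1, 1, 0],
        [1, 1, 1, 0],
        [0, 1, 0, 0],
    ]
    print(colorize(mask)[0])  # [(0, 0, 0), (255, 0, 0), (255, 0, 0), (0, 0, 0)]
    print(coverage(mask, APPLE))  # 0.5
```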

The difference between semantic segmentation and instance segmentation is that, in semantic segmentation, all objects of the included group are considered the same. For example, the apples in a pile on a table would all be assigned the same color and seen collectively as “apples.” If we instead wanted an instance segmentation of our apples, each one would be assigned a unique color so that we could differentiate between “apple 1” and “apple 2.” Instance segmentation is essentially a combination of object detection and semantic segmentation.
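Taking the idea that instance segmentation combines detection with semantic segmentation, the sketch below splits a semantic “apple” mask into separate instances by intersecting it with detection boxes. This is a deliberately simplified stand-in for a real instance segmentation network. The boxes are hypothetical, and where boxes overlap, the first box simply claims the shared pixels.

```python
from __future__ import annotations


def instances_from_boxes(mask: list[list[int]],
                         boxes: list[tuple[int, int, int, int]],
                         class_id: int) -> list[list[int]]:
    """Assign an instance id (1, 2, ...) to class pixels inside each box.

    Boxes are (x1, y1, x2, y2) with x2/y2 exclusive. Pixels outside every box,
    or not of class_id, stay 0. Where boxes overlap, the first box wins.
    """
    height, width = len(mask), len(mask[0])
    instances = [[0] * width for _ in range(height)]
    for instance_id, (x1, y1, x2, y2) in enumerate(boxes, start=1):
        for y in range(max(0, y1), min(height, y2)):
            for x in range(max(0, x1), min(width, x2)):
                if mask[y][x] == class_id and instances[y][x] == 0:
                    instances[y][x] = instance_id
    return instances


if __name__ == "__main__":
    # Semantic mask: two apples, both labelled 1 ("apples").
    semantic = [
        [1, 1, 0, 1, 1],
        [1, 1, 0, 1, 1],
    ]
    # Hypothetical detector output: one box per apple.
    boxes = [(0, 0, 2, 2), (3, 0, 5, 2)]
    for row in instances_from_boxes(semantic, boxes, class_id=1):
        print(row)
    # [1, 1, 0, 2, 2]
    # [1, 1, 0, 2, 2]
```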

One of the primary use-cases for segmentation is navigation and obstacle avoidance. For an AI to successfully take a vehicle from point A to point B, for example, it must not only identify every object around it but also understand which of those objects are safe and which must be avoided. Take the case of a self-driving car. First, it must be able to see the road and lane lines so it doesn’t create a hazard for other drivers. These would be semantically segmented as permitted areas. Second, it must be able to see other vehicles and any other obstacles that could result in a collision or damage. In these cases, it may be beneficial to perform instance segmentation to better track each object’s independent position and velocity, while still recognizing all of them as hazards.
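For the navigation case, segmentation and depth can be combined into a simple go/no-go check. This sketch makes several assumptions. The class ids (1 = road, 2 = obstacle) are hypothetical. The per-pixel depth map is in millimetres and aligned with the mask. The vehicle drives through a given range of image columns, and it has a fixed stopping distance. A real planner would be far more involved.

```python
from __future__ import annotations

# Hypothetical class ids produced by a segmentation model.
ROAD, OBSTACLE = 1, 2


def path_is_clear(mask: list[list[int]],
                  depth_mm: list[list[int]],
                  corridor: tuple[int, int],
                  stop_distance_mm: int) -> bool:
    """Return False if any obstacle pixel in the corridor is closer than stop_distance_mm.

    corridor = (first_column, last_column_exclusive) of the image the vehicle will
    drive through. depth_mm must be pixel-aligned with mask; 0 means "no reading".
    """
    x1, x2 = corridor
    for y, row in enumerate(mask):
        for x in range(x1, x2):
            d = depth_mm[y][x]
            if row[x] == OBSTACLE and 0 < d < stop_distance_mm:
                return False
    return True


if __name__ == "__main__":
    mask = [
        [ROAD, OBSTACLE, ROAD],
        [ROAD, ROAD, ROAD],
    ]
    depth = [
        [5000, 3000, 5000],
        [4000, 4000, 4000],
    ]
    print(path_is_clear(mask, depth, (0, 3), stop_distance_mm=2000))  # True
    print(path_is_clear(mask, depth, (0, 3), stop_distance_mm=4000))  # False
```

Segmentation answers *what* each pixel is, and depth answers *how far away* it is. The check needs both, which is why this use-case depends on the full spatial picture rather than 2D detection alone.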