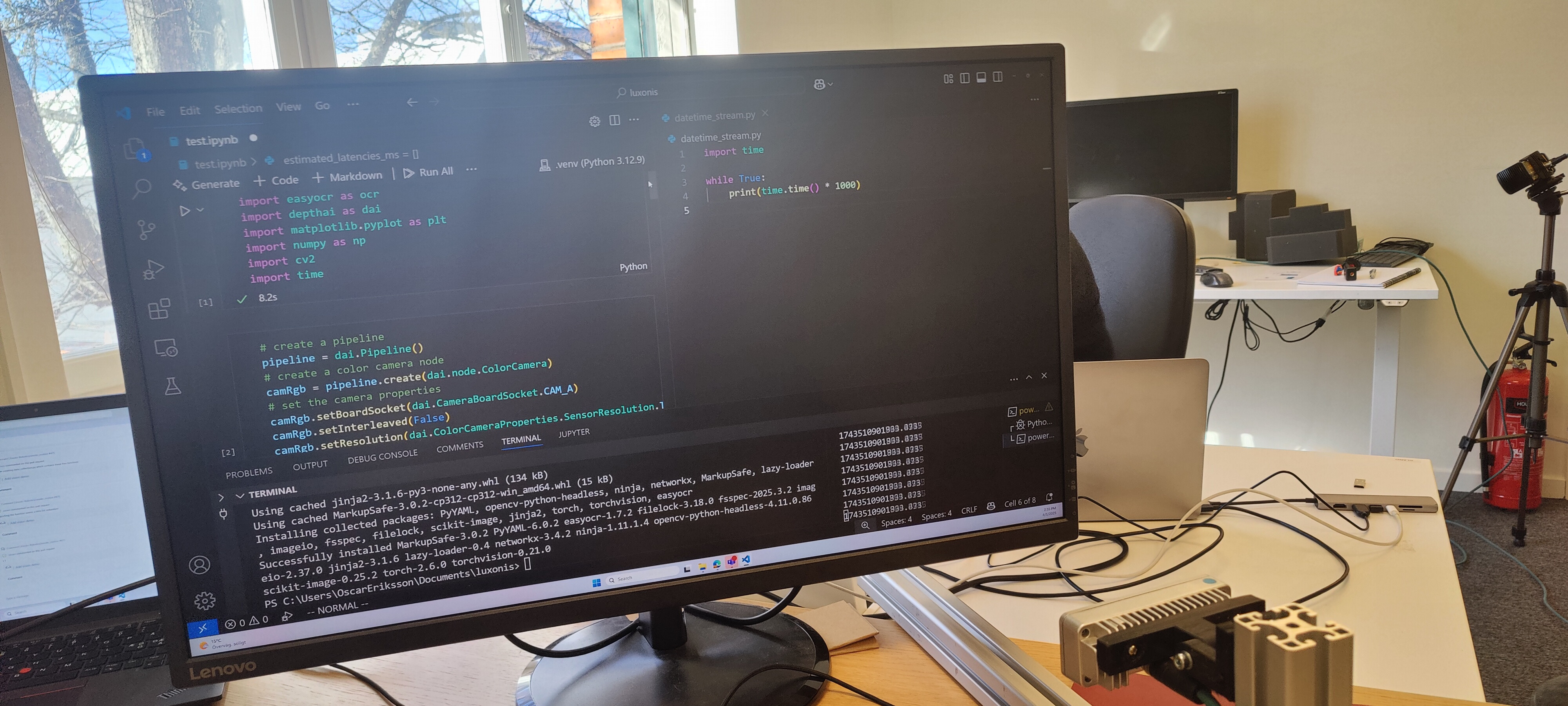

This is the code we use for displaying the timestamp on the screen (datetime_stream.py in the repo):

import time

while True:

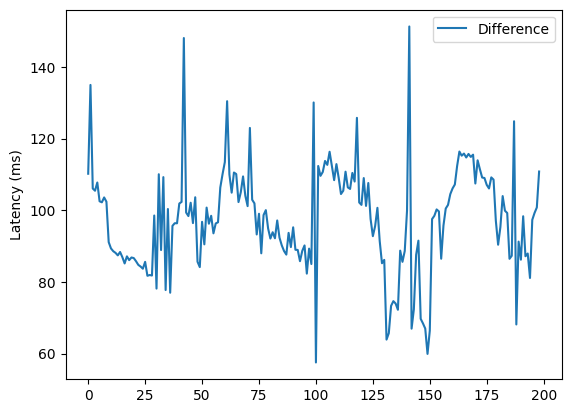

print(time.monotonic_ns() / 1000000)We have tried varying the fps, and the error persisted through that, being at the same levels of 90-100 ms. Another thing we have tried is to see if it has something to do with rolling shutter by placing our timestamp in different parts of the camera FOV, but the discrepancy has persisted through that as well.
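For reference, here is a minimal sketch of how per-frame latency could be summarised from such a capture. It assumes that each captured frame yields the timestamp shown on screen, and that the receive time is taken with `time.monotonic_ns()` on the same machine that displays the timestamp. Python documents the monotonic clock's reference point as undefined, so its values are only comparable within one machine. The function name `latency_stats_ms` and the sample values below are hypothetical and made up for illustration; they are not measured data.

```python
import statistics


def latency_stats_ms(samples):
    """Summarise latency from (displayed_ms, received_ms) pairs.

    displayed_ms: timestamp read off the screen in a captured frame.
    received_ms:  time.monotonic_ns() / 1_000_000 taken on the same
                  machine when that frame arrived (assumption).
    """
    latencies = [received - displayed for displayed, received in samples]
    return {
        "mean": statistics.mean(latencies),
        # Sample standard deviation needs at least two data points.
        "stdev": statistics.stdev(latencies) if len(latencies) > 1 else 0.0,
        "min": min(latencies),
        "max": max(latencies),
    }


# Made-up values, for illustration only (not measured data).
samples = [(1000.0, 1050.0), (2000.0, 2060.0), (3000.0, 3055.0)]
stats = latency_stats_ms(samples)
print(stats)
```

A stable mean with a small spread across fps settings would match the constant offset described above, rather than a per-frame effect.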

We fully accept that the error may lie in our experiment or measurement. We have tried to eliminate every possible cause we could think of, and we have made our repo for reproducing the error as simple as possible. If you have any other ideas about what might be wrong with the experiment or measurement, we are happy to try them to get to the bottom of this.

Do you know what experiments were done during development of the camera to measure its latency? We could try replicating one of them to confirm whether our experimental setup is what causes the issue.