We measured the latency of a frame from its image content and compared it with the latency obtained through the method suggested by Luxonis. The two latencies do not match.

Based on this post: Latency Measurement, we expect the latency of a given frame to be computed as

```python
import depthai as dai
latencyMs = (dai.Clock.now() - imgFrame.getTimestamp()).total_seconds() * 1000
```
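The formula can be wrapped in a small helper so it can be applied per frame and checked offline. This is a minimal sketch. It assumes that both `dai.Clock.now()` and `imgFrame.getTimestamp()` return `datetime.timedelta` values on the same host clock, which the `.total_seconds()` call suggests. The helper name `latency_ms` is hypothetical.

```python
from datetime import timedelta


def latency_ms(now: timedelta, frame_timestamp: timedelta) -> float:
    """Milliseconds between a frame's timestamp and the current host time.

    Hypothetical helper: both arguments are assumed to be timedelta values on
    the same clock, e.g. dai.Clock.now() and imgFrame.getTimestamp().
    """
    return (now - frame_timestamp).total_seconds() * 1000


# Offline usage with made-up values; in a pipeline this would be
# latency_ms(dai.Clock.now(), imgFrame.getTimestamp()).
print(latency_ms(timedelta(seconds=12.345), timedelta(seconds=12.300)))
```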

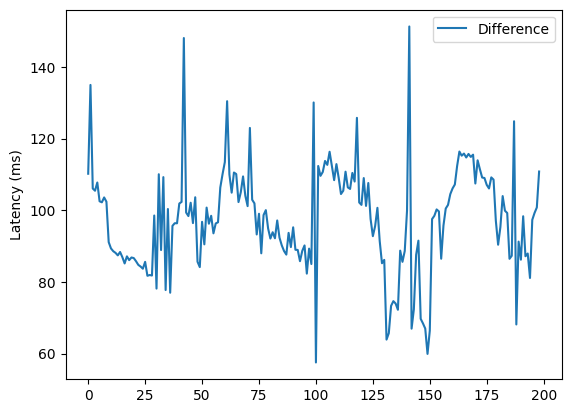

However, this latency does not match what we observe. In our experiment, the camera points at a monitor on which we print the current time. The true latency of a frame is the difference between the time visible in that frame and the current time in our program when the frame arrives. We compared this true latency with the latency computed from the Luxonis timestamps using the formula above, and the two do not match.
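The content-based ("true") latency from the monitor experiment reduces to the same kind of subtraction, with the printed time taking the place of the frame timestamp. This is a minimal sketch. It assumes the printed time has already been read from the frame (by eye or OCR, not shown) and that both the printed time and the receive time come from the same program clock. The function name and sample times are hypothetical.

```python
from datetime import datetime


def content_latency_ms(displayed_time: datetime, received_time: datetime) -> float:
    """Latency implied by the image content, in milliseconds.

    displayed_time: the time printed on the monitor, as read from the frame.
    received_time:  program time at which that frame was received.
    Note: this also includes any delay between printing the time and it
    becoming visible on the monitor (rendering, display refresh).
    """
    return (received_time - displayed_time).total_seconds() * 1000


# Made-up example: printed 10:00:00.100, frame received 10:00:00.250.
shown = datetime(2024, 1, 1, 10, 0, 0, 100_000)
received = datetime(2024, 1, 1, 10, 0, 0, 250_000)
print(content_latency_ms(shown, received))
```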

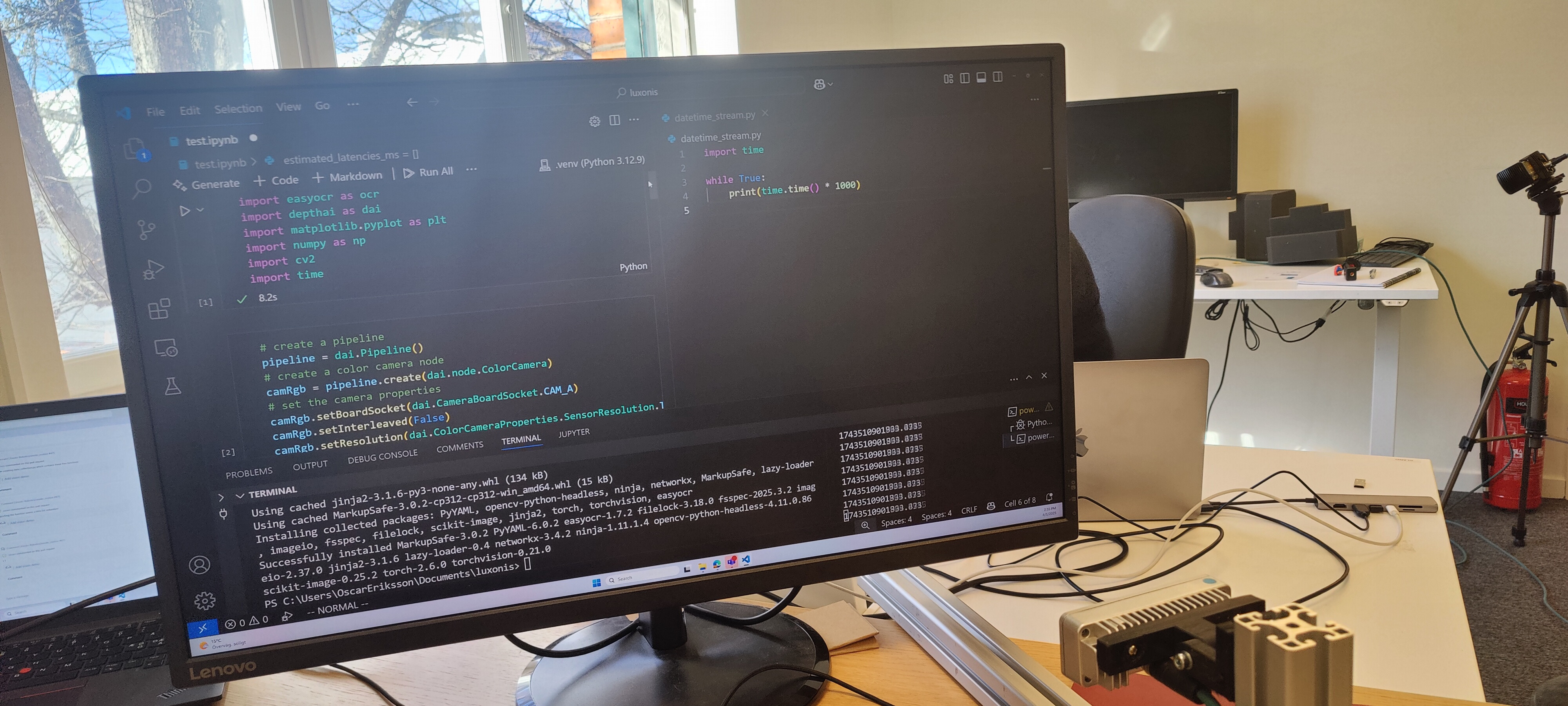

The image above shows an example of our setup, with the Luxonis camera pointing at the monitor that displays the current-time printouts.

The latency based on image content (displayed time versus program time) differs by 100 ms from the latency based on the Luxonis frame timestamp and the Luxonis clock (`dai.Clock.now()`).
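To compare the discrepancy across runs, one option is to pair both latencies for each frame and report the mean and spread of their difference. This is a minimal sketch. The function name `latency_discrepancy_ms` and the sample numbers are hypothetical and are not taken from our measurements.

```python
from statistics import mean, stdev


def latency_discrepancy_ms(content_ms: list[float], reported_ms: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of (content-based - reported) latency.

    content_ms[i] and reported_ms[i] must refer to the same frame.
    """
    if len(content_ms) != len(reported_ms) or len(content_ms) < 2:
        raise ValueError("need two or more paired measurements")
    diffs = [c - r for c, r in zip(content_ms, reported_ms)]
    return mean(diffs), stdev(diffs)


# Illustrative values only.
m, s = latency_discrepancy_ms([150.0, 160.0, 155.0], [50.0, 62.0, 53.0])
print(f"mean difference {m:.1f} ms, std {s:.1f} ms")
```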

We have put together a minimal code example in this repository. The link points to the main notebook for the procedure: Minimal Example Repo

This discrepancy is a problem for our use case, which requires accurate latency measurements.

Do you have any pointers on how we can get more accurate latency measurements, or is there something we are missing?