Amovlab-matnav

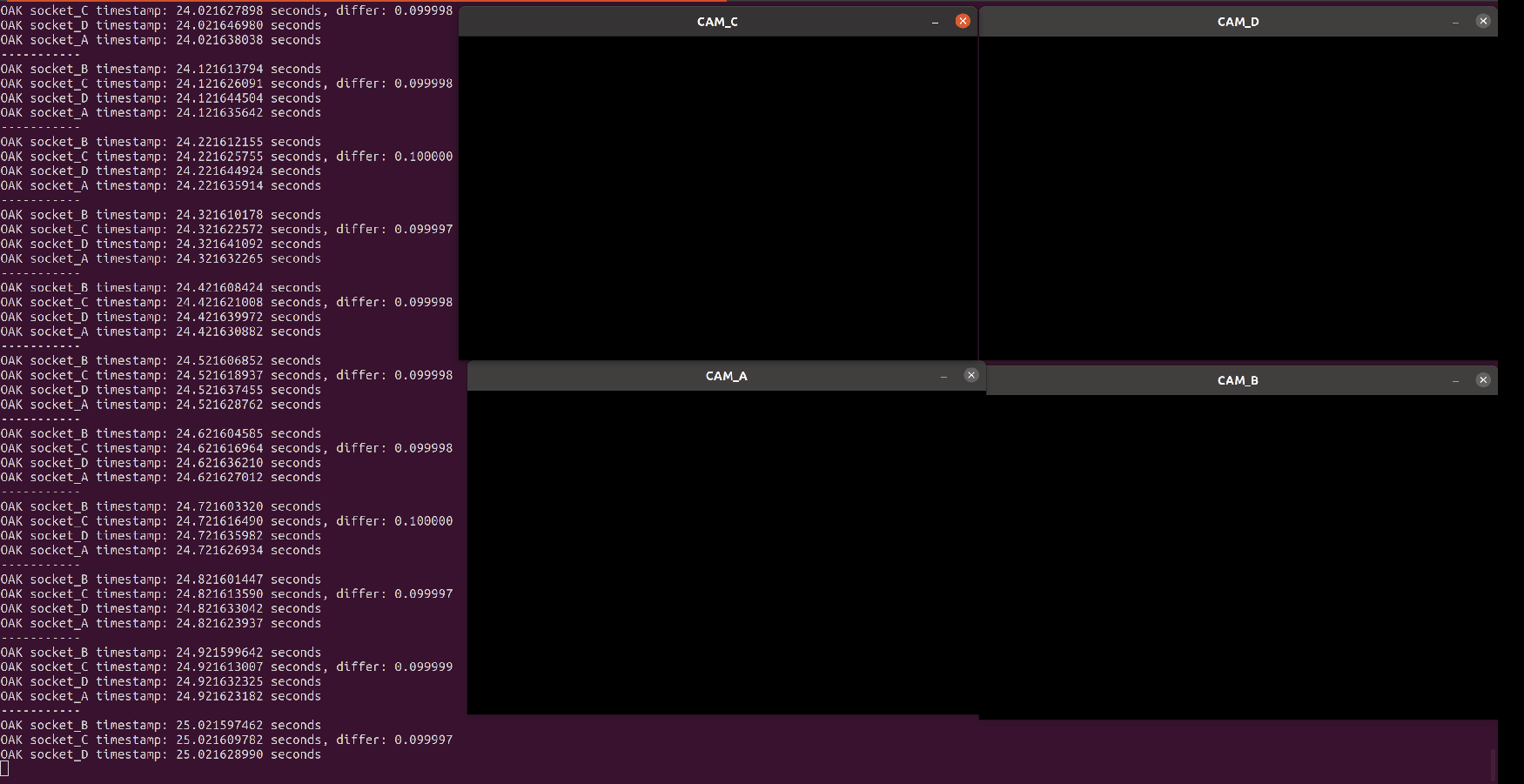

@jakaskerl I tried setting all cameras to "FrameSyncMode::INPUT" and found that the timestamps of the four cameras were synchronized, but there was no image output.

Here's my code:

class TicToc

{

public:

TicToc()

{

tic();

}

void tic()

{

start_ = std::chrono::system_clock::now();

}

double toc()

{

end_ = std::chrono::system_clock::now();

std::chrono::duration<double> elapsed_seconds = end_ - start_;

return elapsed_seconds.count() * 1000;

}

private:

std::chrono::time_point<std::chrono::system_clock> start_, end_;

};

// OAK 设备类

class OAKDevice

{

public:

OAKDevice() = default;

~OAKDevice()

{

imu_queue.reset();

img_queue.clear();

depth_queue.clear();

}

// 创建 depthai 管道

void createPipeline(dai::Pipeline& pipeline)

{

pipeline.setXLinkChunkSize(0);

pipeline.setSippBufferSize(0);

std::map<std::string, std::shared_ptr<dai::node::MonoCamera>> camera_node;

std::map<std::string, std::shared_ptr<dai::node::XLinkOut>> xout_img_node;

std::map<std::string, std::shared_ptr<dai::node::StereoDepth>> stereo_node;

std::map<std::string, std::shared_ptr<dai::node::XLinkOut>> xout_depth_node;

std::shared_ptr<dai::node::Sync> sync_node = pipeline.create<dai::node::Sync>();

std::shared_ptr<dai::node::MessageDemux> demux_node =

pipeline.create<dai::node::MessageDemux>();

sync_node->out.link(demux_node->input);

std::map<std::string, dai::CameraBoardSocket> cam_socket_opts = {

{"CAM_A", dai::CameraBoardSocket::CAM_A},

{"CAM_B", dai::CameraBoardSocket::CAM_B},

{"CAM_C", dai::CameraBoardSocket::CAM_C},

{"CAM_D", dai::CameraBoardSocket::CAM_D}};

//-------------------- link ---------------------//

for (const auto& cam_name : cam_list)

{

camera_node[cam_name] = pipeline.create<dai::node::MonoCamera>();

camera_node[cam_name]->setBoardSocket(cam_socket_opts[cam_name]);

camera_node[cam_name]->setResolution(dai::MonoCameraProperties::SensorResolution::THE_400_P);

camera_node[cam_name]->setFps(10);

camera_node[cam_name]->out.link(sync_node->inputs[cam_name]);

camera_node[cam_name]->initialControl.setFrameSyncMode(dai::CameraControl::FrameSyncMode::INPUT);

xout_img_node[cam_name] = pipeline.create<dai::node::XLinkOut>();

xout_img_node[cam_name]->setStreamName(cam_name);

demux_node->outputs[cam_name].link(xout_img_node[cam_name]->input);

}

}

const std::vector<std::string> cam_list{"CAM_B", "CAM_C", "CAM_D", "CAM_A"};

// 存放传感器数据的队列

std::shared_ptr<dai::DataOutputQueue> imu_queue;

std::map<std::string, std::shared_ptr<dai::DataOutputQueue>> img_queue;

std::map<std::string, std::shared_ptr<dai::DataOutputQueue>> depth_queue;

};

int main(int argc, char** argv)

{

ros::init(argc, argv, "oak");

ros::NodeHandle nh("~");

ros::console::set_logger_level(ROSCONSOLE_DEFAULT_NAME, ros::console::levels::Info);

printWelcomeMsg();

OAKDevice oak_device;

TicToc timer_pipe;

dai::Pipeline pipeline;

oak_device.createPipeline(pipeline);

printf("--Start pipeline time: %f ms\n", timer_pipe.toc());

TicToc timer_deivce;

dai::Device device(pipeline, dai::UsbSpeed::SUPER_PLUS);

printf("--Start device time: %f ms\n", timer_deivce.toc());

switch (static_cast<int32_t>(device.getUsbSpeed()))

{

case 5:

printf("--Usb speed: SUPER_PLUS\n");

break;

case 4:

printf("--Usb speed: SUPER\n");

break;

default:

printf("WARNING: Usb speed is not SUPER!\n");

break;

}

printf("--Device name: %s\n", device.getDeviceName().c_str());

// 获取传感器数据

// oak_device.imu_queue = device.getOutputQueue("imu", 10, false);

for (const auto& cam_name : oak_device.cam_list)

{

oak_device.img_queue[cam_name] =

device.getOutputQueue(cam_name, 20, false);

// if(cam_name == "CAM_A" || cam_name == "CAM_C")

// {

// oak_device.depth_queue[cam_name] =

// device.getOutputQueue(cam_name + "_depth", 20, false);

// }

}

double last_time = 0.0;

while (ros::ok())

{

for (const auto& cam_name : oak_device.cam_list)

{

std::shared_ptr<dai::ImgFrame> img_data = oak_device.img_queue[cam_name]->get<dai::ImgFrame>();

if (img_data == nullptr)

{

std::chrono::milliseconds dura(1);

std::this_thread::sleep_for(dura);

continue;

}

cv::Mat cvImg = img_data->getCvFrame();

if (cam_name == "CAM_C")

{

auto oak_timestamp = img_data->getTimestampDevice().time_since_epoch().count();

double curr_time = oak_timestamp/1e9;

printf("OAK socket_C timestamp: %.9lf seconds, differ: %lf\n", curr_time, curr_time-last_time);

last_time = oak_timestamp/1e9;

cv::imshow(cam_name, cvImg);

}

else if (cam_name == "CAM_B")

{

auto oak_timestamp = img_data->getTimestampDevice().time_since_epoch().count();

printf("OAK socket_B timestamp: %.9lf seconds\n", oak_timestamp/1e9);

cv::imshow(cam_name, cvImg);

}

else if (cam_name == "CAM_A")

{

auto oak_timestamp = img_data->getTimestampDevice().time_since_epoch().count();

printf("OAK socket_A timestamp: %.9lf seconds\n", oak_timestamp/1e9);

cv::imshow(cam_name, cvImg);

}

else

{

auto oak_timestamp = img_data->getTimestampDevice().time_since_epoch().count();

printf("OAK socket_D timestamp: %.9lf seconds\n", oak_timestamp/1e9);

cv::imshow(cam_name, cvImg);

}

cv::waitKey(1);

}

printf("-----------\n");

}

return 0;

}

Thanks,

Nav