No, there's not really much to blame on thermal issues. I checked the IC temperature, and the output lines up with what the documentation says I should see. So I'm guessing it's just the general result of the OV7251's sensor noise.

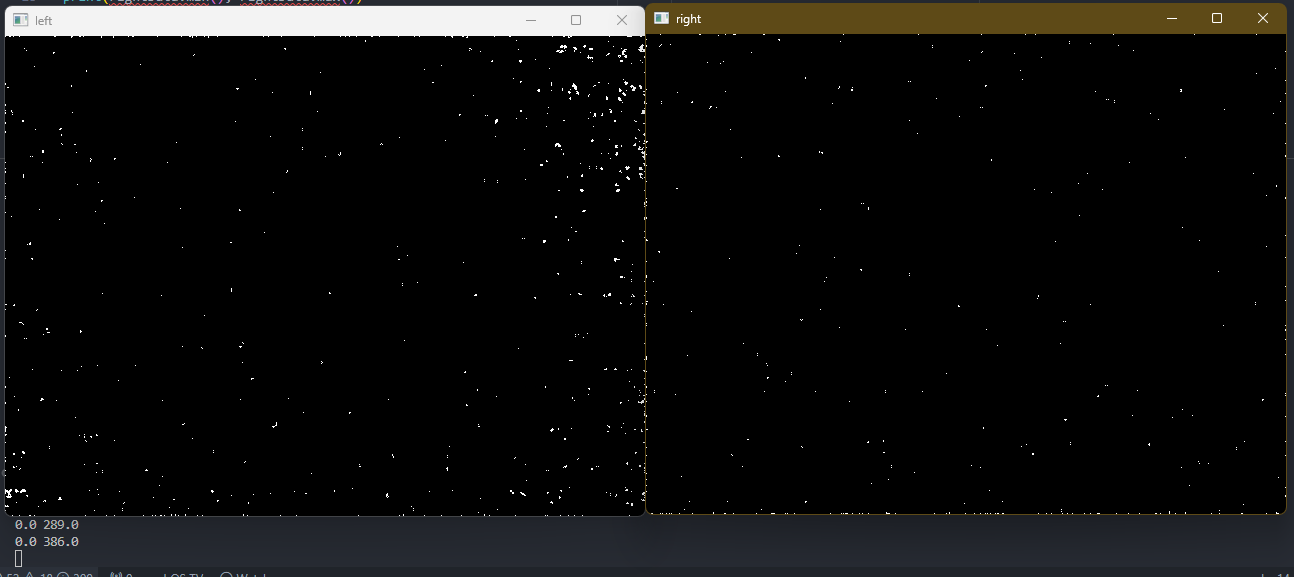

I decided to do some experiments anyway, because why not? I captured lots of frames from both mono cameras at ISO 100 with an exposure of 20500 µs, then summed them up to see what "hot spots" were generated. Most frames had no hot spots at all, but some did.

The left camera was a bit "hotter" than the right camera. I think that one is closer to the Myriad X, so it's probably just a little more thermal noise.

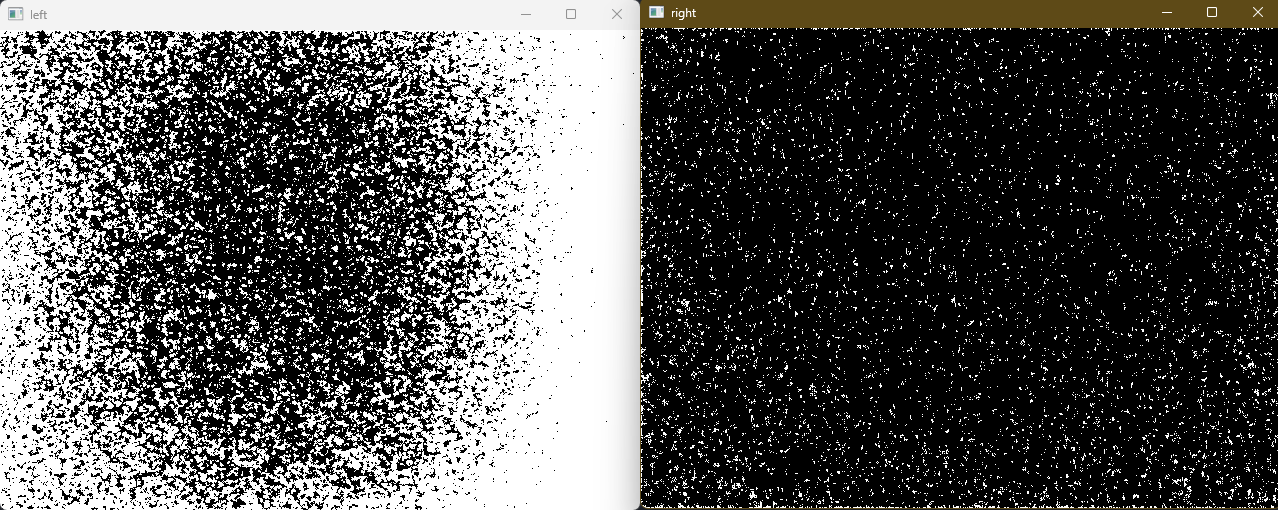

Repeating the same test with the highest ISO setting gives similar results, but with many more bright pixels. This makes sense: a higher ISO means more sensor gain, and thus a noisier picture.

Here's the code for grabbing all the frames. I just manually stopped it after around 700-1000 frames.

    #!/usr/bin/env python3

    from pathlib import Path
    import shutil
    import time

    import cv2
    import depthai as dai

    # Create pipeline
    pipeline = dai.Pipeline()

    # Define sources and outputs
    monoRight = pipeline.create(dai.node.MonoCamera)
    monoLeft = pipeline.create(dai.node.MonoCamera)
    xoutRight = pipeline.create(dai.node.XLinkOut)
    xoutLeft = pipeline.create(dai.node.XLinkOut)

    xoutRight.setStreamName("right")
    xoutLeft.setStreamName("left")

    # Properties
    monoRight.setCamera("right")
    monoLeft.setCamera("left")
    monoRight.setResolution(dai.MonoCameraProperties.SensorResolution.THE_480_P)
    monoLeft.setResolution(dai.MonoCameraProperties.SensorResolution.THE_480_P)

    # Linking
    monoRight.out.link(xoutRight.input)
    monoLeft.out.link(xoutLeft.input)

    # Image control: one XLinkIn feeds the control input of both cameras
    controlIn = pipeline.create(dai.node.XLinkIn)
    controlIn.setStreamName('control')
    controlIn.out.link(monoRight.inputControl)
    controlIn.out.link(monoLeft.inputControl)

    # Connect to device and start pipeline
    with dai.Device(pipeline) as device:
        # Output queues used to get the grayscale frames from the outputs defined above
        qRight = device.getOutputQueue(name="right", maxSize=4, blocking=False)
        qLeft = device.getOutputQueue(name="left", maxSize=4, blocking=False)

        # Queue for sending sensor config
        controlQueue = device.getInputQueue(controlIn.getStreamName())

        dirName_l = "mono_data_l"
        dirName_r = "mono_data_r"

        # Start from empty output directories. ignore_errors avoids a crash
        # on the first run, when the directories do not exist yet.
        shutil.rmtree(dirName_l, ignore_errors=True)
        shutil.rmtree(dirName_r, ignore_errors=True)
        Path(dirName_l).mkdir(parents=True, exist_ok=True)
        Path(dirName_r).mkdir(parents=True, exist_ok=True)

        while True:
            # Manual exposure: 20500 us exposure time at ISO 1600
            # (set the second argument to 100 for the ISO 100 run)
            ctrl = dai.CameraControl()
            ctrl.setManualExposure(20500, 1600)
            controlQueue.send(ctrl)

            inRight = qRight.get()  # Blocking call, waits until new data has arrived
            inLeft = qLeft.get()

            # getCvFrame() returns a frame that is ready to be shown with OpenCV
            cv2.imshow("right", inRight.getCvFrame())
            cv2.imshow("left", inLeft.getCvFrame())

            # After showing the frames, store them in the target directories as PNG images
            timestamp = int(time.time() * 1000)
            cv2.imwrite(f"{dirName_r}/{timestamp}.png", inRight.getFrame())
            cv2.imwrite(f"{dirName_l}/{timestamp}.png", inLeft.getFrame())

            if cv2.waitKey(1) == ord('q'):
                break

And here's the script that summed up the data.

    from pathlib import Path

    import cv2
    import numpy as np
    from PIL import Image


    def stack_dir(directory: str) -> np.ndarray:
        """Sum every PNG in `directory` into a single float32 frame."""
        # 480 rows x 640 columns matches the THE_480_P mono resolution
        frame = np.zeros((480, 640), dtype=np.float32)
        files = list(Path(directory).glob("*.png"))
        print(f"{directory}: {len(files)}")
        for image in files:
            frame += np.asarray(Image.open(image), np.float32)
        return frame


    leftData = stack_dir("mono_data_l")
    rightData = stack_dir("mono_data_r")

    print(leftData.min(), leftData.max())
    print(rightData.min(), rightData.max())

    # imshow treats float32 data as 0.0-1.0, so any pixel whose sum
    # reaches 1 or more is displayed as white
    cv2.imshow("left", leftData)
    cv2.imshow("right", rightData)
    cv2.waitKey()
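
To put a number on "hotter" instead of eyeballing the summed image, a rough sketch like the one below could list the pixels whose per-frame mean stands out from the rest of the frame. This is not part of the scripts above: `find_hot_pixels`, `k`, and `min_delta` are hypothetical names, and the threshold (median plus a robust spread estimate, with a floor of one gray level) is just one assumed way to define a hot pixel.

```python
import numpy as np


def find_hot_pixels(stacked: np.ndarray, n_frames: int,
                    k: float = 6.0, min_delta: float = 1.0) -> np.ndarray:
    """Return (row, col) pairs of pixels that are unusually bright on average.

    stacked   -- summed frame, e.g. the output of a frame-summing script
    n_frames  -- number of frames that went into the sum
    k         -- assumed multiplier on the robust spread estimate
    min_delta -- assumed minimum excess (gray levels) over the median
    """
    mean = stacked.astype(np.float64) / float(n_frames)
    median = np.median(mean)
    # Median absolute deviation, scaled to approximate a standard deviation
    spread = 1.4826 * np.median(np.abs(mean - median))
    threshold = median + max(k * spread, min_delta)
    return np.argwhere(mean > threshold)


if __name__ == "__main__":
    # Synthetic example: a dark 480x640 sum of 100 frames with two bright pixels
    demo = np.zeros((480, 640), dtype=np.float32)
    demo[10, 20] = 5000.0
    demo[300, 400] = 2500.0
    hot = find_hot_pixels(demo, n_frames=100)
    print(f"{len(hot)} hot pixels")
    for row, col in hot:
        print(f"  row={row} col={col} mean={demo[row, col] / 100:.1f}")
```

Running it on the left and right sums with the real frame counts would give a hot-pixel count per camera that can be compared directly between the ISO 100 and high-ISO runs.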

The noise seems fairly random in how it's distributed. Frankly, the depth data is pretty good, so this is all somewhat academic at this point, but if I really wanted to try it, the simplest way might be to average a few frames (likely using some logic running on the SHAVE cores) and then pass that average into the depth calculations. Is that possible, or does the StereoDepth node require the original images?
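
For reference, the averaging step itself is simple. Below is a minimal host-side sketch in NumPy, assuming 8-bit mono frames; `RollingAverage` and `window` are hypothetical names, and it says nothing about how this would run on the SHAVE cores or whether StereoDepth would accept the result. If the noise is independent from frame to frame, averaging N frames should cut its standard deviation by roughly a factor of sqrt(N), at the cost of blur on anything that moves.

```python
from collections import deque

import numpy as np


class RollingAverage:
    """Average the last `window` frames (hypothetical helper, host-side only)."""

    def __init__(self, window: int = 4):
        # deque drops the oldest frame automatically once it is full
        self.frames = deque(maxlen=window)

    def update(self, frame: np.ndarray) -> np.ndarray:
        """Add a frame and return the current average as uint8."""
        self.frames.append(frame.astype(np.float32))
        mean = np.stack(list(self.frames)).mean(axis=0)
        # Round and clip back to the 8-bit range of the mono frames
        return np.clip(np.rint(mean), 0, 255).astype(np.uint8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    averager = RollingAverage(window=8)
    # Simulated flat gray scene with independent noise on every frame
    for _ in range(8):
        noisy = np.clip(rng.normal(100.0, 10.0, size=(480, 640)), 0, 255)
        averaged = averager.update(noisy.astype(np.uint8))
    print("single-frame std:", noisy.std())
    print("averaged std:   ", averaged.astype(np.float32).std())
```

In the capture loop, each frame from `getCvFrame()` would go through `update()` before being saved or processed further.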