Hi @"JanCuhel"

Thank you for your support!

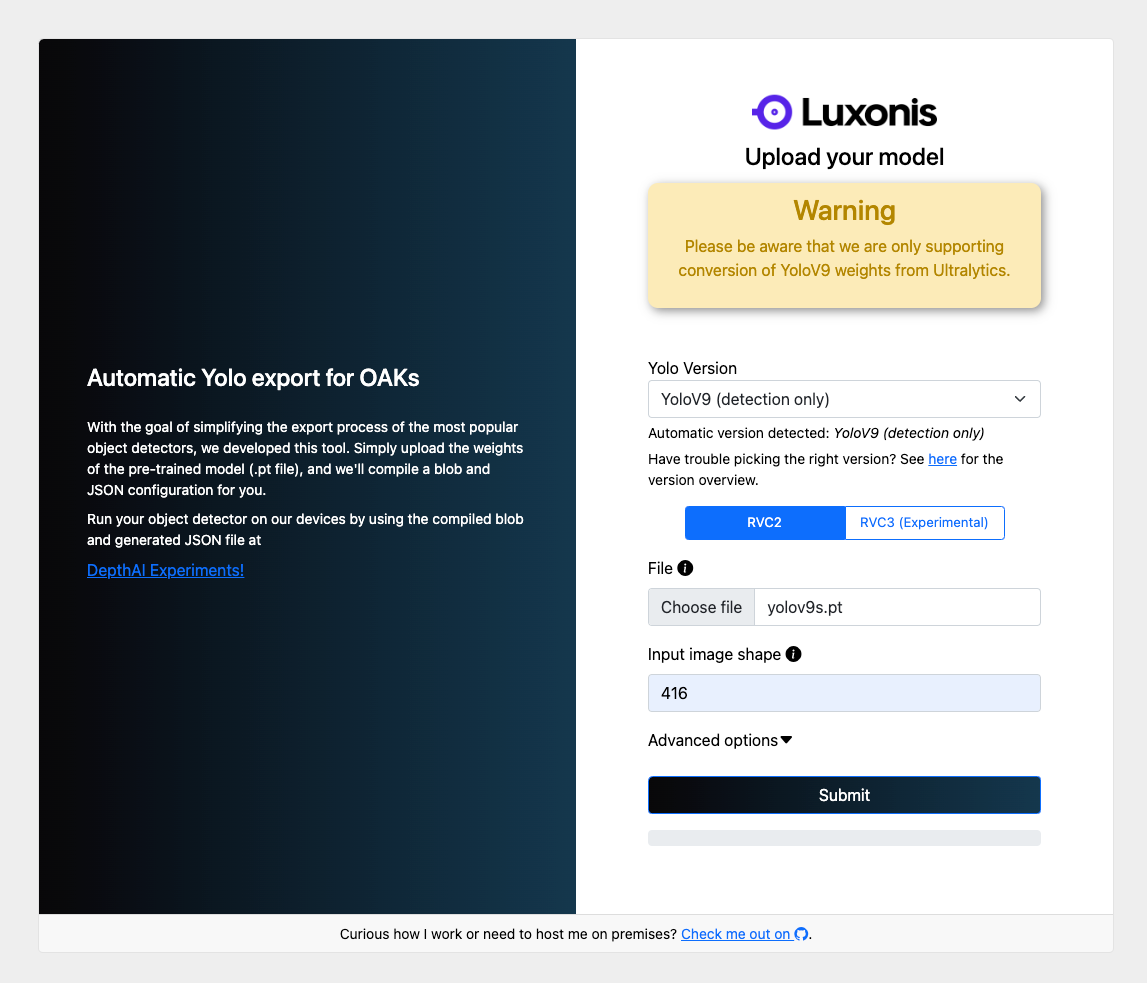

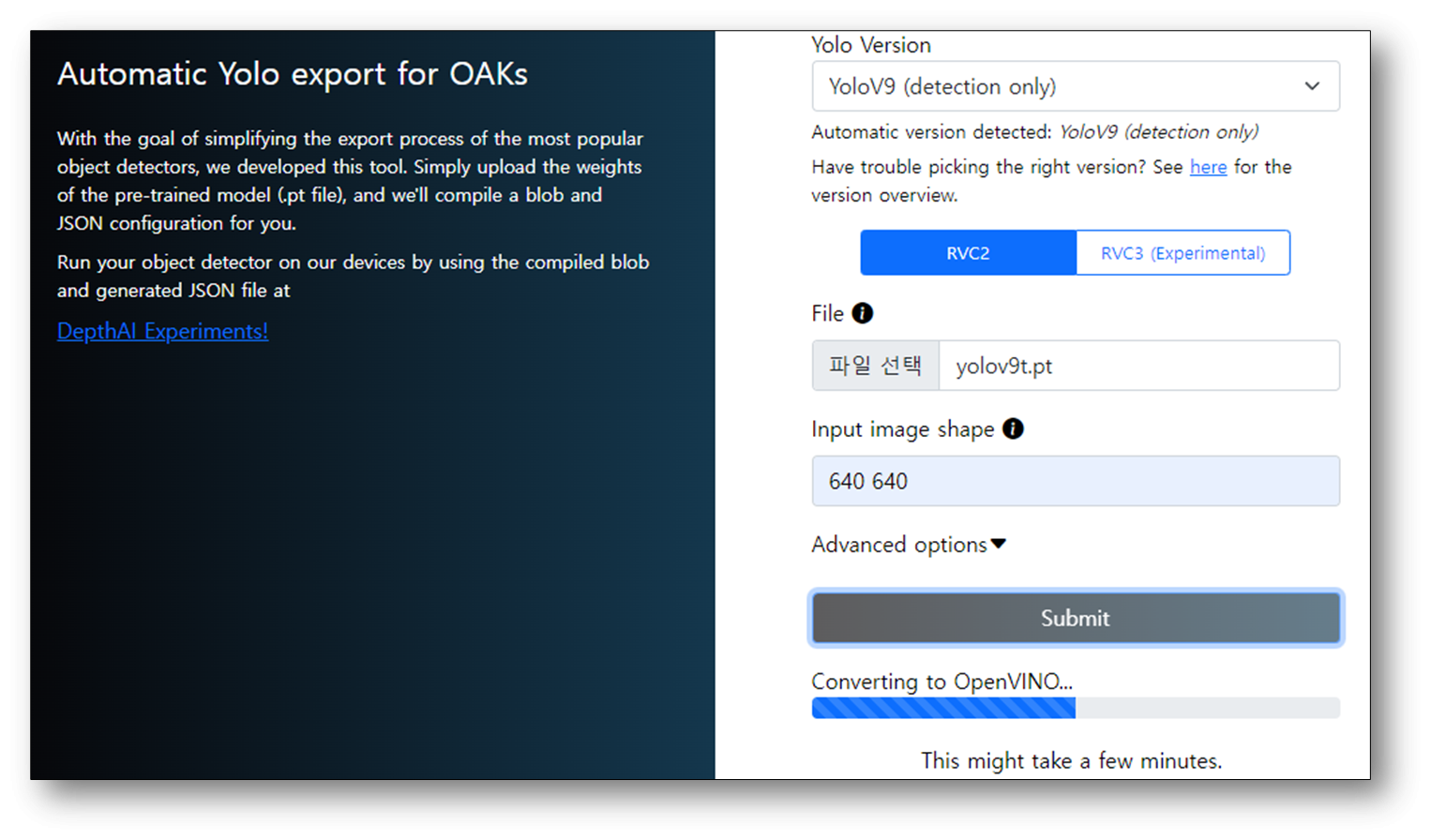

I tested the yolov9t model with the gen2-deepsort-tracking experimental app as instructed, and it worked successfully.

Next, I tried my custom YOLOvn-based model, trained to detect "fish". It seemed to work at first, but the app soon hit the following errors and stopped:

[14442C101148F3D600] [59.19.225.203] [61.171] [ImageManip(8)] [error] Invalid configuration or input image - skipping frame

[14442C101148F3D600] [59.19.225.203] [61.193] [ImageManip(8)] [error] Not possible to create warp params. Error: WARP_SWCH_ERR_CACHE_TO_SMALL

I suspect this may not be related to how the NN was exported. Any advice you could provide would be greatly appreciated.

Best,

Oscar