I have finally found two Python scripts that actually generate point cloud data while streaming. The attached link points to the exact repo with the code: luxonis/depthai-experiments/tree/gen2-rgb-depth-align/gen2-camera-demo

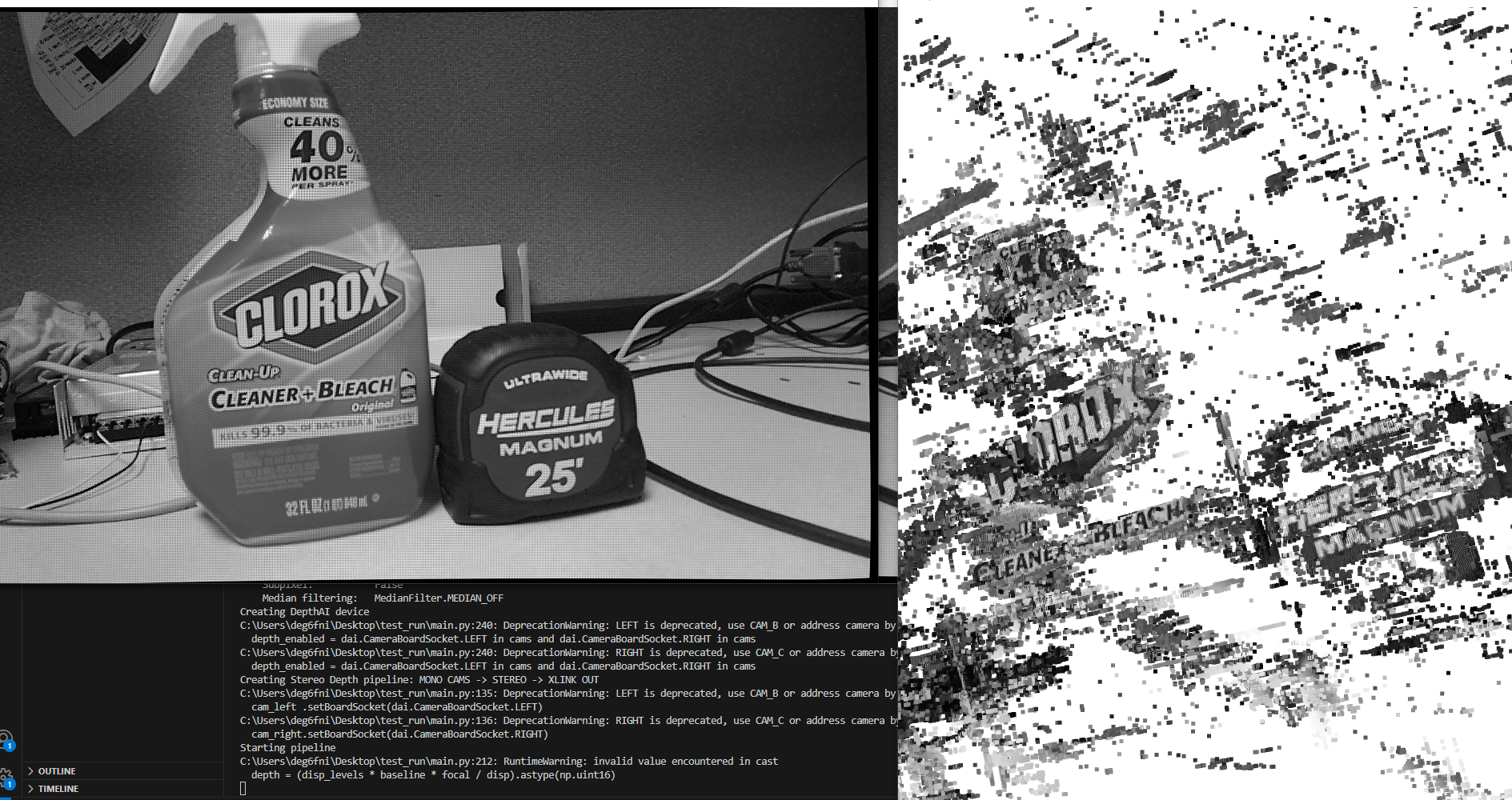

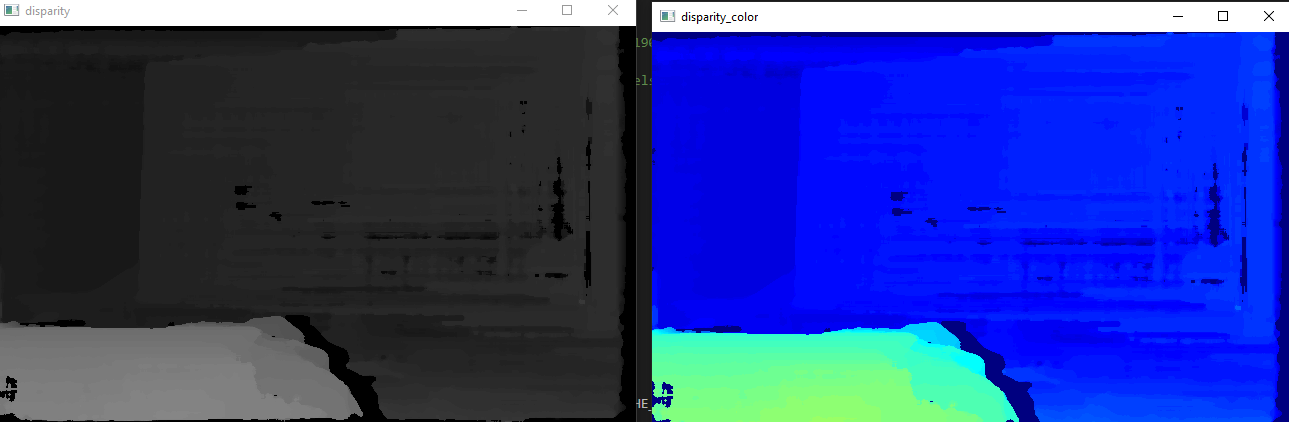

`main.py` and `projector_3d.py` are the scripts I am running. The point cloud is displayed in greyscale. My question is: how can I retrieve all of the point cloud points so that I get a denser, better-looking image with more point cloud data?

The attached image shows the current state of my point cloud.
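For reference, here is a minimal sketch of what "use every point" means, assuming you already have a depth frame in millimetres and the camera intrinsics `fx`, `fy`, `cx`, `cy` (all placeholders here, not read from a DepthAI device). It back-projects every valid depth pixel with the pinhole model (x = (u - cx)·z/fx, y = (v - cy)·z/fy) and attaches a per-pixel colour from an image aligned to the depth map. The function name `depth_to_point_cloud` and its `stride` parameter are hypothetical, not part of the repo. One possible reason for the grey look is that the cloud is coloured from a mono frame; passing an aligned RGB frame as `color` would give colour instead.

```python
import numpy as np


def depth_to_point_cloud(depth_mm, fx, fy, cx, cy, color=None, stride=1):
    """Hypothetical helper: back-project a depth map into 3D points (pinhole model).

    depth_mm: (H, W) depth in millimetres; 0 marks a pixel with no measurement.
    fx, fy, cx, cy: assumed camera intrinsics in pixels.
    color: optional (H, W) greyscale or (H, W, 3) RGB image aligned to depth_mm.
    stride: keep every `stride`-th pixel; 1 keeps every valid pixel (densest cloud).
    Returns (points, colors): points is (N, 3) in metres, colors is (N,)/(N, 3) or None.
    """
    depth = np.asarray(depth_mm, dtype=np.float64)[::stride, ::stride]
    h, w = depth.shape
    # Row (v) and column (u) indices, scaled back to full-resolution pixel coordinates.
    v, u = np.mgrid[0:h, 0:w]
    u = u * stride
    v = v * stride
    z = depth / 1000.0  # millimetres -> metres
    valid = z > 0  # drop pixels without a depth value
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack((x[valid], y[valid], z[valid]), axis=-1)
    colors = None
    if color is not None:
        # Subsample the colour image the same way, then keep the valid pixels only.
        colors = np.asarray(color)[::stride, ::stride][valid]
    return points, colors


if __name__ == "__main__":
    depth = np.zeros((4, 6), dtype=np.uint16)
    depth[1:3, 2:5] = 1000  # six valid pixels at 1 m
    rgb = np.zeros((4, 6, 3), dtype=np.uint8)
    pts, cols = depth_to_point_cloud(depth, 500.0, 500.0, 3.0, 2.0, color=rgb)
    print(pts.shape, cols.shape)  # (6, 3) (6, 3)
```

With `stride=1` no valid pixel is skipped, so the cloud is as dense as the depth map allows. If you display it with Open3D, `points` can be wrapped in `o3d.utility.Vector3dVector`, and RGB colours should be converted to floats in [0, 1] first.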