Hello, I've set up a virtual environment so that I can run my OAK-D Pro W on my Jetson Orin Nano.

In ROS 2, when I run `ros2 launch depthai_examples stereo_inertial_node.launch.py`, the image topic publishes the first frame and then stops updating. Here is the full launch output:

(depthAI) genozen@genozen:~/Documents/depthai-python/examples$ ros2 launch depthai_examples stereo_inertial_node.launch.py

[INFO] [launch]: All log files can be found below /home/genozen/.ros/log/2024-03-03-16-31-45-971391-genozen-10538

[INFO] [launch]: Default logging verbosity is set to INFO

[INFO] [robot_state_publisher-1]: process started with pid [10551]

[INFO] [stereo_inertial_node-2]: process started with pid [10553]

[INFO] [component_container-3]: process started with pid [10555]

[INFO] [rviz2-4]: process started with pid [10557]

[robot_state_publisher-1] [INFO] [1709505106.616323472] [oak_state_publisher]: got segment oak-d-base-frame

[robot_state_publisher-1] [INFO] [1709505106.616540822] [oak_state_publisher]: got segment oak-d_frame

[robot_state_publisher-1] [INFO] [1709505106.616564022] [oak_state_publisher]: got segment oak_imu_frame

[robot_state_publisher-1] [INFO] [1709505106.616575319] [oak_state_publisher]: got segment oak_left_camera_frame

[robot_state_publisher-1] [INFO] [1709505106.616585463] [oak_state_publisher]: got segment oak_left_camera_optical_frame

[robot_state_publisher-1] [INFO] [1709505106.616594487] [oak_state_publisher]: got segment oak_model_origin

[robot_state_publisher-1] [INFO] [1709505106.616603671] [oak_state_publisher]: got segment oak_rgb_camera_frame

[robot_state_publisher-1] [INFO] [1709505106.616612408] [oak_state_publisher]: got segment oak_rgb_camera_optical_frame

[robot_state_publisher-1] [INFO] [1709505106.616621528] [oak_state_publisher]: got segment oak_right_camera_frame

[robot_state_publisher-1] [INFO] [1709505106.616629784] [oak_state_publisher]: got segment oak_right_camera_optical_frame

[component_container-3] [INFO] [1709505106.791015554] [container]: Load Library: /opt/ros/humble/lib/libdepth_image_proc.so

[component_container-3] [INFO] [1709505106.979942799] [container]: Found class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::ConvertMetricNode>

[component_container-3] [INFO] [1709505106.980066611] [container]: Instantiate class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::ConvertMetricNode>

[stereo_inertial_node-2] 1280 720 1280 720

[INFO] [launch_ros.actions.load_composable_nodes]: Loaded node '/convert_metric_node' in container '/container'

[component_container-3] [INFO] [1709505107.028890728] [container]: Found class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::ConvertMetricNode>

[component_container-3] [INFO] [1709505107.028974699] [container]: Found class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::CropForemostNode>

[component_container-3] [INFO] [1709505107.028992587] [container]: Found class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::DisparityNode>

[component_container-3] [INFO] [1709505107.029005419] [container]: Found class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::PointCloudXyzNode>

[component_container-3] [INFO] [1709505107.029016236] [container]: Found class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::PointCloudXyzRadialNode>

[component_container-3] [INFO] [1709505107.029025740] [container]: Found class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::PointCloudXyziNode>

[component_container-3] [INFO] [1709505107.029034476] [container]: Found class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::PointCloudXyziRadialNode>

[component_container-3] [INFO] [1709505107.029043500] [container]: Found class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::PointCloudXyzrgbNode>

[component_container-3] [INFO] [1709505107.029051949] [container]: Instantiate class: rclcpp_components::NodeFactoryTemplate<depth_image_proc::PointCloudXyzrgbNode>

[INFO] [launch_ros.actions.load_composable_nodes]: Loaded node '/point_cloud_xyzrgb_node' in container '/container'

[stereo_inertial_node-2] Listing available devices...

[stereo_inertial_node-2] Device Mx ID: 1844301041D27F0E00

[rviz2-4] [INFO] [1709505107.634205615] [rviz2]: Stereo is NOT SUPPORTED

[rviz2-4] [INFO] [1709505107.634465398] [rviz2]: OpenGl version: 4.6 (GLSL 4.6)

[rviz2-4] [INFO] [1709505107.712087211] [rviz2]: Stereo is NOT SUPPORTED

[component_container-3] [WARN] [1709505107.848987500] [point_cloud_xyzrgb_node]: New subscription discovered on topic '/stereo/points', requesting incompatible QoS. No messages will be sent to it. Last incompatible policy: RELIABILITY_QOS_POLICY

[component_container-3] [WARN] [1709505107.850752827] [point_cloud_xyzrgb_node]: New subscription discovered on topic '/stereo/points', requesting incompatible QoS. No messages will be sent to it. Last incompatible policy: RELIABILITY_QOS_POLICY

[rviz2-4] [INFO] [1709505108.363289333] [rviz2]: Stereo is NOT SUPPORTED

[stereo_inertial_node-2] [1844301041D27F0E00] [1.2.2] [0.859] [ColorCamera(7)] [warning] Unsupported resolution set for detected camera OV9782, needs 800_P or 720_P. Defaulting to 800_P

[stereo_inertial_node-2] [1844301041D27F0E00] [1.2.2] [1.126] [SpatialDetectionNetwork(9)] [warning] Network compiled for 6 shaves, maximum available 10, compiling for 5 shaves likely will yield in better performance

[stereo_inertial_node-2] Device USB status: SUPER

[stereo_inertial_node-2] [1844301041D27F0E00] [1.2.2] [1.317] [StereoDepth(3)] [error] Disparity/depth width must be multiple of 16, but RGB camera width is 854. Set output size explicitly using 'setOutputSize(width, height)'.
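
The last error in the log says the disparity/depth width must be a multiple of 16, while the RGB camera width is 854. Below is a minimal sketch of how a compliant width could be computed before passing it to the `setOutputSize(width, height)` call named in the error. The helper names `align_down` and `align_up` are hypothetical and not part of depthai, and I have not confirmed that this alone makes the image topic update again.

```python
def align_down(value, multiple=16):
    """Round value down to the nearest multiple (e.g. 854 -> 848)."""
    if value < multiple:
        raise ValueError("value is smaller than the required multiple")
    return (value // multiple) * multiple


def align_up(value, multiple=16):
    """Round value up to the nearest multiple (e.g. 854 -> 864)."""
    return ((value + multiple - 1) // multiple) * multiple


# 854 is the RGB width reported in the StereoDepth error above.
print(align_down(854), align_up(854))  # 848 864
```

Either value satisfies the multiple-of-16 rule; which one (and which matching height) is appropriate depends on how the RGB stream is scaled in the node.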

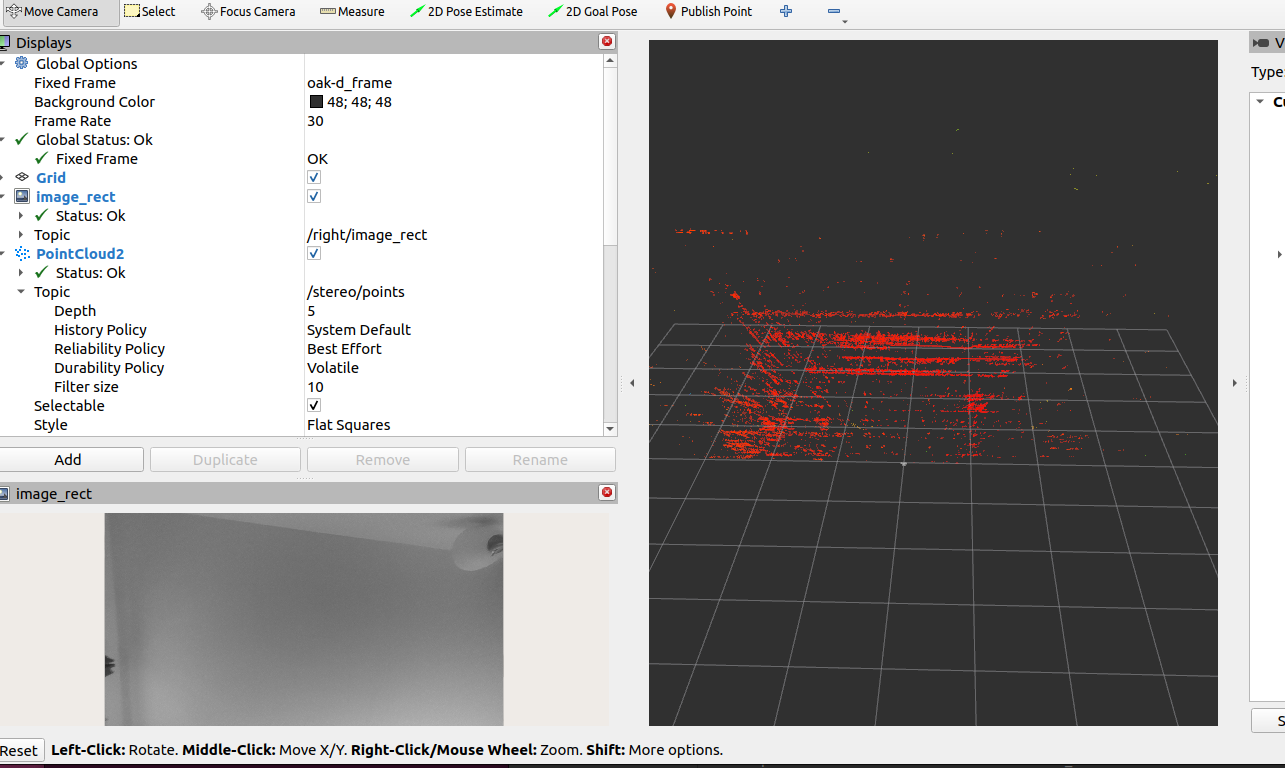

With `ros2 launch depthai_examples stereo.launch.py` it works fine, but the point cloud is noisy (I'm assuming depth is being computed from the passive stereo images, without the IR emitter?).

In short, I would like to create a ROS 2 launch example that publishes the left image, right image, IMU data, and a point cloud computed from active stereo rather than passive stereo.
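
To make "active stereo" concrete: as far as I understand, on the Pro models this means switching on the IR dot projector so the stereo matcher has more texture to work with. A rough sketch of what I have in mind on the depthai Python side is below. It assumes the `setIrLaserDotProjectorBrightness` method of `dai.Device` (newer depthai releases may prefer an intensity-based call instead), and the 1200 mA ceiling is my assumption from the Luxonis documentation. `enable_dot_projector` is a hypothetical helper name.

```python
# Assumption: upper limit for the dot projector current, in mA.
MAX_DOT_PROJECTOR_MA = 1200


def enable_dot_projector(device, brightness_ma):
    """Clamp the requested current and apply it to the device.

    `device` is expected to expose setIrLaserDotProjectorBrightness(mA),
    as dai.Device does on OAK-D Pro models. Returns the value applied.
    """
    clamped = max(0, min(int(brightness_ma), MAX_DOT_PROJECTOR_MA))
    device.setIrLaserDotProjectorBrightness(clamped)
    return clamped


# Usage with a real camera (requires the depthai package and a pipeline):
#   import depthai as dai
#   with dai.Device(pipeline) as device:
#       enable_dot_projector(device, 800)
```

What I am missing is how to get the same effect from a ROS 2 launch file.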

Could you please give me some guidance?

Also, where can I find the calibration parameters (camera intrinsics/extrinsics and IMU bias)? I would like to run OpenVINS: https://docs.openvins.com/gs-calibration.html
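
For the camera part, my current guess is that the factory calibration can be read from the device with `readCalibration()` and `getCameraIntrinsics()` from the depthai Python API. The sketch below is untested on my setup. `unpack_intrinsics` is a hypothetical helper that pulls fx, fy, cx, cy out of the 3x3 matrix, and the 1280x720 size is taken from the log above. It does not cover the IMU bias or noise values.

```python
def unpack_intrinsics(matrix):
    """Extract pinhole parameters from a 3x3 intrinsic matrix (row-major)."""
    return {
        "fx": matrix[0][0],
        "fy": matrix[1][1],
        "cx": matrix[0][2],
        "cy": matrix[1][2],
    }


def read_left_intrinsics(width=1280, height=720):
    """Read the left camera intrinsics from a connected OAK device.

    Requires the depthai package and a plugged-in camera, so the import
    is kept inside the function.
    """
    import depthai as dai

    with dai.Device() as device:
        calib = device.readCalibration()
        matrix = calib.getCameraIntrinsics(
            dai.CameraBoardSocket.LEFT, width, height
        )
    return unpack_intrinsics(matrix)


# Example with made-up numbers (not real calibration data):
example = [[800.0, 0.0, 640.0], [0.0, 801.0, 360.0], [0.0, 0.0, 1.0]]
print(unpack_intrinsics(example))
```

If this is the right direction, I would still need to know how to map these values (plus the distortion coefficients and the camera-to-IMU transform) onto what OpenVINS expects.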