
For just recording, check out the recording section of the depthai-sdk documentation.

There are some good examples, such as encode.py, in

depthai_sdk/examples/recording

You may try this to see if it fulfills your requirement.

I have reviewed the examples at the link above, but I don't see how to specify the IP address of the POE camera. Does anyone have documentation on that?

Thanks

I 'believe' I see how to include the camera's IP address:

with OakCamera('192.168.70.86') as oak:

But if I run this program (or any of those examples) I get:

ValueError: 'HIGH' is not a valid WLSLevel. I also tried low and medium with the same result.

TimGeddings ValueError: 'HIGH' is not a valid WLSLevel. I also tried low and medium with the same result.

WLS level is related to the stereo component. Are you using an outdated version of the SDK by any chance?

Thanks,

Jaka

I did

pip install depthai-sdk==1.13.1

Now, I get a little further. Is 1.13.1 the latest version?

TimGeddings

You can always check the latest version (and all older versions) of a Python package at https://pypi.org/
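To compare against what PyPI lists, it can help to print what is actually installed in the environment you are running from. A minimal sketch using only the standard library; the helper name `installed_version` is made up for illustration:

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Report both the core library and the SDK, since they are separate packages.
for name in ("depthai", "depthai-sdk"):
    print(name, installed_version(name) or "not installed")
```

If the printed version differs from the one you just installed with pip, the script is probably running in a different Python environment.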

Is there a specific reason why you're not using a VideoEncoder node? If you create that node and set it to MJPEG, you can feed it YUV420 frames (either NV12 from the video output or yuv420p from the isp output). The encoded stream can be written to a container, which is just a file that keeps growing as you write to it. You then need to consume it, either by stopping the pipeline and converting to mp4/mkv, by streaming from it, or by piping it to a neural network.

Of course, this is 12 bits per pixel instead of RGB's 24 bits per pixel, so if that extra bit depth is absolutely necessary for you, then you can still pipe out frames, but at a much lower rate, as you're probably experiencing.
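To put rough numbers on that, here is the arithmetic for unencoded frames at the 1080P / 20 fps setting used in this thread. The gigabit link speed is my assumption about the PoE connection, not something measured here:

```python
def raw_bitrate_mbps(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Bitrate of an unencoded video stream in megabits per second."""
    return width * height * bits_per_pixel * fps / 1e6


# 1080P at 20 fps, as in the code posted in this thread.
yuv420_mbps = raw_bitrate_mbps(1920, 1080, 12, 20)  # 12 bits per pixel
rgb_mbps = raw_bitrate_mbps(1920, 1080, 24, 20)     # 24 bits per pixel

LINK_MBPS = 1000  # assumed gigabit Ethernet link, before protocol overhead

print(f"YUV420: {yuv420_mbps:.1f} Mbit/s ({yuv420_mbps / LINK_MBPS:.0%} of the link)")
print(f"RGB:    {rgb_mbps:.1f} Mbit/s ({rgb_mbps / LINK_MBPS:.0%} of the link)")
```

Raw RGB alone comes out at about 995 Mbit/s, which would nearly fill such a link before any other stream is added, while YUV420 needs about half of that. Encoding on the device reduces the bitrate much further.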

FYI: I have performed some detections on custom data on YUV420 and YUV400 frames (12 bits and 8 bits per pixel, respectively) that I would have bet wouldn't have been detected. And these were input images where some had the sun blooming the sensor, some had dust all over, some had terrible chroma noise at night because a lamp went out, and some were taken during the rain.

No specific reason other than ignorance of VideoEncoder. Can you point me to some sample code?

Thanks, Tim
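For reference, a minimal sketch of the VideoEncoder approach described above, written against the depthai 2.x Python API (an assumption; other versions may differ). The function names, stream name, and output path are made up for illustration, and the IP address is the one from earlier in this thread. It is untested against this particular camera:

```python
import time


def build_mjpeg_pipeline(fps: int = 20):
    """Build a pipeline: ColorCamera video output -> MJPEG VideoEncoder -> host."""
    # Imported inside the function so this file can be loaded without depthai installed.
    import depthai as dai

    pipeline = dai.Pipeline()

    cam = pipeline.create(dai.node.ColorCamera)
    cam.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)
    cam.setFps(fps)

    encoder = pipeline.create(dai.node.VideoEncoder)
    encoder.setDefaultProfilePreset(fps, dai.VideoEncoderProperties.Profile.MJPEG)
    cam.video.link(encoder.input)  # NV12 frames from the camera's video output

    xout = pipeline.create(dai.node.XLinkOut)
    xout.setStreamName("mjpeg")  # hypothetical stream name
    encoder.bitstream.link(xout.input)
    return pipeline


def record_mjpeg(ip: str, path: str, seconds: float, fps: int = 20) -> None:
    """Append encoded packets to `path` for roughly `seconds` seconds."""
    import depthai as dai

    pipeline = build_mjpeg_pipeline(fps)
    with dai.Device(pipeline, dai.DeviceInfo(ip)) as device:
        queue = device.getOutputQueue(name="mjpeg", maxSize=30, blocking=True)
        deadline = time.monotonic() + seconds
        with open(path, "wb") as f:
            while time.monotonic() < deadline:
                queue.get().getData().tofile(f)  # one encoded frame per packet


# Example call (requires the camera to be reachable):
# record_mjpeg("192.168.70.86", "video.mjpeg", seconds=10)
```

The resulting file is a raw MJPEG stream rather than a playable container, so it still needs converting to mp4/mkv afterwards, for example with ffmpeg.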

Any suggestions before I give up on this Oak-D camera? Using the code below, I can get 20fps in the preview (oak.visualize) window but the streams recorded are about 1 fps. I need to capture video at 20fps or faster and then do some processing on each frame. For now, I'm just trying to determine if I can record video at faster than 1 fps with this camera.

from depthai_sdk import OakCamera, RecordType
from pathlib import Path
import time

duration=10

with OakCamera('192.168.70.86') as oak:
    color = oak.create_camera('color', resolution='1080P', fps=20, encode='H265')
    left = oak.create_camera('left', resolution='800p', fps=20, encode='H265')
    right = oak.create_camera('right', resolution='800p', fps=20, encode='H265')

    stereo = oak.create_stereo(left=left, right=right)
    nn = oak.create_nn('mobilenet-ssd', color, spatial=stereo)

    # Sync & save all (encoded) streams
    oak.record([color.out.encoded, left.out.encoded, right.out.encoded], './record', RecordType.VIDEO) \
        .configure_syncing(enable_sync=True, threshold_ms=50)

    oak.visualize([color.out.encoded], fps=True)
    oak.start(blocking=True)

Hi @TimGeddings,

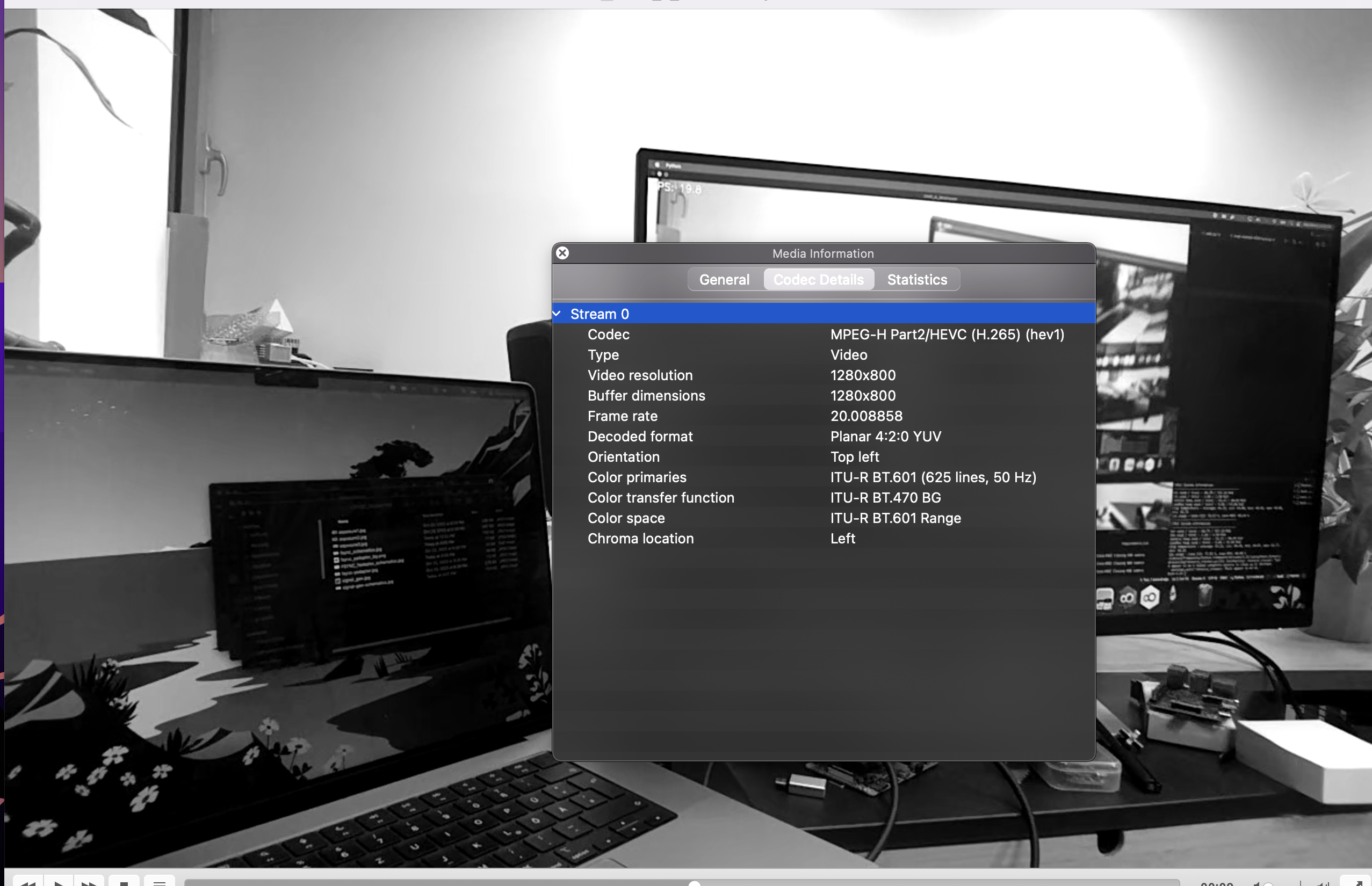

There's nothing wrong with the code, it works fine for me (just removed stereo/nn, as it's not needed). Here is recorded mono stream, at 20fps:

So my instinct would be either:

Could you confirm both?

Thanks, Erik

Hitching along with Erik's instinct on this, it made me recall something similar early on, before I learned how to deal with it. If I was on my laptop with a Wi-Fi connection for internet and then connected an Ethernet cable, whether to an onboard, USB, or Thunderbolt Ethernet adapter, the Wi-Fi and the wired LAN connection would both keep dropping alternately. It would seem like my internet was horrible and my LAN more complex than it was. The bottom line was that they can't work like that. For my MacBook, I had to learn about "Set Service Order" to ensure that Wi-Fi was always listed at the top of the list. For Linux, I had to find out how to set up my wired LAN connection to "only use for resources on this network" (if I remember correctly, it's in the "routes" section in Kubuntu and similar; in Ubuntu I can't remember exactly, because I do most everything in the terminal these days). In the terminal, it's a modification of the NetworkManager connection:

nmcli connection modify <connection name> ipv4.never-default true

erik Hello, is there a way to start the recording after a certain time? The exposure makes the first frames look really bright.

Thank you in advance

Hi @ferrari19 ,

We do have a script here that skips the first few frames (1.5 sec):

luxonis/depthai-experiments/blob/master/gen2-record-replay/record.py#L100-L101
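The same idea can be sketched independently of that script: drop every frame that arrives within a warm-up period after the first one. This is not the code from record.py, just an illustration; `skip_warmup` and the (timestamp, frame) tuple layout are assumptions made for the example:

```python
from typing import Iterable, Iterator, Tuple, TypeVar

T = TypeVar("T")


def skip_warmup(
    frames: Iterable[Tuple[float, T]], warmup_s: float = 1.5
) -> Iterator[Tuple[float, T]]:
    """Yield (timestamp, frame) pairs, dropping those within `warmup_s` of the first frame."""
    start = None
    for timestamp, frame in frames:
        if start is None:
            start = timestamp  # the first frame defines the start of the warm-up
        if timestamp - start >= warmup_s:
            yield timestamp, frame


# Simulated 20 fps stream lasting 2 seconds: timestamps 0.00, 0.05, ..., 1.95.
simulated = [(i / 20, f"frame-{i}") for i in range(40)]
kept = list(skip_warmup(simulated))
print(len(kept), "frames kept, first at t =", kept[0][0])
```

With a real camera, the timestamps would come from the frames themselves or from a host-side clock read when each frame arrives.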

Thank you! And do you know if there's a way to start recording after the camera has finished adjusting?

Hi @ferrari19

You can periodically poll frame.getSensitivity() to check whether the value has converged.

Thanks,

Jaka
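One way to decide that the value "has converged" is to wait until the last few readings stay within a small relative band. A minimal sketch, assuming the readings come from frame.getSensitivity() as suggested above; the class name, window size, and tolerance are arbitrary choices for illustration, not recommended values:

```python
from collections import deque


class SettleDetector:
    """Reports True once the last `window` readings differ by at most `tolerance` (relative)."""

    def __init__(self, window: int = 10, tolerance: float = 0.02):
        self._recent = deque(maxlen=window)
        self._tolerance = tolerance

    def update(self, value: float) -> bool:
        """Add one reading and return whether the readings have settled."""
        self._recent.append(value)
        if len(self._recent) < self._recent.maxlen:
            return False  # not enough readings yet
        low, high = min(self._recent), max(self._recent)
        return high - low <= self._tolerance * max(abs(high), 1e-9)


# Simulated sensitivity readings that fall quickly and then level off.
readings = [800, 600, 400, 300, 200, 150, 120, 100, 100, 100, 100, 100]
detector = SettleDetector(window=5)
results = [detector.update(value) for value in readings]
print("settled at reading index", results.index(True))
```

In a capture loop, you would call `detector.update(frame.getSensitivity())` for each frame and start recording the first time it returns True. The exposure time may be worth checking the same way.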