Request for assistance with OAK-D PoE CM4 and Jetson TX2 integration

Here are the results I obtained by running the code on my Raspberry Pi via an SSH connection:

```text
Latency: 108.63 ms, Average latency: 103.88 ms, Standard deviation: 10.33
Latency: 112.14 ms, Average latency: 103.90 ms, Standard deviation: 10.32
Latency: 115.76 ms, Average latency: 103.92 ms, Standard deviation: 10.33
Latency: 99.22 ms, Average latency: 103.91 ms, Standard deviation: 10.32
```

Hi jakaskerl

Thank you for your previous insights. I want to clarify that the latency measurements I shared with you earlier were taken without showing the preview (I had commented out `cv2.imshow('frame', imgFrame.getCvFrame())`).

With the preview display enabled, here are the new values I obtained:

```text
Latency: 481.45 ms, Average latency: 527.23 ms, Std: 36.31
Latency: 492.62 ms, Average latency: 527.20 ms, Std: 36.31
Latency: 488.96 ms, Average latency: 527.18 ms, Std: 36.31
Latency: 486.37 ms, Average latency: 527.15 ms, Std: 36.31
Latency: 496.27 ms, Average latency: 527.13 ms, Std: 36.31
Latency: 492.14 ms, Average latency: 527.10 ms, Std: 36.31
Latency: 503.84 ms, Average latency: 527.09 ms, Std: 36.30
Latency: 515.83 ms, Average latency: 527.08 ms, Std: 36.29
Latency: 507.38 ms, Average latency: 527.07 ms, Std: 36.28
Latency: 507.18 ms, Average latency: 527.05 ms, Std: 36.27
Latency: 498.62 ms, Average latency: 527.03 ms, Std: 36.27
Latency: 515.08 ms, Average latency: 527.02 ms, Std: 36.25
```

As you can see, displaying the preview significantly increases the latency: the average rises from about 104 ms to about 527 ms.
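To find out which part of the loop accounts for the extra ~420 ms, it may help to time each stage separately (queue read, frame conversion, display) rather than measuring only the end-to-end latency. The sketch below is a minimal stdlib-only helper. `StageTimer` is a hypothetical name and is not part of DepthAI, and the `time.sleep()` calls only stand in for `q.get()` and `cv2.imshow()`/`cv2.waitKey()`. Over SSH, X11 forwarding of full 1080p frames is one plausible contributor, but that is an assumption the per-stage numbers would need to confirm.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Collects wall-clock durations (in ms) for named stages of a loop."""

    def __init__(self):
        self.samples = defaultdict(list)

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the duration even if the wrapped code raises
            self.samples[name].append((time.perf_counter() - start) * 1000.0)

    def mean_ms(self, name):
        # .get() avoids creating an empty entry for unknown stage names
        values = self.samples.get(name, [])
        return sum(values) / len(values) if values else 0.0

    def report(self):
        return {name: self.mean_ms(name) for name in self.samples}


if __name__ == "__main__":
    timer = StageTimer()
    for _ in range(3):
        with timer.stage("get"):
            time.sleep(0.001)  # stand-in for q.get()
        with timer.stage("display"):
            time.sleep(0.005)  # stand-in for cv2.imshow() + cv2.waitKey(1)
    for name, ms in timer.report().items():
        print(f"{name}: {ms:.2f} ms")
```

In the real loop you would wrap `imgFrame = q.get()`, `imgFrame.getCvFrame()` and the `cv2.imshow()`/`cv2.waitKey(1)` pair in separate `with timer.stage(...)` blocks, then print `timer.report()` every few hundred frames.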

Best,

Babacar

Hi Jaka,

Apologies for the delayed response. I'd like to confirm whether this is the code modification you requested:

```python
import depthai as dai
import numpy as np
import time

# Create pipeline
pipeline = dai.Pipeline()
pipeline.setXLinkChunkSize(0)

# Define source and output
camRgb = pipeline.create(dai.node.ColorCamera)
camRgb.setFps(60)
camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)

xout = pipeline.create(dai.node.XLinkOut)
xout.setStreamName("out")
camRgb.isp.link(xout.input)

# Connect to device and start pipeline
with dai.Device(pipeline) as device:
    print(device.getUsbSpeed())
    q = device.getOutputQueue(name="out")
    diffs = np.array([])

    while True:
        start_time = time.time()  # Record start time of the loop

        imgFrame = q.get()
        latencyMs = (dai.Clock.now() - imgFrame.getTimestamp()).total_seconds() * 1000
        diffs = np.append(diffs, latencyMs)
        print('Latency: {:.2f} ms, Average latency: {:.2f} ms, Std: {:.2f}'.format(latencyMs, np.average(diffs), np.std(diffs)))

        end_time = time.time()  # Record end time of the loop
        loop_time = (end_time - start_time) * 1000  # Calculate loop time in ms
        print('Loop time: {:.2f} ms'.format(loop_time))
```
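One detail in this loop: `np.append` allocates a new array and copies every previous sample on each frame, so computing the average and standard deviation gets slower the longer the script runs. Welford's online algorithm is a constant-memory alternative. The sketch below is a hypothetical drop-in, and `RunningStats` is not a DepthAI or NumPy class. It produces the same mean and population standard deviation as `np.average(diffs)` and `np.std(diffs)` (NumPy's default is `ddof=0`). The sample values are the four latencies from the first run at the top of this thread and serve only as input.

```python
import math


class RunningStats:
    """Welford's online mean and population standard deviation."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def add(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self):
        # Population std (ddof=0), matching np.std's default
        return math.sqrt(self._m2 / self.n) if self.n else 0.0


if __name__ == "__main__":
    stats = RunningStats()
    for latency_ms in (108.63, 112.14, 115.76, 99.22):
        stats.add(latency_ms)
        print("Latency: {:.2f} ms, Average latency: {:.2f} ms, Std: {:.2f}".format(
            latency_ms, stats.mean, stats.std))
```

In the loop above, `stats = RunningStats()` would replace `diffs = np.array([])`, and `stats.add(latencyMs)` would replace the `np.append` call.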

Please let me know if this is correct, or if there are any further changes that I should make.

Thanks,

Babacar

Hi jakaskerl

Here's the code that I implemented:

```python
import time

# ...

while True:
    # Begin timing
    start_time = time.time()

    for name, q in queues.items():
        # Add all msgs (color frames, object detections and recognitions) to the Sync class.
        if q.has():
            sync.add_msg(q.get(), name)

    msgs = sync.get_msgs()
    if msgs is not None:
        frame = msgs["color"].getCvFrame()
        detections = msgs["detection"].detections
        embeddings = msgs["embedding"]

        # Write raw frame to the raw_output video
        raw_out.write(frame)

        # Update the tracker
        object_tracks = tracker_iter(detections, embeddings, tracker, frame)

        # For each tracking object
        for track in object_tracks:
            ...  # All existing code

        # Write the frame with annotations to the output video
        out.write(frame)

    # End timing and print elapsed time
    end_time = time.time()
    elapsed_time = end_time - start_time
    print(f"Elapsed time for iteration: {elapsed_time} seconds")

raw_out.release()
out.release()
```

These are the results I got:

```text
Elapsed time for iteration: 0.13381719589233398 seconds
Elapsed time for iteration: 0.1333160400390625 seconds
Elapsed time for iteration: 0.13191676139831543 seconds
...
...
Elapsed time for iteration: 0.13199663162231445 seconds
```
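If most of the ~133 ms per iteration comes from encoding frames for the two `VideoWriter` outputs, a common pattern is to move the blocking writes to a worker thread so the tracking loop does not have to wait for them. The sketch below is an illustration built on assumptions and is not part of DepthAI or the tracking example. `BackgroundWriter` is a hypothetical class that accepts any write function (for example `raw_out.write`). When its queue is full, it drops frames instead of blocking.

```python
import queue
import threading


class BackgroundWriter:
    """Runs a blocking write function (e.g. a VideoWriter's write) on a worker thread."""

    _STOP = object()  # sentinel telling the worker to exit

    def __init__(self, write_fn, max_pending=32):
        self._write_fn = write_fn
        self._queue = queue.Queue(maxsize=max_pending)
        self.dropped = 0
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def submit(self, frame):
        """Queue a frame without blocking; drop it if the writer is falling behind."""
        try:
            self._queue.put_nowait(frame)
        except queue.Full:
            self.dropped += 1

    def _run(self):
        while True:
            item = self._queue.get()
            if item is self._STOP:
                break
            self._write_fn(item)

    def close(self):
        """Flush all pending frames, then stop the worker thread."""
        self._queue.put(self._STOP)  # blocking put, so the sentinel is never dropped
        self._thread.join()


if __name__ == "__main__":
    written = []
    writer = BackgroundWriter(written.append)
    for frame_id in range(5):
        writer.submit(frame_id)  # stand-in for frame.copy()
    writer.close()
    print(written, "dropped:", writer.dropped)
```

The loop annotates the same `frame` array after calling `raw_out.write(frame)`, so the raw writer should receive `frame.copy()`. Otherwise the worker might encode the frame after the annotations have already been drawn on it. Call `close()` before `raw_out.release()` so that pending frames are flushed. Dropping frames keeps the loop responsive but leaves gaps in the recording. If every frame must be saved, use a blocking `put()` instead.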

Thanks, Jaka, for your input so far. I would appreciate any further suggestions you might have for fixing this issue.

Following your advice, I've made some further modifications to my code and have also removed the video-writing part. These changes have considerably improved performance. However, the time taken per iteration now varies widely, from roughly 25 µs to roughly 60 ms. Here's a subset of the results:

```text
Elapsed time for iteration: 2.3365020751953125e-05 seconds
Elapsed time for iteration: 2.3603439331054688e-05 seconds
...
...
Elapsed time for iteration: 2.6702880859375e-05 seconds
Elapsed time for iteration: 2.3603439331054688e-05 seconds
Elapsed time for iteration: 3.361701965332031e-05 seconds
Elapsed time for iteration: 2.4080276489257812e-05 seconds
...
...
Elapsed time for iteration: 0.00014281272888183594 seconds
Elapsed time for iteration: 0.06066274642944336 seconds
Elapsed time for iteration: 0.05930662155151367 seconds
Elapsed time for iteration: 0.05977463722229004 seconds
Elapsed time for iteration: 0.06491947174072266 seconds
```
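The spread in these numbers may simply reflect how the loop polls. Because `q.has()` does not block, an iteration where no complete set of synced messages is ready finishes in tens of microseconds, while an iteration that actually runs the tracker takes around 60 ms. This reading is an assumption based on the loop structure shown earlier; the logs alone do not prove it. A minimal way to test it is to record the two kinds of iterations separately. `timed_poll_loop` and `poll_once` are hypothetical names. `poll_once` would wrap one pass of the loop body and return whether `sync.get_msgs()` produced a message set.

```python
import time


def timed_poll_loop(poll_once, iterations):
    """Call poll_once() repeatedly and split iteration times into idle vs busy.

    poll_once() should return True if it processed a synced message set
    and False if nothing was ready yet.
    """
    idle_ms, busy_ms = [], []
    for _ in range(iterations):
        start = time.perf_counter()
        did_work = poll_once()
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        (busy_ms if did_work else idle_ms).append(elapsed_ms)
    return idle_ms, busy_ms


if __name__ == "__main__":
    pattern = iter([False, False, True, False, True])

    def fake_poll():
        ready = next(pattern)
        if ready:
            time.sleep(0.02)  # stand-in for tracker work on a synced message set
        return ready

    idle, busy = timed_poll_loop(fake_poll, 5)
    print(f"idle iterations: {len(idle)}, busy iterations: {len(busy)}")
    print(f"mean busy time: {sum(busy) / len(busy):.1f} ms")
```

If the hypothesis holds, only the busy times reflect the per-frame cost worth optimizing. A short `time.sleep()` on idle iterations could also keep the loop from spinning a CPU core.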

Now I am going to try training my own model by following this tutorial:

https://github.com/luxonis/depthai-ml-training/blob/master/colab-notebooks/YoloV8_training.ipynb

Once that's done, does DeepSORT support YOLOv8, and can a YOLOv8 model be imported in JSON format, like the YOLOv6 model in the DeepSORT_Tracking GitHub repository?

Thank you for your help.

Hi Babacar

I'm not sure, so I asked Bard:

Yes, DeepSORT supports YOLOv8. You can import YOLOv8 in JSON format from the DeepSORT_Tracking GitHub repository. Here are the steps on how to do it:

1. Clone the DeepSORT_Tracking GitHub repository.
2. Go to the deepsort/deepsort/detection/ directory.
3. Copy the yolov4.cfg and yolov4.weights files from the tutorial you linked to.
4. Create a new file called yolov8.json.
5. Paste the following code into the yolov8.json file:

   ```json
   {
     "model": "yolov8",
     "classes": ["person"],
     "path": "./yolov4.cfg",
     "weights": "./yolov4.weights"
   }
   ```

6. Save the yolov8.json file.

Now you can use DeepSORT to track objects detected by YOLOv8.

Here are some additional resources that you may find helpful:

DeepSORT documentation: https://github.com/nwojke/deep_sort/blob/master/README.md

YOLOv8 tutorial: https://pjreddie.com/darknet/yolo/

Hope this helps,

Jaka

Hi Jaka,

I think there might have been a misunderstanding in our last exchange. I intend to train my YOLOv8 model using this code: https://github.com/luxonis/depthai-ml-training/blob/master/colab-notebooks/YoloV8_training.ipynb, and then import it in JSON format, as indicated in the tutorial.

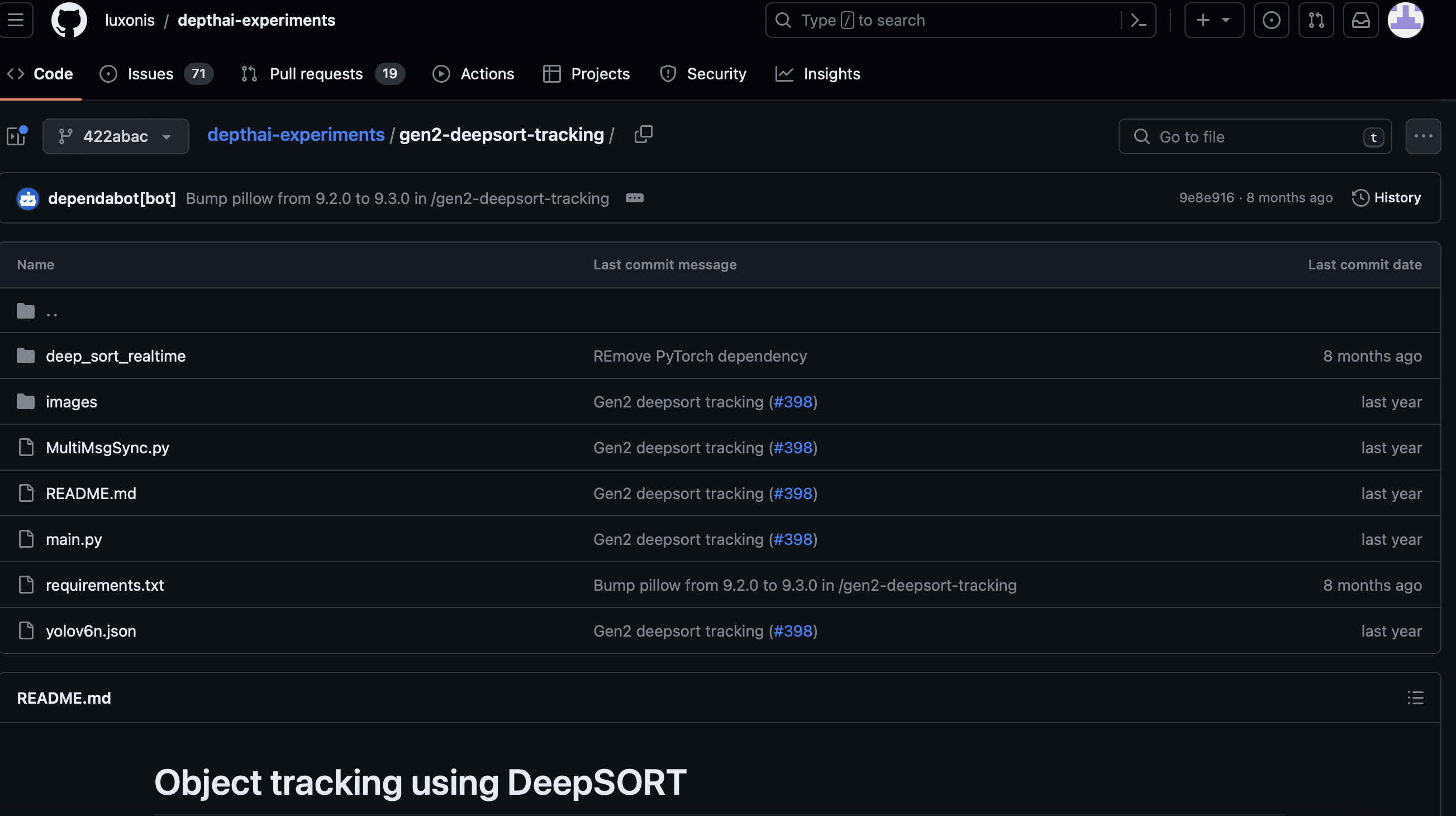

I plan on using this specific DeepSORT repository from Luxonis, and instead of launching it with yolov6.json, I would like to launch it with a YOLOv8 model that I have trained on my own dataset.
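Before pointing the DeepSORT example at a custom YOLOv8 JSON, it may be worth sanity-checking the file. A custom model typically has a different class count, and possibly a different input size, than the bundled YOLOv6 model. The sketch below assumes the layout commonly produced by the Luxonis converter: `nn_config.input_size` as a `"WxH"` string, `nn_config.NN_specific_metadata.classes`, and `mappings.labels`. These key names are an assumption, so check them against your own exported file. `summarize_yolo_config` is a hypothetical helper.

```python
import json


def summarize_yolo_config(text):
    """Parse a YOLO JSON config and check that the class count matches the labels.

    Assumed layout (verify against your own exported file):
    {"nn_config": {"input_size": "WxH",
                   "NN_specific_metadata": {"classes": N}},
     "mappings": {"labels": [...]}}
    """
    cfg = json.loads(text)
    nn_config = cfg["nn_config"]
    num_classes = nn_config["NN_specific_metadata"]["classes"]
    labels = cfg["mappings"]["labels"]
    if num_classes != len(labels):
        raise ValueError(
            f"config declares {num_classes} classes but lists {len(labels)} labels")
    width, height = (int(v) for v in nn_config["input_size"].split("x"))
    return {"input_size": (width, height), "labels": labels}


if __name__ == "__main__":
    # Hypothetical one-class config, for illustration only
    example = json.dumps({
        "nn_config": {"input_size": "640x640",
                      "NN_specific_metadata": {"classes": 1}},
        "mappings": {"labels": ["class_0"]},
    })
    print(summarize_yolo_config(example))
```

With a real export, you would pass in the file contents, e.g. `summarize_yolo_config(open("best.json").read())`, where `best.json` is a placeholder name. Any input size hard-coded elsewhere in the pipeline should then be kept consistent with the returned `input_size`.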

Furthermore, I'm not quite sure about the "deepsort/deepsort/detection" directory you mentioned. I don't see the yolov4.cfg and yolov4.weights files.

Could you provide more clarification on this?

Best regards,

Babacar

Hi erik

I've trained my model and deployed it on Roboflow. Following the tutorial, I modified the main.py code:

```python
import cv2
from depthai_sdk import OakCamera
from depthai_sdk.classes.packets import TwoStagePacket
from depthai_sdk.visualize.configs import TextPosition
from deep_sort_realtime.deepsort_tracker import DeepSort

tracker = DeepSort(max_age=1000, nn_budget=None, embedder=None, nms_max_overlap=1.0, max_cosine_distance=0.2)


def cb(packet: TwoStagePacket):
    detections = packet.img_detections.detections
    vis = packet.visualizer

    # Update the tracker
    object_tracks = tracker.iter(detections, packet.nnData, (640, 640))

    for track in object_tracks:
        if not track.is_confirmed() or \
                track.time_since_update > 1 or \
                track.detection_id >= len(detections) or \
                track.detection_id < 0:
            continue

        det = packet.detections[track.detection_id]
        vis.add_text(f'ID: {track.track_id}',
                     bbox=(*det.top_left, *det.bottom_right),
                     position=TextPosition.MID)

    frame = vis.draw(packet.frame)
    cv2.imshow('DeepSort tracker', frame)


with OakCamera() as oak:
    color = oak.create_camera('color')
    model_config = {
        'source': 'roboflow',
        'model': 'usv-7kkhf/4',
        'key': 'zzzzzzzzzzzzzzz'  # FAKE Private API key
    }
    yolo = oak.create_nn(model_config, color)
    embedder = oak.create_nn('mobilenetv2_imagenet_embedder_224x224', input=yolo)
    oak.visualize(embedder, fps=True, callback=cb)
    # oak.show_graph()
    oak.start(blocking=True)
```

However, I'm encountering an error stating that it can't find my trained model:

```text
Exception: {'message': 'No trained model was found.', 'type': 'GraphMethodException', 'hint': 'You must train a model on this version with Roboflow Train before you can use inference.', 'e': ['Model not found, looking for filename 4JiY9CSQUUctWZgCzw210yo9qcw2/heRJlafm8KwTDQrTn8dI/4/roboflow.zip']}
Sentry is attempting to send 2 pending error messages
```

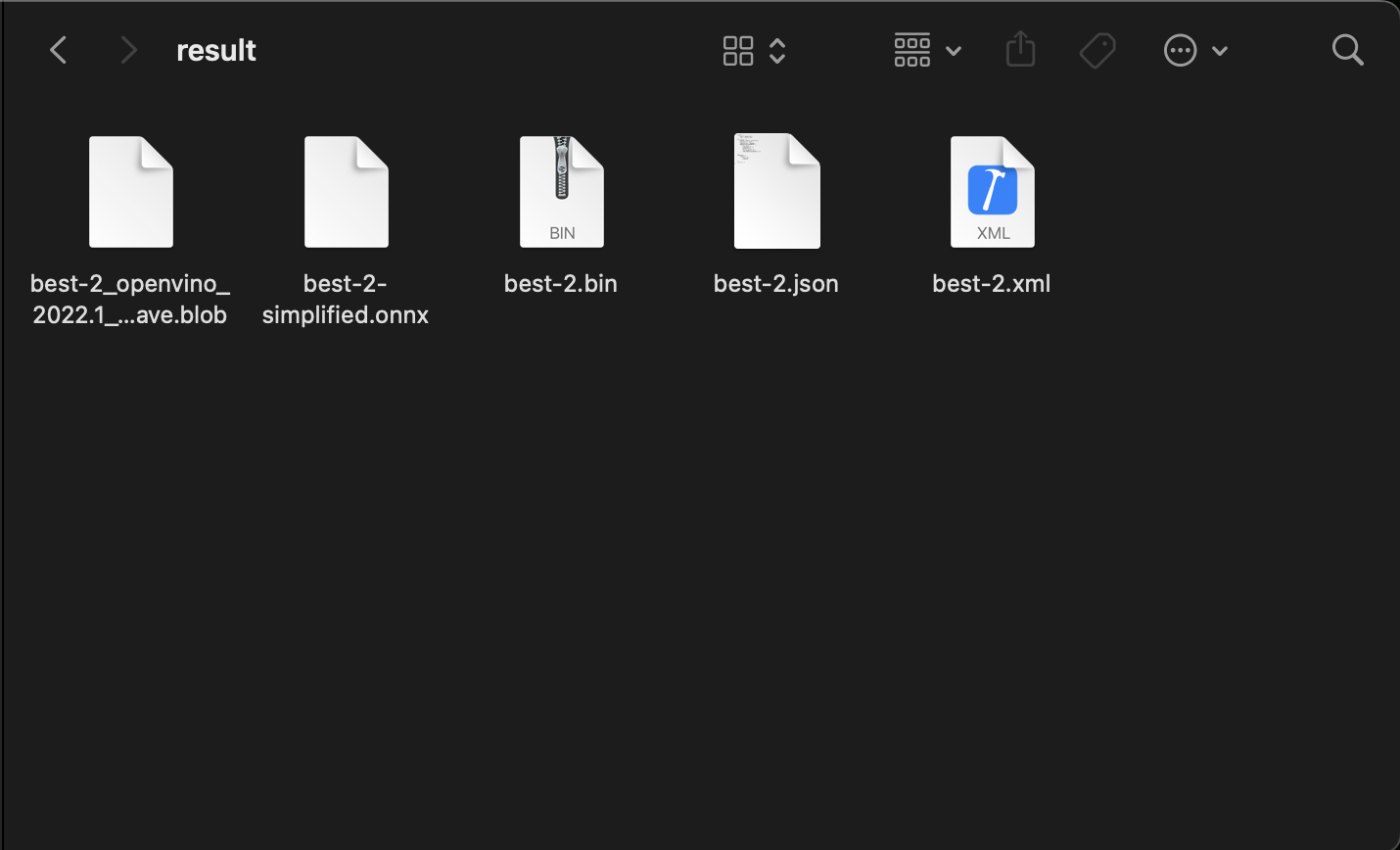

So I saved my weights file as best.pt and then used the model converter. How can I use the converted model in the code?

Thanks for your assistance.

Hi Babacar

The Roboflow API should work as expected, I think. I'm getting the same errors, though, so I asked the Roboflow team what the correct procedure for using the models is.

I'll get back to you on that.

In the meantime, pass the path to the downloaded blob when creating your neural network.
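One way to follow this suggestion without hard-coding a file name is to search the converter's output folder for the model file. The sketch below uses a hypothetical helper, `pick_model_path`. It prefers a `.json` config over a bare `.blob`, on the assumption that the JSON carries the YOLO decoding metadata (class count, thresholds) the SDK needs to parse detections, which a blob alone does not. The depthai_sdk documentation describes the `model` argument of `create_nn` as a model name, a path to a JSON config, or a path to a blob. Verify the exact behavior against your SDK version.

```python
from pathlib import Path


def pick_model_path(model_dir):
    """Return the first .json config in model_dir, falling back to a .blob file.

    Assumption: the JSON config is preferred because it describes how to decode
    the YOLO outputs; a bare .blob only contains the compiled network.
    """
    model_dir = Path(model_dir)
    for pattern in ("*.json", "*.blob"):
        matches = sorted(model_dir.glob(pattern))
        if matches:
            return matches[0]
    raise FileNotFoundError(f"no .json or .blob model file found in {model_dir}")
```

Example usage: `yolo = oak.create_nn(str(pick_model_path('converted_model')), input=color)`, where `converted_model` is a hypothetical folder containing the files downloaded from the converter.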

Thanks,

Jaka

Hi jakaskerl

Do you have any idea how to use it with this code: https://github.com/luxonis/depthai-experiments/blob/master/gen2-deepsort-tracking/main.py?

I tried replacing the yolov6 model with my blob file, but I received this error:

```text
  File "/home/pi/Tracking/gen3-deepsort-tracking/main.py", line 43, in <module>
    oak.start(blocking=True)
  File "/home/pi/.local/lib/python3.9/site-packages/depthai_sdk/oak_camera.py", line 347, in start
    self.build()
  File "/home/pi/.local/lib/python3.9/site-packages/depthai_sdk/oak_camera.py", line 465, in build
    xouts = out.setup(self.pipeline, self.oak.device, names)
  File "/home/pi/.local/lib/python3.9/site-packages/depthai_sdk/classes/output_config.py", line 54, in setup
    xoutbase: XoutBase = self.output(pipeline, device)
  File "/home/pi/.local/lib/python3.9/site-packages/depthai_sdk/components/nn_component.py", line 629, in main
    out = XoutTwoStage(det_nn=self.comp.input,
  File "/home/pi/.local/lib/python3.9/site-packages/depthai_sdk/oak_outputs/xout/xout_nn.py", line 289, in __init__
    self.whitelist_labels: Optional[List[int]] = second_nn.multi_stage_nn.whitelist_labels
AttributeError: 'NoneType' object has no attribute 'whitelist_labels'
Sentry is attempting to send 2 pending error messages
Waiting up to 2 seconds
Press Ctrl-C to quit
```

I appreciate your help.

Hi Babacar

I think you need to edit the callback function to correctly parse the results from your model. Try removing all the logic inside the callback to see if it still causes the same error. I'm not sure whether it stems from the parsing or whether it's a visualizer problem.

Thanks,

Jaka