Hey Jaka, there's quite a bit of code, so putting together an MRE would be difficult…

Here's a general rundown. Hopefully it gives you enough to offer some insights or ideas.

Our code prepares the pipeline, configures the camera, and loads all the ML models up front. At inference time it runs the already-loaded model instead of swapping models in and out.

We wrote it this way intentionally to make inference faster.

To provide more context based on the code:

General Flow:

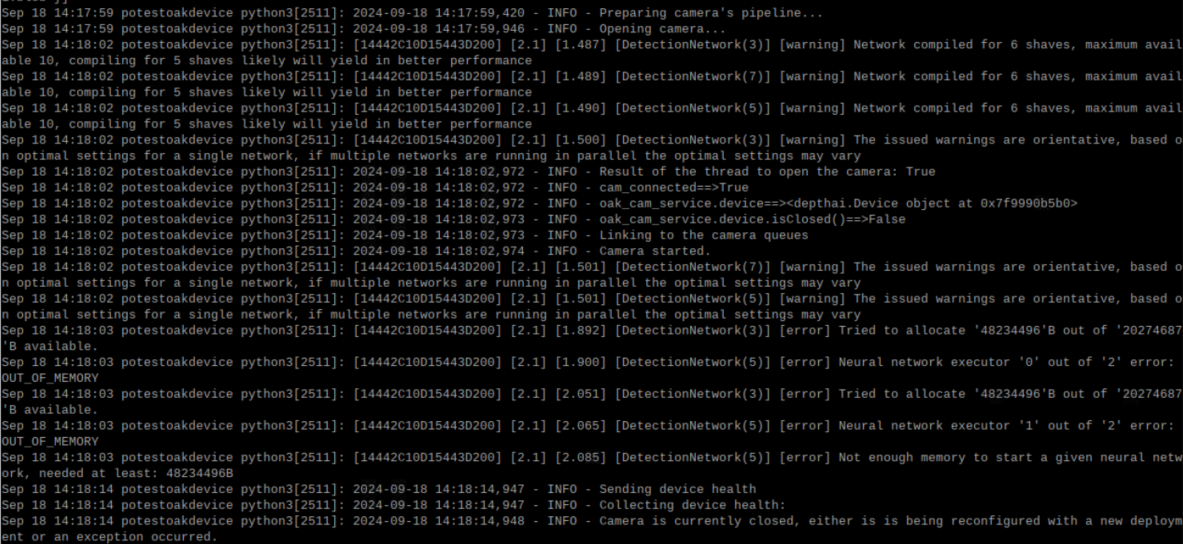

The code sets up the DepthAI pipeline, configures the camera, and preloads multiple models (e.g., 3 models). This avoids model swapping during inference for speed.

Pipeline Configuration:

The code uses DepthAI's Pipeline API. prepare_pipeline() configures the camera settings (resolution, FPS) and links the camera preview to each model's detection network (e.g., YOLO). It handles high-resolution setups (e.g., 4K) and ensures all models are ready for inference.
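To make that concrete without pasting the whole module, here is a minimal sketch of roughly what prepare_pipeline() does, assuming the DepthAI v2 Python API. ModelSpec, stream_name(), the blob paths, the preview size, and the thresholds are placeholders I made up for illustration, not our real values.

```python
from dataclasses import dataclass


@dataclass
class ModelSpec:
    """Hypothetical description of one preloaded model."""
    name: str
    blob_path: str
    num_classes: int
    confidence: float = 0.5


def stream_name(spec: ModelSpec) -> str:
    """XLink stream name that carries this model's detections."""
    return f"det_{spec.name}"


def prepare_pipeline(specs, preview_size=(416, 416), fps=30):
    """Build one pipeline holding the camera and every detection network."""
    import depthai as dai  # imported lazily so the helpers above load without depthai

    pipeline = dai.Pipeline()

    cam = pipeline.create(dai.node.ColorCamera)
    cam.setResolution(dai.ColorCameraProperties.SensorResolution.THE_4_K)
    cam.setPreviewSize(*preview_size)
    cam.setInterleaved(False)
    cam.setFps(fps)

    # One detection network per model, all fed from the same camera preview.
    for spec in specs:
        nn = pipeline.create(dai.node.YoloDetectionNetwork)
        nn.setBlobPath(spec.blob_path)
        nn.setNumClasses(spec.num_classes)
        nn.setCoordinateSize(4)
        nn.setConfidenceThreshold(spec.confidence)
        nn.setIouThreshold(0.5)
        # Anchor-based YOLO versions also need setAnchors() and setAnchorMasks().
        nn.input.setBlocking(False)
        cam.preview.link(nn.input)

        xout = pipeline.create(dai.node.XLinkOut)
        xout.setStreamName(stream_name(spec))
        nn.out.link(xout.input)

    return pipeline
```

The point of the sketch is that every network lives in the same pipeline from the start, so nothing is uploaded or rebuilt between inferences.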

Camera Focus:

The code includes manual and automatic camera focus control with reset_focus(), using DepthAI's CameraControl.
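Roughly, the focus handling looks like the sketch below. This assumes the DepthAI v2 CameraControl message; the lens_position argument and the clamp_lens_position() helper are illustrative and may not match our actual signature.

```python
def clamp_lens_position(value: int) -> int:
    """Keep a manual focus lens position inside the 0..255 range."""
    return max(0, min(255, int(value)))


def reset_focus(control_queue, lens_position=None):
    """Send a focus command: manual if lens_position is given, else autofocus."""
    import depthai as dai  # imported lazily so the clamp helper loads without depthai

    ctrl = dai.CameraControl()
    if lens_position is not None:
        ctrl.setManualFocus(clamp_lens_position(lens_position))
    else:
        ctrl.setAutoFocusMode(dai.CameraControl.AutoFocusMode.CONTINUOUS_VIDEO)
        ctrl.setAutoFocusTrigger()
    control_queue.send(ctrl)
```

Here control_queue would be the input queue of an XLinkIn node linked to the camera's inputControl, obtained from the device with getInputQueue().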

Inference Process:

infer() handles inference using the preloaded model. It processes frames, sends them through the model, and builds a structured message using build_inference_msg(), including object detection polygons and confidence scores. Threshold checks are done before results are logged.
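As a rough idea of the message building step, here is a simplified sketch. It assumes each detection exposes label, confidence, xmin, ymin, xmax, and ymax the way DepthAI's ImgDetection does; the dictionary layout and the default threshold are illustrative, not our exact format.

```python
from types import SimpleNamespace


def build_inference_msg(model_name, detections, threshold=0.5):
    """Drop detections below threshold and describe the rest as polygons."""
    objects = []
    for det in detections:
        if det.confidence < threshold:
            continue  # threshold check happens before anything is logged
        # Bounding box corners, clockwise from top-left, in normalized coordinates.
        polygon = [
            [det.xmin, det.ymin],
            [det.xmax, det.ymin],
            [det.xmax, det.ymax],
            [det.xmin, det.ymax],
        ]
        objects.append({
            "label": det.label,
            "confidence": round(det.confidence, 3),
            "polygon": polygon,
        })
    return {"model": model_name, "count": len(objects), "objects": objects}


# Stand-in detections so the sketch runs without a device.
sample = [
    SimpleNamespace(label=0, confidence=0.9, xmin=0.1, ymin=0.2, xmax=0.5, ymax=0.6),
    SimpleNamespace(label=1, confidence=0.2, xmin=0.0, ymin=0.0, xmax=0.1, ymax=0.1),
]
msg = build_inference_msg("model_a", sample)
```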

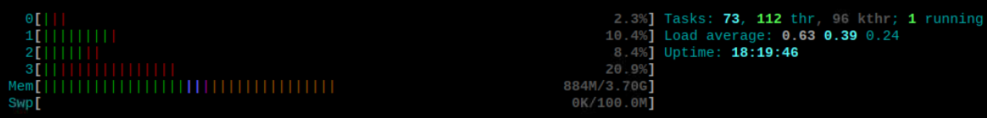

System Health Monitoring:

get_device_health() monitors memory (DDR, CMX), CPU load, and subsystem temperatures (e.g., UPA, DSS) using the system logger (SystemLogger node). It returns a summary of device health.
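For reference, the summary step is along the lines of the sketch below. It assumes info has the same fields as the SystemInformation message that the SystemLogger node emits (ddrMemoryUsage, cmxMemoryUsage, leonCssCpuUsage, leonMssCpuUsage, chipTemperature); the keys of the returned dictionary are made up for this example.

```python
from types import SimpleNamespace


def get_device_health(info):
    """Summarize memory, CPU load, and temperatures from one system info message."""

    def used_pct(mem):
        # Memory fields report used and total in bytes.
        return round(100.0 * mem.used / mem.total, 1) if mem.total else 0.0

    temp = info.chipTemperature
    return {
        "ddr_used_pct": used_pct(info.ddrMemoryUsage),
        "cmx_used_pct": used_pct(info.cmxMemoryUsage),
        # CPU usage averages are fractions in 0..1.
        "css_cpu_pct": round(100.0 * info.leonCssCpuUsage.average, 1),
        "mss_cpu_pct": round(100.0 * info.leonMssCpuUsage.average, 1),
        "temps_c": {"upa": temp.upa, "dss": temp.dss, "average": temp.average},
    }


# Stand-in message so the sketch runs without a device.
fake_info = SimpleNamespace(
    ddrMemoryUsage=SimpleNamespace(used=50, total=200),
    cmxMemoryUsage=SimpleNamespace(used=1, total=4),
    leonCssCpuUsage=SimpleNamespace(average=0.5),
    leonMssCpuUsage=SimpleNamespace(average=0.25),
    chipTemperature=SimpleNamespace(upa=40.0, dss=42.0, average=41.0),
)
health = get_device_health(fake_info)
```

On the device side, the message comes from a SystemLogger node whose output is linked to an XLinkOut stream and read from the matching output queue.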

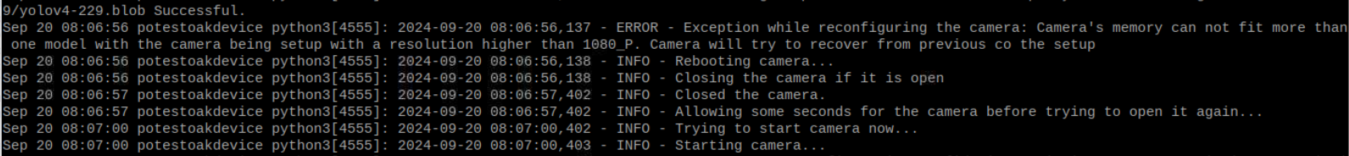

Exception Handling:

Custom exceptions like FrameEncodingError and PipelinePreparationError are used to trace and log errors during frame acquisition, encoding, and pipeline setup.
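The pattern is roughly the following. The CameraPipelineError base class and the encode_frame() wrapper are hypothetical names for this sketch; only FrameEncodingError and PipelinePreparationError come from our code.

```python
class CameraPipelineError(Exception):
    """Hypothetical common base so callers can catch every pipeline error at once."""


class PipelinePreparationError(CameraPipelineError):
    """Raised when the pipeline cannot be built or started."""


class FrameEncodingError(CameraPipelineError):
    """Raised when a captured frame cannot be encoded."""


def encode_frame(frame, encoder):
    """Run encoder on frame, re-raising any failure as FrameEncodingError."""
    try:
        return encoder(frame)
    except Exception as exc:
        # "from exc" keeps the original traceback attached for logging.
        raise FrameEncodingError(f"could not encode frame: {exc}") from exc
```

Chaining with "from exc" means the log shows both where the error surfaced in our code and the underlying failure that caused it.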

Queue Linking:

link_queues() connects the camera to output queues for real-time inference, setting up OutputQueue for each model. close_camera() and open_camera() handle device lifecycle.
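A stripped-down sketch of the queue setup, assuming a DepthAI v2 Device and one XLinkOut stream per model. The stream_names argument and the max_size default are illustrative.

```python
def link_queues(device, stream_names, max_size=4):
    """Open one non-blocking output queue per model stream, keyed by stream name."""
    return {
        name: device.getOutputQueue(name=name, maxSize=max_size, blocking=False)
        for name in stream_names
    }
```

With non-blocking queues, a slow consumer drops old results instead of stalling the device, which is the behavior we want for real-time inference.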

Design Intent:

All models are loaded upfront to minimize runtime operations during inference, optimizing for speed.

Thank you!

Charlie