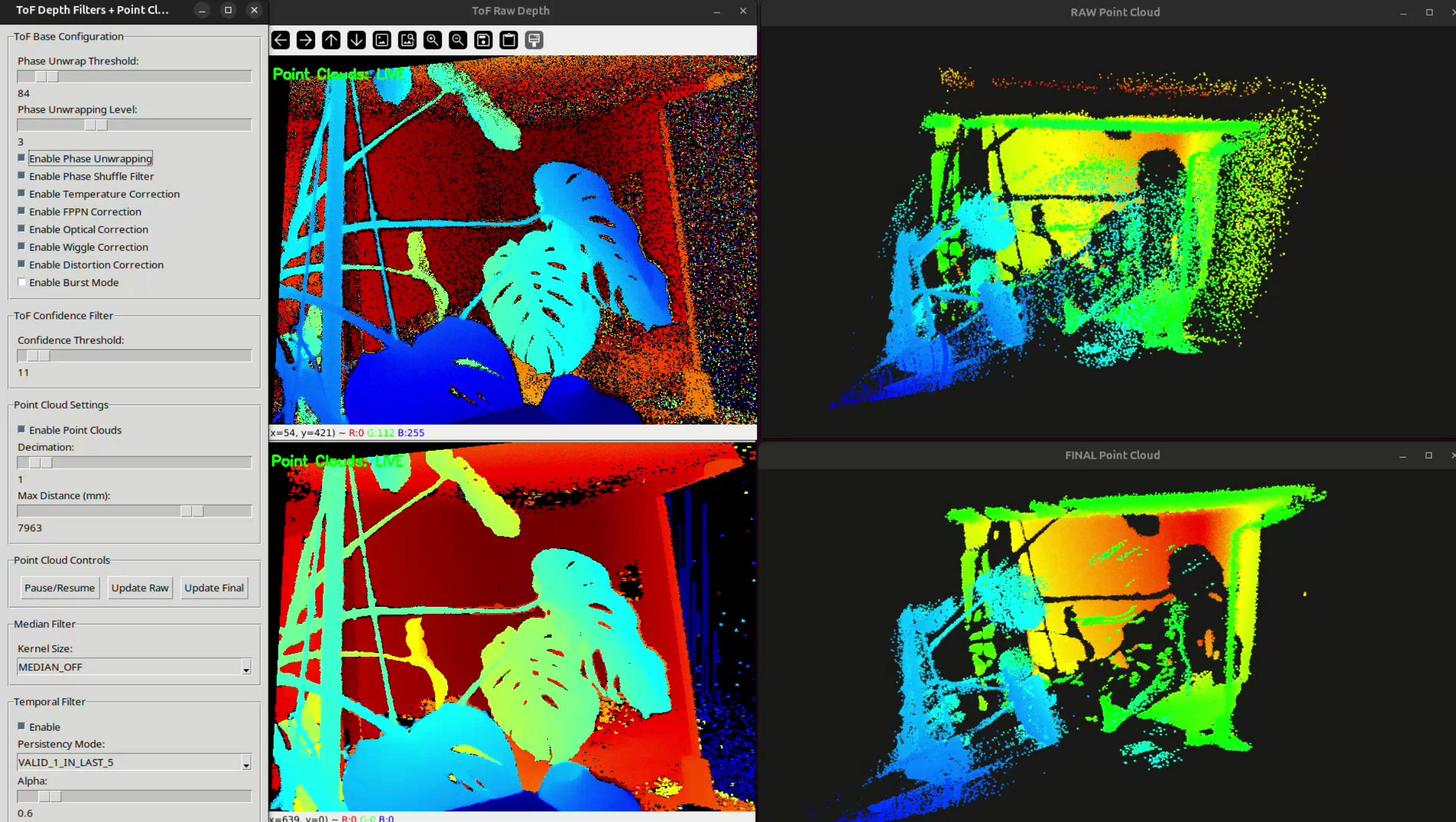

We’re excited to announce a new set of filters for our Time-of-Flight (ToF) cameras. These filters substantially improve raw ToF depth output by reducing noise, stabilizing measurements, and producing much cleaner point clouds (PCLs).

Out of the box, ToF provides powerful depth sensing, but raw data can be noisy, flickering, or incomplete. With the new filtering system, ToF delivers depth maps and PCLs that are sharper, more stable, and far more useful for real-world tasks. Whether you’re working on robotics, automation, or consumer devices, this makes ToF a strong alternative where stereo vision struggles.

Why Filters Matter

Raw ToF depth data has a lot of potential, but without processing it is messy. The new filtering pipeline cleans it up in three stages:

Validation – removing data you can’t trust.

Spatial filtering – smoothing flat regions without blurring edges.

Temporal filtering – stabilizing results over time while staying responsive.

In simple terms, these filters take raw depth input and turn it into stable, usable data. The improvements are especially visible in point clouds, where previously noisy or incomplete surfaces now appear clean and continuous.
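To make the three stages concrete, here is a minimal NumPy sketch of the idea. It is not the Luxonis implementation: the function names, the per-pixel confidence input, and every threshold (`min_conf`, `max_range_mm`, `edge_mm`, `alpha`, `delta_mm`) are assumptions chosen for illustration. The spatial step is a simple edge-aware 3x3 mean and the temporal step is an exponential moving average, both standing in for whatever the real filters do.

```python
import numpy as np

def validate(depth_mm, confidence, min_conf=30, max_range_mm=6500.0):
    """Validation: set pixels to 0 (invalid) when confidence is low or depth is out of range."""
    out = depth_mm.astype(np.float32).copy()
    bad = (confidence < min_conf) | (out <= 0) | (out > max_range_mm)
    out[bad] = 0.0
    return out

def spatial_filter(depth, edge_mm=50.0):
    """Spatial: average each pixel with the valid 3x3 neighbors within edge_mm of it.

    Neighbors across a depth step differ by more than edge_mm, so they are
    skipped and the edge is not blurred.
    """
    h, w = depth.shape
    padded = np.pad(depth, 1, mode="edge")
    total = np.zeros_like(depth)
    count = np.zeros_like(depth)
    for dy in range(3):
        for dx in range(3):
            nb = padded[dy:dy + h, dx:dx + w]
            ok = (nb > 0) & (np.abs(nb - depth) <= edge_mm)
            total += np.where(ok, nb, 0.0)
            count += ok
    out = np.where(count > 0, total / np.maximum(count, 1), 0.0)
    out[depth == 0] = 0.0  # invalid pixels stay invalid
    return out.astype(np.float32)

class TemporalFilter:
    """Temporal: blend with the previous frame unless the pixel changed by more than delta_mm."""

    def __init__(self, alpha=0.3, delta_mm=100.0):
        self.alpha = alpha
        self.delta_mm = delta_mm
        self.state = None

    def __call__(self, depth):
        if self.state is None:
            self.state = depth.copy()
            return self.state.copy()
        blend = (depth > 0) & (self.state > 0) & (np.abs(depth - self.state) <= self.delta_mm)
        smoothed = self.alpha * depth + (1.0 - self.alpha) * self.state
        # Large changes (or invalid pixels) take the new value directly to stay responsive.
        self.state = np.where(blend, smoothed, depth).astype(np.float32)
        return self.state.copy()

# Synthetic scene: a wall at 1 m with a sharp step to 2 m, plus sensor noise.
rng = np.random.default_rng(0)
truth = np.full((20, 20), 1000.0, dtype=np.float32)
truth[:, 10:] = 2000.0
confidence = np.full(truth.shape, 100)
confidence[0, 0] = 5  # one untrustworthy pixel

temporal = TemporalFilter()
for _ in range(10):
    raw = truth + rng.normal(0.0, 5.0, truth.shape).astype(np.float32)
    filtered = temporal(spatial_filter(validate(raw, confidence)))

print("raw noise on the flat wall (mm):     ", raw[2:, :8].std())
print("filtered noise on the flat wall (mm):", filtered[2:, :8].std())
print("values either side of the step (mm): ", filtered[5, 9], filtered[5, 10])
```

The two thresholds carry the trade-offs described above: a smaller `edge_mm` protects edges at the cost of less smoothing, and a larger `alpha` makes the output more responsive but less stable.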

Even better: filters can be tuned for specific applications, so whether you need detail up close or stability at distance, you can adjust the balance.

Presets for Every Range

To make things easier, we’ve created pre-tuned presets for the most common scenarios. No need to tweak endlessly — just pick the one that fits your use case:

TOF_LOW_RANGE – Optimized for close-up tasks like bin picking or detecting objects within ~2 m.

TOF_MID_RANGE – The most versatile setting, ideal for recognizing 3D gestures at a comfortable distance (about 2 m away).

TOF_HIGH_RANGE – Tuned for stability at long distances, such as detecting obstacles up to 6.5 m away in a hallway or workspace.

Each preset balances noise reduction, edge preservation, and stability differently. For example, the low-range preset minimizes edge blurring, while the high-range preset focuses on smoothing and consistency across distance.
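If your application knows its typical working distance, choosing a preset can be a one-line decision. The helper below is a hypothetical sketch, not part of any Luxonis API: the function name and the 1.5 m and 3.0 m cutoffs are assumptions, loosely based on the ranges and demo distances in this post. It only returns the preset name as a string.

```python
def pick_tof_preset(working_distance_m: float) -> str:
    """Return the name of the preset that best matches a typical working distance.

    The cutoffs are illustrative assumptions, not official limits.
    """
    if working_distance_m <= 0:
        raise ValueError("working distance must be positive")
    if working_distance_m < 1.5:    # assumed cutoff for close-up work
        return "TOF_LOW_RANGE"
    if working_distance_m <= 3.0:   # assumed cutoff for mid-range work
        return "TOF_MID_RANGE"
    return "TOF_HIGH_RANGE"

for distance_m in (0.7, 2.0, 5.5):
    print(distance_m, "m ->", pick_tof_preset(distance_m))
```

Treat the result as a starting point and compare neighboring presets on your own scene, since reflectance and ambient light matter as much as distance.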

Trying Out Custom Filter Values

Want to go beyond presets and experiment with your own filter settings? You can easily try it out by running our example project:

git clone https://github.com/luxonis/oak-examples.git

cd oak-examples/

python3 -m venv venv # for Linux

source venv/bin/activate # for Linux/macOS; on Windows run venv\Scripts\activate

cd depth-measurement/3d-measurement/tof-pointcloud/

pip install -r requirements.txt

python3 main.py

This will launch the ToF point cloud demo, where you can adjust validation, spatial, and temporal filters directly and see how they affect the results in real time. It’s a great way to understand how each filter contributes — and to fine-tune for your own application.
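When comparing filter settings, it helps to back up the visual impression with numbers. The sketch below is one possible way to do that and is not part of the demo: the function `stability_report` and its two metrics are assumptions for illustration. It expects a stack of depth frames of a static scene that you have collected yourself, with 0 marking invalid pixels, and reports how much of the image is valid and how much the valid pixels flicker over time.

```python
import numpy as np

def stability_report(frames):
    """Summarize a stack of depth frames of a static scene.

    frames: array-like of shape (N, H, W), depth in mm, 0 = invalid pixel.
    Returns the fraction of valid pixels and the mean per-pixel temporal
    standard deviation (in mm) over pixels that are valid in every frame.
    """
    frames = np.asarray(frames, dtype=np.float32)
    valid = frames > 0
    always_valid = valid.all(axis=0)
    fill_rate = float(valid.mean())
    if always_valid.any():
        flicker_mm = float(frames[:, always_valid].std(axis=0).mean())
    else:
        flicker_mm = float("nan")
    return {"fill_rate": fill_rate, "flicker_mm": flicker_mm}

# Synthetic stand-in for 30 recorded frames: a flat wall at 1 m with noise
# and one pixel that never returns a valid measurement.
rng = np.random.default_rng(1)
recorded = 1000.0 + rng.normal(0.0, 10.0, (30, 8, 8))
recorded[:, 0, 0] = 0.0

print(stability_report(recorded))
```

Lower `flicker_mm` at a similar `fill_rate` suggests a setting is stabilizing the scene without discarding more data. Check edges and moving objects separately, since this metric only covers static regions.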

Demo Scenarios

To show the difference, we’ve prepared three simple but realistic examples:

Bin Picking (Short Range) – Using TOF_LOW_RANGE, objects inside a bin are captured at ~0.7 m with much more detail than stereo vision can provide.

Gesture Recognition (Mid Range) – At ~2 m, the TOF_MID_RANGE preset clearly captures a person and their 3D gestures, making interaction far more reliable.

Obstacle Detection (Long Range) – At 5–6 m, TOF_HIGH_RANGE filters stabilize noisy hallways and reveal objects like boxes on the floor that stereo vision either misses or renders inconsistently.

With these presets, you can drop ToF into real applications quickly and see immediate benefits.

Practical Notes

On RVC2, these new filters currently run on the host computer.

On RVC4, there are no ToF cameras available at the moment.

Filters dramatically improve results, but they can’t create information where none exists — scenes with very low reflectance, extreme distance, or heavy multipath reflections will remain challenging.

Wrapping Up

With the release of these new ToF filters, raw depth perception takes a big leap forward. Cleaner maps, smoother point clouds, and ready-to-use presets make ToF far more accessible and effective across industries.

From short-range bin picking, to gesture recognition at 2 m, to long-range obstacle detection, these improvements make ToF not just a technology demo, but a practical solution for robotics, automation, and consumer electronics.

This is the start of a new chapter for ToF at Luxonis — and we can’t wait to see what you build with it.