Hi jakaskerl

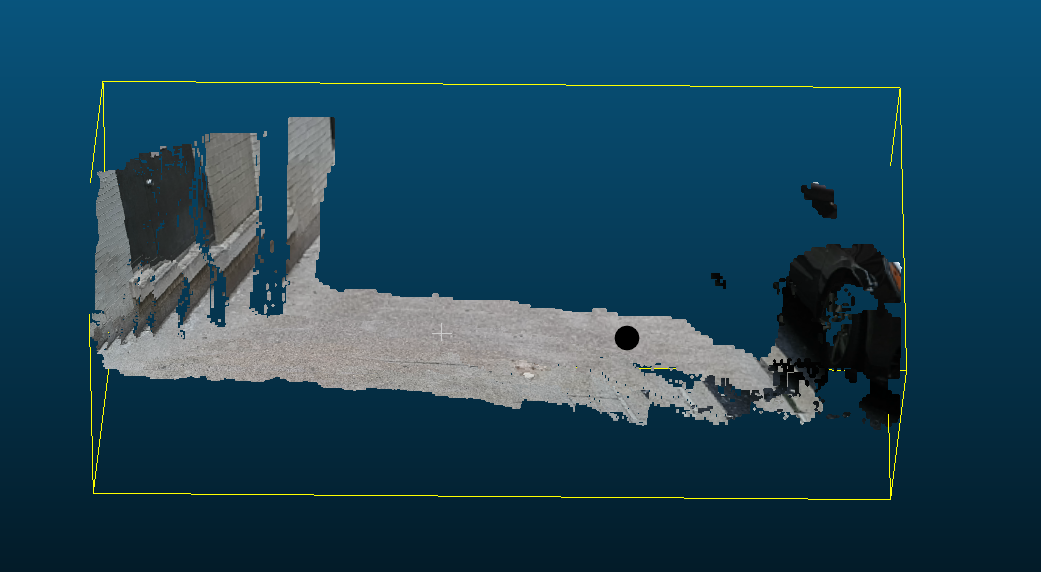

For the flat ground I captured, the calculated slope came out to around 7.5%, and the point cloud appears slightly skewed from left to right, as seen in the image. I’m currently using the accelerometer-derived pitch and roll values to construct a rotation matrix and apply that to correct the camera’s orientation during capture.

pipeline = dai.Pipeline()

monoLeft = pipeline.create(dai.node.MonoCamera)

monoRight = pipeline.create(dai.node.MonoCamera)

monoLeft.setBoardSocket(dai.CameraBoardSocket.CAM_B)

monoRight.setBoardSocket(dai.CameraBoardSocket.CAM_C)

monoLeft.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)

monoRight.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)

# Stereo depth - simplified settings

depth = pipeline.create(dai.node.StereoDepth)

depth.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.DEFAULT)

depth.setLeftRightCheck(True)

depth.setSubpixel(False)

depth.setDepthAlign(dai.CameraBoardSocket.CAM_A)

# Simple depth filtering

cfg = depth.initialConfig.get()

cfg.postProcessing.temporalFilter.enable = True # Disable for speed

cfg.postProcessing.speckleFilter.enable = True

cfg.postProcessing.speckleFilter.speckleRange = 28

cfg.postProcessing.spatialFilter.enable = False # Disable for speed

cfg.postProcessing.thresholdFilter.minRange = 400

cfg.postProcessing.thresholdFilter.maxRange = 8000

depth.initialConfig.set(cfg)

# RGB camera - match depth resolution exactly

colorCam = pipeline.create(dai.node.ColorCamera)

colorCam.setBoardSocket(dai.CameraBoardSocket.CAM_A)

colorCam.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)

colorCam.setColorOrder(dai.ColorCameraProperties.ColorOrder.BGR)

colorCam.setPreviewSize(640, 400) # Match mono camera resolution

# IMU - simplified

imu = pipeline.create(dai.node.IMU)

imu.enableIMUSensor(dai.IMUSensor.ACCELEROMETER_RAW, 50) # Lower frequency

imu.setBatchReportThreshold(1)

imu.setMaxBatchReports(5)

# Outputs

xoutRgb = pipeline.create(dai.node.XLinkOut)

xoutRgb.setStreamName("rgb")

colorCam.preview.link(xoutRgb.input)

xoutDepth = pipeline.create(dai.node.XLinkOut)

xoutDepth.setStreamName("depth")

depth.depth.link(xoutDepth.input)

xoutImu = pipeline.create(dai.node.XLinkOut)

xoutImu.setStreamName("imu")

imu.out.link(xoutImu.input)

monoLeft.out.link(depth.left)

monoRight.out.link(depth.right)

def get_quick_imu_reading(imu_queue, timeout_ms=500):

"""Get IMU reading quickly with timeout"""

import time

start_time = time.time()

while (time.time() - start_time) < (timeout_ms / 1000.0):

try:

if imu_queue.has():

imu_data = imu_queue.get()

if imu_data.packets:

accel = imu_data.packets[-1].acceleroMeter

return [accel.x, accel.y, accel.z]

except:

continue

print("IMU timeout - using default orientation")

return None

def create_rotation_matrix(pitch_deg, roll_deg):

"""Create rotation matrix from pitch and roll in degrees"""

pitch_rad = np.radians(pitch_deg)

roll_rad = np.radians(roll_deg)

# Rotation around X-axis (pitch)

Rx = np.array([

[1, 0, 0],

[0, np.cos(pitch_rad), -np.sin(pitch_rad)],

[0, np.sin(pitch_rad), np.cos(pitch_rad)]

])

# Rotation around Y-axis (roll)

Ry = np.array([

[np.cos(roll_rad), 0, np.sin(roll_rad)],

[0, 1, 0],

[-np.sin(roll_rad), 0, np.cos(roll_rad)]

])

return Rx @ Ry

def create_simple_pointcloud(rgb_frame, depth_frame, fx, fy, cx, cy, R_align=None):

points = []

colors = []

depth_h, depth_w = depth_frame.shape

rgb_h, rgb_w = rgb_frame.shape[:2]

print(f"Depth: {depth_h}x{depth_w}, RGB: {rgb_h}x{rgb_w}")

# Resize RGB to match depth dimensions exactly

if depth_h != rgb_h or depth_w != rgb_w:

rgb_resized = cv2.resize(rgb_frame, (depth_w, depth_h))

else:

rgb_resized = rgb_frame

# Simple loop - every 2nd pixel for speed, with bounds checking

for y in range(0, depth_h, 2):

for x in range(0, depth_w, 2):

# Bounds check

if y >= depth_h or x >= depth_w:

continue

z = depth_frame[y, x] / 1000.0 # Convert to meters

if z == 0 or z > 8.0 or z < 0.4:

continue

# Project to 3D

X = (x - cx) * z / fx

Y = (y - cy) * z / fy

Z = z

point = np.array([X, Y, Z])

# Apply rotation if provided

if R_align is not None:

point = R_align @ point

# Get color from resized RGB

color = rgb_resized[y, x] / 255.0

points.append(point)

colors.append(color[[2, 1, 0]]) # BGR to RGB

return np.array(points), np.array(colors)

# Main execution

print("SIMPLE VERSION: Press SPACE to save point cloud, 'q' to quit")

print("Press 'r' to reset IMU reading")

current_rotation = None

with dai.Device(pipeline) as device:

rgbQueue = device.getOutputQueue("rgb", maxSize=1, blocking=False)

depthQueue = device.getOutputQueue("depth", maxSize=1, blocking=False)

imuQueue = device.getOutputQueue("imu", maxSize=1, blocking=False)

print("Ready! Camera should be showing preview...")

while True:

try:

# Get latest frames

if rgbQueue.has():

rgbFrame = rgbQueue.get().getCvFrame()

else:

continue

if depthQueue.has():

depthFrame = depthQueue.get().getFrame()

else:

continue

# Show preview - ensure same dimensions

depth_colorized = cv2.applyColorMap(

cv2.convertScaleAbs(depthFrame, alpha=0.03),

cv2.COLORMAP_JET

)

# Resize depth to match RGB if needed

if rgbFrame.shape[:2] != depth_colorized.shape[:2]:

depth_colorized = cv2.resize(depth_colorized,

(rgbFrame.shape[1], rgbFrame.shape[0]))

overlay = cv2.addWeighted(rgbFrame, 0.7, depth_colorized, 0.3, 0)

# Add text overlay

if current_rotation is not None:

cv2.putText(overlay, f"Pitch: {current_rotation[0]:.1f}° Roll: {current_rotation[1]:.1f}°",

(10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)

else:

cv2.putText(overlay, "Press 'r' to read IMU orientation",

(10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

cv2.putText(overlay, "SPACE: Save | R: Read IMU | Q: Quit",

(10, overlay.shape[0] - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (255, 255, 255), 1)

cv2.imshow("DepthAI Preview", overlay)

key = cv2.waitKey(1) & 0xFF

if key == ord('q'):

break

elif key == ord('r'):

print("Reading IMU orientation...")

accel_data = get_quick_imu_reading(imuQueue)

if accel_data:

ax, ay, az = accel_data

# Normalize

norm = np.sqrt(ax**2 + ay**2 + az**2)

if norm > 0:

ax, ay, az = ax/norm, ay/norm, az/norm

# Calculate pitch and roll

pitch = np.degrees(np.arctan2(-ax, np.sqrt(ay**2 + az**2)))

roll = np.degrees(np.arctan2(ay, az))

current_rotation = (pitch, roll)

print(f"✅ IMU reading: Pitch={pitch:.1f}°, Roll={roll:.1f}°")

else:

print("❌ Bad IMU reading")

else:

print("❌ Failed to get IMU reading")

elif key == ord(' '): # Spacebar

print("Capturing point cloud...")

# Get camera intrinsics

calib = device.readCalibration()

intr = calib.getCameraIntrinsics(dai.CameraBoardSocket.CAM_A, 640, 400)

fx, fy = intr[0][0], intr[1][1]

cx, cy = intr[0][2], intr[1][2]

# Create rotation matrix if we have IMU data

R_align = None

if current_rotation:

R_align = create_rotation_matrix(current_rotation[0], current_rotation[1])

print(f"Applying rotation: Pitch={current_rotation[0]:.1f}°, Roll={current_rotation[1]:.1f}°")

else:

print("No IMU data - saving without gravity alignment")

# Create point cloud

points, colors = create_simple_pointcloud(

rgbFrame, depthFrame, fx, fy, cx, cy, R_align

)

if len(points) > 100:

# Create Open3D point cloud

pcd = o3d.geometry.PointCloud()

pcd.points = o3d.utility.Vector3dVector(points)

pcd.colors = o3d.utility.Vector3dVector(colors)

# Light filtering only

if len(points) > 500:

pcd, _ = pcd.remove_statistical_outlier(nb_neighbors=10, std_ratio=3.0)

# Save

timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")

ply_path = output_dir / f"pointcloud_simple_{timestamp}.ply"

las_path = output_dir / f"pointcloud_simple_{timestamp}.las"

laz_path = output_dir / f"pointcloud_simple_{timestamp}.laz"

try:

o3d.io.write_point_cloud(str(ply_path), pcd)

print(f"✅ Saved PLY: {ply_path} ({len(pcd.points)} points)")

header = laspy.LasHeader(point_format=3, version="1.2")

las = laspy.LasData(header)

las.x, las.y, las.z = points[:, 0], points[:, 1], points[:, 2]

las.red = (colors[:, 0] * 65535).astype(np.uint16)

las.green = (colors[:, 1] * 65535).astype(np.uint16)

las.blue = (colors[:, 2] * 65535).astype(np.uint16)

las.write(las_path)

print(f"✅ Saved LAS: {las_path}")

subprocess.run([str(laszip_exe), "-i", str(las_path), "-o", str(laz_path)], check=True)

print(f"✅ Saved LAZ: {laz_path}")

except Exception as e:

print(f"❌ Failed to save one or more point cloud formats: {e}")

else:

print("❌ Not enough valid points generated")

except Exception as e:

print(f"Error: {e}")

import traceback

traceback.print_exc()

break

cv2.destroyAllWindows()

print("Done!")