Dear all,

I'm coming up with a task I'm working on for several weeks already and see no other help then trying to reach out here.

I used the FoundationStereo-Model to come up with depth-maps from my prior taken oak-d-lite stereo-images. I'd like to align those with the RGB-Frame being captured on 13mp. There are multiple code-snippets pointed to in recent Discussion-threads which all won't work on my example.

import numpy as np

import cv2 as cv

import depthai as dai

import reprojection

from pathlib import Path

RGB_SOCKET = dai.CameraBoardSocket.CAM_A

LEFT_SOCKET = dai.CameraBoardSocket.CAM_B

RIGHT_SOCKET = dai.CameraBoardSocket.CAM_C

ALIGN_SOCKET = LEFT_SOCKET

def get_calibData(device, depthSize, bgrSize):

#Get Intrinsics and Extrinisics from Camera

try:

calibData = device.readCalibration()

M_Left = np.array(calibData.getCameraIntrinsics(ALIGN_SOCKET, *depthSize))

M_Right = np.array(calibData.getCameraIntrinsics(RIGHT_SOCKET, *depthSize))

D_Left = np.array(calibData.getDistortionCoefficients(ALIGN_SOCKET))

M_RGB = np.array(calibData.getCameraIntrinsics(RGB_SOCKET, *bgrSize))

D_RGB = np.array(calibData.getDistortionCoefficients(RGB_SOCKET))

R_left = np.array(calibData.getStereoLeftRectificationRotation())

R_right = np.array(calibData.getStereoRightRectificationRotation())

T_left_rgb = (

np.array(calibData.getCameraTranslationVector(ALIGN_SOCKET, RGB_SOCKET, False))

*10

)

R_left_rgb = np.array(calibData.getCameraExtrinsics(ALIGN_SOCKET, RGB_SOCKET, False))[

0:3, 0:3

]

TARGET_MATRIX = M_Left

except:

raise

return M_Left, D_Left, M_RGB, D_RGB, M_Right, T_left_rgb, R_left_rgb, R_left, R_right, TARGET_MATRIX

def getAlignedDepth(frameDepth, M1, D1, M2, D2, R, T, depthSize,bgrSize, TARGET_MATRIX):

R1, R2, _, _, _, _, _ = cv.stereoRectify(M1, D1, M2, D2, (100, 100), R, T) # The (100,100) doesn't matter as it is not used for calculating the rotation matrices

leftMapX, leftMapY = cv.initUndistortRectifyMap(M1, None, R1, TARGET_MATRIX, depthSize, cv.CV_32FC1)

depthRect = cv.remap(frameDepth, leftMapX, leftMapY, cv.INTER_NEAREST)

newR = np.dot(R2, np.dot(R, R1.T)) # Should be very close to identity

newT = np.dot(R2, T)

combinedExtrinsics = np.eye(4)

combinedExtrinsics[0:3, 0:3] = newR

combinedExtrinsics[0:3, 3] = newT

depthAligned = reprojection.reprojection(depthRect, TARGET_MATRIX, combinedExtrinsics, TARGET_MATRIX)

# Rotate the depth to the RGB frame

cv.imshow('depthAligned', colorizeDepth(depthAligned))

cv.waitKey(0)

R_back = R2.T

mapX, mapY = cv.initUndistortRectifyMap(TARGET_MATRIX, None, R_back, M2, bgrSize, cv.CV_32FC1)

outputAligned = cv.remap(depthAligned, mapX, mapY, cv.INTER_NEAREST)

return outputAligned

def colorizeDepth(frameDepth):

minDepth = np.min(frameDepth[frameDepth != 0])

maxDepth = np.max(frameDepth[frameDepth != 0])

invalidMask = frameDepth == 0

# Log the depth, minDepth and maxDepth

logDepth = np.log(frameDepth, where=frameDepth != 0)

logMinDepth = np.log(minDepth)

logMaxDepth = np.log(maxDepth)

depthFrameColor = np.interp(logDepth, (logMinDepth, logMaxDepth), (0, 255)).astype(

np.uint8

)

depthFrameColor = cv.applyColorMap(depthFrameColor, cv.COLORMAP_JET)

# Set invalid depth pixels to black

depthFrameColor[invalidMask] = 0

return depthFrameColor

return rect_img

if __name__ == "__main__":

# open Device

device = dai.Device()

#get filepaths for images

folder_path= Path('D:/') / 'OneDrive' / 'Greenhub' / 'Plant Science - Dokumente' / 'General' / '7. Thesis' / 'Victor' / 'Master_Thesis' / 'Trial_Nov-Dec' / 'pictures' / 'Plants' / 'K_1' / '0'

stereo_rect_path = folder_path / 'stereo' / 'rectified'

date = '2024-11-22'

bgr_image_path = folder_path / f'{date}.png'

left_image_path = stereo_rect_path / f'{date}_Right_disp.png' #Right is Left and v.v.

depth_path = stereo_rect_path / f'{date}_Right_depth.npy'

#load filepaths

factor = 1000

depth_data = np.load(depth_path)

depth_data *= factor

bgr_img = cv.imread(str(bgr_image_path))

left_disp = cv.imread(str(left_image_path))

bgrSize = (1472,1088)

bgr_img = cv.resize(bgr_img, bgrSize)

depthSize = (depth_data.shape[1], depth_data.shape[0])

M_left, D_left, M_rgb, D_rgb, M_right, T_left_rgb, R_left_rgb, R_left_right, R_right_left, Target_Matrix = get_calibData(device, depthSize=depthSize, bgrSize=bgrSize)

#cv.imshow('depth_og', colorizeDepth(depth_data, depth_min, depth_max))

alignedDepth = getAlignedDepth(depth_data, M_left, D_left, M_rgb, D_rgb, R_left_rgb, T_left_rgb, depthSize, bgrSize, Target_Matrix)

rgb = cv.cvtColor(bgr_img, cv.COLOR_BGR2RGB)

pclDepth = alignedDepth.copy()

alignedDepthColorized = colorizeDepth(alignedDepth)

cv.imwrite('alignedDepth.png', alignedDepthColorized)

cv.imshow('rgb_depth', cv.addWeighted(cv.resize(bgr_img, depthSize), 0.5, cv.resize(alignedDepthColorized, depthSize), 0.5, 0))

print(f'Depth aligned shape: {alignedDepth.shape}')

print(f'RGB shape: {rgb.shape}')

cv.imshow('depth_aligned', cv.resize(alignedDepthColorized, depthSize))

if cv.waitKey(0) == ord('q'):

cv.destroyAllWindows()

which is a slight modification of this code

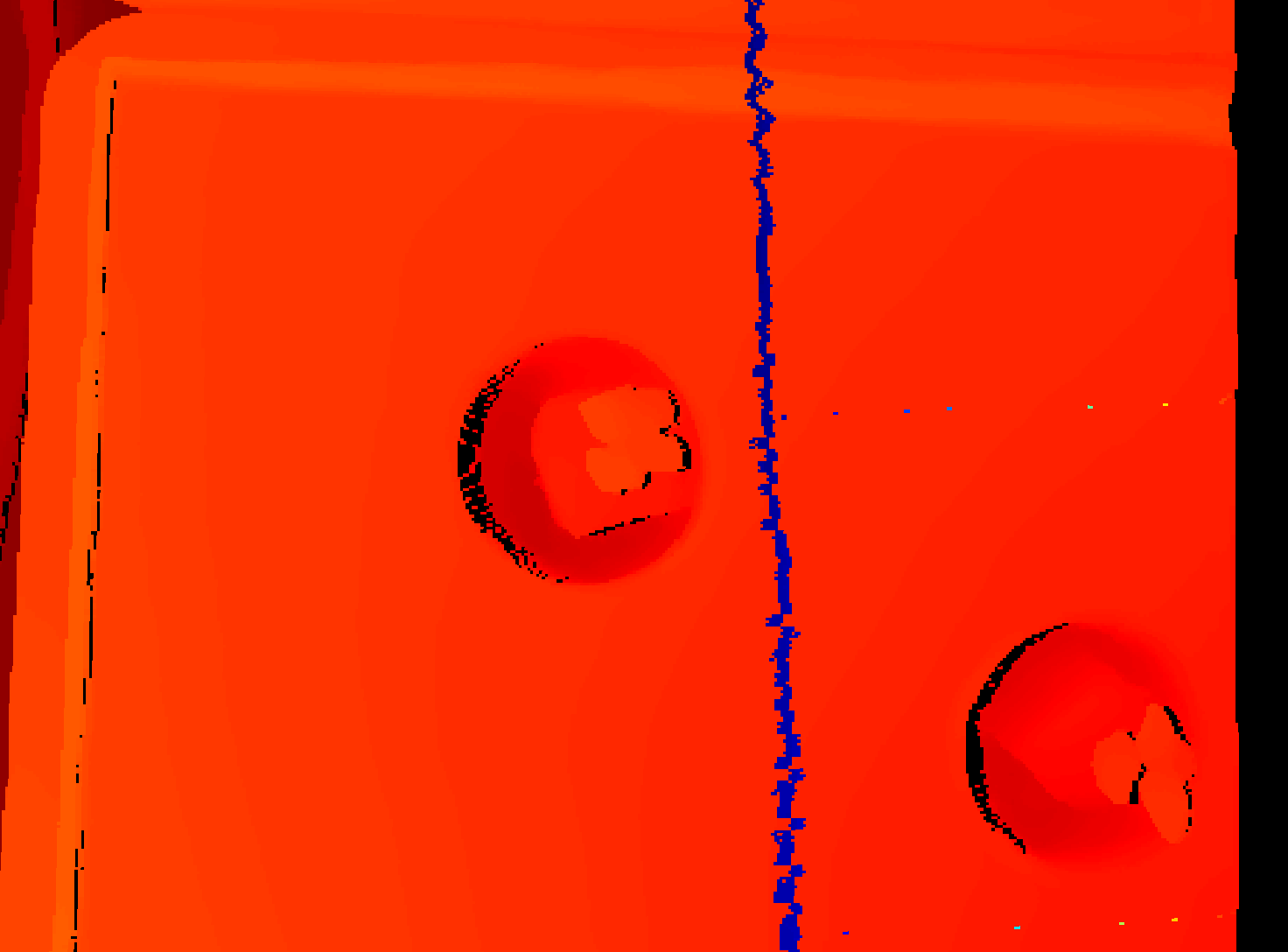

retrieves a weird zoomed in map having that blue line in the middle of my image:

I also tried to do the alignment on cam piping the depth-map and the rgb-image back to the cam using the image-align node while trying to mimic the img-frame message coming from the camera-node usually:

#!/usr/bin/env python3

import depthai as dai

import datetime

import cv2 as cv

import numpy as np

from pathlib import Path

file_path = Path(__file__).parent.resolve()

folder_path= Path('D:/') / 'OneDrive' / 'Greenhub' / 'Plant Science - Dokumente' / 'General' / '7. Thesis' / 'Victor' / 'Master_Thesis' / 'Trial_Nov-Dec' / 'pictures' / 'Plants' / 'K_1' / '0'

stereo_rect_path = folder_path / 'stereo' / 'rectified'

date = '2024-11-22'

rgb_image_path = folder_path / f'{date}.png'

left_image_path = stereo_rect_path / f'{date}_Right_disp.png' #Right is Left and v.v.

depth_path = stereo_rect_path / f'{date}_Right_depth.npy'

def cvFrame_to_daiFrame(cvFrame, depth = False, timestamp_ms=0, instance_num=None, category=None):

dai_frame = dai.ImgFrame()

tstamp = datetime.timedelta(seconds = timestamp_ms // 1000, milliseconds = timestamp_ms % 1000)

dai_frame.setTimestamp(tstamp)

if cvFrame.ndim == 2:

dai_frame.setType(dai.RawImgFrame.Type.GRAY8)

elif cvFrame.ndim == 3:

dai_frame.setType(dai.RawImgFrame.Type.BGR888i)

if depth:

dai_frame.setType(dai.RawImgFrame.Type.RAW16)

dai_frame.setWidth(cvFrame.shape[1])

dai_frame.setHeight(cvFrame.shape[0])

dai_frame.setFrame(cvFrame)

dai_frame.setInstanceNum(instance_num)

dai_frame.setCategory(category)

return dai_frame

def colorizeDepth(frameDepth):

invalidMask = frameDepth == 0

# Log the depth, minDepth and maxDepth

try:

minDepth = np.percentile(frameDepth[frameDepth != 0], 3)

maxDepth = np.percentile(frameDepth[frameDepth != 0], 95)

logDepth = np.log(frameDepth, where=frameDepth != 0)

logMinDepth = np.log(minDepth)

logMaxDepth = np.log(maxDepth)

np.nan_to_num(logDepth, copy=False, nan=logMinDepth)

# Clip the values to be in the 0-255 range

logDepth = np.clip(logDepth, logMinDepth, logMaxDepth)

# Interpolate only valid logDepth values, setting the rest based on the mask

depthFrameColor = np.interp(logDepth, (logMinDepth, logMaxDepth), (0, 255))

depthFrameColor = np.nan_to_num(depthFrameColor)

depthFrameColor = depthFrameColor.astype(np.uint8)

depthFrameColor = cv.applyColorMap(depthFrameColor, cv.COLORMAP_JET)

# Set invalid depth pixels to black

depthFrameColor[invalidMask] = 0

except IndexError:

# Frame is likely empty

depthFrameColor = np.zeros((frameDepth.shape[0], frameDepth.shape[1], 3), dtype=np.uint8)

except Exception as e:

raise e

return depthFrameColor

device = dai.Device()

calibrationHandler = device.readCalibration()

leftDistortion = calibrationHandler.getDistortionCoefficients(dai.CameraBoardSocket.CAM_B)

leftDistortionModel = calibrationHandler.getDistortionModel(dai.CameraBoardSocket.CAM_B)

if leftDistortionModel != dai.CameraModel.Perspective:

raise RuntimeError("Unsupported distortion model for RGB camera. This example supports only Perspective model.")

pipeline = dai.Pipeline()

rgbIn = pipeline.create(dai.node.XLinkIn)

rgbOut = pipeline.create(dai.node.XLinkOut)

leftIn = pipeline.create(dai.node.XLinkIn)

leftOut = pipeline.create(dai.node.XLinkOut)

align = pipeline.create(dai.node.ImageAlign)

out = pipeline.create(dai.node.XLinkOut)

#Settings

rgbIn.setNumFrames(1)

rgbIn.setMaxDataSize(39237121)

leftIn.setNumFrames(1)

#Linkings

rgbIn.out.link(align.inputAlignTo)

rgbIn.out.link(rgbOut.input)

leftIn.out.link(align.input)

leftIn.out.link(leftOut.input)

align.outputAligned.link(out.input)

#Streams

rgbIn.setStreamName("rgb_in")

rgbOut.setStreamName("rgb_out")

leftIn.setStreamName("left_in")

leftOut.setStreamName("left_out")

out.setStreamName("align_out")

with device:

device.startPipeline(pipeline)

#load images from drive

rgb_image = cv.imread(str(rgb_image_path))

left_image = cv.imread(str(left_image_path), cv.IMREAD_GRAYSCALE)

depth = np.load(str(depth_path))

#depth= colorizeDepth(depth)

#depth = cv.cvtColor(depth, cv.COLOR_BGR2GRAY)

cv.imshow('depth', depth)

cv.waitKey(0)

print(f'RGB_type: {rgb_image.dtype}')

print(f'Left_type: {left_image.dtype}')

print(f'Depth_type: {depth.dtype}')

rgb_image = cv.resize(rgb_image, (1440, 1080))

#set streamport for caminput

in_q_left = device.getInputQueue("left_in", maxSize=1, blocking=True)

in_q_rgb = device.getInputQueue("rgb_in", maxSize=1, blocking=True)

#set streamport for camoutput

out_q_left = device.getOutputQueue("left_out")

out_q_rgb = device.getOutputQueue("rgb_out")

out_q_align = device.getOutputQueue("align_out")

while True:

#send images to cam

dai_left_frame = cvFrame_to_daiFrame(left_image, instance_num=1, category=1)

dai_rgb_frame = cvFrame_to_daiFrame(rgb_image, instance_num=0, category=0)

dai_depth_frame = cvFrame_to_daiFrame(depth, depth=True, instance_num=1, category=1)

in_q_left.send(dai_depth_frame)

in_q_rgb.send(dai_rgb_frame)

#get images from cam

received_rgb_frame = out_q_rgb.tryGet()

if received_rgb_frame is not None:

cv_frame_received_rgb = received_rgb_frame.getCvFrame()

cv.imshow("RGB", cv_frame_received_rgb)

received_left_frame =out_q_left.tryGet()

if received_left_frame is not None:

cv_frame_received_left = received_left_frame.getCvFrame()

cv.imshow("Left", cv_frame_received_left)

received_align_frame = out_q_align.get()

if received_align_frame is not None:

cv_frame_received_align = received_align_frame.getCvFrame()

cv.imshow("Align", cv_frame_received_align)

if cv.waitKey(1) == ord('q'):

break

i was pretty succesfull with that one using the 'left_frame' which is basically a visualized disparity frame

however. As soon as I try to pipe the depth-map beeing a float32 nd.array. It's completely messing up:

Any help on how to get the proper alignment would be very much appreciated!

This is my resized rgb-image: