Hello everyone,

I trained a YOLOv4 object-detection model in PyTorch, exported it to ONNX, and converted the ONNX file to a .blob for a custom RVC3-based edge module.

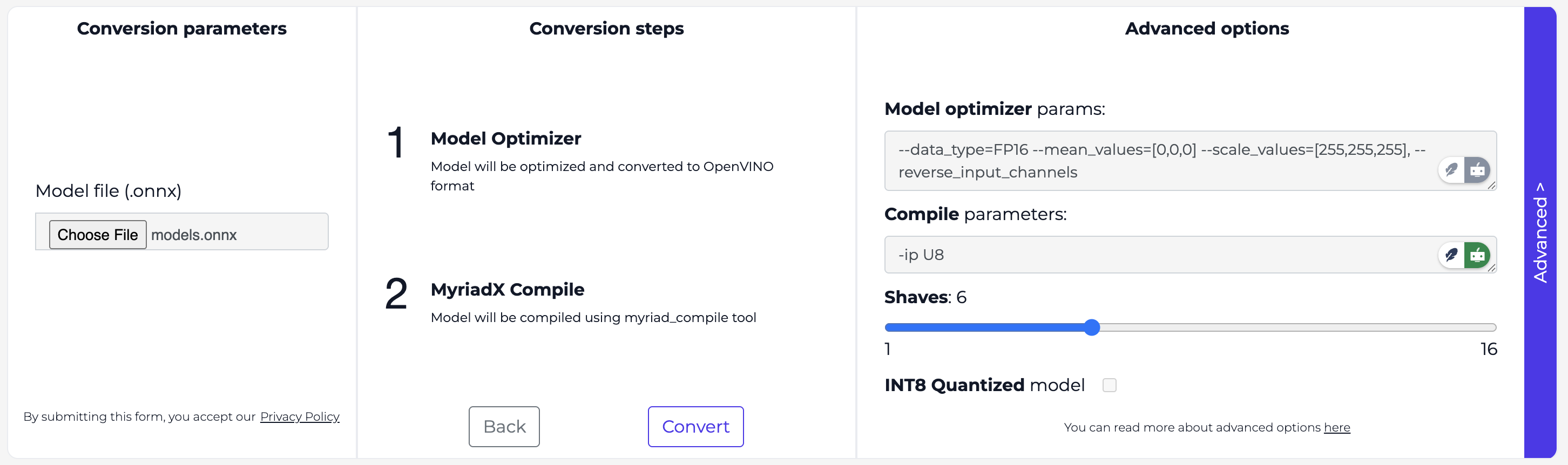

Conversion options: `--data_type=FP16 --mean_values=[0,0,0] --scale_values=[255,255,255] --reverse_input_channels`
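For reference, here is a minimal sketch of what those flags are assumed to bake into the blob, written as the equivalent host-side NumPy preprocessing. The helper name `host_side_equivalent` is hypothetical. If this assumption holds, the blob itself expects raw 0-255 BGR pixels, so the host should not divide by 255 or swap channels a second time.

```python
import numpy as np

def host_side_equivalent(bgr_hwc_uint8: np.ndarray) -> np.ndarray:
    """Hypothetical helper: what the blob is assumed to do internally.

    Assumes --reverse_input_channels swaps BGR -> RGB and each channel is then
    computed as (x - mean) / scale with mean=0 and scale=255. Because the mean
    and scale values are identical across channels, their order relative to
    the channel swap does not matter here.
    Returns a float32 CHW (planar) array, the layout the network consumes.
    """
    rgb = bgr_hwc_uint8[..., ::-1]                          # reverse channel order
    mean = np.array([0, 0, 0], dtype=np.float32)
    scale = np.array([255, 255, 255], dtype=np.float32)
    normalized = (rgb.astype(np.float32) - mean) / scale    # 0..255 -> 0..1
    return normalized.transpose(2, 0, 1)                    # HWC -> CHW
```

Comparing this against the preprocessing of the working ONNX pipeline on the PC is a quick way to rule out a double normalization or a double channel swap.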

However, when I ran it on an image, I ran into two issues:

- The detected class names look correct, but every bounding box is in the wrong position. The boxes are not consistently shifted in one direction; the offsets look random.

- `setConfidenceThreshold` and `setIouThreshold` do not seem to have any effect on the detection results.
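Since the classes look right while the boxes do not, it may help to spell out how the `anchors` and `anchorMasks` values in the script are typically consumed. The sketch below uses textbook YOLOv3-style decoding; it is not DepthAI's parser code, and the helper names (`anchors_for`, `decode_cell`) are hypothetical. Whether the on-device parser uses exactly these formulas (for example, no YOLOv4 `scale_x_y` term) and whether the exported ONNX head still emits raw, undecoded values are assumptions to verify: if the export already decodes boxes, a parser that decodes them again could misplace boxes while class scores still look plausible.

```python
import math

ANCHORS = [16, 5, 30, 9, 48, 15, 67, 25, 109, 35, 84, 53, 152, 60, 223, 96, 367, 155]
ANCHOR_MASKS = {"side13": [6, 7, 8], "side26": [3, 4, 5], "side52": [0, 1, 2]}
INPUT_SIZE = 416

def anchors_for(side_name):
    """Resolve mask indices into (w, h) pairs: index i -> ANCHORS[2i], ANCHORS[2i+1]."""
    return [(ANCHORS[2 * i], ANCHORS[2 * i + 1]) for i in ANCHOR_MASKS[side_name]]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def decode_cell(tx, ty, tw, th, cx, cy, grid, anchor_wh):
    """Decode one raw prediction at grid cell (cx, cy) of a grid x grid output.

    Returns a normalized [xmin, ymin, xmax, ymax] box in the 416x416
    letterboxed frame (assumed YOLOv3-style formulas).
    """
    stride = INPUT_SIZE / grid              # 32, 16 or 8 pixels per cell
    bx = (sigmoid(tx) + cx) * stride        # box centre in pixels
    by = (sigmoid(ty) + cy) * stride
    bw = anchor_wh[0] * math.exp(tw)        # box size in pixels
    bh = anchor_wh[1] * math.exp(th)
    return [(bx - bw / 2) / INPUT_SIZE, (by - bh / 2) / INPUT_SIZE,
            (bx + bw / 2) / INPUT_SIZE, (by + bh / 2) / INPUT_SIZE]
```

For example, the coarsest 13x13 output uses the three largest anchors, `anchors_for("side13")`, i.e. (152, 60), (223, 96) and (367, 155).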

On my computer, I run the ONNX model with a letterbox resize and `non_max_suppression`, and that works well. I tried to mimic the same setup here; so far I have only ported the letterbox function to this script for testing.
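As a point of comparison for the two thresholds, here is a generic confidence-filter plus greedy NMS sketch in NumPy. It is not the `non_max_suppression` function from my ONNX pipeline, nor DepthAI's on-device implementation; `iou` and `filter_and_nms` are hypothetical names.

```python
import numpy as np

def iou(box: np.ndarray, boxes: np.ndarray) -> np.ndarray:
    """IoU between one box and N boxes, all given as [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def filter_and_nms(boxes, scores, conf_thresh, iou_thresh):
    """Keep boxes with score >= conf_thresh, then repeatedly take the best box
    and drop remaining boxes whose IoU with it exceeds iou_thresh.
    Returns indices into the original arrays, highest score first."""
    candidates = np.flatnonzero(scores >= conf_thresh)
    order = candidates[np.argsort(-scores[candidates])]
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        order = rest[iou(boxes[best], boxes[rest]) <= iou_thresh]
    return keep
```

Under these semantics, a confidence threshold of 1 keeps almost nothing and an IoU threshold of 1 never suppresses anything, assuming the on-device parser follows the same convention. That is worth keeping in mind when reading the `setConfidenceThreshold(1)` and `setIouThreshold(1)` calls in the script.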

Below is the code I run on the host to detect objects with the blob. I have confirmed that the anchors and anchorMasks are correct and match the training configuration (the actual labelMap entries were removed on purpose).

Any ideas would be appreciated! Thank you.

```python
import time
import cv2
import depthai as dai
import numpy as np
from pathlib import Path

###############################################################################
# Helper Functions #
###############################################################################

def letterbox(image, size=(416, 416)):
    """
    Resize image with unchanged aspect ratio using padding,
    and return the resized image along with the scaling factor and padding.
    """
    shape = np.shape(image)[:2]  # Get the original image shape (height, width)
    if isinstance(size, int):
        size = (size, size)

    # Compute scaling ratio
    r = min(size[0] / shape[0], size[1] / shape[1])
    new_unpad = (int(round(shape[1] * r)), int(round(shape[0] * r)))  # New width, height
    dw, dh = size[1] - new_unpad[0], size[0] - new_unpad[1]  # Padding width and height
    dw /= 2
    dh /= 2

    # Resize the image if necessary
    if shape[::-1] != new_unpad:
        image = cv2.resize(image, new_unpad, interpolation=cv2.INTER_LINEAR)

    # Compute padding values
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))

    # Add border (padding)
    letterboxed = cv2.copyMakeBorder(image, top, bottom, left, right, cv2.BORDER_CONSTANT, value=(128, 128, 128))
    return letterboxed, r, left, top

###############################################################################
# Model and Pipeline Setup #
###############################################################################

# Load model blob
nnPath = str((Path(__file__).parent / Path('model_data/models-0255-reverse_input_channels.blob')).resolve().absolute())
imagePath = "image.jpg"

# Load class labels
labelMap = [
    "XX"
]

# Create DepthAI pipeline
pipeline = dai.Pipeline()

xIn = pipeline.create(dai.node.XLinkIn)
xIn.setStreamName("inFrame")

detectionNetwork = pipeline.create(dai.node.YoloDetectionNetwork)
detectionNetwork.setBlobPath(nnPath)
detectionNetwork.setConfidenceThreshold(1)
detectionNetwork.setNumClasses(len(labelMap))
detectionNetwork.setCoordinateSize(4)
detectionNetwork.setIouThreshold(1)
detectionNetwork.setNumInferenceThreads(1)
detectionNetwork.input.setBlocking(False)

# Set anchors
anchors = [16,5, 30,9, 48,15, 67,25, 109,35, 84,53, 152,60, 223,96, 367,155]
anchorMasks = {
    "side13": [6, 7, 8],
    "side26": [3, 4, 5],
    "side52": [0, 1, 2]
}
detectionNetwork.setAnchors(anchors)
detectionNetwork.setAnchorMasks(anchorMasks)

nnOut = pipeline.create(dai.node.XLinkOut)
nnOut.setStreamName("nn")

xIn.out.link(detectionNetwork.input)
detectionNetwork.out.link(nnOut.input)

###############################################################################
# 1) Preprocess and Send Image to DepthAI #
###############################################################################

# Load image (BGR)
orig_image = cv2.imread(imagePath)
if orig_image is None:
    raise FileNotFoundError(f"Image {imagePath} not found")

# Resize with letterbox
resized_image, scale, pad_x, pad_y = letterbox(orig_image, (416, 416))
resized_image = resized_image.astype(np.uint8)

# Convert to CHW
planar_image = resized_image.transpose(2, 0, 1).copy()

###############################################################################
# 2) Run Inference and Process Results #
###############################################################################

with dai.Device(pipeline) as device:
    qIn = device.getInputQueue("inFrame")
    qDet = device.getOutputQueue("nn")

    imgFrame = dai.ImgFrame()
    imgFrame.setData(planar_image.tobytes())
    imgFrame.setTimestamp(time.monotonic())
    imgFrame.setWidth(416)
    imgFrame.setHeight(416)
    imgFrame.setType(dai.ImgFrame.Type.BGR888p)
    qIn.send(imgFrame)

    inDet = qDet.get()
    detections = inDet.detections

    if not detections:
        print("No objects detected.")
    else:
        print("Objects detected, processing...")
        for det in detections:
            # First, get the coordinates in the letterboxed 416x416 image.
            x1_letter = det.xmin * 416
            y1_letter = det.ymin * 416
            x2_letter = det.xmax * 416
            y2_letter = det.ymax * 416

            # Then, reverse the letterbox transformation:
            # subtract the padding and scale by the inverse of the resize scale.
            x1 = int((x1_letter - pad_x) / scale)
            y1 = int((y1_letter - pad_y) / scale)
            x2 = int((x2_letter - pad_x) / scale)
            y2 = int((y2_letter - pad_y) / scale)

            # Ensure coordinates are within the original image bounds
            x1 = max(0, min(orig_image.shape[1], x1))
            y1 = max(0, min(orig_image.shape[0], y1))
            x2 = max(0, min(orig_image.shape[1], x2))
            y2 = max(0, min(orig_image.shape[0], y2))

            # Draw bounding box and label
            cv2.rectangle(orig_image, (x1, y1), (x2, y2), (0, 255, 0), 2)
            class_id = int(det.label) if hasattr(det, 'label') else 0
            label_text = labelMap[class_id] if class_id < len(labelMap) else f"ID {class_id}"
            # det.confidence is a float in [0, 1]; scale it to a percentage
            conf_text = f"{int(det.confidence * 100)}%"
            cv2.putText(orig_image, f"{label_text} {conf_text}", (x1, y1 - 5),
                        cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 1)
            print(f"Detected: {label_text} ({conf_text}) at [{x1}, {y1}, {x2}, {y2}]")

cv2.imshow("Detection Results", orig_image)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
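The reverse letterbox mapping in the detection loop can also be checked in isolation, away from the device. The sketch below reproduces the scale and padding arithmetic of the script's `letterbox()` for a square target and then inverts it; `letterbox_params` and `unletterbox` are hypothetical helpers, and the 640x480 image size is only an example. If a known box survives the round trip, the host-side arithmetic is not what misplaces the boxes.

```python
def letterbox_params(orig_w, orig_h, size=416):
    """Scale factor and left/top padding, computed the same way as letterbox()."""
    r = min(size / orig_h, size / orig_w)
    new_w, new_h = int(round(orig_w * r)), int(round(orig_h * r))
    pad_x = int(round((size - new_w) / 2 - 0.1))   # matches `left`
    pad_y = int(round((size - new_h) / 2 - 0.1))   # matches `top`
    return r, pad_x, pad_y

def unletterbox(norm_box, r, pad_x, pad_y, size=416):
    """Map a normalized [xmin, ymin, xmax, ymax] box from the letterboxed
    frame back to pixel coordinates in the original image."""
    xmin, ymin, xmax, ymax = (v * size for v in norm_box)
    return ((xmin - pad_x) / r, (ymin - pad_y) / r,
            (xmax - pad_x) / r, (ymax - pad_y) / r)
```

For a 640x480 image this gives a scale of 0.65, no horizontal padding and 52 px of padding on top.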