Hi,

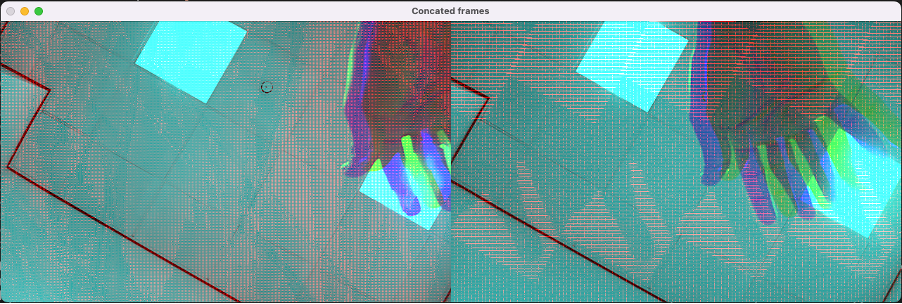

I am trying to use the Cast node to display concatenated left and right mono camera images. When I run the "cast_concat.py" example, I can view the concatenated frames as expected.

But if I remove the RGB camera image and feed only the two mono images to the PyTorch frame-concatenation NN model created using this example, I get the error below:

```
    cv2.imshow("Concated frames", inCast.getCvFrame())
                                  ^^^^^^^^^^^^^^^^^^^
RuntimeError: ImgFrame doesn't have enough data to encode specified frame, required 1536000, actual 512000. Maybe metadataOnly transfer was made?
```
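
A quick sanity check on the two byte counts in the error, as a minimal sketch. The 640x400 mono resolution and the one-byte-per-pixel layout are assumptions for illustration; the error itself only gives the two totals.

```python
# Byte counts copied from the RuntimeError above.
required = 1536000  # bytes the frame's declared type/size calls for
actual = 512000     # bytes actually present in the ImgFrame

ratio = required / actual
print(ratio)

# Assumption (not stated in the error): each mono frame is 640x400
# at 1 byte per pixel, and two of them are concatenated.
mono_w, mono_h = 640, 400
two_mono_bytes = 2 * mono_w * mono_h

print(two_mono_bytes == actual)        # single-channel size vs. actual
print(3 * two_mono_bytes == required)  # three-channel size vs. required
```

Under those assumptions, the data present matches a single-channel frame of the concatenated size, while the required size matches a three-channel frame of the same dimensions. That would point at the output frame type still being a three-channel one, but this is only a guess from the numbers, not a confirmed diagnosis.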

Please see all files for an MRE here. Please could you let me know what I am doing wrong?

Best,

AB