Hi there,

I have a custom stereo depth network (Unimatch) that I would like to deploy on my OAK-D Pro W (RVC2).

As the Blobconverter states, RVC2 only supports OpenVINO opset_version 8 or older (OpenVINO 2022.1).

Now, when I try to export my traced torch model to ONNX with opset 8, I get a warning that the behavior of upsample layers has changed and that I should use opset_version 11 or newer:

UserWarning: You are trying to export the model with onnx:Upsample for ONNX opset version 8. This operator might cause results to not match the expected results by PyTorch.

ONNX's Upsample/Resize operator did not match Pytorch's Interpolation until opset 11. Attributes to determine how to transform the input were added in onnx:Resize in opset 11 to support Pytorch's behavior (like coordinate_transformation_mode and nearest_mode).

We recommend using opset 11 and above for models using this operator.
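To make the mismatch in this warning concrete, here is a minimal sketch of how an output index is mapped back to an input coordinate under three of the `coordinate_transformation_mode` values defined for onnx:Resize. The function name `src_coord` is made up for illustration. The assumption that the older Upsample operator behaves like `asymmetric`, and that PyTorch's `align_corners=False` corresponds to `half_pixel`, is my understanding and has not been checked against the Blobconverter.

```python
def src_coord(x_out, in_len, out_len, mode):
    """Map an output index to a (fractional) input coordinate along one axis."""
    scale = out_len / in_len
    if mode == "asymmetric":
        # Assumed to match the legacy Upsample behavior (pre opset 11).
        return x_out / scale
    if mode == "half_pixel":
        # Assumed to match PyTorch interpolate with align_corners=False.
        return (x_out + 0.5) / scale - 0.5
    if mode == "align_corners":
        # Matches PyTorch interpolate with align_corners=True.
        if out_len == 1:
            return 0.0
        return x_out * (in_len - 1) / (out_len - 1)
    raise ValueError(f"unknown mode: {mode}")


in_len, out_len = 4, 8
for mode in ("asymmetric", "half_pixel", "align_corners"):
    coords = [round(src_coord(i, in_len, out_len, mode), 3) for i in range(out_len)]
    print(f"{mode:14s} {coords}")
```

The three modes sample the input at different positions for the same output index, which is why an opset 8 export can produce results that differ from the PyTorch model even when it succeeds.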

Also, I get the error that upsample_bilinear2d is not implemented in opset_version 8:

Unsupported: ONNX export of operator upsample_bilinear2d, align_corners == True. Please feel free to request support or submit a pull request on PyTorch GitHub: https://github.com/pytorch/pytorch/issues
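Since this error concerns only the `align_corners == True` case, it may help to note that this kind of upsampling is a fixed linear map along each axis, so in principle it can be written as a multiplication with a precomputed weight matrix instead of an Upsample/Resize operator. The sketch below shows the 1D case in plain Python; `align_corners_matrix` and `apply_matrix` are hypothetical helper names, and whether such a rewrite exports cleanly to opset 8 or runs fast enough on RVC2 is untested here.

```python
def align_corners_matrix(in_len, out_len):
    """Build an out_len x in_len weight matrix for 1D linear
    upsampling with align_corners=True semantics."""
    weights = [[0.0] * in_len for _ in range(out_len)]
    for o in range(out_len):
        # With align_corners=True the first and last samples map exactly.
        src = 0.0 if out_len == 1 else o * (in_len - 1) / (out_len - 1)
        lo = min(int(src), in_len - 1)   # left neighbor
        hi = min(lo + 1, in_len - 1)     # right neighbor (clamped at the edge)
        frac = src - lo
        weights[o][lo] += 1.0 - frac
        weights[o][hi] += frac
    return weights


def apply_matrix(weights, signal):
    """Multiply the weight matrix with a 1D signal."""
    return [sum(w * s for w, s in zip(row, signal)) for row in weights]


W = align_corners_matrix(3, 5)
print(apply_matrix(W, [0.0, 10.0, 20.0]))  # [0.0, 5.0, 10.0, 15.0, 20.0]
```

Bilinear interpolation is separable, so the 2D case would apply one such matrix along the height and another along the width.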

All this suggests to me that I have to export the model with opset_version 11, which works fine. But of course the Blobconverter doesn't like that and gives me this error:

Cannot create Interpolate layer /Resize id:18 from unsupported opset: opset11

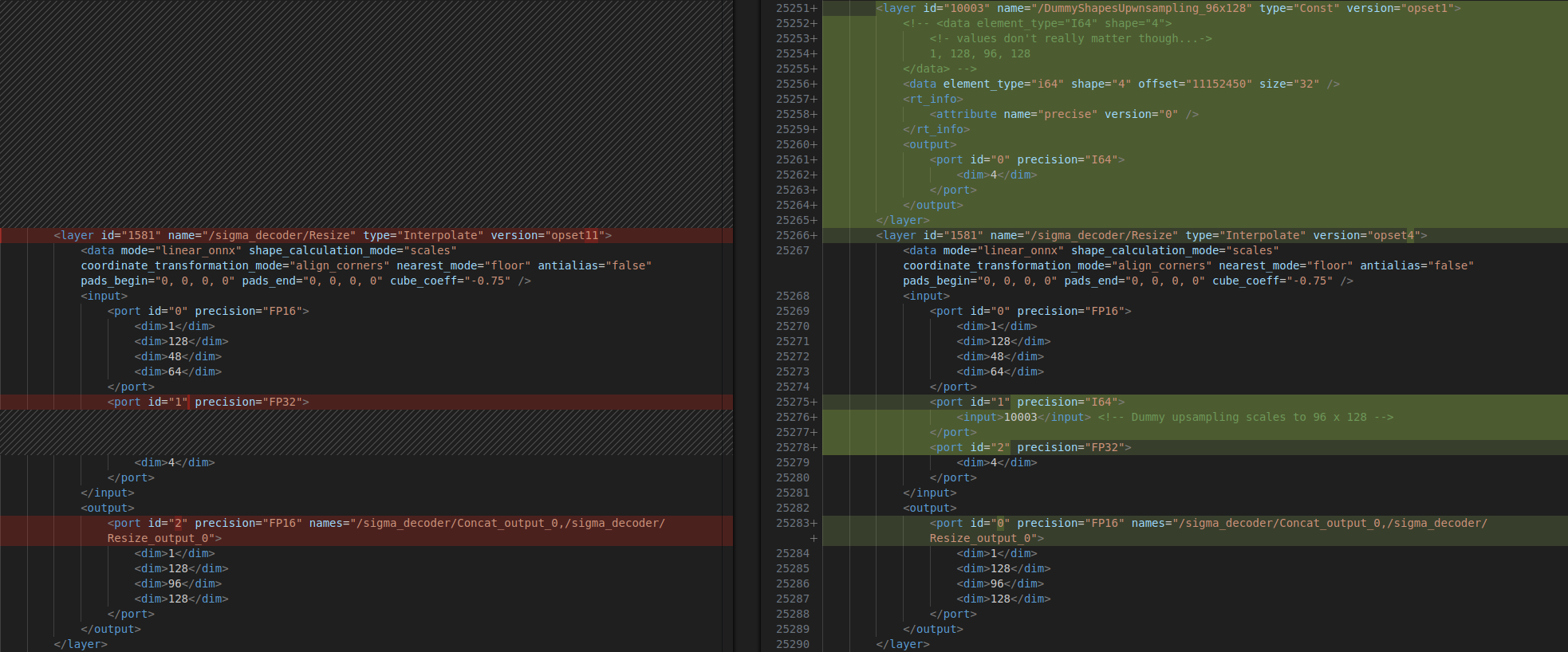

I have tried manually adjusting the OpenVINO IR: in the XML, I changed the opset version of the type="Interpolate" layers (the only layers with an opset newer than 8) to opset4 (the layer version before opset11) and manually added input ports to match the old layer specification. I have also added the missing constants to the .bin file.
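For anyone who wants to inspect an IR in the same way, here is a minimal sketch that lists every Interpolate layer together with its opset version and number of input ports, which are the two things edited above. The helper name `list_interpolate_layers` and the tiny `SAMPLE_IR` string are made up for illustration; a real IR file has many more layers and attributes, and this only reads the XML, it does not patch it.

```python
import xml.etree.ElementTree as ET


def list_interpolate_layers(xml_text):
    """Return (id, name, version, input_port_count) for each Interpolate layer."""
    root = ET.fromstring(xml_text)
    report = []
    for layer in root.iter("layer"):
        if layer.get("type") != "Interpolate":
            continue
        input_node = layer.find("input")
        n_inputs = 0 if input_node is None else len(input_node.findall("port"))
        report.append((layer.get("id"), layer.get("name"),
                       layer.get("version"), n_inputs))
    return report


# Hand-written toy IR fragment, not taken from the real model.
SAMPLE_IR = """<net name="toy" version="11">
  <layers>
    <layer id="18" name="/Resize" type="Interpolate" version="opset11">
      <input>
        <port id="0"/>
        <port id="1"/>
      </input>
      <output><port id="2"/></output>
    </layer>
    <layer id="19" name="/Conv" type="Convolution" version="opset1">
      <input><port id="0"/><port id="1"/></input>
      <output><port id="2"/></output>
    </layer>
  </layers>
</net>"""

for row in list_interpolate_layers(SAMPLE_IR):
    print(row)
```

For a real model, the same function can be called on the contents of the .xml file read from disk.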

This doesn't work, though. I am now stuck at this Blobconverter error, which, unlike the previous ones, I cannot parse:

Check 'i < m_inputs.size()' failed at core/src/node.cpp:451:

index '2' out of range in get_input_element_type(size_t i)

And even if it did work, it would probably yield unpredictable results…

Now to my question:

I really like the idea of running my depth inference on the camera. According to this chart and my model characteristics, I can expect almost 10 fps, which would be impressive and very much usable. However, the outdated supported opset_version holds me back, which I find quite sad. Does anyone have an idea how I can still export the Unimatch model with opset_version 8 and deploy it on the OAK?

Any help would be greatly appreciated!

Cheers,

Leonard