Hi all,

I am trying to export the weights of a yolov4-tiny model that I trained with darknet (https://github.com/hank-ai/darknet) so I can run it on an OAK-D type camera (actually a custom baseboard using the SoM).

Attached is my cfg file.

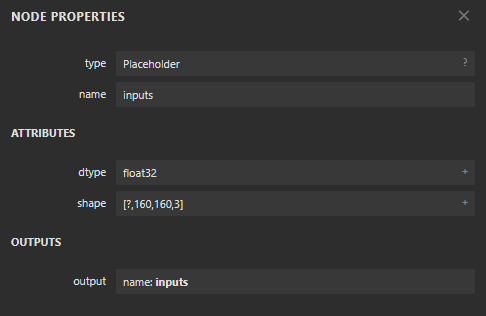

After training, I used the yolo2openvino repo (https://github.com/luxonis/yolo2openvino) to convert my model to TF model weights with the following command:

python ~/repos/yolo2openvino/convert_weights_pb.py \
--yolo 4 \
--weights_file ~/nn/test_project/test_project_best.weights \
--class_names ~/nn/test_project/test_project.names \
--output ~/nn/test_project/test_project.pb \
--tiny \
-h 160 \
-w 160 \
-a 4,18,9,35,15,61,27,100,48,138,95,151

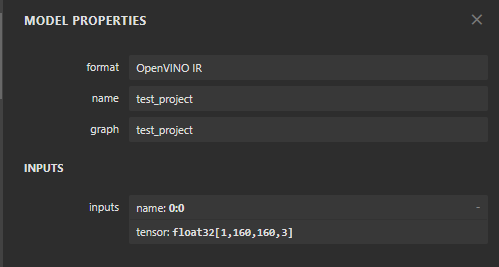

Next, I converted to the OpenVINO IR format using the model optimizer (v2022.1), json config attached:

mo \
--input_model ~/nn/test_project/test_project.pb \
--tensorflow_use_custom_operations_config ~/nn/test_project/test_project.json \
--batch 1 \
--data_type FP16 \
--reverse_input_channel \
--model_name test_project \
--output_dir ~/nn/test_project/

Finally, I converted to a blob file using blobconverter:

python -m blobconverter \
-ox nn/test_project/test_project.xml \
-ob nn/test_project/test_project.bin \
-sh 6 \
-v 2022.1 \
-o nn/test_project

Uploading this blob to the device results in the following warning and errors:

[14442C1071F33FD700] [1.1] [1.803] [SpatialDetectionNetwork(8)] [warning] Input image (160x160) does not match NN (3x160)

[14442C1071F33FD700] [1.1] [1.813] [SpatialDetectionNetwork(8)] [error] Mask is not defined for output layer with width '5'. Define at pipeline build time using: 'setAnchorMasks' for 'side5'.

[14442C1071F33FD700] [1.1] [1.813] [SpatialDetectionNetwork(8)] [error] Mask is not defined for output layer with width '10'. Define at pipeline build time using: 'setAnchorMasks' for 'side10'.

Here is a snippet from my YoloSpatialDetectionNetwork configuration:

spatial_nn.setNumClasses(1)
spatial_nn.setCoordinateSize(4)
spatial_nn.setAnchors([4, 18, 9, 35, 15, 61, 27, 100, 48, 138, 95, 151])
spatial_nn.setAnchorMasks({"side26": [0, 1, 2], "side13": [3, 4, 5]})
spatial_nn.setIouThreshold(0.5)
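
To make sense of the 'side5' and 'side10' names in the errors, here is a minimal sketch of how I think the output grid sizes relate to the input size. It assumes the two yolov4-tiny detection heads downsample by 32 and 16 (my assumption, not something I have verified against the cfg), and `yolo_side_keys` is a hypothetical helper name, not part of DepthAI.

```python
def yolo_side_keys(input_size, strides=(32, 16)):
    """Return the 'sideN' key for each output grid.

    Assumption: each detection head has a grid of input_size // stride
    cells per side, and the strides are 32 and 16 for yolov4-tiny.
    """
    return ["side{}".format(input_size // s) for s in strides]


# 160x160 input (what I passed to the converter with -h 160 -w 160)
print(yolo_side_keys(160))

# 416x416 input, for comparison with the keys in my setAnchorMasks call
print(yolo_side_keys(416))
```

If that assumption holds, a 160x160 input gives grids of 5 and 10 cells, which matches the errors, while the 'side26' and 'side13' keys in my configuration would correspond to a 416x416 input.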

It is clear that somewhere along the line I've messed up the layers during the conversion.

Any tips on where I can start looking for errors?

I am using Python 3.7.17