Hi @jakaskerl

Thanks for sharing the link to the blob converter. I was previously using the blobconverter Python module, which may have been causing some of the issues. After converting the model with the link you provided, I can run inference on my custom YOLO detector. However, one issue persists.

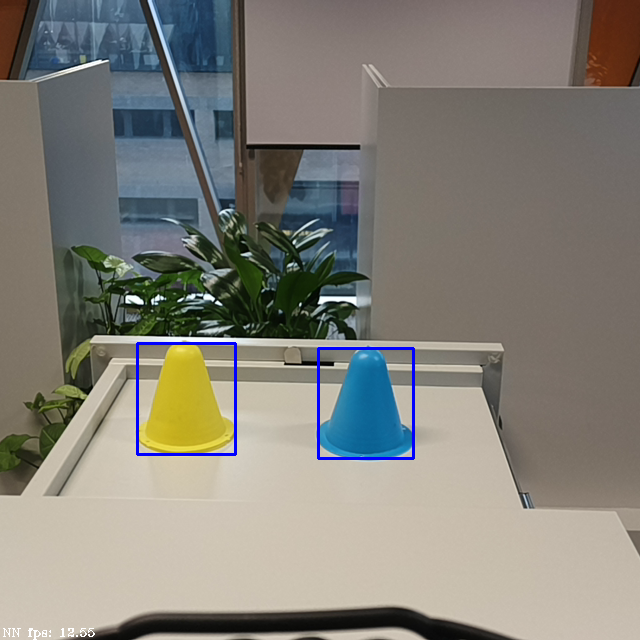

My custom object detector has only 2 classes. It was trained with a custom dataset/training pipeline, using Autodistill for both dataset generation and training. After conversion with the given blob converter, the bounding boxes are decoded properly, but the detection labels and confidence scores are wrong. Here is part of my modified detection code and a sample of the output for reference:

Changes for the class labels:

    # Custom label texts / class maps
    labelMap = [
        "yellow cone", "blue cone"
    ]

Custom displayFrame function:

    def displayFrame(name, frame):
        color = (255, 0, 0)
        for detection in detections:
            bbox = frameNorm(frame, (detection.xmin, detection.ymin, detection.xmax, detection.ymax))
            # cv2.putText(frame, labelMap[detection.label], (bbox[0] + 10, bbox[1] + 20), cv2.FONT_HERSHEY_TRIPLEX, 0.5, 255) # Commented out since these lines cause error
            # cv2.putText(frame, f"{int(detection.confidence * 100)}%", (bbox[0] + 10, bbox[1] + 40), cv2.FONT_HERSHEY_TRIPLEX, 0.5, 255) # Commented out since these lines cause error
            cv2.rectangle(frame, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color, 2)
            print(f"Detection Label: {detection.label}, Confidence Threshold: {detection.confidence*100} %")
        # Show the frame
        cv2.imshow(name, frame)

Full code here

Output Preview:

Erroneous output:

    (env) envai4r@ai4r:~/cone-localizer$ python rgb_yolo_simple.py
    Detection Label: 2, Confidence Threshold: 552.734375 %
    Detection Label: 2, Confidence Threshold: 543.75 %
    qt.qpa.plugin: Could not find the Qt platform plugin "wayland" in "/home/ai4r/cone-localizer/env/lib/python3.11/site-packages/cv2/qt/plugins"
    Detection Label: 2, Confidence Threshold: 596.09375 %
    Detection Label: 2, Confidence Threshold: 533.203125 %
    Detection Label: 2, Confidence Threshold: 605.078125 %
    Detection Label: 2, Confidence Threshold: 519.921875 %
    Detection Label: 2, Confidence Threshold: 603.90625 %
    Detection Label: 2, Confidence Threshold: 521.484375 %
    Detection Label: 2, Confidence Threshold: 607.03125 %
    Detection Label: 2, Confidence Threshold: 528.90625 %
    Detection Label: 77, Confidence Threshold: 181100.0 %
    Detection Label: 78, Confidence Threshold: 4162.5 %
    Detection Label: 52, Confidence Threshold: 3366400.0 %
    Detection Label: 34, Confidence Threshold: 5008000.0 %
    Detection Label: 57, Confidence Threshold: 13362.5 %
    Detection Label: 40, Confidence Threshold: 816.40625 %
    Detection Label: 15, Confidence Threshold: 777.734375 %
    Detection Label: 32, Confidence Threshold: 892.1875 %
    Detection Label: 7, Confidence Threshold: 821.09375 %
    Detection Label: 32, Confidence Threshold: 890.625 %
    Detection Label: 32, Confidence Threshold: 849.21875 %
    Detection Label: 32, Confidence Threshold: 897.65625 %
    Detection Label: 32, Confidence Threshold: 855.46875 %
    Detection Label: 68, Confidence Threshold: 1005.46875 %
    Detection Label: 32, Confidence Threshold: 850.78125 %
    Detection Label: 32, Confidence Threshold: 1046.875 %
    Detection Label: 32, Confidence Threshold: 1030.46875 %
    Detection Label: 50, Confidence Threshold: 5017600.0 %
    Detection Label: 32, Confidence Threshold: 973.4375 %
    Detection Label: 68, Confidence Threshold: 5840000.0 %

I was expecting the detection label to be either 0 or 1 and the confidence to fall in a reasonable range (0 to 100 %). The model detected properly when I used the NeuralNetwork node with the blob converted by blobconverter and did the decoding on the computer.
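To make that expectation concrete, here is a minimal sketch of the sanity check I have in mind. `is_plausible` is a hypothetical helper of my own, not part of the DepthAI API, and it assumes `detection.confidence` is meant to be a fraction between 0 and 1 (which is how the display code above treats it).

```python
def is_plausible(label, confidence, num_classes=2):
    """Return True if a decoded detection looks valid for a model with num_classes classes.

    Hypothetical helper, not a DepthAI function. Assumes labels are 0-based
    class indices and confidence is a fraction in [0, 1].
    """
    label_ok = 0 <= label < num_classes
    confidence_ok = 0.0 <= confidence <= 1.0
    return label_ok and confidence_ok


# Values taken from the output above (printed percentage divided by 100).
print(is_plausible(2, 5.52734375))  # False: label 2 is out of range for 2 classes
print(is_plausible(77, 1811.0))     # False: both label and confidence are out of range

# A made-up detection of the kind I would expect from a healthy 2-class model.
print(is_plausible(0, 0.87))        # True
```

Every detection in the output above fails this check. With a two-entry `labelMap`, any label of 2 or more is also outside the list's index range, which would explain why the `labelMap[detection.label]` line fails.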

However, on-device decoding seems to cause these issues when I use the blob produced by the Luxonis blob converter.

I have also tried training a YOLOv8n model with the official Ultralytics framework, but that yielded exactly the same results.

Can you confirm whether the Luxonis blob converter supports custom models with a different number of classes? Do you have any idea why this might be happening, and is there a workaround?

Thanks in advance!