Hi,

I am using an OAK-D Pro with IR and trying to get YOLO working. Right now I have this code:

```python
#!/usr/bin/env python3

import cv2
import depthai as dai
import time
import numpy as np

if 1:  # PoE config
    fps = 30
    res = dai.MonoCameraProperties.SensorResolution.THE_400_P
    poolSize = 24  # default 3, increased to prevent desync
else:  # USB
    fps = 30
    res = dai.MonoCameraProperties.SensorResolution.THE_720_P
    poolSize = 8  # default 3, increased to prevent desync


def frameNorm(frame, bbox):
    normVals = np.full(len(bbox), frame.shape[0])
    normVals[::2] = frame.shape[1]
    return (np.clip(np.array(bbox), 0, 1) * normVals).astype(int)


# Path to the model
pathBase = ""
pathYoloBlob = pathBase + "/yolov8n_openvino_2022.1_6shave.blob"

# YOLO class labels
translate = {0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorbike', 4: 'aeroplane', 5: 'bus', 6: 'train', 7: 'truck', 8: 'boat', 9: 'traffic light', 10: 'fire hydrant', 11: 'stop sign', 12: 'parking meter', 13: 'bench', 14: 'bird', 15: 'cat', 16: 'dog', 17: 'horse', 18: 'sheep', 19: 'cow', 20: 'elephant', 21: 'bear', 22: 'zebra', 23: 'giraffe', 24: 'backpack', 25: 'umbrella', 26: 'handbag', 27: 'tie', 28: 'suitcase', 29: 'frisbee', 30: 'skis', 31: 'snowboard', 32: 'sports ball', 33: 'kite', 34: 'baseball bat', 35: 'baseball glove', 36: 'skateboard', 37: 'surfboard', 38: 'tennis racket', 39: 'bottle', 40: 'wine glass', 41: 'cup', 42: 'fork', 43: 'knife', 44: 'spoon', 45: 'bowl', 46: 'banana', 47: 'apple', 48: 'sandwich', 49: 'orange', 50: 'broccoli', 51: 'carrot', 52: 'hot dog', 53: 'pizza', 54: 'donut', 55: 'cake', 56: 'chair', 57: 'sofa', 58: 'pottedplant', 59: 'bed', 60: 'diningtable', 61: 'toilet', 62: 'tvmonitor', 63: 'laptop', 64: 'mouse', 65: 'remote', 66: 'keyboard', 67: 'cell phone', 68: 'microwave', 69: 'oven', 70: 'toaster', 71: 'sink', 72: 'refrigerator', 73: 'book', 74: 'clock', 75: 'vase', 76: 'scissors', 77: 'teddy bear', 78: 'hair drier', 79: 'toothbrush'}

# Create pipeline
pipeline = dai.Pipeline()

# Define sources and outputs
monoL = pipeline.create(dai.node.MonoCamera)
monoR = pipeline.create(dai.node.MonoCamera)

monoL.setCamera("left")
monoL.setResolution(res)
monoL.setFps(fps)
monoL.setNumFramesPool(poolSize)

monoR.setCamera("right")
monoR.setResolution(res)
monoR.setFps(fps)
monoR.setNumFramesPool(poolSize)

xoutFloodL = pipeline.create(dai.node.XLinkOut)
xoutFloodR = pipeline.create(dai.node.XLinkOut)
xoutFloodL.setStreamName('flood-left')
xoutFloodR.setStreamName('flood-right')
streams = ['flood-left', 'flood-right', 'nn_yolo']

# Script node for frame routing and IR flood light control
script = pipeline.create(dai.node.Script)
script.setProcessor(dai.ProcessorType.LEON_CSS)
script.setScript("""
#dotBright = 0.8
floodBright = 0.1
LOGGING = False  # Set `True` for latency/timings debugging

node.warn(f'IR drivers detected: {str(Device.getIrDrivers())}')

while True:
    # Wait first for a frame event, received at MIPI start-of-frame
    event = node.io['event'].get()
    if LOGGING: tEvent = Clock.now()

    # Immediately reconfigure the IR driver.
    # Note the logic is inverted, as it applies for next frame
    Device.setIrFloodLightIntensity(floodBright)
    if LOGGING: tIrSet = Clock.now()

    # Wait for the actual frames (after MIPI capture and ISP proc is done)
    frameL = node.io['frameL'].get()
    if LOGGING: tLeft = Clock.now()
    frameR = node.io['frameR'].get()
    if LOGGING: tRight = Clock.now()

    if LOGGING:
        latIR      = (tIrSet - tEvent              ).total_seconds() * 1000
        latEv      = (tEvent - event.getTimestamp()).total_seconds() * 1000
        latProcL   = (tLeft  - event.getTimestamp()).total_seconds() * 1000
        diffRecvRL = (tRight - tLeft               ).total_seconds() * 1000
        node.warn(f'T[ms] latEv:{latEv:5.3f} latIR:{latIR:5.3f} latProcL:{latProcL:6.3f} '
                  + f' diffRecvRL:{diffRecvRL:5.3f}')

    # Route the frames to their respective outputs
    node.io['floodL'].send(frameL)
    node.io['floodR'].send(frameR)
""")

# Linking
# TODO: crashes after a couple of seconds if monoR.frameEvent.link(script.inputs['event']) is used instead
# The same happens if monoR.out.link(manip.inputImage) below is changed to monoL.out.link(manip.inputImage)
monoL.frameEvent.link(script.inputs['event'])
monoL.out.link(script.inputs['frameL'])
monoR.out.link(script.inputs['frameR'])
script.outputs['floodL'].link(xoutFloodL.input)
script.outputs['floodR'].link(xoutFloodR.input)

# YOLO part
nn = pipeline.create(dai.node.YoloDetectionNetwork)
nn.setBlobPath(pathYoloBlob)
nn.setNumClasses(80)
nn.setCoordinateSize(4)
nn.setAnchors([])
nn.setAnchorMasks({})
nn.setIouThreshold(0.5)
nn.setConfidenceThreshold(0.5)

# Take the image, resize it to the NN input size and convert it to 3-channel color (BGR planar)
manip = pipeline.create(dai.node.ImageManip)
manip.initialConfig.setResize(640, 640)
manip.initialConfig.setFrameType(dai.ImgFrame.Type.BGR888p)
manip.setMaxOutputFrameSize(1228800)  # 640 * 640 * 3

# Link the camera to manip
monoR.out.link(manip.inputImage)

# Link manip to the NN
manip.out.link(nn.input)

xout_nn_yolo = pipeline.create(dai.node.XLinkOut)
xout_nn_yolo.setStreamName("nn_yolo")
nn.out.link(xout_nn_yolo.input)

# Connect to device and start pipeline
time_start = time.time()
with dai.Device(pipeline) as device:
    q_nn_yolo = device.getOutputQueue("nn_yolo")
    q_flood_right = device.getOutputQueue("flood-right")
    frame = None

    while True:
        in_nn_yolo = q_nn_yolo.tryGet()
        in_flood_right = q_flood_right.tryGet()

        if in_flood_right is not None:
            # If a packet from the right mono camera (flood-lit) is present, retrieve the frame in OpenCV format using getCvFrame
            frame = in_flood_right.getCvFrame()

        if in_nn_yolo is not None:
            # When data from the NN is received, take the detections array that contains the YOLO results
            detections_yolo = in_nn_yolo.detections

            if frame is not None:
                for detection in detections_yolo:
                    # For each bounding box, first normalize it to match the frame size
                    bbox = frameNorm(frame, (detection.xmin, detection.ymin, detection.xmax, detection.ymax))
                    # and then draw a rectangle on the frame to show the actual result
                    cv2.rectangle(frame, (bbox[0], bbox[1]), (bbox[2], bbox[3]), (0, 0, 255), 2)

                cv2.imshow("preview", frame)

        if cv2.waitKey(5) == ord('q'):
            break
```
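For reference, `frameNorm` turns the normalized `(xmin, ymin, xmax, ymax)` values of a detection into pixel coordinates: it clips them to [0, 1], then multiplies the x values by the frame width (`frame.shape[1]`) and the y values by the frame height (`frame.shape[0]`). A standalone check of that arithmetic, assuming a 640x400 frame as produced with `THE_400_P`, needs only NumPy:

```python
import numpy as np


def frameNorm(frame, bbox):
    # Same helper as in the post
    normVals = np.full(len(bbox), frame.shape[0])  # [h, h, h, h]
    normVals[::2] = frame.shape[1]                  # [w, h, w, h]
    # Clip normalized coords into [0, 1], scale to pixels, truncate to int
    return (np.clip(np.array(bbox), 0, 1) * normVals).astype(int)


# A 640x400 mono frame: 400 rows (height) by 640 columns (width)
frame = np.zeros((400, 640), dtype=np.uint8)
print(frameNorm(frame, (-0.1, 0.5, 0.75, 1.5)).tolist())  # [0, 200, 480, 400]
```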

I have two problems:

First, I cannot remove monoR or monoL. For some reason, if I change `monoL.frameEvent.link(script.inputs['event'])` to `monoR.frameEvent.link(script.inputs['event'])`, it only works for one or two seconds, but at least it works when both are used.
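One hypothesis worth checking for the stall after a couple of seconds (not verified on an OAK-D Pro): the pipeline defines an XLinkOut named `flood-left`, but the host loop never reads it. As far as I know, DepthAI v2 creates a host-side queue for every XLinkOut stream when the device starts (blocking, 16 messages by default), so once `flood-left` is full, back-pressure can reach the Script node, which has to send to `floodL` before it sends to `floodR`, and from there the cameras and the NN. The sketch below is a toy model of that mechanism using only Python's `queue` module, not DepthAI code; `frames_until_stall` is a hypothetical helper. One producer must push every frame into two bounded queues, and the host drains only one of them.

```python
import queue


def frames_until_stall(maxsize=16, drain_left=False, total_frames=300):
    """Toy model (not DepthAI code) of one producer feeding two bounded queues.

    Each frame must go into 'left' and then 'right', like the Script node
    sending to 'floodL' and then 'floodR'. The host drains 'right' every frame
    and 'left' only if drain_left is True. Returns the index of the frame at
    which a blocking put() would hang, or None if every frame gets through.
    """
    left = queue.Queue(maxsize=maxsize)
    right = queue.Queue(maxsize=maxsize)
    for n in range(total_frames):
        for q in (left, right):
            if q.full():
                return n  # a blocking put() would wait here forever
            q.put_nowait(n)
        right.get_nowait()  # host reads 'flood-right' every frame
        if drain_left:
            left.get_nowait()  # host also reads 'flood-left'
    return None


if __name__ == "__main__":
    n = frames_until_stall()
    print(f"stalls at frame {n} (~{n / 30:.2f} s at 30 fps)")  # stalls at frame 16 (~0.53 s at 30 fps)
    print(frames_until_stall(drain_left=True))  # None
```

The real pipeline has more buffering (the frame pools set with `setNumFramesPool`, XLink buffers, node input queues), so the toy numbers will not match the roughly 2 seconds observed. If this is the cause, reading `flood-left` too, for example via `device.getOutputQueue("flood-left", maxSize=4, blocking=False)` plus a `tryGet()` on every loop iteration, or dropping both `xoutFloodL` and the `node.io['floodL'].send(frameL)` line, would be expected to keep frames flowing.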

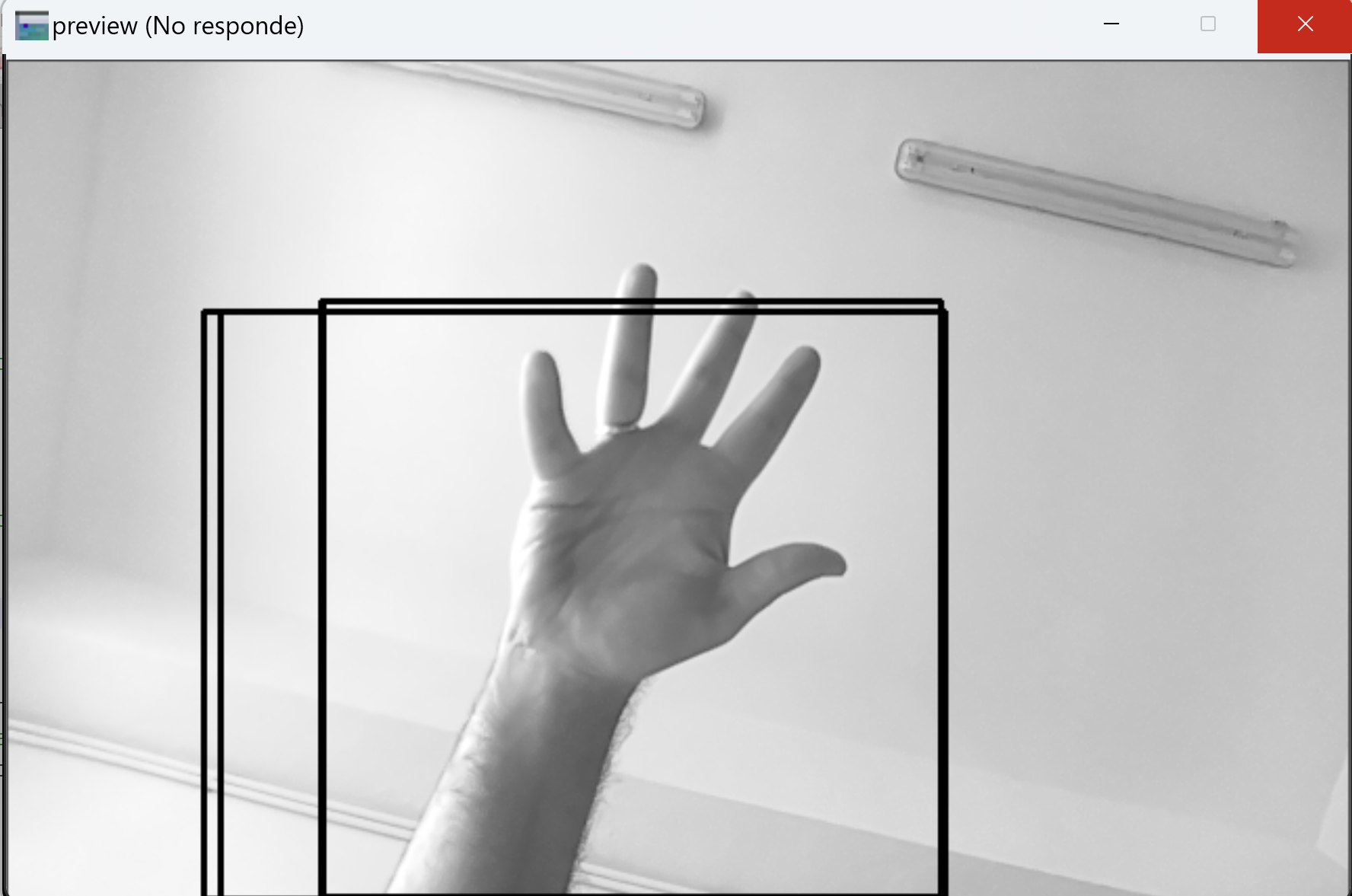

With this code it works for 2 seconds and this is the output:

I understand that the problem is the frame because it draws more than a rectangle of detections so I guess the part of yolo is working ok, any clues of what is the problem?
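On the multiple rectangles: in the host loop, every `nn_yolo` packet is drawn onto whatever `frame` was received last, so if two detection packets arrive before the next `flood-right` frame, both sets of boxes land on the same image, and they may not belong to that frame at all. One way to avoid that, sketched here under the assumption that each `ImgDetections` message carries the sequence number of the `ImgFrame` it was computed from (as far as I know DepthAI propagates it through ImageManip and the detection network), is to pair the two streams by `getSequenceNum()` and draw only on the matching frame. `SeqSync` and `FakeMsg` are hypothetical names; `FakeMsg` only stands in for DepthAI messages so the logic runs without a device.

```python
from collections import OrderedDict


class SeqSync:
    """Pair frames with the detections computed from them, by sequence number.

    Hypothetical host-side helper (not part of DepthAI). It only assumes each
    message has getSequenceNum(), as dai.ImgFrame and dai.ImgDetections do.
    """

    def __init__(self, max_pending=30):
        self.max_pending = max_pending
        # Unmatched messages keyed by sequence number, oldest first
        self.pending = {"frame": OrderedDict(), "dets": OrderedDict()}

    def add(self, kind, msg):
        """Store msg ("frame" or "dets"); return (frame, dets) once both halves exist."""
        other = "dets" if kind == "frame" else "frame"
        seq = msg.getSequenceNum()
        if seq in self.pending[other]:
            match = self.pending[other].pop(seq)
            # Older unmatched messages can no longer be paired, so discard them
            for store in self.pending.values():
                for old in [s for s in store if s < seq]:
                    del store[old]
            return (msg, match) if kind == "frame" else (match, msg)
        self.pending[kind][seq] = msg
        # Bound memory if one stream runs ahead of the other
        while len(self.pending[kind]) > self.max_pending:
            self.pending[kind].popitem(last=False)
        return None


class FakeMsg:
    """Stand-in for a DepthAI message, used only to exercise SeqSync off-device."""

    def __init__(self, seq, payload):
        self.seq = seq
        self.payload = payload

    def getSequenceNum(self):
        return self.seq


if __name__ == "__main__":
    sync = SeqSync()
    sync.add("frame", FakeMsg(1, "frame 1"))
    sync.add("frame", FakeMsg(2, "frame 2"))
    frame_msg, det_msg = sync.add("dets", FakeMsg(2, "detections 2"))
    print(frame_msg.payload, "+", det_msg.payload)  # frame 2 + detections 2
```

In the host loop, each non-`None` result of `q_flood_right.tryGet()` would go to `sync.add("frame", ...)` and each result of `q_nn_yolo.tryGet()` to `sync.add("dets", ...)`; only when a pair comes back would you call `getCvFrame()` on the frame message, draw its partner's `detections` with `frameNorm`, and pass the image to `cv2.imshow`. Since every frame is drawn on exactly once, boxes cannot accumulate.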

Thanks,

Pedro.