Hi folks,

This is a follow-up to this post.

I got amazing help from Matija, but I have run into a dead end.

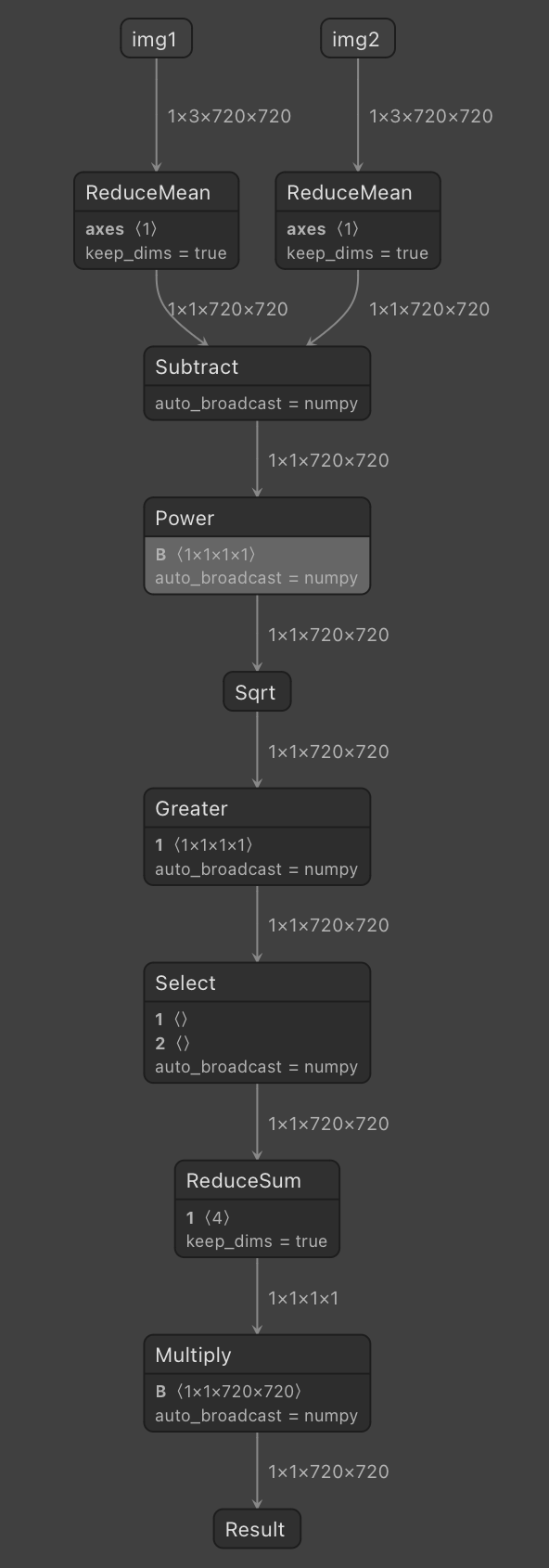

With Matija's help, I created a Colab notebook in which I convert the PyTorch code into a model. The model returns the correct result and the tree looks good:

```python
import torch
import torch.nn as nn


class Model(nn.Module):
    def forward(self, img1, img2):
        # Calculate the mean of the two input tensors over the channel dimension
        mean1 = torch.mean(img1, dim=1, keepdim=True)
        mean2 = torch.mean(img2, dim=1, keepdim=True)

        # Calculate the absolute difference between the two mean tensors
        diff = torch.sqrt(torch.pow(mean1 - mean2, 2)).float()
        print('diff', diff, diff.shape)

        threshold = 30.0

        # Create a binary mask where differences are higher than the threshold
        mask = torch.where(diff > threshold, torch.tensor(1.0), torch.tensor(0.0))
        print('mask', mask, mask.shape)

        # Count the number of moving pixels
        movingPx = torch.sum(mask).view(1, 1, 1, 1)  # Ensure the output has the correct dimension
        print('movingPx', movingPx, movingPx.shape)
        #return movingPx

        # Calculate the total number of pixels
        totalPx = torch.tensor(mask.shape[2] * mask.shape[3], dtype=torch.float32)
        print('totalPx', totalPx)

        # Calculate the ratio of moving pixels to the total number of pixels
        movingRatio = movingPx / totalPx
        print('Result', movingRatio)

        return movingRatio


model = Model()

torch.onnx.export(
    model,
    (torch.randn(1, 3, 720, 720) * 100, torch.randn(1, 3, 720, 720) * 100),
    "model_diff.onnx",
    opset_version=16,
    input_names=['img1', 'img2'],
    output_names=['movingRatio']
)
```

```
!mo --input_model model_diff.onnx --output_dir /content/out
# Then upload .bin and .xml to blobconverter
```
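For anyone who wants to check the numbers by hand, here is a minimal pure-Python sketch of what the model is meant to compute. It assumes a single image without the batch dimension, given as nested lists shaped `[channels][height][width]`; the function name `moving_ratio` and the tiny test frames are made up for illustration and are not part of the notebook.

```python
def moving_ratio(img1, img2, threshold=30.0):
    """Fraction of pixels whose channel-mean differs by more than threshold.

    img1, img2: nested lists shaped [channels][height][width] (no batch dim).
    """
    channels = len(img1)
    height, width = len(img1[0]), len(img1[0][0])
    moving = 0
    for y in range(height):
        for x in range(width):
            # Mean over the channel axis, like torch.mean(img, dim=1) in the model
            mean1 = sum(img1[c][y][x] for c in range(channels)) / channels
            mean2 = sum(img2[c][y][x] for c in range(channels)) / channels
            # Strictly greater than the threshold counts as a moving pixel
            if abs(mean1 - mean2) > threshold:
                moving += 1
    return moving / (height * width)


# Hypothetical 2x2 frames with 3 identical channels each
frame_a = [[[0, 0], [0, 0]] for _ in range(3)]
frame_b = [[[100, 0], [0, 31]] for _ in range(3)]
ratio = moving_ratio(frame_a, frame_b)  # 2 of 4 pixels exceed the threshold
```

Comparing this against the PyTorch output on the same small input is one way to confirm the model logic before looking at the conversion step.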

The blob is converted manually without errors, but it gets stuck in the *get()* function.
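To narrow down where it hangs, one option is to poll instead of blocking. The sketch below is a generic helper and not code from my script: `poll_with_timeout` is a made-up name, and it assumes the output queue offers a non-blocking call that returns `None` when nothing has arrived (as far as I know, `tryGet()` on a DepthAI output queue behaves that way).

```python
import time


def poll_with_timeout(try_get, timeout_s=5.0, interval_s=0.01):
    """Call try_get() repeatedly until it returns a message or time runs out.

    try_get: a non-blocking callable that returns None when no data is ready
             (assumption: something like an output queue's tryGet method).
    Returns the first non-None result, or None on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        item = try_get()
        if item is not None:
            return item
        time.sleep(interval_s)  # Avoid spinning at 100% CPU between polls
    return None
```

With a hypothetical queue named `q_out`, this would be called as `poll_with_timeout(q_out.tryGet)`. If it returns `None`, the network never produced an output, which at least separates "no result at all" from "result is slow".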

As a check, I tried converting a model that is known to work. The conversion succeeded, but running it also returned nothing.

The code that runs on the OAK has worked with other, similarly structured models.

Packages installed in the Colab (because other versions did not work):

blobconverter>=1.2.9

onnx onnx-simplifier

openvino-dev==2022.3

Any ideas?

Best regards

Lasse