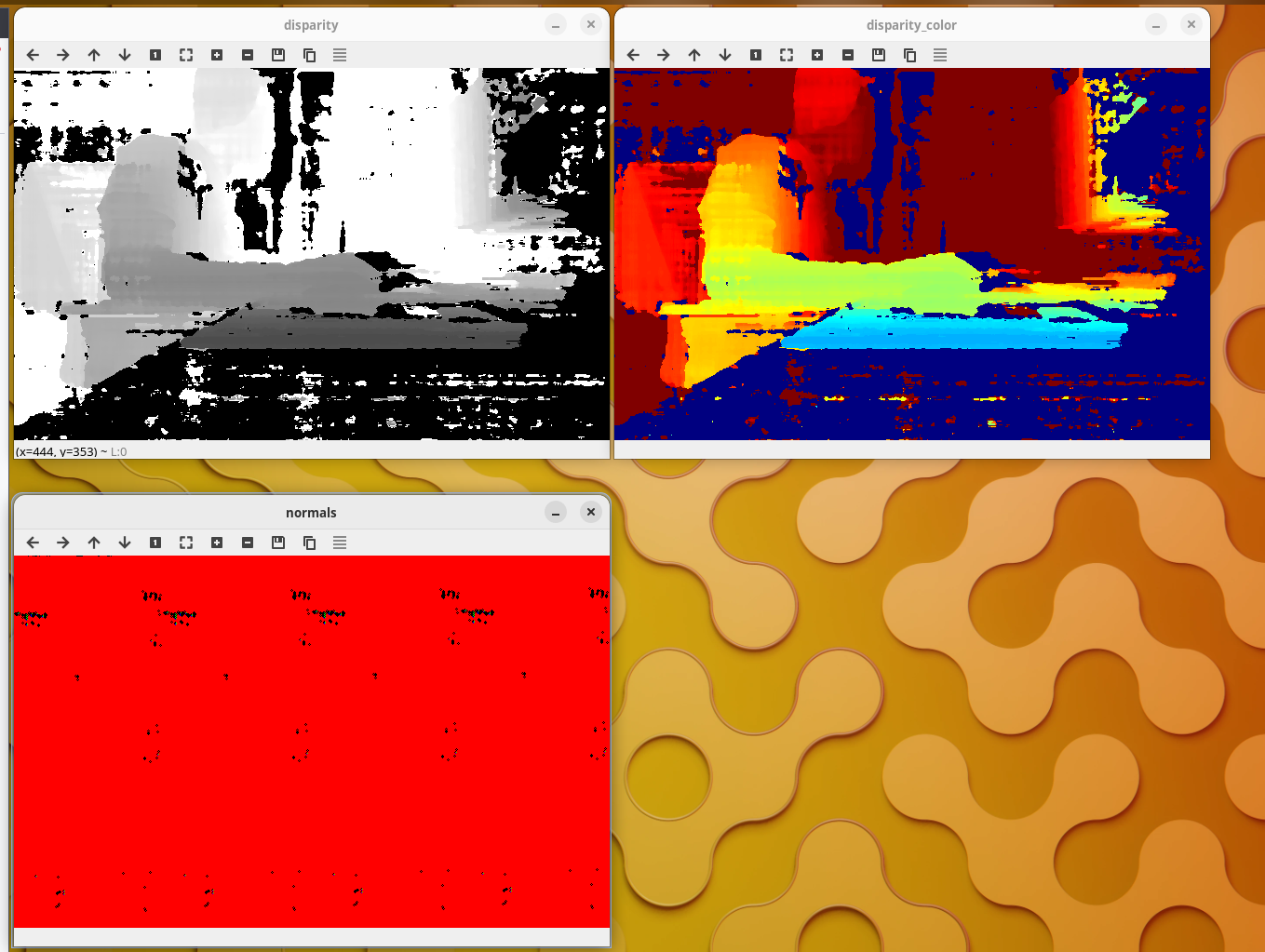

I'm trying to get surface normals from the depth map using an Oak-D Pro W. I found this post, going over exactly this. But when I tried using the code I get useless results. I'm using a very slightly modified version of the pipeline here.

auto GetSurfaceNormalMap(const cv::Mat& input_) -> cv::Mat {

cv::Mat input(input_.size(), CV_32FC1);

if (input.type() != CV_32FC1) {

cv::normalize(input_, input, 0, 65535, cv::NormTypes::NORM_MINMAX, CV_16UC1);

} else {

input = input_;

}

cv::Mat normals(input.size(), CV_32FC3);

for (int x = 0; x < input.rows; ++x) {

for (int y = 0; y < input.cols; ++y) {

// use float instead of double otherwise you will not get the correct result

// check my updates in the original post. I have not figured out yet why this

// is happening.

float dzdx = (input.at<double>(x + 1, y) - input.at<double>(x - 1, y)) / 2.0;

float dzdy = (input.at<double>(x, y + 1) - input.at<double>(x, y - 1)) / 2.0;

cv::Vec3f d(-dzdx, -dzdy, 1.0f);

cv::Vec3f n = normalize(d);

normals.at<cv::Vec3f>(x, y) = n;

}

}

return normals;

}

I'm not sure about the conversion at the beginning, but other than that it seems like it should be able to work.

Any help is appreciated