Hello,

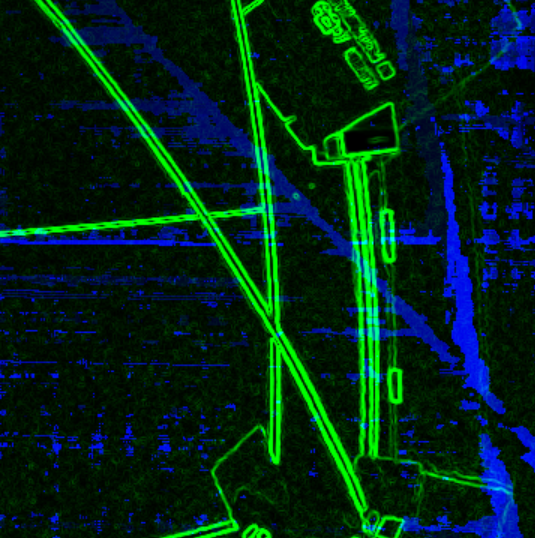

I'm exploring the possibility of merging the depth information from a StereoDepth node with an image captured by one of the Mono cameras. My goal is to enhance the depth image with edge detection derived from the Mono camera image, to make object detection more efficient. I tried using stereo.setDepthAlign, but no matter which socket I align the depth image to, I still see shifts between objects (green: edge detection, blue: depth). For example, the straight object in the middle of the frame appears shifted to the right in the depth view.

I'm also having trouble clipping the depth detection range to a maximum of 4 meters. I have studied the stereo calculation chart, but I'm not sure how to apply its disparity-based parameters in code to clip the range precisely.
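To make the second question concrete, here is a minimal sketch of how I understand the relation from the chart: depth = focal length (in pixels) × baseline / disparity. The focal length and baseline below are placeholder assumptions, not calibrated values from my device, and the helper names are hypothetical (they are not part of the DepthAI API). The sketch assumes depth values in millimeters, with 0 meaning "invalid".

```python
# Placeholder values (assumptions for illustration, not read from calibration).
FOCAL_PX = 450.0      # focal length in pixels at the stereo resolution
BASELINE_MM = 75.0    # distance between the two mono cameras, in mm
MAX_DEPTH_MM = 4000   # desired clipping range: 4 m


def disparity_to_depth_mm(disparity_px, focal_px=FOCAL_PX, baseline_mm=BASELINE_MM):
    """Convert one disparity value (pixels) to depth (mm); 0 marks an invalid pixel."""
    if disparity_px <= 0:
        return 0.0
    return focal_px * baseline_mm / disparity_px


def min_disparity_for_range(max_depth_mm, focal_px=FOCAL_PX, baseline_mm=BASELINE_MM):
    """Smallest disparity (pixels) that still corresponds to a depth <= max_depth_mm."""
    return focal_px * baseline_mm / max_depth_mm


def clip_depth_mm(depth_rows, max_depth_mm=MAX_DEPTH_MM):
    """Set every depth value beyond max_depth_mm to 0 (invalid) in a 2D list."""
    return [[d if 0 < d <= max_depth_mm else 0 for d in row] for row in depth_rows]


if __name__ == "__main__":
    # Disparities below this threshold are farther away than 4 m.
    print(min_disparity_for_range(MAX_DEPTH_MM))
    # One row of depth values in mm; the last one is out of range.
    print(clip_depth_mm([[0, 1500, 4000, 4001]]))
```

With these placeholder numbers, anything with a disparity smaller than about 8.4 pixels would be farther than 4 m. What I'm unsure about is whether this kind of clipping should be done on the host like this, or configured on the StereoDepth node itself.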

Thank you in advance, Wiktor