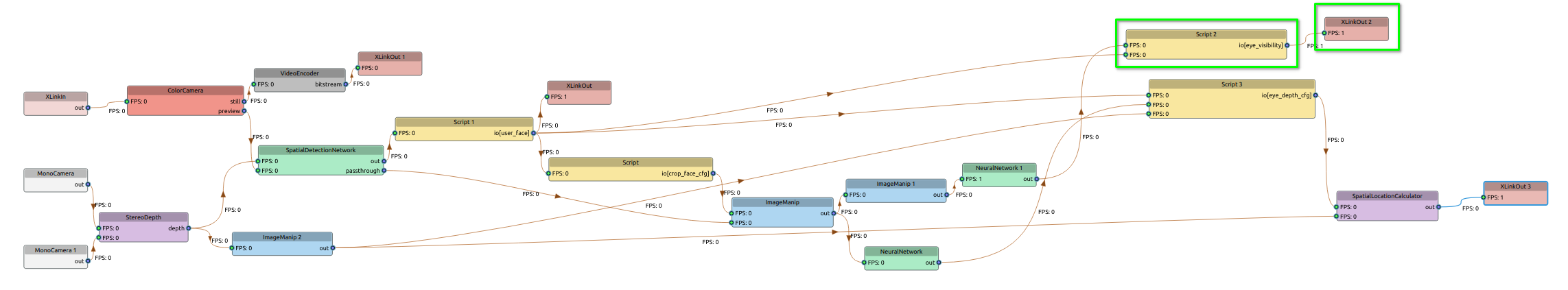

I have a pipeline that works well. However, when I add two new nodes to it (highlighted in green in the image above), the whole pipeline freezes. When I request a frame from an output queue, the first frame arrives immediately, but any attempt to fetch a second frame hangs indefinitely.

My understanding, based on https://discuss.luxonis.com/d/1774-script-node-blocking-behaviour-isnt-documented-or-as-expected, is that when all input queues are set to non-blocking (e.g. `node.input.setBlocking(False)`), the pipeline should not freeze, since no input queue can get backed up. As the picture above shows, every node input has a green circle, which means all of them are set to non-blocking.
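To make the non-blocking behavior concrete, here is a minimal pure-Python model (not DepthAI code) of a single input set to non-blocking with a queue size of 2. It assumes that a full non-blocking queue discards its oldest message rather than making the sender wait; `collections.deque` with `maxlen` behaves exactly that way.

```python
from collections import deque

# Toy model of one input with setBlocking(False) and a queue size of 2.
# Assumption: when the queue is full, a new message evicts the oldest one,
# so the sender never has to wait. deque(maxlen=...) drops from the left.
input_queue: deque = deque(maxlen=2)

for frame_id in range(5):
    input_queue.append(frame_id)  # never blocks, even though nobody is reading

print(list(input_queue))  # → [3, 4]: frames 0, 1 and 2 were silently dropped

# Non-blocking only protects the sender: a reader that waits for the *next*
# message still waits until some producer actually sends one.
```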

Another detail that may be relevant: every Script node I use performs a blocking `get()` (e.g. `node.io["an_input"].get()`) on each of its inputs in every iteration of the script, and sends exactly one output per iteration.
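The per-iteration pattern described above can be modeled in plain Python (again, not DepthAI code). The setup is hypothetical: one Script-like loop reads a `frames` input that is fed continuously and a `config` input that receives only a single message, and every queue drops its oldest message instead of blocking the sender. It shows one way a loop that does a blocking `get()` on every input can emit exactly one output and then stop, even though all queues are non-blocking. The `try_get` method stands in for a non-blocking read such as the Script node's `tryGet()`, the `timeout` exists only so the demo returns instead of hanging, and names like `run_script` and `reuse_last_config` are illustrative.

```python
import threading
import time
from collections import deque
from typing import Any, Optional


class NonBlockingInput:
    """Toy input queue: send() never waits; the oldest message is dropped when full."""

    def __init__(self, queue_size: int = 1) -> None:
        self._messages: deque = deque(maxlen=queue_size)
        self._cond = threading.Condition()

    def send(self, msg: Any) -> None:
        with self._cond:
            self._messages.append(msg)  # evicts the oldest message if the queue is full
            self._cond.notify_all()

    def get(self, timeout: float) -> Any:
        # Blocking read, like node.io[...].get(); the timeout exists only so the
        # demo can report a hang instead of freezing forever.
        with self._cond:
            if not self._cond.wait_for(lambda: len(self._messages) > 0, timeout=timeout):
                raise TimeoutError("no new message arrived on this input")
            return self._messages.popleft()

    def try_get(self) -> Optional[Any]:
        # Non-blocking read: returns None immediately when the queue is empty
        with self._cond:
            return self._messages.popleft() if self._messages else None


def run_script(reuse_last_config: bool, iterations: int = 5, timeout: float = 0.5) -> int:
    """Model of a Script node reading a 'frames' and a 'config' input each iteration.

    Hypothetical setup: frames arrive continuously, config is sent exactly once.
    Returns how many outputs the script managed to send.
    """
    frames, config = NonBlockingInput(), NonBlockingInput()
    out = NonBlockingInput(queue_size=100)
    stop = threading.Event()

    def camera() -> None:
        frame_id = 0
        while not stop.is_set():
            frames.send(frame_id)
            frame_id += 1
            time.sleep(0.005)

    threading.Thread(target=camera, daemon=True).start()
    config.send("cfg-v1")  # the only config message ever sent

    sent, last_cfg = 0, None
    try:
        for _ in range(iterations):
            frame = frames.get(timeout)
            if reuse_last_config and last_cfg is not None:
                new_cfg = config.try_get()  # don't wait: keep the old config if none is new
                if new_cfg is not None:
                    last_cfg = new_cfg
            else:
                last_cfg = config.get(timeout)  # blocks until a config message arrives
            out.send((frame, last_cfg))
            sent += 1
    except TimeoutError:
        pass  # a real Script node would stay stuck inside get() here
    finally:
        stop.set()
    return sent


print(run_script(reuse_last_config=False))  # → 1 (second iteration waits on config)
print(run_script(reuse_last_config=True))   # → 5
```

In this model the non-blocking queues keep every sender moving, but a reader that insists on a fresh message from each input on every iteration runs only as often as its least frequent input, and stops entirely once that input goes quiet.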

My question is: why is my pipeline freezing when all node input queues are set to non-blocking?