Hi @jakaskerl!

Thanks for your quick response.

I tried using other models, and it turns out they don't work. This is a major issue for us because our priority is that the cameras can work in standalone mode.

In light of your feedback and our tests, I have a few questions about possible solutions and how to implement them on our devices.

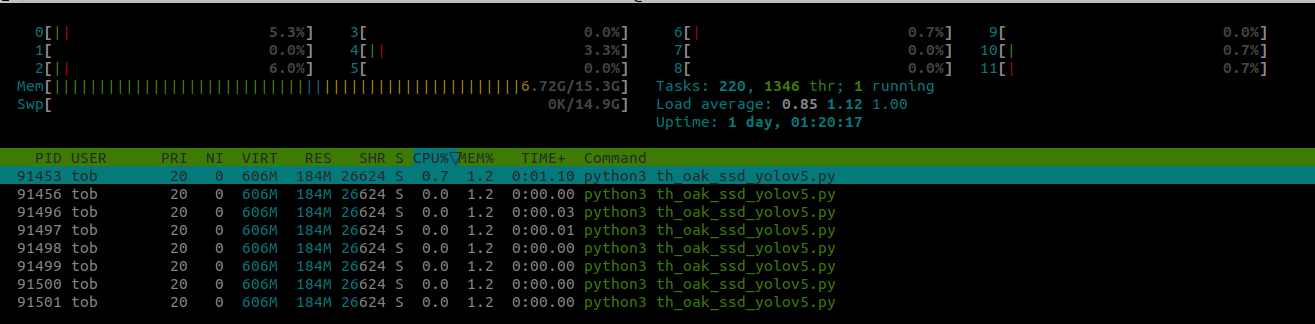

As you mentioned, we are now trying to avoid standalone mode. With that in mind, and given our goal of running 12 cameras from a single PC, we've noticed that when we run the script on the host (see oak_ssd_yolov5.py), it launches 8 threads per camera: 7 correspond to the created nodes, plus 1 for the main script.

Logs:

I understand it's a complex issue, but is there any way to reduce or encapsulate this behavior?
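If each camera runs its own copy of the script, the 8-threads-per-camera figure above adds up to roughly 12 × 8 = 96 host threads. To check that count independently of the logs, a small sketch like the following (not our actual code) compares kernel-level threads with the threads Python's `threading` module knows about. It assumes a Linux host, since it reads `/proc/self/task`; `os_thread_count` and `python_thread_count` are hypothetical helper names.

```python
import os
import threading


def os_thread_count(pid: str = "self") -> int:
    """Count kernel-level threads of a process.

    Assumption: Linux host. Each entry in /proc/<pid>/task is one thread,
    including native threads started by C/C++ libraries.
    """
    return len(os.listdir(f"/proc/{pid}/task"))


def python_thread_count() -> int:
    """Count threads known to Python's threading module.

    Native threads that never interact with the threading module are
    generally not included in this number.
    """
    return threading.active_count()


if __name__ == "__main__":
    # Call these after the devices are opened to compare both numbers.
    print("OS-level threads:", os_thread_count())
    print("Python-level threads:", python_thread_count())
```

Running it once before and once after opening the devices should show how many threads each camera adds on the host.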

Secondly, can we run a single script for all our cameras? We are thinking about the scalability of our development, which is why it was important to us that the cameras work in standalone mode. Since that's not possible, our question is whether we can run a single .py for all of our devices.
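To make this question concrete, here is a minimal sketch (not our actual code) of the structure we have in mind: one process opens every device inside a single `contextlib.ExitStack` and services all of them from one non-blocking polling loop. `run_all_devices`, `open_device`, `poll` and `handle` are hypothetical names; the device layer is passed in as functions so the sketch does not depend on any specific DepthAI call.

```python
import time
from contextlib import ExitStack
from typing import Any, Callable, Dict, Iterable, Optional


def run_all_devices(
    device_ids: Iterable[str],
    open_device: Callable[[str], Any],
    poll: Callable[[Any], Optional[Any]],
    handle: Callable[[str, Any], None],
    max_rounds: int,
    idle_sleep_s: float = 0.001,
) -> int:
    """Serve every camera from one process and one loop (hypothetical helper).

    open_device must return a context manager; poll must be non-blocking and
    return None when no message is ready. Returns the number of handled messages.
    """
    handled = 0
    with ExitStack() as stack:
        # Every opened device is closed when the with-block exits, even on error.
        devices: Dict[str, Any] = {
            dev_id: stack.enter_context(open_device(dev_id)) for dev_id in device_ids
        }
        # Bounded for the sketch; a real service would loop until shutdown.
        for _ in range(max_rounds):
            got_any = False
            for dev_id, dev in devices.items():
                msg = poll(dev)
                if msg is not None:
                    handle(dev_id, msg)
                    handled += 1
                    got_any = True
            if not got_any:
                # Avoid busy-spinning when no camera has new data.
                time.sleep(idle_sleep_s)
    return handled
```

If we understand the DepthAI v2 Python API correctly, `device_ids` could come from the entries of `dai.Device.getAllAvailableDevices()`, `open_device` could create `dai.Device(pipeline, device_info)` for the matching entry, and `poll` could call `tryGet()` on that device's output queue. We would expect this to remove the separate Python process per camera, but we assume each device connection still keeps its own internal threads, which is part of what we are asking about.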

Lastly, looking ahead to our next projects, do you think this device, the OAK-1 POE FF, would fit us and our aims (custom multi-model standalone mode + TCP streaming)? Also, is it possible to incorporate an M12 connector into this device? If so, who can I speak to about it?

I appreciate all the help and look forward to your response.

Irena