If I edit the Python file so that it only calls functions that are available in the headless OpenCV package, it runs fine.

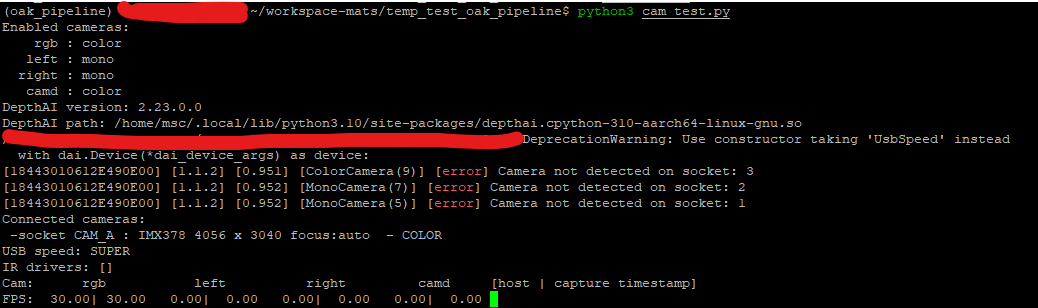

I have attached the adjusted Python file, the `dmesg` output, and a screenshot of running `cam_test.py`.
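The adjusted script still calls a few HighGUI functions: `cv2.namedWindow` and `cv2.resizeWindow` (only with `-rs`) and `cv2.destroyAllWindows` (on Ctrl+C). So it can help to confirm which OpenCV build is actually being imported. The sketch below parses the text returned by `cv2.getBuildInformation()` and reports whether a GUI backend was compiled in. It assumes the OpenCV 4.x layout, where a headless build prints a line such as `GUI: NONE`. Older builds may format this section differently, and both helper names are hypothetical.

```python
import re
from typing import Optional


def gui_backend_from_build_info(build_info: str) -> Optional[str]:
    """Return the value on the 'GUI:' line of OpenCV build info, or None if not found.

    Assumes the OpenCV 4.x format, e.g. '  GUI:    NONE' or '  GUI:    GTK3'.
    """
    for line in build_info.splitlines():
        match = re.match(r"\s*GUI:\s*(\S+)", line)
        if match:
            return match.group(1)
    return None


def has_gui_backend(build_info: str) -> bool:
    """True if the build info names a GUI backend other than NONE (hypothetical helper)."""
    backend = gui_backend_from_build_info(build_info)
    return backend is not None and backend.upper() != "NONE"
```

On the target machine, call it as `has_gui_backend(cv2.getBuildInformation())`. A `False` result means calls such as `cv2.imshow` or `cv2.namedWindow` are expected to raise `cv2.error` instead of opening a window. A result of `None` from `gui_backend_from_build_info` means the format did not match the assumption.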

Python:

```python
import os
#os.environ["DEPTHAI_LEVEL"] = "debug"

import cv2
import numpy as np
import argparse
import collections
import time
from itertools import cycle
from pathlib import Path
import sys
import signal


def socket_type_pair(arg):
    socket, type = arg.split(',')
    if not (socket in ['rgb', 'left', 'right', 'cama', 'camb', 'camc', 'camd']):
        raise ValueError("")
    if not (type in ['m', 'mono', 'c', 'color', 't', 'tof']):
        raise ValueError("")
    is_color = True if type in ['c', 'color'] else False
    is_tof = True if type in ['t', 'tof'] else False
    return [socket, is_color, is_tof]


parser = argparse.ArgumentParser()
parser.add_argument('-cams', '--cameras', type=socket_type_pair, nargs='+',
                    default=[['rgb', True, False], ['left', False, False],
                             ['right', False, False], ['camd', True, False]],
                    help="Which camera sockets to enable, and type: c[olor] / m[ono] / t[of]. "
                    "E.g: -cams rgb,m right,c . Default: rgb,c left,m right,m camd,c")
parser.add_argument('-mres', '--mono-resolution', type=int, default=800, choices={480, 400, 720, 800},
                    help="Select mono camera resolution (height). Default: %(default)s")
parser.add_argument('-cres', '--color-resolution', default='1080', choices={'720', '800', '1080', '1012', '1200', '1520', '4k', '5mp', '12mp', '13mp', '48mp'},
                    help="Select color camera resolution / height. Default: %(default)s")
parser.add_argument('-rot', '--rotate', const='all', choices={'all', 'rgb', 'mono'}, nargs="?",
                    help="Which cameras to rotate 180 degrees. All if not filtered")
parser.add_argument('-fps', '--fps', type=float, default=30,
                    help="FPS to set for all cameras")
parser.add_argument('-isp3afps', '--isp3afps', type=int, default=0,
                    help="3A FPS to set for all cameras")
parser.add_argument('-ds', '--isp-downscale', default=1, type=int,
                    help="Downscale the ISP output by this factor")
parser.add_argument('-rs', '--resizable-windows', action='store_true',
                    help="Make OpenCV windows resizable. Note: may introduce some artifacts")
parser.add_argument('-tun', '--camera-tuning', type=Path,
                    help="Path to custom camera tuning database")
parser.add_argument('-raw', '--enable-raw', default=False, action="store_true",
                    help='Enable the RAW camera streams')
parser.add_argument('-tofraw', '--tof-raw', action='store_true',
                    help="Show just ToF raw output instead of post-processed depth")
parser.add_argument('-tofamp', '--tof-amplitude', action='store_true',
                    help="Show also ToF amplitude output alongside depth")
parser.add_argument('-tofcm', '--tof-cm', action='store_true',
                    help="Show ToF depth output in centimeters, capped to 255")
parser.add_argument('-tofmedian', '--tof-median', choices=[0,3,5,7], default=5, type=int,
                    help="ToF median filter kernel size")
parser.add_argument('-rgbprev', '--rgb-preview', action='store_true',
                    help="Show RGB `preview` stream instead of full size `isp`")
parser.add_argument('-d', '--device', default="", type=str,
                    help="Optional MX ID of the device to connect to.")
parser.add_argument('-ctimeout', '--connection-timeout', default=30000,
                    help="Connection timeout in ms. Default: %(default)s (sets DEPTHAI_CONNECTION_TIMEOUT environment variable)")
parser.add_argument('-btimeout', '--boot-timeout', default=30000,
                    help="Boot timeout in ms. Default: %(default)s (sets DEPTHAI_BOOT_TIMEOUT environment variable)")
args = parser.parse_args()

# Set timeouts before importing depthai
os.environ["DEPTHAI_CONNECTION_TIMEOUT"] = str(args.connection_timeout)
os.environ["DEPTHAI_BOOT_TIMEOUT"] = str(args.boot_timeout)
import depthai as dai

cam_list = []
cam_type_color = {}
cam_type_tof = {}
print("Enabled cameras:")
for socket, is_color, is_tof in args.cameras:
    cam_list.append(socket)
    cam_type_color[socket] = is_color
    cam_type_tof[socket] = is_tof
    print(socket.rjust(7), ':', 'tof' if is_tof else 'color' if is_color else 'mono')

print("DepthAI version:", dai.__version__)
print("DepthAI path:", dai.__file__)

cam_socket_opts = {
    'rgb'  : dai.CameraBoardSocket.CAM_A,
    'left' : dai.CameraBoardSocket.CAM_B,
    'right': dai.CameraBoardSocket.CAM_C,
    'cama' : dai.CameraBoardSocket.CAM_A,
    'camb' : dai.CameraBoardSocket.CAM_B,
    'camc' : dai.CameraBoardSocket.CAM_C,
    'camd' : dai.CameraBoardSocket.CAM_D,
}

rotate = {
    'rgb': args.rotate in ['all', 'rgb'],
    'left': args.rotate in ['all', 'mono'],
    'right': args.rotate in ['all', 'mono'],
    'cama': args.rotate in ['all', 'rgb'],
    'camb': args.rotate in ['all', 'mono'],
    'camc': args.rotate in ['all', 'mono'],
    'camd': args.rotate in ['all', 'rgb'],
}

mono_res_opts = {
    400: dai.MonoCameraProperties.SensorResolution.THE_400_P,
    480: dai.MonoCameraProperties.SensorResolution.THE_480_P,
    720: dai.MonoCameraProperties.SensorResolution.THE_720_P,
    800: dai.MonoCameraProperties.SensorResolution.THE_800_P,
    1200: dai.MonoCameraProperties.SensorResolution.THE_1200_P,
}

color_res_opts = {
    '720':  dai.ColorCameraProperties.SensorResolution.THE_720_P,
    '800':  dai.ColorCameraProperties.SensorResolution.THE_800_P,
    '1080': dai.ColorCameraProperties.SensorResolution.THE_1080_P,
    '1012': dai.ColorCameraProperties.SensorResolution.THE_1352X1012,
    '1200': dai.ColorCameraProperties.SensorResolution.THE_1200_P,
    '1520': dai.ColorCameraProperties.SensorResolution.THE_2024X1520,
    '4k':   dai.ColorCameraProperties.SensorResolution.THE_4_K,
    '5mp':  dai.ColorCameraProperties.SensorResolution.THE_5_MP,
    '12mp': dai.ColorCameraProperties.SensorResolution.THE_12_MP,
    '13mp': dai.ColorCameraProperties.SensorResolution.THE_13_MP,
    '48mp': dai.ColorCameraProperties.SensorResolution.THE_48_MP,
}


def clamp(num, v0, v1):
    return max(v0, min(num, v1))


# Calculates FPS over a moving window, configurable
class FPS:
    def __init__(self, window_size=30):
        self.dq = collections.deque(maxlen=window_size)
        self.fps = 0

    def update(self, timestamp=None):
        if timestamp == None:
            timestamp = time.monotonic()
        count = len(self.dq)
        if count > 0:
            self.fps = count / (timestamp - self.dq[0])
        self.dq.append(timestamp)

    def get(self):
        return self.fps


# Start defining a pipeline
pipeline = dai.Pipeline()
# Uncomment to get better throughput
# pipeline.setXLinkChunkSize(0)

control = pipeline.createXLinkIn()
control.setStreamName('control')

xinTofConfig = pipeline.createXLinkIn()
xinTofConfig.setStreamName('tofConfig')

cam = {}
tof = {}
xout = {}
xout_raw = {}
xout_tof_amp = {}
streams = []
tofConfig = {}
for c in cam_list:
    tofEnableRaw = False
    xout[c] = pipeline.createXLinkOut()
    xout[c].setStreamName(c)
    streams.append(c)
    if cam_type_tof[c]:
        cam[c] = pipeline.create(dai.node.ColorCamera)  # .Camera
        if args.tof_raw:
            tofEnableRaw = True
        else:
            tof[c] = pipeline.create(dai.node.ToF)
            cam[c].raw.link(tof[c].input)
            tof[c].depth.link(xout[c].input)
            xinTofConfig.out.link(tof[c].inputConfig)
            tofConfig = tof[c].initialConfig.get()
            tofConfig.depthParams.freqModUsed = dai.RawToFConfig.DepthParams.TypeFMod.MIN
            tofConfig.depthParams.avgPhaseShuffle = False
            tofConfig.depthParams.minimumAmplitude = 3.0
            if args.tof_median == 0:
                tofConfig.depthParams.median = dai.MedianFilter.MEDIAN_OFF
            elif args.tof_median == 3:
                tofConfig.depthParams.median = dai.MedianFilter.KERNEL_3x3
            elif args.tof_median == 5:
                tofConfig.depthParams.median = dai.MedianFilter.KERNEL_5x5
            elif args.tof_median == 7:
                tofConfig.depthParams.median = dai.MedianFilter.KERNEL_7x7
            tof[c].initialConfig.set(tofConfig)
            if args.tof_amplitude:
                amp_name = 'tof_amplitude_' + c
                xout_tof_amp[c] = pipeline.create(dai.node.XLinkOut)
                xout_tof_amp[c].setStreamName(amp_name)
                streams.append(amp_name)
                tof[c].amplitude.link(xout_tof_amp[c].input)
    elif cam_type_color[c]:
        cam[c] = pipeline.createColorCamera()
        cam[c].setResolution(color_res_opts[args.color_resolution])
        cam[c].setIspScale(1, args.isp_downscale)
        # cam[c].initialControl.setManualFocus(85) # TODO
        if args.rgb_preview:
            cam[c].preview.link(xout[c].input)
        else:
            cam[c].isp.link(xout[c].input)
    else:
        cam[c] = pipeline.createMonoCamera()
        cam[c].setResolution(mono_res_opts[args.mono_resolution])
        cam[c].out.link(xout[c].input)
    cam[c].setBoardSocket(cam_socket_opts[c])
    # Num frames to capture on trigger, with first to be discarded (due to degraded quality)
    # cam[c].initialControl.setExternalTrigger(2, 1)
    # cam[c].initialControl.setStrobeExternal(48, 1)
    # cam[c].initialControl.setFrameSyncMode(dai.CameraControl.FrameSyncMode.INPUT)

    # cam[c].initialControl.setManualExposure(15000, 400) # exposure [us], iso
    # When set, takes effect after the first 2 frames
    # cam[c].initialControl.setManualWhiteBalance(4000)  # light temperature in K, 1000..12000
    control.out.link(cam[c].inputControl)
    if rotate[c]:
        cam[c].setImageOrientation(dai.CameraImageOrientation.ROTATE_180_DEG)
    cam[c].setFps(args.fps)
    if args.isp3afps:
        cam[c].setIsp3aFps(args.isp3afps)

    if args.enable_raw or tofEnableRaw:
        raw_name = 'raw_' + c
        xout_raw[c] = pipeline.create(dai.node.XLinkOut)
        xout_raw[c].setStreamName(raw_name)
        streams.append(raw_name)
        cam[c].raw.link(xout_raw[c].input)
        cam[c].setRawOutputPacked(False)

if args.camera_tuning:
    pipeline.setCameraTuningBlobPath(str(args.camera_tuning))


def exit_cleanly(signum, frame):
    print("Exiting cleanly")
    cv2.destroyAllWindows()
    sys.exit(0)


signal.signal(signal.SIGINT, exit_cleanly)

# Pipeline is defined, now we can connect to the device
device = dai.Device.getDeviceByMxId(args.device)
dai_device_args = [pipeline]
if device[0]:
    dai_device_args.append(device[1])
with dai.Device(*dai_device_args) as device:
    # print('Connected cameras:', [c.name for c in device.getConnectedCameras()])
    print('Connected cameras:')
    cam_name = {}
    for p in device.getConnectedCameraFeatures():
        print(
            f' -socket {p.socket.name:6}: {p.sensorName:6} {p.width:4} x {p.height:4} focus:', end='')
        print('auto ' if p.hasAutofocus else 'fixed', '- ', end='')
        print(*[type.name for type in p.supportedTypes])
        cam_name[p.socket.name] = p.sensorName
        if args.enable_raw:
            cam_name['raw_'+p.socket.name] = p.sensorName
        if args.tof_amplitude:
            cam_name['tof_amplitude_'+p.socket.name] = p.sensorName

    print('USB speed:', device.getUsbSpeed().name)

    print('IR drivers:', device.getIrDrivers())

    q = {}
    fps_host = {}  # FPS computed based on the time we receive frames in app
    fps_capt = {}  # FPS computed based on capture timestamps from device
    for c in streams:
        q[c] = device.getOutputQueue(name=c, maxSize=4, blocking=False)
        # The OpenCV window resize may produce some artifacts
        if args.resizable_windows:
            cv2.namedWindow(c, cv2.WINDOW_NORMAL)
            cv2.resizeWindow(c, (640, 480))
        fps_host[c] = FPS()
        fps_capt[c] = FPS()

    controlQueue = device.getInputQueue('control')
    tofCfgQueue = device.getInputQueue('tofConfig')

    # Manual exposure/focus set step
    EXP_STEP = 500  # us
    ISO_STEP = 50
    LENS_STEP = 3
    DOT_STEP = 100
    FLOOD_STEP = 100
    DOT_MAX = 1200
    FLOOD_MAX = 1500

    # Defaults and limits for manual focus/exposure controls
    lensPos = 150
    lensMin = 0
    lensMax = 255

    expTime = 20000
    expMin = 1
    expMax = 33000

    sensIso = 800
    sensMin = 100
    sensMax = 1600

    dotIntensity = 0
    floodIntensity = 0

    awb_mode = cycle([item for name, item in vars(
        dai.CameraControl.AutoWhiteBalanceMode).items() if name.isupper()])
    anti_banding_mode = cycle([item for name, item in vars(
        dai.CameraControl.AntiBandingMode).items() if name.isupper()])
    effect_mode = cycle([item for name, item in vars(
        dai.CameraControl.EffectMode).items() if name.isupper()])

    ae_comp = 0
    ae_lock = False
    awb_lock = False
    saturation = 0
    contrast = 0
    brightness = 0
    sharpness = 0
    luma_denoise = 0
    chroma_denoise = 0
    control = 'none'
    show = False

    jet_custom = cv2.applyColorMap(np.arange(256, dtype=np.uint8), cv2.COLORMAP_JET)
    jet_custom[0] = [0, 0, 0]

    print("Cam:", *[' ' + c.ljust(8)
                    for c in cam_list], "[host | capture timestamp]")

    capture_list = []
    needs_newline = False  # set before first use in the `if show:` branch
    while True:
        for c in streams:
            try:
                pkt = q[c].tryGet()
            except Exception as e:
                print(e)
                exit_cleanly(0, 0)
            if pkt is not None:
                fps_host[c].update()
                fps_capt[c].update(pkt.getTimestamp().total_seconds())
                width, height = pkt.getWidth(), pkt.getHeight()
                frame = pkt.getCvFrame()
                cam_skt = c.split('_')[-1]
                if cam_type_tof[cam_skt] and not (c.startswith('raw_') or c.startswith('tof_amplitude_')):
                    if args.tof_cm:
                        # pixels represent `cm`, capped to 255. Value can be checked hovering the mouse
                        frame = (frame // 10).clip(0, 255).astype(np.uint8)
                    else:
                        frame = (frame.view(np.int16).astype(float))
                        frame = cv2.normalize(frame, frame, alpha=255, beta=0, norm_type=cv2.NORM_MINMAX, dtype=cv2.CV_8U)
                    frame = cv2.applyColorMap(frame, jet_custom)
                if show:
                    txt = f"[{c:5}, {pkt.getSequenceNum():4}] "
                    txt += f"Exp: {pkt.getExposureTime().total_seconds()*1000:6.3f} ms, "
                    txt += f"ISO: {pkt.getSensitivity():4}, "
                    txt += f"Lens pos: {pkt.getLensPosition():3}, "
                    txt += f"Color temp: {pkt.getColorTemperature()} K"
                    if needs_newline:
                        print()
                        needs_newline = False
                    print(txt)
                capture = c in capture_list
                if capture:
                    capture_file_info = ('capture_' + c + '_' + cam_name[cam_socket_opts[cam_skt].name]
                                         + '_' + str(width) + 'x' + str(height)
                                         + '_exp_' + str(int(pkt.getExposureTime().total_seconds()*1e6))
                                         + '_iso_' + str(pkt.getSensitivity())
                                         + '_lens_' + str(pkt.getLensPosition())
                                         + '_' + capture_time
                                         + '_' + str(pkt.getSequenceNum())
                                         )
                    capture_list.remove(c)
                    print()
                if c.startswith('raw_') or c.startswith('tof_amplitude_'):
                    if capture:
                        filename = capture_file_info + '_10bit.bw'
                        print('Saving:', filename)
                        frame.tofile(filename)
                    # Full range for display: move N-bit samples to the top of the 16-bit pixels
                    # (bits [15:6] for RAW10, bits [15:4] for RAW12)
                    type = pkt.getType()
                    multiplier = 1
                    if type == dai.ImgFrame.Type.RAW10: multiplier = (1 << (16-10))
                    if type == dai.ImgFrame.Type.RAW12: multiplier = (1 << (16-12))
                    frame = frame * multiplier
                    # Debayer as color for preview/png
                    if cam_type_color[cam_skt]:
                        # See this for the ordering, at the end of page:
                        # https://docs.opencv.org/4.5.1/de/d25/imgproc_color_conversions.html
                        # TODO add bayer order to ImgFrame getType()
                        frame = cv2.cvtColor(frame, cv2.COLOR_BayerGB2BGR)
                else:
                    # Save YUV too, but only when RAW is also enabled (for tuning purposes)
                    if capture and args.enable_raw:
                        payload = pkt.getData()
                        filename = capture_file_info + '_P420.yuv'
                        print('Saving:', filename)
                        payload.tofile(filename)
                if capture:
                    filename = capture_file_info + '.png'
                    print('Saving:', filename)

        print("\rFPS:",
              *["{:6.2f}|{:6.2f}".format(fps_host[c].get(), fps_capt[c].get()) for c in cam_list],
              end=' ', flush=True)
        needs_newline = True

    print()
```
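In the RAW display path, the multiplier moves an N-bit sample into the top N bits of a 16-bit pixel (bits [15:6] for RAW10). The factor is therefore `1 << (16 - N)`: 64 for RAW10 and 16 for RAW12. A factor of `1 << 12` would overflow the 16-bit range for all but the smallest 12-bit samples. The pure-Python sketch below only shows the arithmetic. The function names are hypothetical, and it does not touch the DepthAI API.

```python
def raw_display_multiplier(bits_per_pixel: int) -> int:
    """Factor that left-aligns an N-bit RAW sample in a 16-bit word."""
    if not 1 <= bits_per_pixel <= 16:
        raise ValueError("bits_per_pixel must be between 1 and 16")
    return 1 << (16 - bits_per_pixel)


def scale_to_16bit(sample: int, bits_per_pixel: int) -> int:
    """Scale one RAW sample to the full 16-bit display range."""
    max_sample = (1 << bits_per_pixel) - 1
    if not 0 <= sample <= max_sample:
        raise ValueError(f"sample must be in 0..{max_sample}")
    return sample * raw_display_multiplier(bits_per_pixel)


# The brightest RAW10 and RAW12 samples both land just below 0xFFFF.
print(scale_to_16bit(1023, 10))  # 65472
print(scale_to_16bit(4095, 12))  # 65520
```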

`dmesg` output:

```
[ 0.106724] usbcore: registered new interface driver usbfs
[ 0.106760] usbcore: registered new interface driver hub
[ 0.106785] usbcore: registered new device driver usb
[ 0.218926] ehci_hcd: USB 2.0 'Enhanced' Host Controller (EHCI) Driver
[ 0.219168] ohci_hcd: USB 1.1 'Open' Host Controller (OHCI) Driver
[ 0.219941] usbcore: registered new interface driver uas
[ 0.219985] usbcore: registered new interface driver usb-storage
[ 0.220051] usbcore: registered new interface driver usbserial_generic
[ 0.220074] usbserial: USB Serial support registered for generic
[ 0.220101] usbcore: registered new interface driver ftdi_sio
[ 0.220123] usbserial: USB Serial support registered for FTDI USB Serial Device
[ 0.220155] usbcore: registered new interface driver usb_serial_simple
[ 0.220174] usbserial: USB Serial support registered for carelink
[ 0.220192] usbserial: USB Serial support registered for zio
[ 0.220213] usbserial: USB Serial support registered for funsoft
[ 0.220234] usbserial: USB Serial support registered for flashloader
[ 0.220252] usbserial: USB Serial support registered for google
[ 0.220273] usbserial: USB Serial support registered for libtransistor
[ 0.220291] usbserial: USB Serial support registered for vivopay
[ 0.220310] usbserial: USB Serial support registered for moto_modem
[ 0.220329] usbserial: USB Serial support registered for motorola_tetra
[ 0.220352] usbserial: USB Serial support registered for nokia
[ 0.220376] usbserial: USB Serial support registered for novatel_gps
[ 0.220394] usbserial: USB Serial support registered for hp4x
[ 0.220414] usbserial: USB Serial support registered for suunto
[ 0.220432] usbserial: USB Serial support registered for siemens_mpi
[ 0.220464] usbcore: registered new interface driver usb_ehset_test
[ 0.232796] usbcore: registered new interface driver usbhid
[ 0.232804] usbhid: USB HID core driver
[ 2.330630] xhci-hcd xhci-hcd.1.auto: new USB bus registered, assigned bus number 1
[ 2.354657] hub 1-0:1.0: USB hub found
[ 2.368113] xhci-hcd xhci-hcd.1.auto: new USB bus registered, assigned bus number 2
[ 2.375785] xhci-hcd xhci-hcd.1.auto: Host supports USB 3.0 SuperSpeed
[ 2.382369] usb usb2: We don't know the algorithms for LPM for this host, disabling LPM.
[ 2.390862] hub 2-0:1.0: USB hub found
[ 2.626376] usb 1-1: new high-speed USB device number 2 using xhci-hcd
[ 2.831652] hub 1-1:1.0: USB hub found
[ 2.915041] usb 2-1: new SuperSpeed USB device number 2 using xhci-hcd
[ 2.975321] hub 2-1:1.0: USB hub found
[ 815.625929] usb 1-1.2: new high-speed USB device number 3 using xhci-hcd
[ 855.418717] usb 1-1.2: USB disconnect, device number 3
[ 856.164748] usb 2-1.2: new SuperSpeed USB device number 3 using xhci-hcd
[ 856.660836] usb 2-1.2: USB disconnect, device number 3
[ 856.908715] usb 1-1.2: new high-speed USB device number 4 using xhci-hcd
[ 3411.634561] usb 1-1.2: USB disconnect, device number 4
[ 3412.437928] usb 2-1.2: new SuperSpeed USB device number 4 using xhci-hcd
[ 3412.936592] usb 2-1.2: USB disconnect, device number 4
[ 3413.189887] usb 1-1.2: new high-speed USB device number 5 using xhci-hcd
[ 3458.339968] usb 1-1.2: USB disconnect, device number 5
[ 3458.987736] usb 2-1.2: new SuperSpeed USB device number 5 using xhci-hcd
[ 3459.484450] usb 2-1.2: USB disconnect, device number 5
[ 3459.731690] usb 1-1.2: new high-speed USB device number 6 using xhci-hcd
[ 3560.178248] usb 1-1.2: USB disconnect, device number 6
[ 3560.960914] usb 2-1.2: new SuperSpeed USB device number 6 using xhci-hcd
[ 3561.460132] usb 2-1.2: USB disconnect, device number 6
[ 3561.708871] usb 1-1.2: new high-speed USB device number 7 using xhci-hcd
[ 3868.213111] usb 1-1.2: USB disconnect, device number 7
[ 3869.010991] usb 2-1.2: new SuperSpeed USB device number 7 using xhci-hcd
[ 3869.507605] usb 2-1.2: USB disconnect, device number 7
[ 3869.758962] usb 1-1.2: new high-speed USB device number 8 using xhci-hcd
[ 3914.143082] usb 1-1.2: USB disconnect, device number 8
[ 3914.889198] usb 2-1.2: new SuperSpeed USB device number 8 using xhci-hcd
[ 3915.385079] usb 2-1.2: USB disconnect, device number 8
[ 3915.633171] usb 1-1.2: new high-speed USB device number 9 using xhci-hcd
[ 4792.704395] usb 1-1.2: USB disconnect, device number 9
[ 4802.326924] usb 1-1.2: new high-speed USB device number 10 using xhci-hcd
[ 4918.635710] usb 1-1.2: USB disconnect, device number 10
[ 4919.369358] usb 1-1.2: new high-speed USB device number 11 using xhci-hcd
[ 4920.229714] usb 1-1.2: USB disconnect, device number 11
[ 4920.457374] usb 1-1.2: new high-speed USB device number 12 using xhci-hcd
[ 5583.458666] usb 1-1.2: USB disconnect, device number 12
[ 5584.139156] usb 2-1.2: new SuperSpeed USB device number 9 using xhci-hcd
[ 5584.637933] usb 2-1.2: USB disconnect, device number 9
[ 5584.887094] usb 1-1.2: new high-speed USB device number 13 using xhci-hcd
[ 5792.584877] usb 1-1.2: USB disconnect, device number 13
[ 5793.349417] usb 2-1.2: new SuperSpeed USB device number 10 using xhci-hcd
[ 5793.847847] usb 2-1.2: USB disconnect, device number 10
[ 5794.093378] usb 1-1.2: new high-speed USB device number 14 using xhci-hcd
[ 5926.282468] usb 1-1.2: USB disconnect, device number 14
[ 5926.954783] usb 2-1.2: new SuperSpeed USB device number 11 using xhci-hcd
[ 5927.451571] usb 2-1.2: USB disconnect, device number 11
[ 5927.698723] usb 1-1.2: new high-speed USB device number 15 using xhci-hcd
[ 5972.087062] usb 1-1.2: USB disconnect, device number 15
[ 5972.831228] usb 2-1.2: new SuperSpeed USB device number 12 using xhci-hcd
[ 5973.327165] usb 2-1.2: USB disconnect, device number 12
[ 5973.575175] usb 1-1.2: new high-speed USB device number 16 using xhci-hcd
[ 5986.989801] usb 1-1.2: USB disconnect, device number 16
[ 5987.647373] usb 2-1.2: new SuperSpeed USB device number 13 using xhci-hcd
[ 5988.142063] usb 2-1.2: USB disconnect, device number 13
[ 5988.395327] usb 1-1.2: new high-speed USB device number 17 using xhci-hcd
[ 6047.624040] usb 1-1.2: USB disconnect, device number 17
[ 6048.335964] usb 2-1.2: new SuperSpeed USB device number 14 using xhci-hcd
[ 6048.817982] usb 2-1.2: USB disconnect, device number 14
[ 6049.079914] usb 1-1.2: new high-speed USB device number 18 using xhci-hcd
[ 8474.028855] usb 1-1.2: USB disconnect, device number 18
[ 8474.688034] usb 2-1.2: new SuperSpeed USB device number 15 using xhci-hcd
[ 8475.186354] usb 2-1.2: USB disconnect, device number 15
[ 8475.444031] usb 1-1.2: new high-speed USB device number 19 using xhci-hcd
[ 9830.665322] usb 1-1.2: USB disconnect, device number 19
[ 9831.274943] usb 2-1.2: new SuperSpeed USB device number 16 using xhci-hcd
[ 9831.608949] usb 2-1.2: USB disconnect, device number 16
[ 9832.050900] usb 1-1.2: new high-speed USB device number 20 using xhci-hcd
[ 9890.250025] usb 1-1.2: USB disconnect, device number 20
[ 9890.944911] usb 2-1.2: new SuperSpeed USB device number 17 using xhci-hcd
[ 9891.381399] usb 2-1.2: USB disconnect, device number 17
[ 9891.828884] usb 1-1.2: new high-speed USB device number 21 using xhci-hcd
[ 9898.732531] usb 1-1.2: USB disconnect, device number 21
[ 9899.385192] usb 2-1.2: new SuperSpeed USB device number 18 using xhci-hcd
[ 9899.819532] usb 2-1.2: USB disconnect, device number 18
[ 9900.265147] usb 1-1.2: new high-speed USB device number 22 using xhci-hcd
[ 9907.174434] usb 1-1.2: USB disconnect, device number 22
[ 9912.181554] usb 1-1.2: new high-speed USB device number 23 using xhci-hcd
[ 9917.652950] usb 1-1.2: USB disconnect, device number 23
[ 9918.333818] usb 2-1.2: new SuperSpeed USB device number 19 using xhci-hcd
[ 9918.780700] usb 2-1.2: USB disconnect, device number 19
[ 9919.221770] usb 1-1.2: new high-speed USB device number 24 using xhci-hcd
[ 9927.647703] usb 1-1.2: USB disconnect, device number 24
[ 9928.382138] usb 2-1.2: new SuperSpeed USB device number 20 using xhci-hcd
[ 9928.704055] usb 2-1.2: USB disconnect, device number 20
[ 9929.150106] usb 1-1.2: new high-speed USB device number 25 using xhci-hcd
[ 9966.628105] usb 1-1.2: USB disconnect, device number 25
[ 9967.275414] usb 2-1.2: new SuperSpeed USB device number 21 using xhci-hcd
[10131.774225] usb 2-1.2: USB disconnect, device number 21
[10132.224706] usb 1-1.2: new high-speed USB device number 26 using xhci-hcd
```
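The log alternates between a high-speed (USB 2) device on 1-1.2 and a SuperSpeed (USB 3) device on 2-1.2. Pairing each kernel connect message with its matching disconnect, by port and device number, makes the session lengths easy to compare. The sketch below does this with a regular expression written only against the two message shapes that appear in this log. It is not a general `dmesg` parser, and the names are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Matches only the two kernel message shapes seen in this log (an assumption).
USB_EVENT_RE = re.compile(
    r"\[\s*(?P<ts>\d+\.\d+)\]\s+usb (?P<port>\d+-[\d.]+): "
    r"(?:new (?P<speed>\S+) USB device number (?P<num>\d+)"
    r"|USB disconnect, device number (?P<dnum>\d+))"
)


@dataclass
class UsbSession:
    port: str
    speed: str
    device_number: int
    connected_at: float
    disconnected_at: Optional[float] = None

    @property
    def duration(self) -> Optional[float]:
        """Seconds between connect and disconnect, or None if still connected."""
        if self.disconnected_at is None:
            return None
        return self.disconnected_at - self.connected_at


def parse_usb_sessions(dmesg_text: str) -> List[UsbSession]:
    """Pair 'new ... USB device' and 'USB disconnect' lines into sessions, in log order."""
    sessions: List[UsbSession] = []
    open_sessions: Dict[Tuple[str, int], UsbSession] = {}
    for line in dmesg_text.splitlines():
        match = USB_EVENT_RE.search(line)
        if match is None:
            continue
        ts = float(match.group("ts"))
        port = match.group("port")
        if match.group("speed") is not None:
            number = int(match.group("num"))
            session = UsbSession(port, match.group("speed"), number, ts)
            sessions.append(session)
            open_sessions[(port, number)] = session
        else:
            # Close the session opened on the same port with the same device number.
            session = open_sessions.pop((port, int(match.group("dnum"))), None)
            if session is not None:
                session.disconnected_at = ts
    return sessions


sample = """\
[ 815.625929] usb 1-1.2: new high-speed USB device number 3 using xhci-hcd
[ 855.418717] usb 1-1.2: USB disconnect, device number 3
[ 856.164748] usb 2-1.2: new SuperSpeed USB device number 3 using xhci-hcd
[ 856.660836] usb 2-1.2: USB disconnect, device number 3
[ 856.908715] usb 1-1.2: new high-speed USB device number 4 using xhci-hcd
"""

for s in parse_usb_sessions(sample):
    length = "still connected" if s.duration is None else f"{s.duration:.3f} s"
    print(f"{s.port:6} {s.speed:11} #{s.device_number:<3} {length}")
```

In the full log above, every SuperSpeed session on 2-1.2 except the last one (device number 21, about 164 s) ends within roughly half a second of connecting.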