Hi from Pollen Robotics 🙂

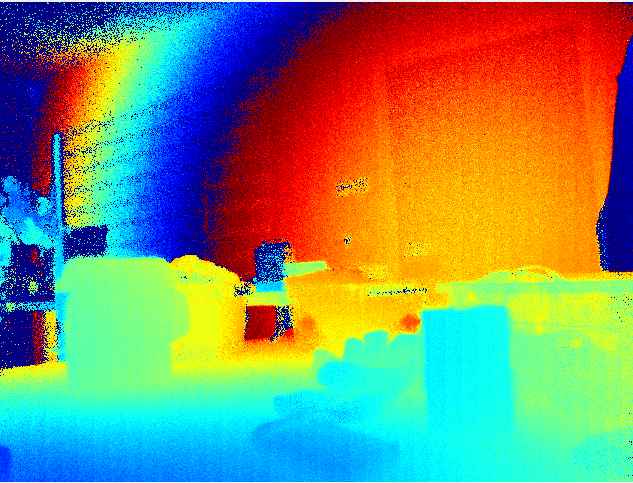

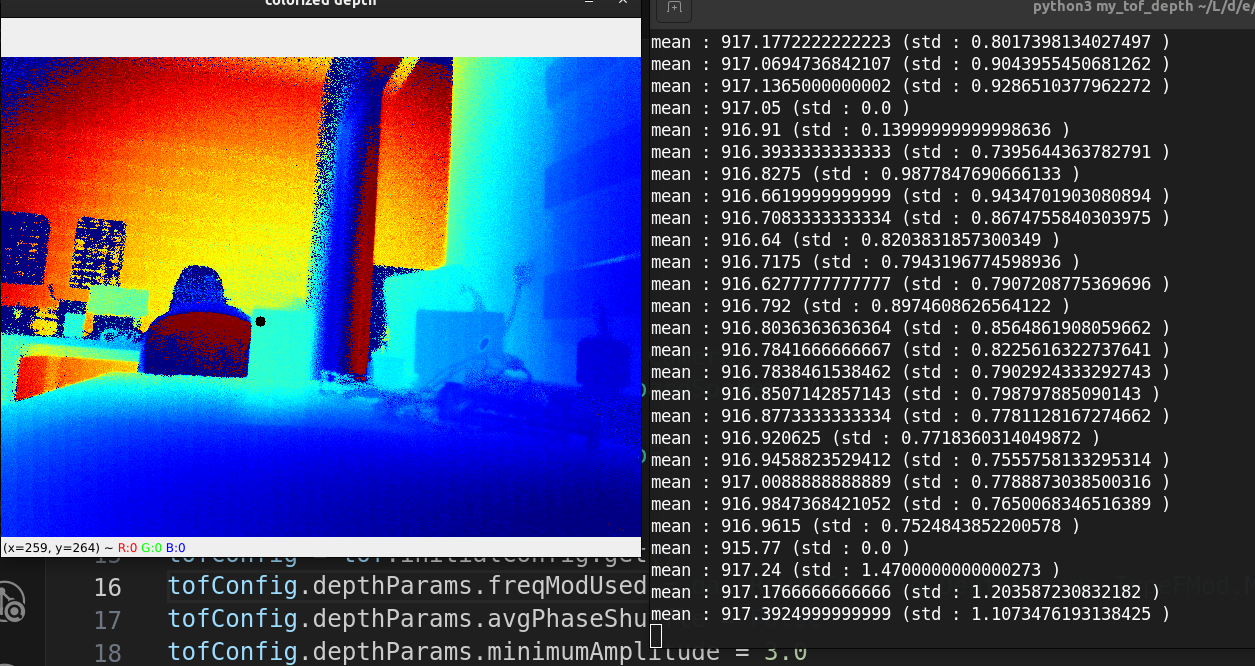

We just received our ToF module. Using this example we get a pretty nice looking depthmap

(Except for the looping colors, but I assume that over a few meters the sensor is not accurate anymore)

(We are using an OAK FFC-4P btw)

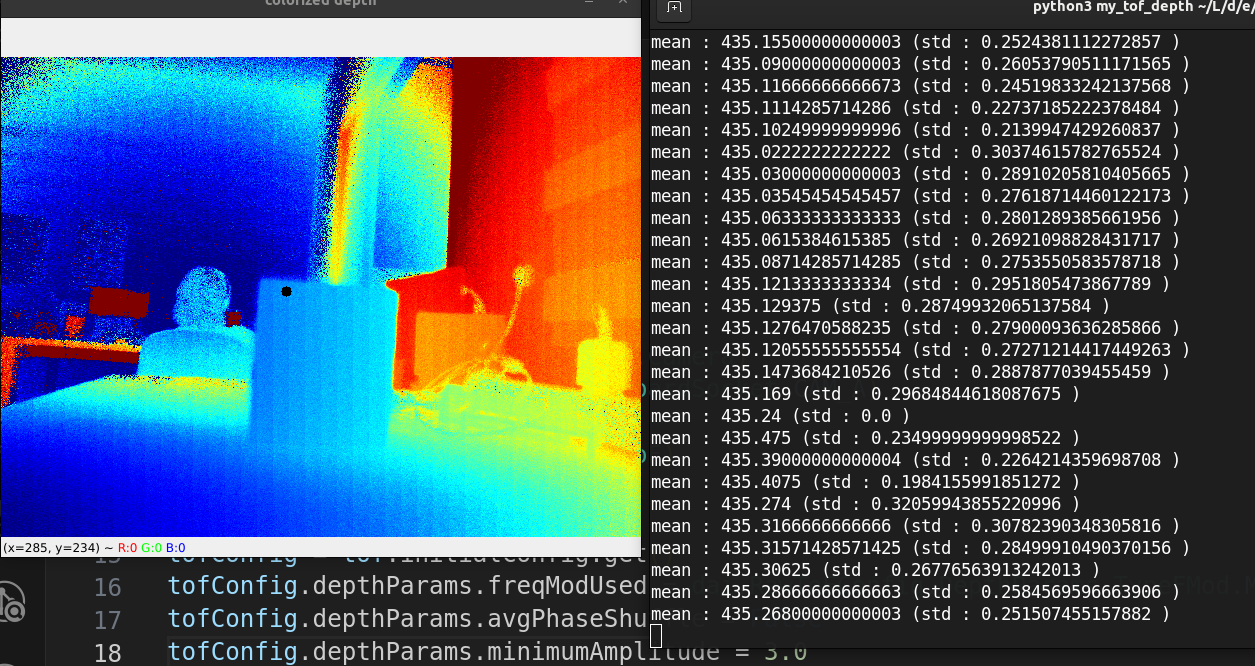

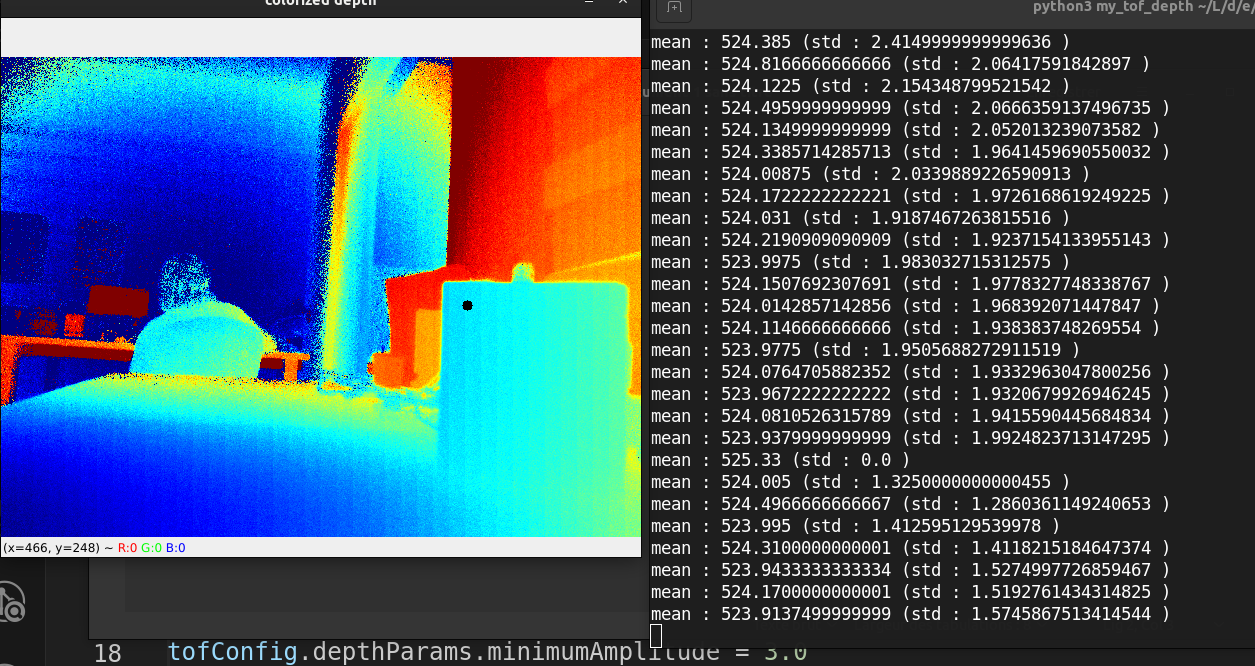

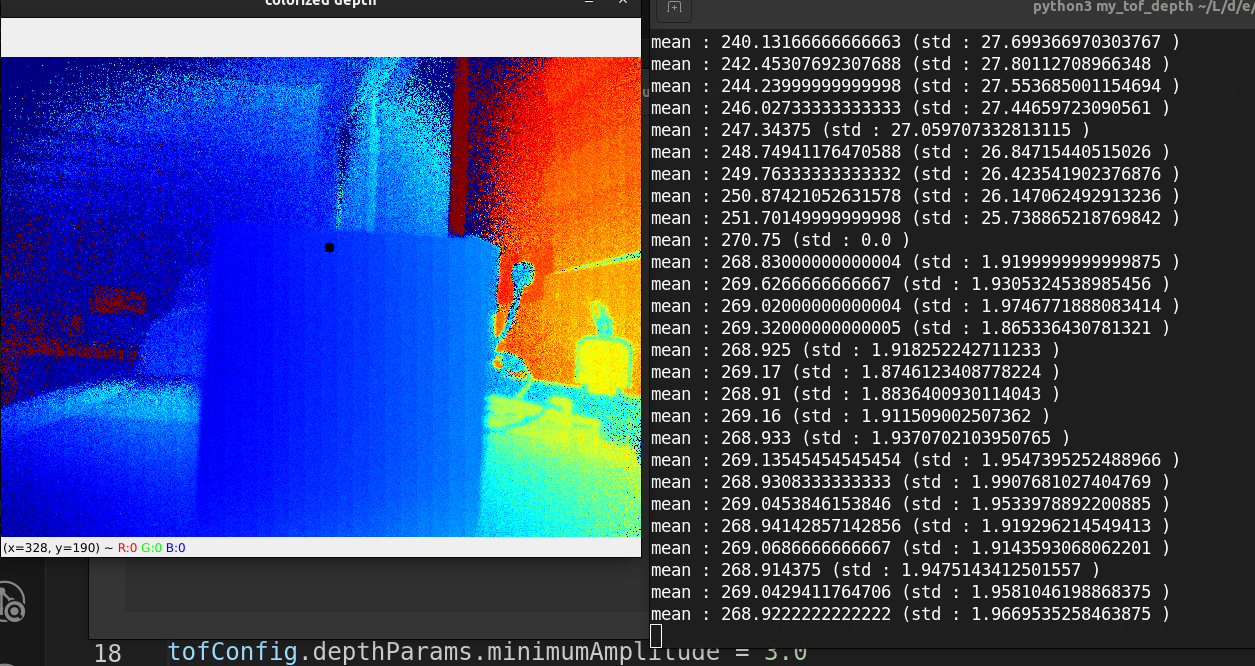

However, when I try to mesure distances, they are pretty far off most of the time. For example

Real distance (black circle) ~= 380

Real distance ~= 340

Real distance ~= 195

Real distance ~= 880

A few questions :

- I don't understand what the different parameters are doing, and the documentation is not very helpful to me (this is the first time I work with a ToF sensor). Could you explain what freqModUsed, avgPhaseShuffle, and minimumAmplitude are doing ?

- Am I right to expect millimeters out of the depth map ?

- Is there any calibration needed ? Could that have been degraded during transport ?

Here is my code for depth mesurement in the image (at the position of the cursor), based on the provided example

#!/usr/bin/env python3

import cv2

import depthai as dai

import numpy as np

pipeline = dai.Pipeline()

cam_a = pipeline.create(dai.node.Camera)

cam_a.setBoardSocket(dai.CameraBoardSocket.CAM_A)

tof = pipeline.create(dai.node.ToF)

# Configure the ToF node

tofConfig = tof.initialConfig.get()

tofConfig.depthParams.freqModUsed = dai.RawToFConfig.DepthParams.TypeFMod.MAX

tofConfig.depthParams.avgPhaseShuffle = False

tofConfig.depthParams.minimumAmplitude = 3.0

tof.initialConfig.set(tofConfig)

cam_a.raw.link(tof.input)

xout = pipeline.create(dai.node.XLinkOut)

xout.setStreamName("depth")

tof.depth.link(xout.input)

last_mouse_pos = (0, 0)

last_depth_mesurement = 0

def mouse_callback(event, x, y, flags, params):

global last_mouse_pos

last_mouse_pos = (x, y)

cv2.namedWindow("colorized depth")

cv2.setMouseCallback("colorized depth", mouse_callback)

time_averaged_depth = []

# Connect to device and start pipeline

with dai.Device(pipeline) as device:

print('Connected cameras:', device.getConnectedCameraFeatures())

q = device.getOutputQueue(name="depth")

while True:

imgFrame = q.get()

depth_map = imgFrame.getFrame()

depth_downscaled = depth_map[::4]

non_zero_depth = depth_downscaled[depth_downscaled != 0] # Remove invalid depth values

if len(non_zero_depth) == 0:

min_depth, max_depth = 0, 0

else:

min_depth = np.percentile(non_zero_depth, 3)

max_depth = np.percentile(non_zero_depth, 97)

last_depth_mesurement = depth_map[last_mouse_pos[1]-5:last_mouse_pos[1]+5, last_mouse_pos[0]-5:last_mouse_pos[0]+5]

last_depth_mesurement = np.mean(last_depth_mesurement)

time_averaged_depth.append(last_depth_mesurement)

time_averaged_depth = time_averaged_depth[20:] if len(time_averaged_depth) > 20 else time_averaged_depth

print("mean :", np.mean(time_averaged_depth), "(std :", np.std(time_averaged_depth), ")")

depth_colorized = np.interp(depth_map, (min_depth, max_depth), (0, 255)).astype(np.uint8)

depth_colorized = cv2.applyColorMap(depth_colorized, cv2.COLORMAP_JET)

depth_colorized = cv2.circle(depth_colorized, last_mouse_pos, 5, (0, 0, 0), -1)

cv2.imshow("colorized depth", depth_colorized)

if cv2.waitKey(1) == ord('q'):

break

Thanks !

Antoine