I'm using a 5V 3A power supply for the RPi 4 and a USB-C (USB 3) cable to connect the OAK-1 camera.

For the first few minutes after I start it each day, it runs without crashing. After that, it throws X_LINK_ERROR every few seconds. It works fine when running on my Mac, but not on the RPi.

What could be causing this?

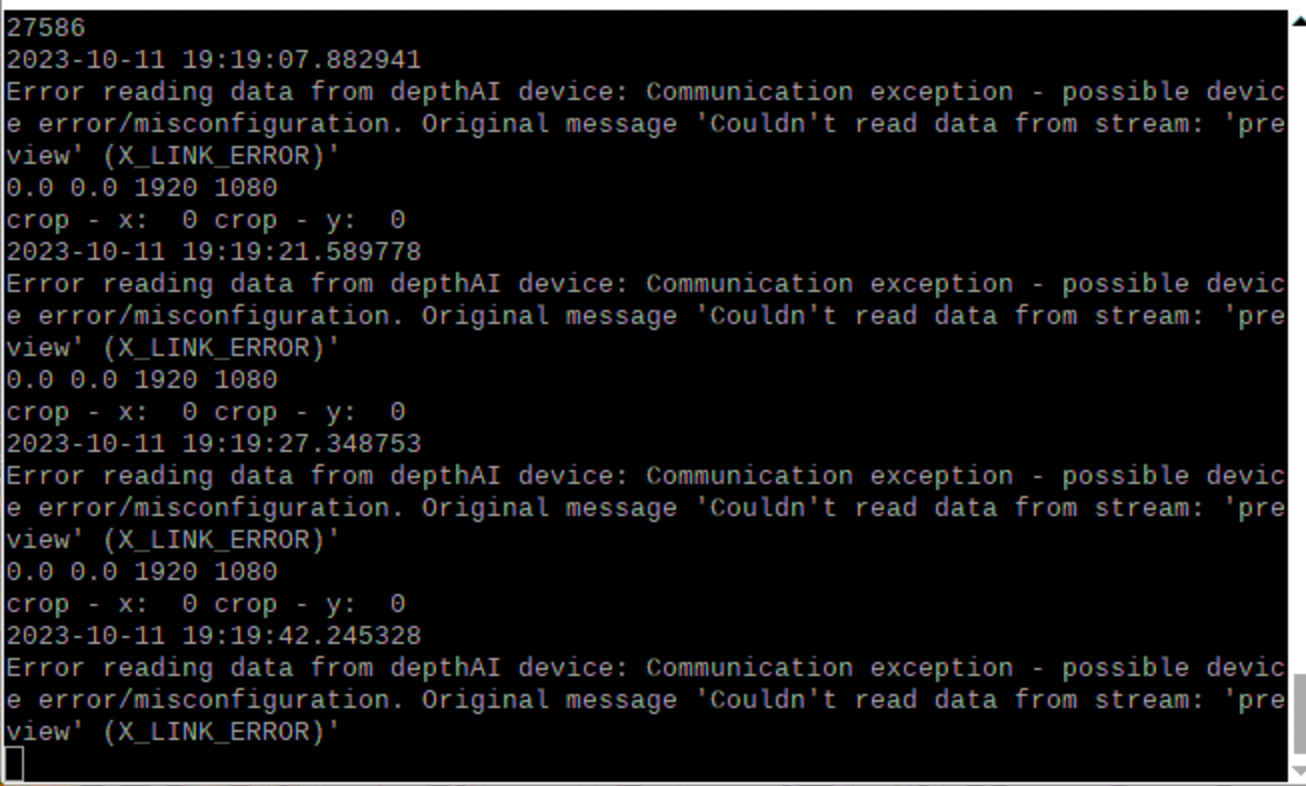

Console Logs:

The first image is from the initial startup; the second is from while it is running.

Code:

```python
from pathlib import Path
import sys
import os
import cv2
import depthai as dai
import numpy as np
import time
import argparse
import json
import blobconverter
import datetime


# Print count at set interval
def print_count(count, previous_time):
    # Print every X seconds
    TIME_INTERVAL = 60
    timestamp = datetime.datetime.utcnow()
    # Interval time hasn't passed, do nothing and pass same previous_time out
    # (total_seconds() measures the whole gap; .seconds would ignore full days)
    if (timestamp - previous_time).total_seconds() < TIME_INTERVAL:
        return previous_time
    # Interval time passed, print
    print(count)
    return timestamp


# parse arguments
parser = argparse.ArgumentParser()
parser.add_argument("-m", "--model", help="Provide model name or model path for inference",
                    default='best_openvino_2022.1_6shave.blob', type=str)
parser.add_argument("-c", "--config", help="Provide config path for inference",
                    default='best_openvino_2022.1_6shave.json', type=str)
parser.add_argument('-roi', '--roi_position', type=float,
                    default=0.25, help='ROI Position (0-1)')
# store_true: passing -a sets the flag (store_false with default=False could never be True)
parser.add_argument('-a', '--axis', default=False, action='store_true',
                    help='Axis for cumulative counting (default=x axis)')
args = parser.parse_args()

# parse config
configPath = Path(args.config)
if not configPath.exists():
    raise ValueError("Path {} does not exist!".format(configPath))

with configPath.open() as f:
    config = json.load(f)
nnConfig = config.get("nn_config", {})

# parse input shape
if "input_size" in nnConfig:
    W, H = tuple(map(int, nnConfig.get("input_size").split('x')))

# extract metadata
metadata = nnConfig.get("NN_specific_metadata", {})
classes = metadata.get("classes", {})
coordinates = metadata.get("coordinates", {})
anchors = metadata.get("anchors", {})
anchorMasks = metadata.get("anchor_masks", {})
iouThreshold = metadata.get("iou_threshold", {})
confidenceThreshold = metadata.get("confidence_threshold", {})
print(metadata)

# parse labels
nnMappings = config.get("mappings", {})
labels = nnMappings.get("labels", {})

# get model path
nnPath = args.model
if not Path(nnPath).exists():
    print("No blob found at {}.".format(nnPath))
    nnPath = str(blobconverter.from_zoo(args.model, shaves=6, zoo_type="depthai", use_cache=True))

# sync outputs
syncNN = True

# Create pipeline
pipeline = dai.Pipeline()

# Define sources and outputs
camRgb = pipeline.create(dai.node.ColorCamera)
detectionNetwork = pipeline.create(dai.node.YoloDetectionNetwork)
objectTracker = pipeline.create(dai.node.ObjectTracker)
configIn = pipeline.create(dai.node.XLinkIn)
xlinkOut = pipeline.create(dai.node.XLinkOut)
trackerOut = pipeline.create(dai.node.XLinkOut)

xlinkOut.setStreamName("preview")
trackerOut.setStreamName("tracklets")
configIn.setStreamName('config')

# Properties
camRgb.setPreviewSize(W, H)
camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)
camRgb.setInterleaved(False)
camRgb.setColorOrder(dai.ColorCameraProperties.ColorOrder.BGR)
camRgb.setFps(35)
camRgb.setPreviewKeepAspectRatio(False)

# Network specific settings
detectionNetwork.setConfidenceThreshold(confidenceThreshold)
detectionNetwork.setNumClasses(classes)
detectionNetwork.setCoordinateSize(coordinates)
detectionNetwork.setAnchors(anchors)
detectionNetwork.setAnchorMasks(anchorMasks)
detectionNetwork.setIouThreshold(iouThreshold)
detectionNetwork.setBlobPath(nnPath)
detectionNetwork.setNumInferenceThreads(2)
detectionNetwork.input.setBlocking(False)

objectTracker.setDetectionLabelsToTrack([0])  # track only person
# possible tracking types: ZERO_TERM_COLOR_HISTOGRAM, ZERO_TERM_IMAGELESS, SHORT_TERM_IMAGELESS, SHORT_TERM_KCF
objectTracker.setTrackerType(dai.TrackerType.ZERO_TERM_COLOR_HISTOGRAM)
# give each newly tracked object a new, unique ID; possible options: SMALLEST_ID, UNIQUE_ID
objectTracker.setTrackerIdAssignmentPolicy(dai.TrackerIdAssignmentPolicy.UNIQUE_ID)
objectTracker.setTrackerThreshold(0.5)

# Linking
camRgb.preview.link(detectionNetwork.input)
objectTracker.passthroughTrackerFrame.link(xlinkOut.input)
configIn.out.link(camRgb.inputConfig)
camRgb.preview.link(objectTracker.inputTrackerFrame)
#detectionNetwork.passthrough.link(objectTracker.inputTrackerFrame)
detectionNetwork.passthrough.link(objectTracker.inputDetectionFrame)
detectionNetwork.out.link(objectTracker.inputDetections)
objectTracker.out.link(trackerOut.input)


# from https://www.pyimagesearch.com/2018/08/13/opencv-people-counter/
class TrackableObject:
    def __init__(self, objectID, centroid):
        # store the object ID, then initialize a list of centroids
        # using the current centroid
        self.objectID = objectID
        self.centroids = [centroid]
        # initialize a boolean used to indicate if the object has
        # already been counted or not
        self.counted = False


# Set initial timestamp for print interval
timestamp = datetime.datetime.utcnow()

# Set initial count from counter.txt file
with open("counter.txt", "r") as f:
    objectCounter = int(f.read())

while True:
    try:
        # Connect to device and start pipeline
        with dai.Device(pipeline) as device:
            #preview = device.getOutputQueue("preview", 4, False)
            preview = device.getOutputQueue("preview", 1, False)
            tracklets = device.getOutputQueue("tracklets", 4, False)
            configQueue = device.getInputQueue("config")

            # Max cropX & cropY
            maxCropX = (camRgb.getIspWidth() - camRgb.getVideoWidth()) / camRgb.getIspWidth()
            maxCropY = (camRgb.getIspHeight() - camRgb.getVideoHeight()) / camRgb.getIspHeight()
            print(maxCropX, maxCropY, camRgb.getIspWidth(), camRgb.getVideoHeight())

            # Default crop
            cropX = 0
            cropY = 0
            sendCamConfig = True

            if sendCamConfig:
                cfg = dai.ImageManipConfig()
                cfg.setCropRect(cropX, cropY, maxCropX, maxCropY)
                configQueue.send(cfg)
                print('crop - x: ', cropX, 'crop - y: ', cropY)

            startTime = time.monotonic()
            counter = 0
            fps = 0
            frame = None
            trackableObjects = {}

            while True:
                try:
                    imgFrame = preview.get()
                    track = tracklets.get()

                    counter += 1
                    current_time = time.monotonic()
                    if (current_time - startTime) > 1:
                        fps = counter / (current_time - startTime)
                        counter = 0
                        startTime = current_time

                    color = (255, 0, 0)
                    frame = imgFrame.getCvFrame()
                    height = frame.shape[0]
                    width = frame.shape[1]
                    trackletsData = track.tracklets
                    for t in trackletsData:
                        to = trackableObjects.get(t.id, None)

                        # calculate centroid
                        roi = t.roi.denormalize(frame.shape[1], frame.shape[0])
                        x1 = int(roi.topLeft().x)
                        y1 = int(roi.topLeft().y)
                        x2 = int(roi.bottomRight().x)
                        y2 = int(roi.bottomRight().y)
                        centroid = (int((x2-x1)/2+x1), int((y2-y1)/2+y1))
                        newX = int((x2-x1)/2+x1)
                        newY = int((y2-y1)/2+y1)

                        # If new tracklet, save its centroid
                        if t.status == dai.Tracklet.TrackingStatus.NEW:
                            to = TrackableObject(t.id, centroid)
                        elif to is not None:
                            if not to.counted:
                                y = [c[1] for c in to.centroids]
                                direction = centroid[1] - np.mean(y)
                                if centroid[1] > args.roi_position*height and direction > 0 and np.mean(y) < args.roi_position*height:
                                    objectCounter += 1
                                    if objectCounter > 999999:
                                        objectCounter = 0
                                    with open("counter.txt", "w") as f:
                                        f.write(str(objectCounter))
                                    to.counted = True
                            to.centroids.append(centroid)

                        trackableObjects[t.id] = to

                    # Update timestamp and print if interval time passed
                    timestamp = print_count(objectCounter, timestamp)

                except RuntimeError as e:
                    print(datetime.datetime.utcnow())
                    print(f"Error reading data from depthAI device: {e}")
                    break  # Break out of loop and reinitialize
    except Exception as e:
        # Catch Exception rather than using a bare except, so Ctrl+C (KeyboardInterrupt) still stops the script
        print(f"Failed to (re)connect to device: {e}")
        time.sleep(1)  # short pause before trying to reconnect
        continue
```
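The `print_count` helper in the script prints the running count at most once every `TIME_INTERVAL` seconds and hands back the timestamp to compare against on the next call. Below is a minimal, hardware-free sketch of the same logic. The `now` parameter is a hypothetical addition so the interval check can be exercised without waiting in real time, and timestamps are assumed to be timezone-aware UTC. It compares `timedelta.total_seconds()` rather than `.seconds`, because `.seconds` is only the seconds component of the difference and drops whole days.

```python
import datetime

TIME_INTERVAL = 60  # seconds between prints, as in the script


def print_count(count, previous_time, now=None):
    """Print `count` if TIME_INTERVAL seconds have passed since `previous_time`.

    Returns the timestamp to pass in on the next call: `previous_time` if
    nothing was printed, otherwise the current time. `now` is a hypothetical
    parameter for testing; timestamps are assumed timezone-aware UTC.
    """
    if now is None:
        now = datetime.datetime.now(datetime.timezone.utc)
    # total_seconds() covers the whole gap; .seconds would ignore full days
    if (now - previous_time).total_seconds() < TIME_INTERVAL:
        return previous_time
    print(count)
    return now


start = datetime.datetime(2024, 1, 1, 12, 0, 0, tzinfo=datetime.timezone.utc)
t1 = print_count(5, start, now=start + datetime.timedelta(seconds=30))  # too soon: prints nothing
t2 = print_count(5, t1, now=start + datetime.timedelta(seconds=61))     # prints 5
```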
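The counting rule inside the tracklet loop treats `args.roi_position * height` as a horizontal line and counts an object once when its centroid crosses that line moving down the frame (image y grows downward). The sketch below pulls that condition out into a standalone function so it can be checked with plain numbers. `crossed_line_downward` is a hypothetical name, and the 416-pixel height is an assumed example value, not taken from the model config.

```python
from statistics import mean


def crossed_line_downward(previous_ys, current_y, line_y):
    """Return True if an object moved down across the horizontal line at line_y.

    Mirrors the script's test: the current centroid is below the line, it is
    moving down relative to the mean of its earlier y positions, and that
    mean was above the line.
    """
    if not previous_ys:
        return False
    avg_y = mean(previous_ys)
    direction = current_y - avg_y
    return current_y > line_y and direction > 0 and avg_y < line_y


height = 416            # hypothetical preview height in pixels
line_y = 0.25 * height  # default --roi_position of 0.25 puts the line at y = 104
```

Because the script also sets `to.counted = True` after the first crossing, each tracked ID contributes at most one count even if it lingers around the line.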
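The outer `while True` loop recovers from X_LINK_ERROR by leaving the inner loop on `RuntimeError` and reopening `dai.Device(pipeline)`. A hardware-free sketch of that reconnect pattern follows, with a cap on restarts and a short delay between attempts. `run_session` is a hypothetical stand-in for "open the device and read the queues until something fails", and the retry limit and delay are illustrative values, not DepthAI recommendations.

```python
import time


def run_with_reconnect(run_session, max_restarts=5, delay_s=1.0, sleep=time.sleep):
    """Call run_session() again each time it raises RuntimeError.

    run_session is a hypothetical callable standing in for opening
    dai.Device(pipeline) and reading queues; it returns normally when done.
    Returns how many restarts were needed; re-raises after max_restarts.
    """
    restarts = 0
    while True:
        try:
            run_session()
            return restarts
        except RuntimeError as e:
            if restarts >= max_restarts:
                raise  # give up instead of looping forever
            restarts += 1
            print(f"Session failed ({e}); restart {restarts}/{max_restarts}")
            sleep(delay_s)  # brief pause before reconnecting
```

Catching only `RuntimeError` (instead of a bare `except:`) lets Ctrl+C still end the program, and the returned restart count gives a rough measure of how often the link drops.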