Matija

Hi and thank you for your response.

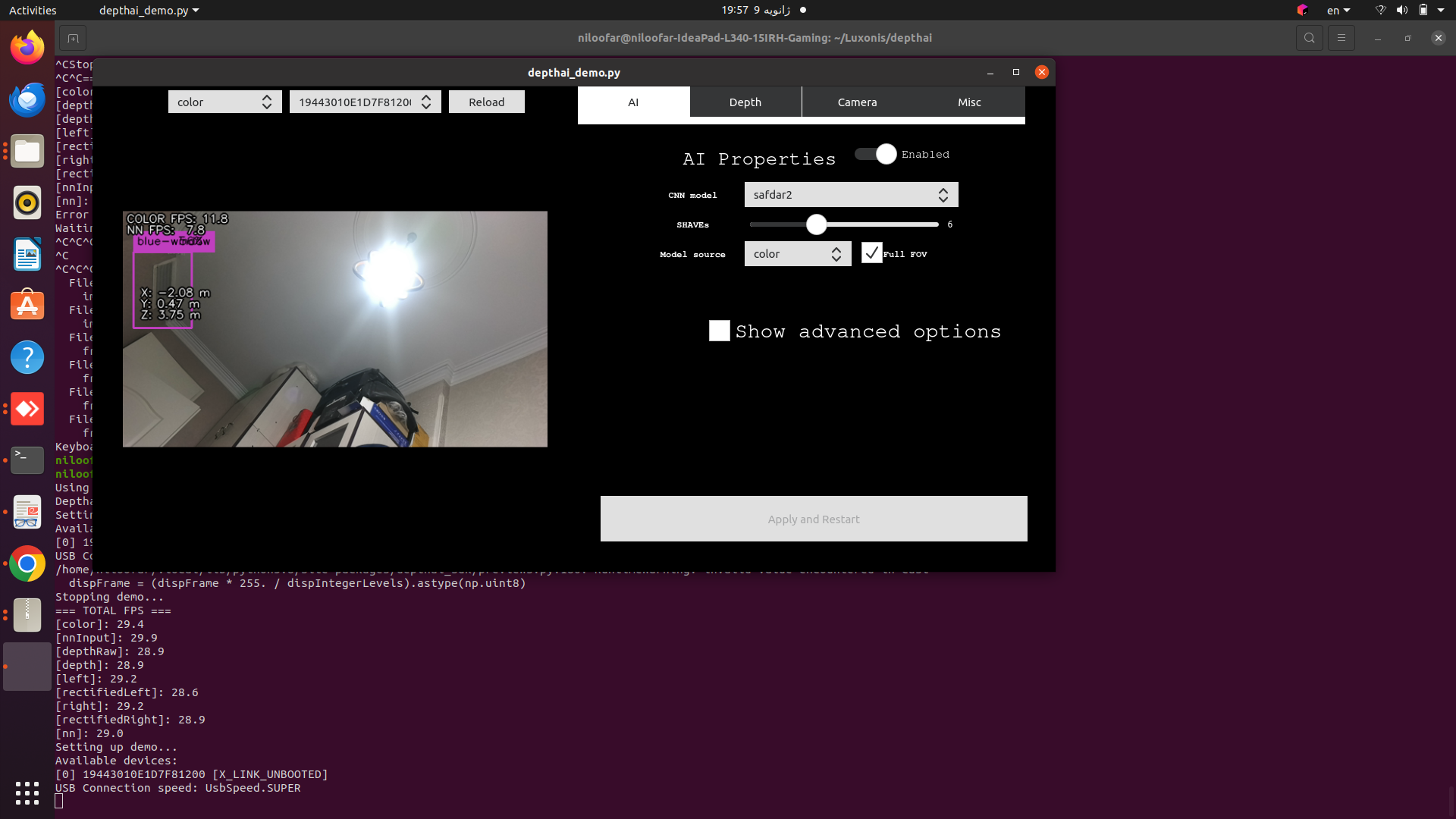

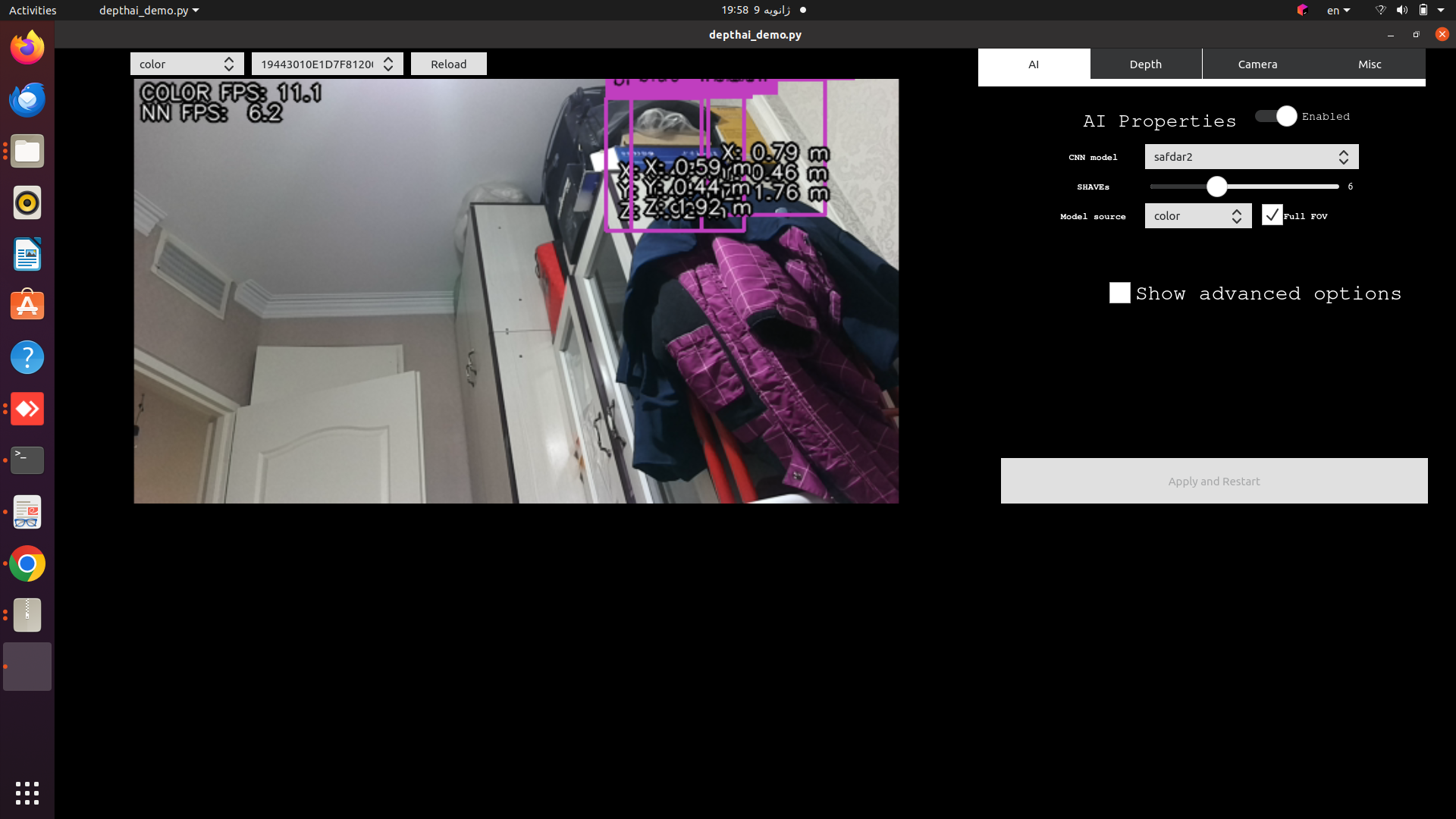

Matija, I have just added my custom model to the Oak GUI. When I run the custom model, I get wrong (false) detections.

The Oak does react, but the results are not correct: the detections are not the blue window I mentioned before.

I am expanding my dataset to 250 images, and I have a question: do you think something else has gone wrong?

How can I make the Oak detect correctly?

Or is this false detection due to my dataset?

Apart from increasing the dataset size, what other factors can cause this false detection?

The image below shows the detections from the custom model.

Another question: I noticed that your custom model folder contains a Python file named handler… What is the handler, how does it work, and do I have to write one for my custom models?

Can you explain it in more detail?

Thanks in advance.