Hi Jaka,

Thank you for your response. Could you confirm that you are referring to the following code for MCAP recording? https://github.com/luxonis/depthai/blob/main/depthai_sdk/examples/recording/mcap_record.py

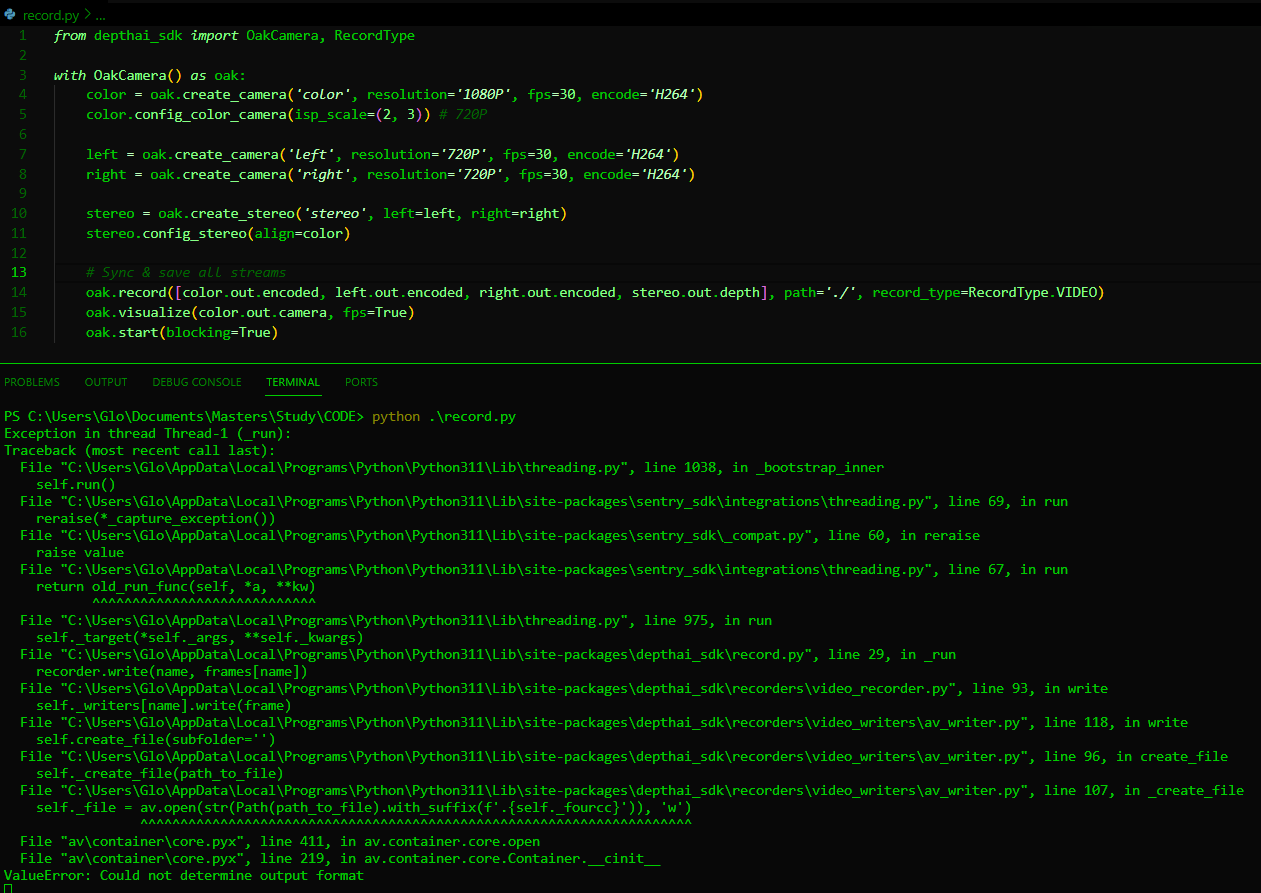

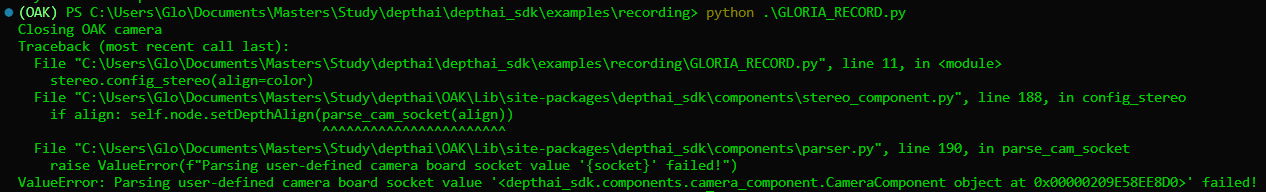

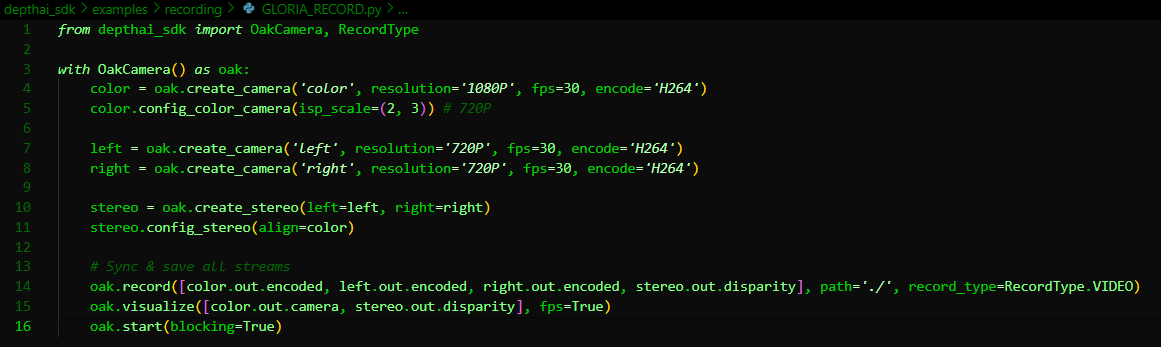

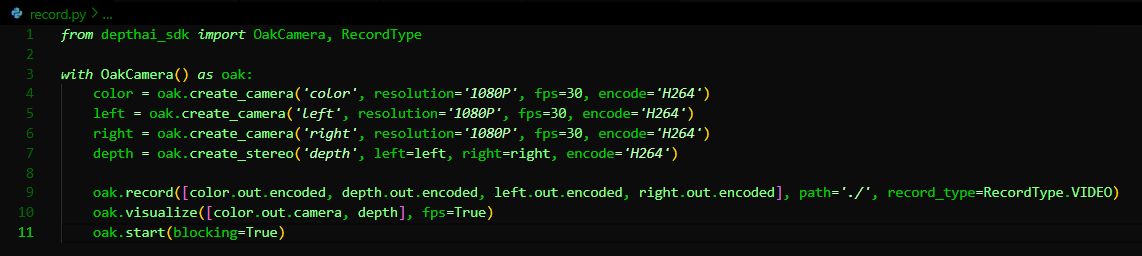

I've been using the MP4 recording method (code below), but the RGB field of view (FOV) looks like a zoomed-in version of the depth view. For instance, when I record myself, the RGB video shows only part of my head and face, while the depth video shows all of it plus the area behind me. Am I missing something? This is why I ended up resorting to the RGB-D alignment code.

Thank you so much for your help.