I trained a custom YOLOv8 classification model on 2 classes. When I run the model on my laptop, it classifies images correctly, so the problem is most likely not in the dataset. I use the depthai library as the interface to an OAK camera, and I converted the model from .pt format to the required blob format using http://blobconverter.luxonis.com/. When I deploy the converted model to the OAK camera through depthai, it always classifies images as class number 1. My guess is that some data or parameters are lost during conversion, since the same model classifies correctly in .pt format on my laptop.

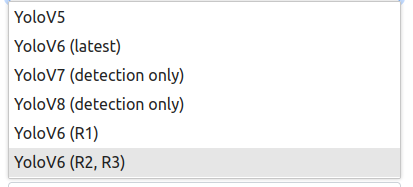
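
One way to narrow this down would be to feed the same image to the .pt model and to the blob and compare the per-class scores side by side. Below is a minimal sketch of that comparison, assuming each model returns one score per class as a plain list. The function names and the score values in the usage note are hypothetical and are not taken from my actual runs.

```python
def top_class(scores):
    """Return the index of the highest score."""
    return max(range(len(scores)), key=lambda i: scores[i])


def compare_outputs(reference, device):
    """Compare per-class scores from the laptop model and the OAK model.

    Both arguments are assumed to be equal-length lists of floats,
    one entry per class, produced for the same input image.
    """
    if len(reference) != len(device):
        raise ValueError("score lists must have the same length")
    return {
        "reference_class": top_class(reference),
        "device_class": top_class(device),
        "same_class": top_class(reference) == top_class(device),
        # Largest per-class gap between the two outputs.
        "max_abs_diff": max(abs(r - d) for r, d in zip(reference, device)),
    }
```

For example, `compare_outputs([0.9, 0.1], [0.2, 0.8])` (made-up scores) reports that the two models disagree on the class. If the device scores barely change from image to image, that would point at the input or the conversion rather than at the training.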

I tried http://tools.luxonis.com/, but for YOLOv8 it only supports detection models, not classification. I also tried the export command that YOLOv8 provides: I exported the model to OpenVINO Intermediate Representation (IR) and then converted it to blob format with the same tool, http://blobconverter.luxonis.com/. Strangely, the model then always classified images as class number 2.
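
For reference, the IR route can be sketched in Python roughly as follows. This is only an illustration under several assumptions: I used the web page rather than the `blobconverter` Python package, and the weights file name `best.pt`, the image size 224, the shave count 6, and the helper names are all hypothetical. The `ultralytics` and `blobconverter` imports are done inside the function so that the file lookup helper can be used on its own.

```python
from pathlib import Path


def find_ir_files(export_dir):
    """Return the (.xml, .bin) pair of the first IR model in export_dir."""
    xml_files = sorted(Path(export_dir).glob("*.xml"))
    if not xml_files:
        raise FileNotFoundError(f"no .xml file found in {export_dir}")
    xml_path = xml_files[0]
    bin_path = xml_path.with_suffix(".bin")
    if not bin_path.exists():
        raise FileNotFoundError(f"missing weights file {bin_path}")
    return xml_path, bin_path


def export_and_convert(weights="best.pt", imgsz=224, shaves=6):
    """Export a YOLOv8 model to OpenVINO IR, then request a blob.

    All default values are placeholders, not my real settings.
    """
    # Imported lazily: both are third-party packages.
    from ultralytics import YOLO
    import blobconverter

    # export() returns the path of the directory holding the IR files.
    export_dir = YOLO(weights).export(format="openvino", imgsz=imgsz)
    xml_path, bin_path = find_ir_files(export_dir)
    return blobconverter.from_openvino(
        xml=str(xml_path),
        bin=str(bin_path),
        data_type="FP16",
        shaves=shaves,
    )
```

Calling `export_and_convert("best.pt")` would return the path of the downloaded blob, which could then be loaded in the depthai pipeline.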

Is there another tool I could use, or am I doing something wrong in the conversion process?