Hi,

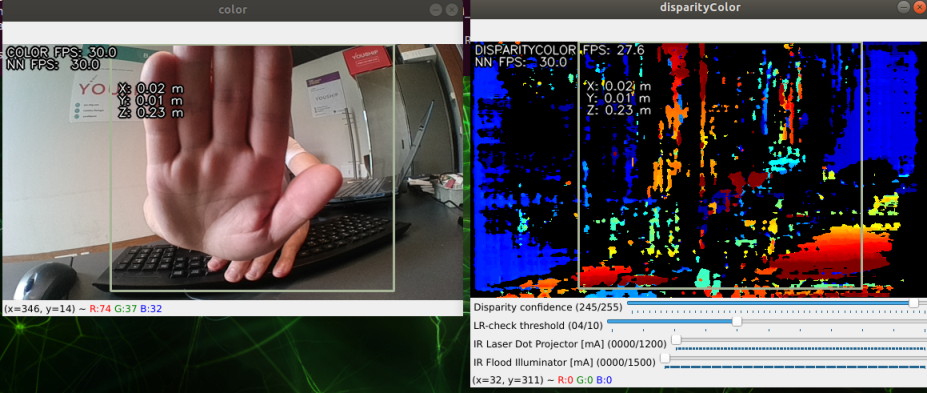

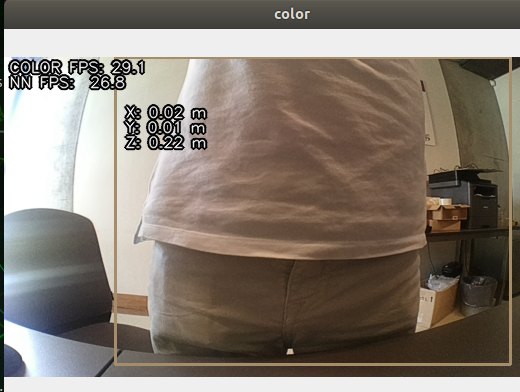

I am trying to align the depth frame with the color frame to do NN inference and get the 3D coordinates.
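For context, this is roughly what I mean by getting 3D coordinates: a minimal sketch of a plain pinhole back-projection. It assumes the depth frame is already aligned to the color frame, that depth is in millimetres, and that a depth of 0 marks an invalid pixel. The function name `pixel_to_3d` and the intrinsics values are illustrative placeholders, not DepthAI API calls or my real calibration.

```python
def pixel_to_3d(u, v, depth_mm, fx, fy, cx, cy):
    """Back-project pixel (u, v) to camera-frame (X, Y, Z) in millimetres.

    Assumes a pinhole model with focal lengths fx, fy and principal
    point (cx, cy) in pixels, taken from the color camera that the
    depth frame was aligned to.
    """
    if depth_mm <= 0:
        # Assumption: non-positive depth means "no valid measurement".
        return None
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    return (x, y, float(depth_mm))


# Hypothetical intrinsics for a 1280x720 color frame.
FX, FY, CX, CY = 800.0, 800.0, 640.0, 360.0

# A detection centred 80 px right of the principal point, 2 m away.
print(pixel_to_3d(720, 360, 2000, FX, FY, CX, CY))
```

If the depth frame is not actually aligned to the color frame, the depth sampled at (u, v) belongs to a different point in the scene, so Z itself would be wrong.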

With depthai/depthai_demo.py, the Z value looks realistic, even for the closest objects.

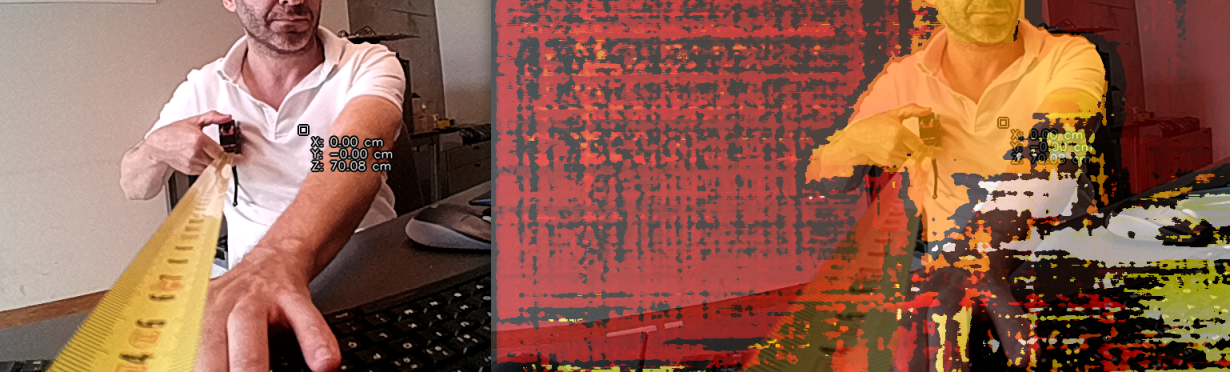

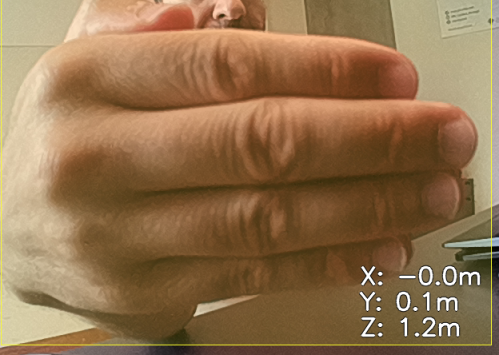

However, when I run the examples in depthai-python/examples, the Z value is very inaccurate, and for the closest objects the error is large (1 to 3 meters).

Which example is the best starting point for the OAK-D-W OV9782 camera?

Thanks!