I've been running the spatial_mobilenet_mono API example in low-light/IR environments and noticed that the depth map greatly improves both the mono footage and its detection rate (in addition to providing depth data).

How would I do this with YOLOv8 and the SDK? I've managed to run object detection with spatial data in bright rooms, but I have not been able to get the depth map to improve detection in low-light environments. It seems I have to feed the depth map into the NNComponent somehow, but I have not been able to find documentation on this.

I have included screenshots and code below:

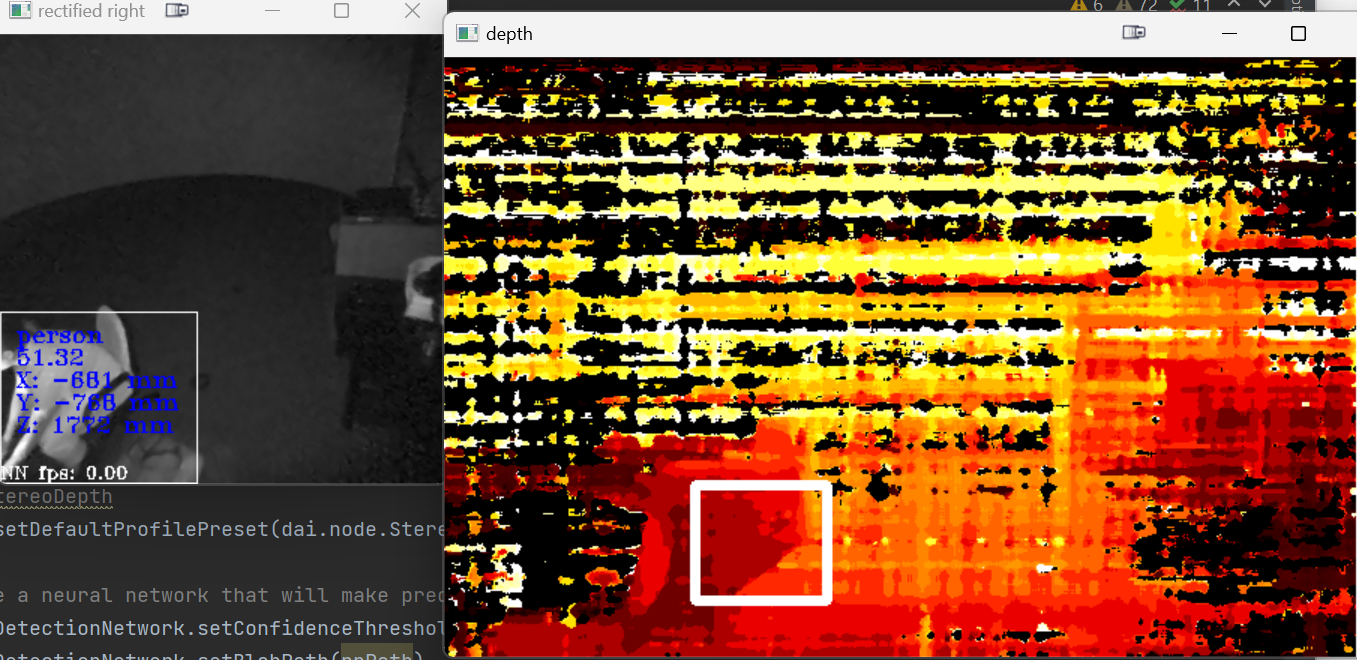

spatial_mobilenet_mono in a low-light/IR environment

You can see that the mono footage is very clear, and it detects people more than 90% of the time.

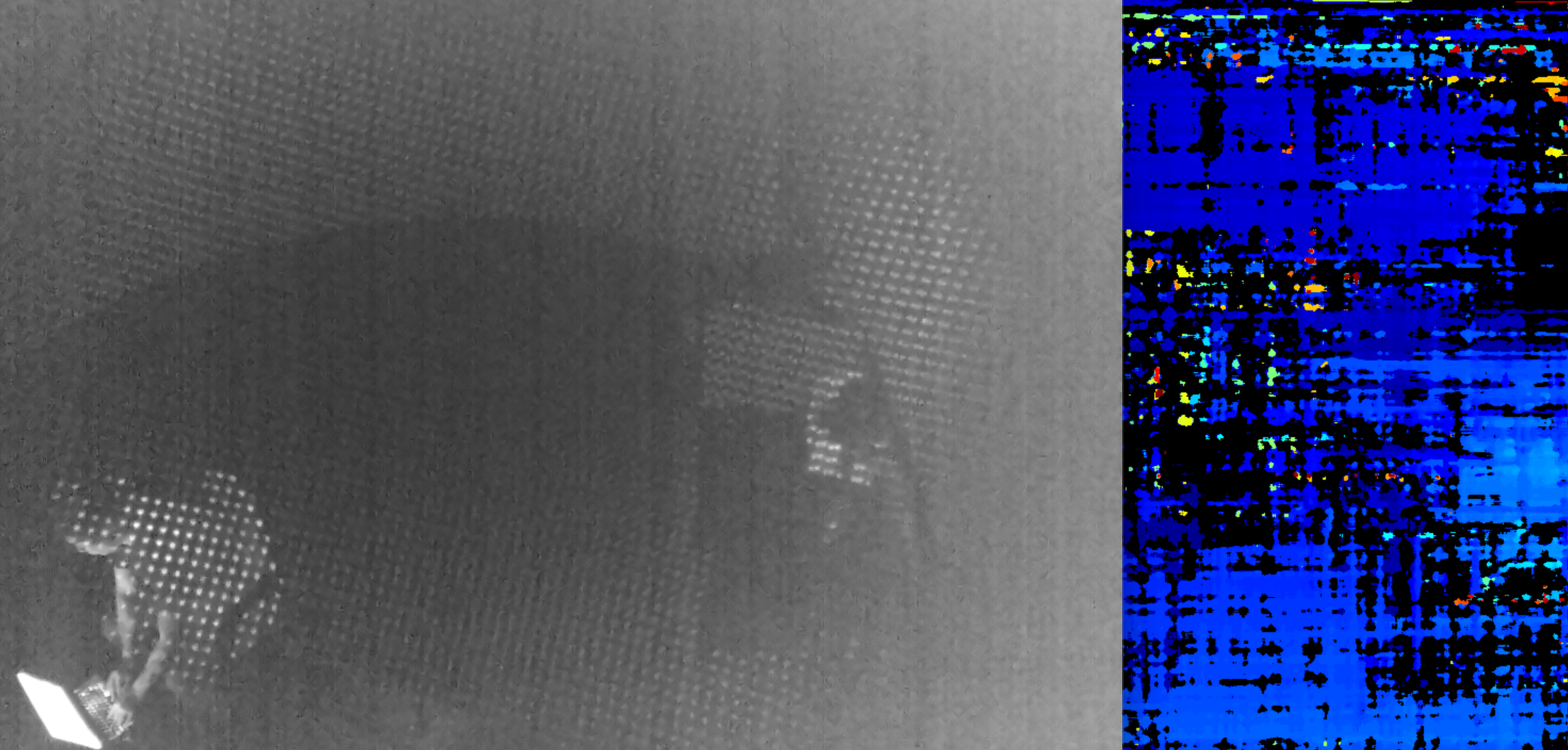

yolo_8 in low light (I want it to work like spatial_mobilenet_mono)

The mono footage is much blurrier, and you can barely see anything. It detects people less than 10% of the time.

yolo_8 code:

```python
from depthai_sdk import OakCamera
import depthai as dai

with OakCamera() as oak:
    # Mono cameras feeding the stereo depth component
    left = oak.create_camera("left")
    right = oak.create_camera("right")
    stereo = oak.create_stereo(left=left, right=right, fps=30)

    # YOLOv8 runs on the left mono stream; spatial=stereo adds depth to each detection
    nn = oak.create_nn('yolov8n_coco_640x352', left, spatial=stereo)
    nn.config_spatial(
        bb_scale_factor=0.5,    # Scale the bounding box before averaging the depth in that ROI
        lower_threshold=300,    # Discard depth points below 30 cm
        upper_threshold=10000,  # Discard depth points above 10 m
        # Average depth points before calculating X and Y spatial coordinates:
        calc_algo=dai.SpatialLocationCalculatorAlgorithm.AVERAGE
    )

    oak.visualize([nn.out.main, stereo.out.depth], fps=True)
    # oak.show_graph()
    oak.start(blocking=True)
```
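
For anyone reading along, here is my understanding of what the `config_spatial` parameters above do, written as a small host-side sketch. This is not the on-device implementation: the function name `average_depth_in_roi` is made up for illustration, the depth frame is assumed to be a 2D grid of millimetre values, and treating the thresholds as inclusive is my assumption.

```python
def average_depth_in_roi(depth_mm, bbox, bb_scale_factor=0.5,
                         lower_threshold=300, upper_threshold=10000):
    """Hypothetical helper: average the valid depth (mm) inside a shrunken bounding box.

    depth_mm is any 2D grid indexable as depth_mm[row][col];
    bbox is (x_min, y_min, x_max, y_max) in pixels.
    """
    x_min, y_min, x_max, y_max = bbox

    # Shrink the box around its centre by bb_scale_factor
    cx, cy = (x_min + x_max) / 2, (y_min + y_max) / 2
    half_w = (x_max - x_min) * bb_scale_factor / 2
    half_h = (y_max - y_min) * bb_scale_factor / 2
    rows = range(int(cy - half_h), int(cy + half_h))
    cols = range(int(cx - half_w), int(cx + half_w))

    # Keep only depth points inside [lower_threshold, upper_threshold]
    # (inclusive bounds are an assumption of this sketch)
    valid = [depth_mm[r][c] for r in rows for c in cols
             if lower_threshold <= depth_mm[r][c] <= upper_threshold]

    # Average what is left; None means there was no usable depth in the ROI
    return sum(valid) / len(valid) if valid else None


# Synthetic 100x100 depth frame at 2 m, with a band of invalid (0) pixels
frame = [[2000] * 100 for _ in range(100)]
for r in range(35, 40):
    frame[r] = [0] * 100

print(average_depth_in_roi(frame, (20, 20, 80, 80)))
```

If this reading is right, these settings only affect how the distance of an already-detected box is computed, which is why I suspect they are not what improves detection in low light.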

API code for spatial_mobilenet_mono:

https://docs.luxonis.com/projects/api/en/latest/samples/SpatialDetection/spatial_mobilenet_mono/

Appreciate your time and thanks in advance!