Hi Erik,

I'll try to upload a colorized disparity map tomorrow when I have the time to make some good images for comparison.

When I run `calibData.getFov(dai.CameraBoardSocket.CAM_A, useSpec=False)`, I get a value of around 77 degrees.

When I use this value in my pixel-to-mm function, I get a wrong result. My function is as follows:

    int LuxonisCam::Pix2mm(float Punt_mmtmp[3], float pix[2], float Dist) {
        //https://github.com/luxonis/depthai-experiments/blob/master/gen2-calc-spatials-on-host/calc.py
        int dx = pix[0] - (DepthXsize / 2);
        int dy = pix[1] - (DepthYsize / 2);

        float phiX = atan(tan(this->DepthFOV / 2.0) /* 0.82*/ * dx / (DepthXsize / 2.0));
        float phiY = atan(tan(this->DepthFOV / 2.0) * dy / (DepthXsize / 2.0));

        Punt_mmtmp[0] = -Dist * tan(phiX); //X
        Punt_mmtmp[1] = -Dist * tan(phiY); //Y
        Punt_mmtmp[2] = Dist; //Z

        return 0;
        //2_deproject_pixel_to_point(Punt_mmtmp, &intrinsics, pix, Dist);
    }
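To make it easier to test this in isolation, here is a minimal standalone sketch of the same calculation. It is not my production code: `Point3`, `degToRad` and `pixToMm` are hypothetical names, and it assumes the FOV is passed in degrees (as `getFov` reports it) and converted to radians before calling `std::tan`, which expects radians. Like calc.py, it assumes square pixels and uses the frame width as the reference for both axes. It also keeps `dx` and `dy` as floating point instead of truncating them to `int`.

```cpp
#include <cmath>

// Hypothetical helper types and names, used only for this sketch.
struct Point3 { double x, y, z; };

constexpr double kPi = 3.14159265358979323846;

// std::tan works in radians, so a FOV given in degrees must be converted first.
inline double degToRad(double deg) { return deg * kPi / 180.0; }

// px, py:   pixel coordinates in the depth frame
// distMm:   depth (Z) at that pixel, in mm
// hfovDeg:  horizontal FOV in degrees
// width, height: depth frame size in pixels
inline Point3 pixToMm(double px, double py, double distMm,
                      double hfovDeg, int width, int height) {
    const double halfW = width / 2.0;
    const double tanHalfFov = std::tan(degToRad(hfovDeg) / 2.0);
    const double dx = px - halfW;
    const double dy = py - height / 2.0;
    // tan(atan(v)) == v, so the atan/tan pair in the original collapses to
    // X = -Z * tan(HFOV/2) * dx / (W/2), and likewise for Y.
    return { -distMm * tanHalfFov * dx / halfW,
             -distMm * tanHalfFov * dy / halfW,
             distMm };
}
```

For example, with a 90 degree HFOV on a 1280x800 frame, a pixel at the right edge of the centre row at 1000 mm depth maps to X = -1000 mm with this sign convention, which is a quick way to sanity-check the units.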

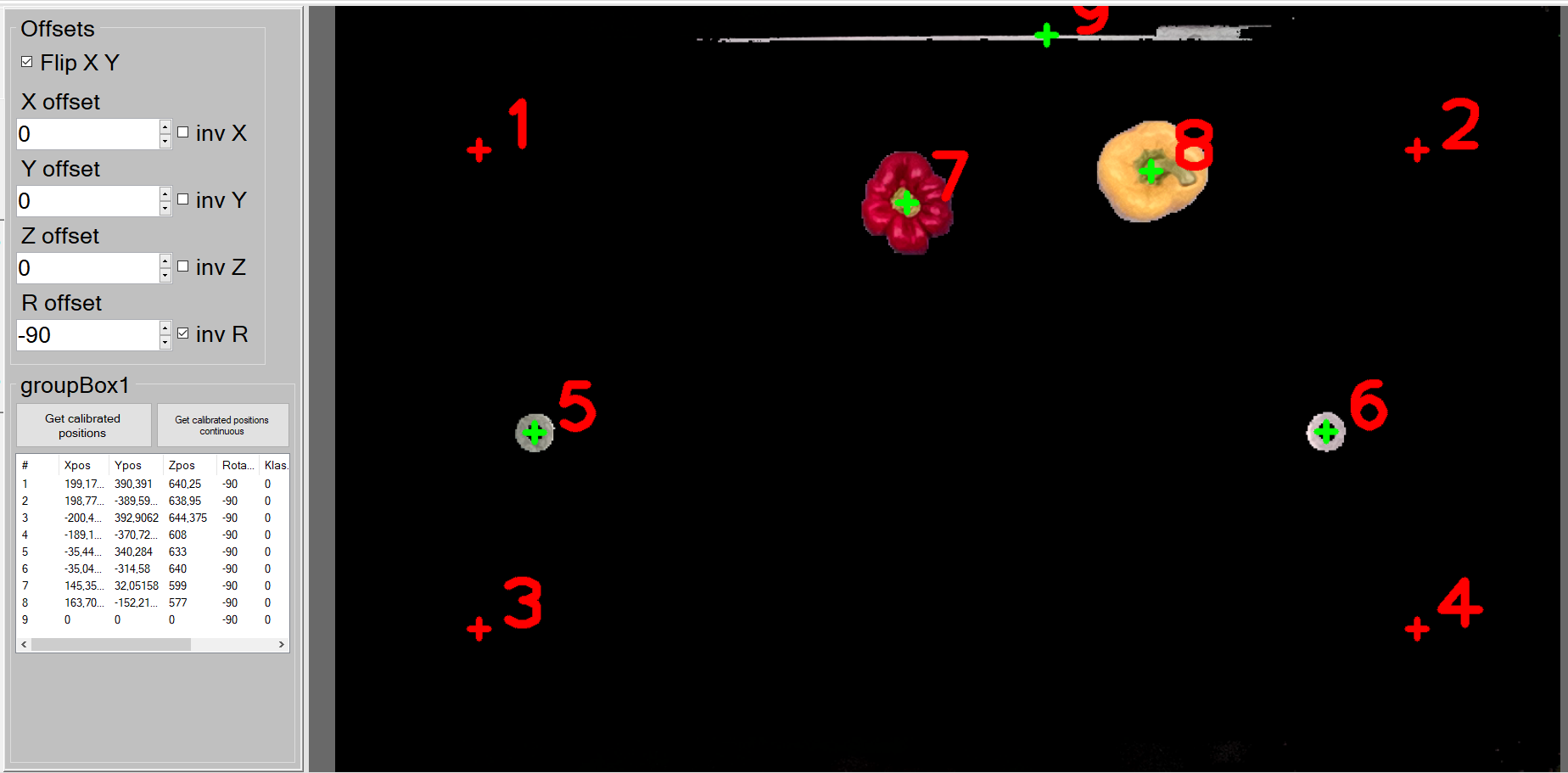

The image is taken from the following scene:

This is the result (background filtered out). As you can see, the distance between 5 and 6 is measured as 654 mm instead of 685 mm. The real distance from the belt to the front of the camera is 670 mm, but I get 640 mm from the camera.

Any ideas?