Hello,

I am reviving this thread because I noticed differences in performance of the stereo calibration when using the StereoDepth node as compared to doing it "manually" using code inspired by this https://github.com/luxonis/depthai-python/blob/main/examples/ColorCamera/rgb_undistort.py

Here is a stripped down version of my code:

def get_maps(ispSize):

l_CS = ... # Left camera socket

r_CS = ... # Right camera socket

calib = device.readCalibration()

left_K = np.array(calib.getCameraIntrinsics(l_CS, ispSize[0], ispSize[1]))

left_D = np.array(calib.getDistortionCoefficients(l_CS))

right_K = np.array(calib.getCameraIntrinsics(r_CS, ispSize[0], ispSize[1]))

right_D = np.array(calib.getDistortionCoefficients(r_CS))

T_l_r = np.array(calib.getCameraExtrinsics(r_CS, l_CS))

R = T_l_r[:3, :3]

T = T_l_r[:3, 3]

R1, R2, P1, P2, _, _, _ = cv2.stereoRectify(

left_K,

left_D,

right_K,

right_D,

ispSize,

R,

T

)

maps = {}

maps["left"] = cv2.initUndistortRectifyMap(

left_K, left_D, R1, P1, ispSize, cv2.CV_32FC1

)

maps["right"] = cv2.initUndistortRectifyMap(

right_K, right_D, R2, P2, ispSize, cv2.CV_32FC1

)

return maps, left_K, left_D, right_K, right_D, R1, R2, P1, P2

def get_mesh(cam_name, ispSize):

maps, left_K, left_D, right_K, right_D, R1, R2, P1, P2 = get_maps(ispSize)

maps["left"] = cv2.initUndistortRectifyMap(

left_K, left_D, R1, P1, ispSize, cv2.CV_32FC1

)

maps["right"] = cv2.initUndistortRectifyMap(

right_K, right_D, R2, P2, ispSize, cv2.CV_32FC1

)

mapX = maps[cam_name][0]

mapY = maps[cam_name][1]

meshCellSize = 16

mesh0 = []

# Creates subsampled mesh which will be loaded on to device to undistort the image

for y in range(mapX.shape[0] + 1): # iterating over height of the image

if y % meshCellSize == 0:

rowLeft = []

for x in range(mapX.shape[1]): # iterating over width of the image

if x % meshCellSize == 0:

if y == mapX.shape[0] and x == mapX.shape[1]:

rowLeft.append(mapX[y - 1, x - 1])

rowLeft.append(mapY[y - 1, x - 1])

elif y == mapX.shape[0]:

rowLeft.append(mapX[y - 1, x])

rowLeft.append(mapY[y - 1, x])

elif x == mapX.shape[1]:

rowLeft.append(mapX[y, x - 1])

rowLeft.append(mapY[y, x - 1])

else:

rowLeft.append(mapX[y, x])

rowLeft.append(mapY[y, x])

if (mapX.shape[1] % meshCellSize) % 2 != 0:

rowLeft.append(0)

rowLeft.append(0)

mesh0.append(rowLeft)

mesh0 = np.array(mesh0)

meshWidth = mesh0.shape[1] // 2

meshHeight = mesh0.shape[0]

mesh0.resize(meshWidth * meshHeight, 2)

mesh = list(map(tuple, mesh0))

return mesh, meshWidth, meshHeight

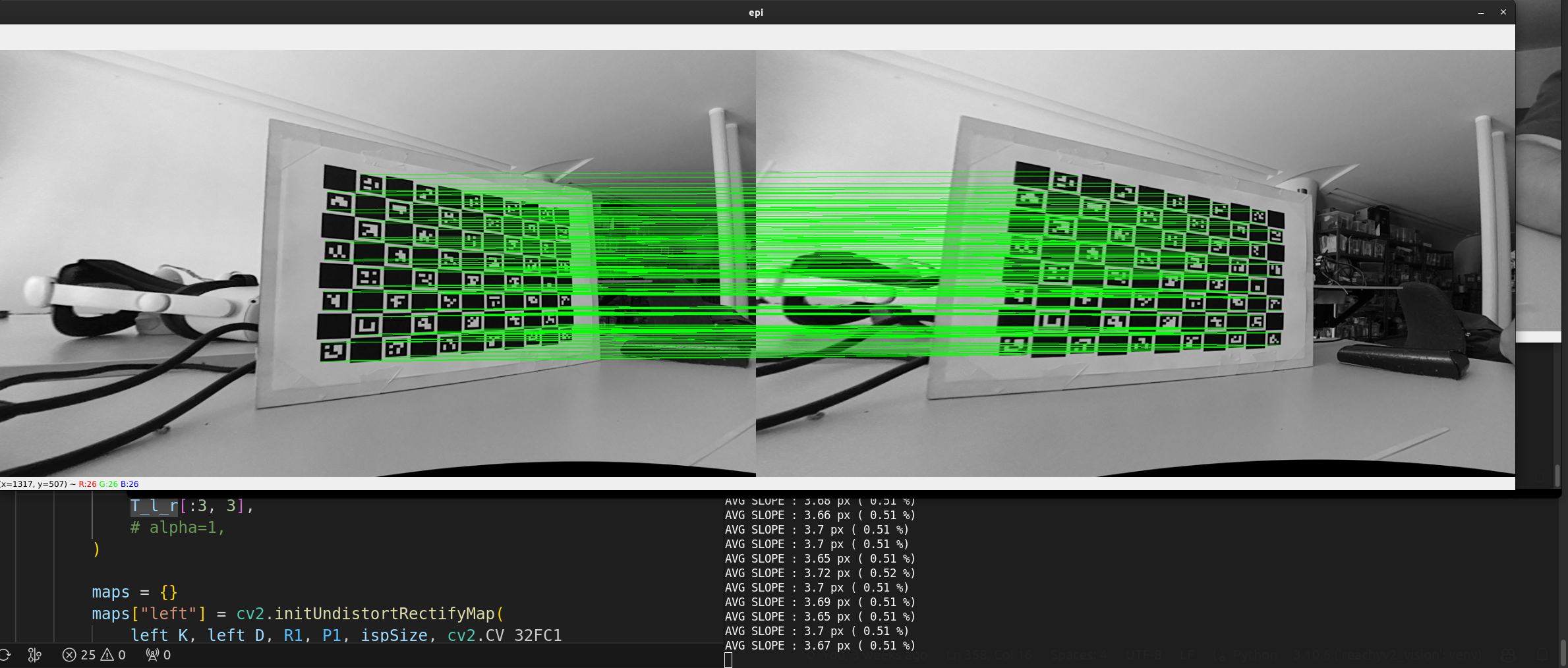

Using ImageManip with setWarpMesh() with the returned mesh works ok, I have an average epipolar error of about 0.5% (~3.5px on a 720p image, see image below)

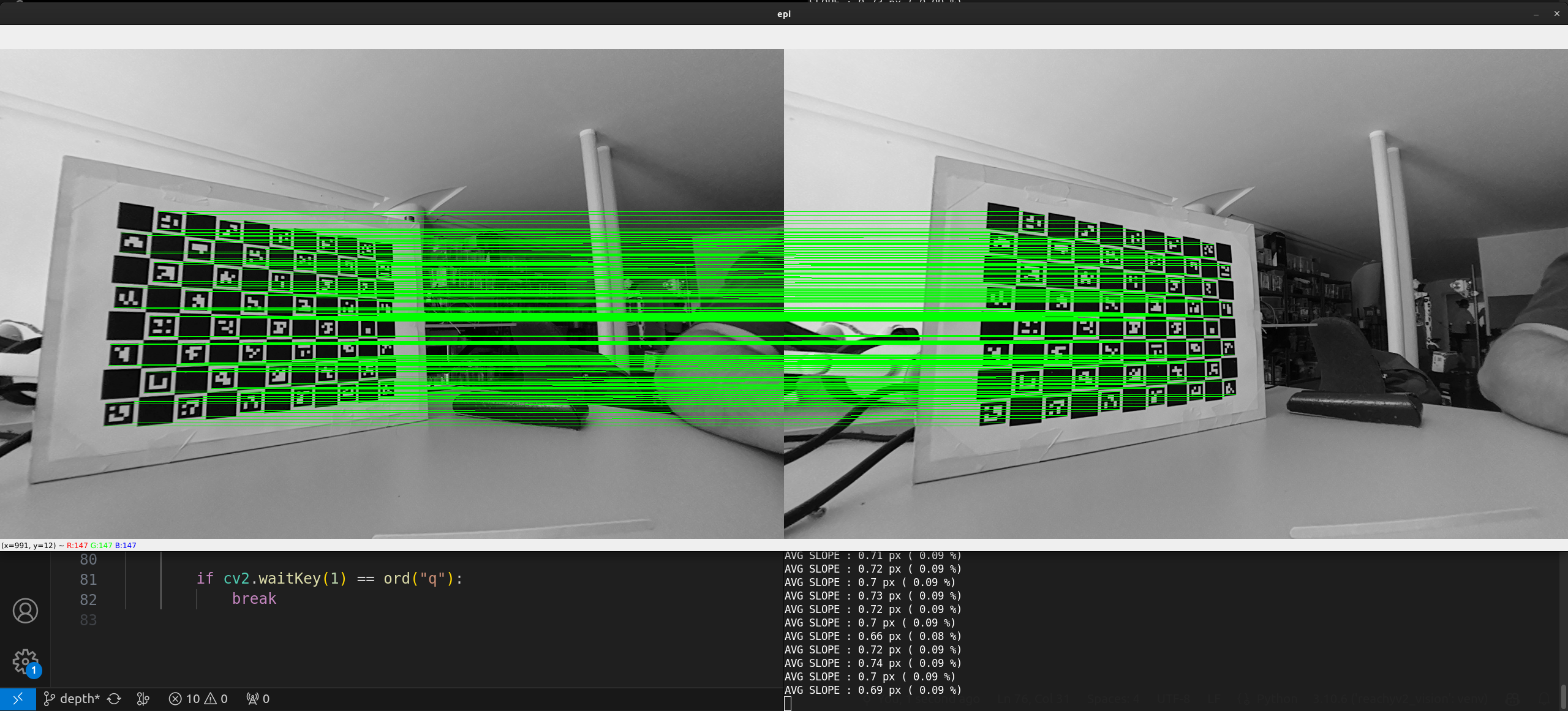

Using the same calibration results (intrinsics and extrinsics) and the StereoDepth node, computing the epipolar error on the rectified left and right images that the node outputs, I get much better results (about 0.09%, 0.69px on a 720p image, see image below)

Here is how I compute the epilines score for reference

def drawEpiLines(left, right, aruco_dict):

concatIm = np.hstack((left, right))

lcorners, lids, _ = aruco.detectMarkers(image=left, dictionary=aruco_dict)

rcorners, rids, _ = aruco.detectMarkers(image=right, dictionary=aruco_dict)

if len(lcorners) == 0 or len(rcorners) == 0:

return concatIm

lids = lids.reshape(-1)

rids = rids.reshape(-1)

lcorners_dict = {}

for i in range(len(lids)):

lid = lids[i]

lcorners_dict[lid] = lcorners[i].reshape((-1, 2))

rcorners_dict = {}

for i in range(len(rids)):

rid = rids[i]

rcorners_dict[rid] = rcorners[i].reshape((-1, 2))

avg_slope = []

for lid in lids:

if lid not in rids:

continue

lcorners = lcorners_dict[lid]

rcorners = rcorners_dict[lid]

for i in range(len(lcorners)):

x1 = lcorners[i][0]

y1 = lcorners[i][1]

x2 = rcorners[i][0] + left.shape[1]

y2 = rcorners[i][1]

avg_slope.append(abs(y2 - y1))

cv2.line(

concatIm,

(int(x1), int(y1)),

(int(x2), int(y2)),

(0, 255, 0),

1,

)

avg_slope = np.mean(avg_slope)

avg_slope_percent = avg_slope / left.shape[0] * 100

print(

"AVG SLOPE :",

round(avg_slope, 2),

"px (",

round(abs(avg_slope_percent), 2),

"%)",

)

return concatIm

I have two questions:

- Do you have an idea of what could explain this difference ?

- Do you have any plans to add the support of rectified color images as output of the StereoDepth node ? (We use IMX296 color cameras right now)

Thanks !