Hi.

I have the OAK-D PRO W POE camera running on my desktop. Some of the examples from the depthai-python repo work fine, but the depth-alignment ones do not. For instance, the "rgb_depth_aligned.py" file gives me the following error:

victor@victor-Legion-5-Pro-16ACH6H:~/development/depthai-python/examples/StereoDepth$ python rgb_depth_aligned.py

[184430109159A30F00] [10.46.28.199] [4.850] [ColorCamera(0)] [warning] Unsupported resolution set for detected camera OV9782, needs 800_P or 720_P. Defaulting to 800_P

[184430109159A30F00] [10.46.28.199] [5.124] [StereoDepth(3)] [error] Disparity/depth width must be multiple of 16, but RGB camera width is 854. Set output size explicitly using 'setOutputSize(width, height)'.

[2023-05-08 15:50:37.646] [warning] Monitor thread (device: 184430109159A30F00 [10.46.28.199]) - ping was missed, closing the device connection

I am using the latest from the repo: https://github.com/luxonis/depthai-python.
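For reference, here is a quick check of where the 854 in the error might come from. It is only a sketch under two assumptions of mine that the log does not confirm: that the 800_P mode of the OV9782 is 1280x800, and that the ISP scale rounds the scaled size up. The helper names are made up for illustration and are not part of depthai.

```python
# Assumptions (mine, not confirmed by the log): the OV9782 800_P mode is
# 1280x800, and ISP scaling rounds the scaled size up.
SENSOR_WIDTH, SENSOR_HEIGHT = 1280, 800
ISP_SCALE_NUM, ISP_SCALE_DEN = 2, 3   # from camRgb.setIspScale(2, 3)
REQUIRED_MULTIPLE = 16                # from the StereoDepth error message


def scaled_size(width, height, num, den):
    """Return the scaled (width, height), rounding up with integer math."""
    return (width * num + den - 1) // den, (height * num + den - 1) // den


def nearest_multiples(value, multiple):
    """Return the closest multiples of `multiple` at or below and at or above `value`."""
    lower = (value // multiple) * multiple
    upper = lower if lower == value else lower + multiple
    return lower, upper


isp_width, isp_height = scaled_size(
    SENSOR_WIDTH, SENSOR_HEIGHT, ISP_SCALE_NUM, ISP_SCALE_DEN
)
remainder = isp_width % REQUIRED_MULTIPLE
lower_width, upper_width = nearest_multiples(isp_width, REQUIRED_MULTIPLE)

print(f"Scaled RGB size: {isp_width}x{isp_height}")
print(f"{isp_width} % {REQUIRED_MULTIPLE} = {remainder}")
print(f"Nearest widths that are multiples of {REQUIRED_MULTIPLE}: {lower_width}, {upper_width}")
```

Under those assumptions the scaled width comes out as 854, which matches the error, and it leaves a remainder of 6 when divided by 16. The nearest widths that would pass the check are 848 and 864. I assume I need either a different ISP scale or an explicit `setOutputSize(width, height)` on the StereoDepth node, as the error suggests, but I am not sure which is the intended fix for this camera.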

The specific source code I am running is below:

```

#!/usr/bin/env python3

import cv2

import numpy as np

import depthai as dai

# Weights to use when blending depth/rgb image (should equal 1.0)

rgbWeight = 0.4

depthWeight = 0.6

def updateBlendWeights(percent_rgb):

"""

Update the rgb and depth weights used to blend depth/rgb image

@param[in] percent_rgb The rgb weight expressed as a percentage (0..100)

"""

global depthWeight

global rgbWeight

rgbWeight = float(percent_rgb)/100.0

depthWeight = 1.0 - rgbWeight

# Optional. If set (True), the ColorCamera is downscaled from 1080p to 720p.

# Otherwise (False), the aligned depth is automatically upscaled to 1080p

downscaleColor = True

fps = 30

# The disparity is computed at this resolution, then upscaled to RGB resolution

monoResolution = dai.MonoCameraProperties.SensorResolution.THE_720_P

# Create pipeline

pipeline = dai.Pipeline()

device = dai.Device()

queueNames = []

# Define sources and outputs

camRgb = pipeline.create(dai.node.ColorCamera)

left = pipeline.create(dai.node.MonoCamera)

right = pipeline.create(dai.node.MonoCamera)

stereo = pipeline.create(dai.node.StereoDepth)

rgbOut = pipeline.create(dai.node.XLinkOut)

disparityOut = pipeline.create(dai.node.XLinkOut)

rgbOut.setStreamName("rgb")

queueNames.append("rgb")

disparityOut.setStreamName("disp")

queueNames.append("disp")

#Properties

camRgb.setBoardSocket(dai.CameraBoardSocket.RGB)

camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_1080_P)

camRgb.setFps(fps)

if downscaleColor: camRgb.setIspScale(2, 3)

# For now, RGB needs fixed focus to properly align with depth.

# This value was used during calibration

try:

calibData = device.readCalibration2()

lensPosition = calibData.getLensPosition(dai.CameraBoardSocket.RGB)

if lensPosition:

camRgb.initialControl.setManualFocus(lensPosition)

except:

raise

left.setResolution(monoResolution)

left.setBoardSocket(dai.CameraBoardSocket.LEFT)

left.setFps(fps)

right.setResolution(monoResolution)

right.setBoardSocket(dai.CameraBoardSocket.RIGHT)

right.setFps(fps)

stereo.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.HIGH_DENSITY)

# LR-check is required for depth alignment

stereo.setLeftRightCheck(True)

stereo.setDepthAlign(dai.CameraBoardSocket.RGB)

# Linking

camRgb.isp.link(rgbOut.input)

left.out.link(stereo.left)

right.out.link(stereo.right)

stereo.disparity.link(disparityOut.input)

# Connect to device and start pipeline

with device:

device.startPipeline(pipeline)

frameRgb = None

frameDisp = None

# Configure windows; trackbar adjusts blending ratio of rgb/depth

rgbWindowName = "rgb"

depthWindowName = "depth"

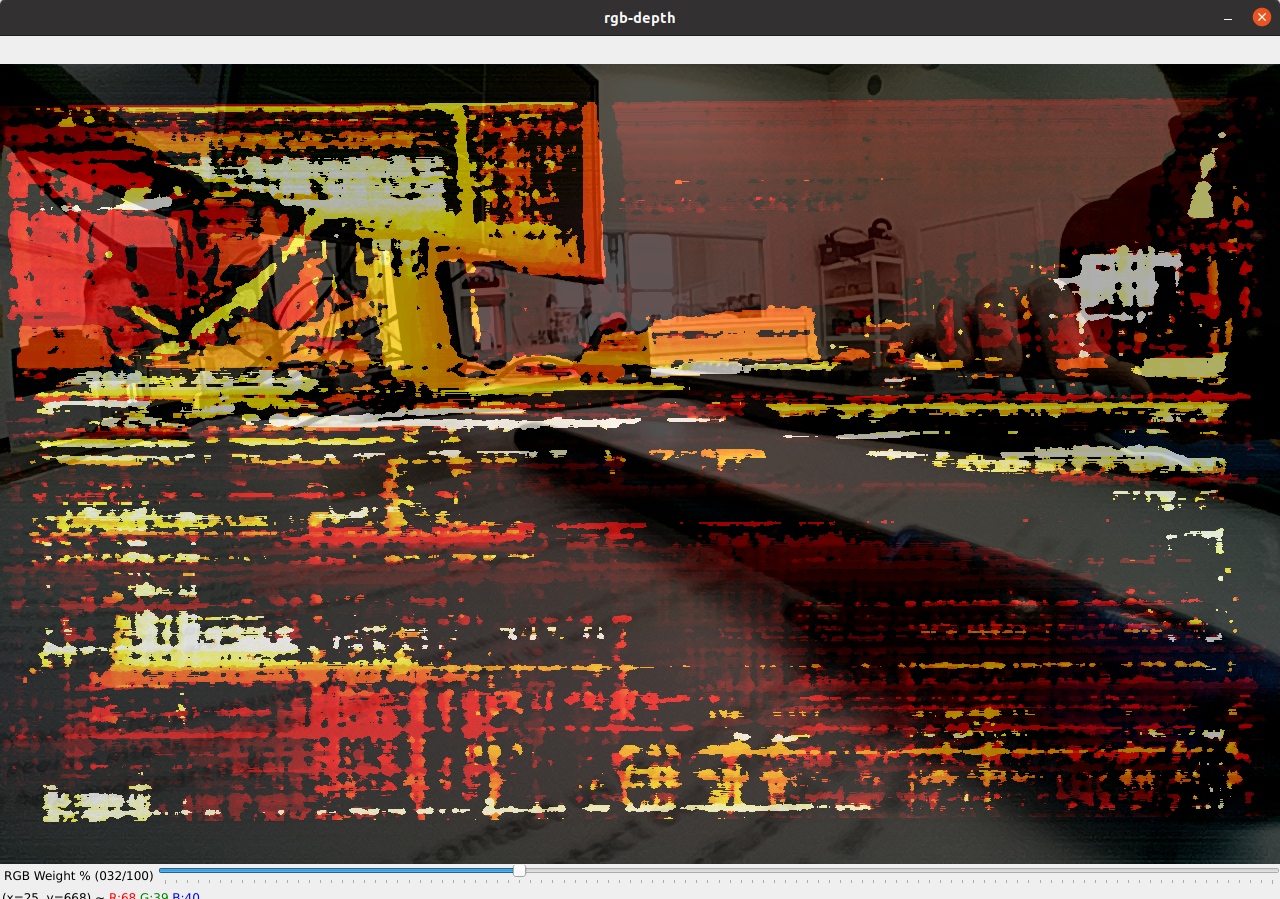

blendedWindowName = "rgb-depth"

cv2.namedWindow(rgbWindowName)

cv2.namedWindow(depthWindowName)

cv2.namedWindow(blendedWindowName)

cv2.createTrackbar('RGB Weight %', blendedWindowName, int(rgbWeight*100), 100, updateBlendWeights)

while True:

latestPacket = {}

latestPacket["rgb"] = None

latestPacket["disp"] = None

queueEvents = device.getQueueEvents(("rgb", "disp"))

for queueName in queueEvents:

packets = device.getOutputQueue(queueName).tryGetAll()

if len(packets) > 0:

latestPacket[queueName] = packets[-1]

if latestPacket["rgb"] is not None:

frameRgb = latestPacket["rgb"].getCvFrame()

cv2.imshow(rgbWindowName, frameRgb)

if latestPacket["disp"] is not None:

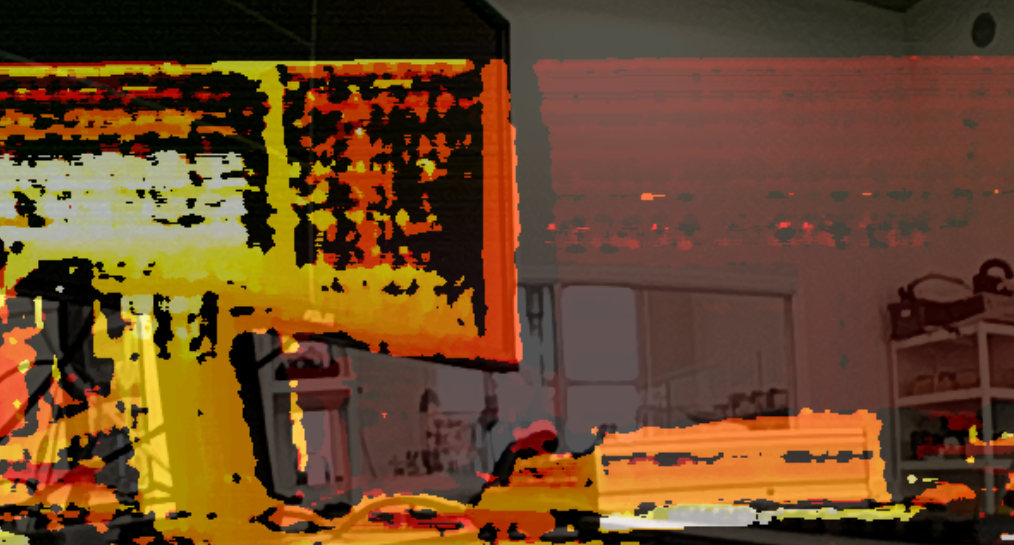

frameDisp = latestPacket["disp"].getFrame()

maxDisparity = stereo.initialConfig.getMaxDisparity()

# Optional, extend range 0..95 -> 0..255, for a better visualisation

if 1: frameDisp = (frameDisp * 255. / maxDisparity).astype(np.uint8)

# Optional, apply false colorization

if 1: frameDisp = cv2.applyColorMap(frameDisp, cv2.COLORMAP_HOT)

frameDisp = np.ascontiguousarray(frameDisp)

cv2.imshow(depthWindowName, frameDisp)

# Blend when both received

if frameRgb is not None and frameDisp is not None:

# Need to have both frames in BGR format before blending

if len(frameDisp.shape) < 3:

frameDisp = cv2.cvtColor(frameDisp, cv2.COLOR_GRAY2BGR)

blended = cv2.addWeighted(frameRgb, rgbWeight, frameDisp, depthWeight, 0)

cv2.imshow(blendedWindowName, blended)

frameRgb = None

frameDisp = None

if cv2.waitKey(1) == ord('q'):

breakThank you in advance.