I've been training my own yolov5 model, which gives quite nice results when I run inference with the yolov5 repo, but the performance is significantly different (and worse) when I run it on an OAK-D. I've been trying to figure out what the culprit is, but I'm not sure how to debug this properly.

My current focus is to get the same result from inference in PyTorch as on the camera. For now I'm using the pretrained yolov5s model from the yolov5 repo, to rule out my own model as the problem. I've resized one of their example images to 448x448, my target size (see below).

In the yolov5 repo, I can get an output image with the following command:

```bash
python detect.py --weights yolov5s.pt --source bus2.jpg --img 448
```

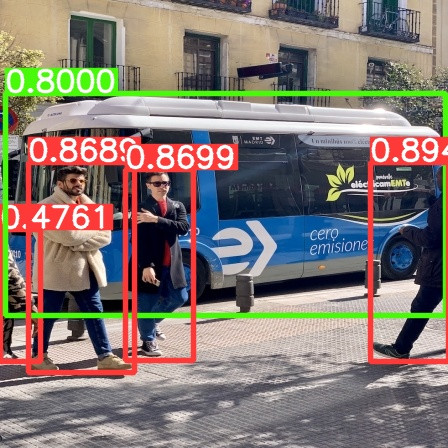

This gives me the result shown in the image. I've removed the label names so that the confidence values are visible.
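Reading confidences off the rendered image only gives two decimals. As an alternative, yolov5's detect.py accepts `--save-txt --save-conf`, which writes one line per detection in the form `class x_center y_center width height conf`, with coordinates normalized to [0, 1], to a text file in the run's `labels/` folder (by default something like `runs/detect/exp/labels/`). The sketch below parses such a file into corner coordinates so the values can be compared with the `xmin`/`ymin`/`xmax`/`ymax` fields returned by the OAK-D. The function name `parse_yolov5_labels` is hypothetical, and the example lines are made up, not real output.

```python
def parse_yolov5_labels(text):
    """Parse yolov5 label lines ('class xc yc w h conf', normalized) into dicts.

    Lines without a confidence column (saved without --save-conf) are skipped.
    """
    detections = []
    for line in text.strip().splitlines():
        parts = line.split()
        if len(parts) != 6:
            continue  # no confidence value on this line
        cls = int(parts[0])
        xc, yc, w, h, conf = map(float, parts[1:])
        # Convert center/size to corner coordinates, still normalized to [0, 1]
        detections.append({
            "class": cls,
            "xmin": xc - w / 2,
            "ymin": yc - h / 2,
            "xmax": xc + w / 2,
            "ymax": yc + h / 2,
            "confidence": conf,
        })
    return detections


# Hypothetical label-file contents, for illustration only
example = "0 0.5 0.5 0.2 0.4 0.87\n5 0.25 0.75 0.1 0.1 0.5"
for det in parse_yolov5_labels(example):
    print(det)
```

In practice the text would come from reading the label file written by a run such as `python detect.py --weights yolov5s.pt --source bus2.jpg --img 448 --save-txt --save-conf`.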

Then I use the code below to run the same image through the OAK-D, using the .blob generated by the Luxonis tool (specify the locations of the yolov5s .blob, .json, and image yourself).

```python
from pathlib import Path
import json

import cv2
import depthai as dai
import matplotlib.pyplot as plt
import numpy as np

# Load the model config (.json) and blob generated by the Luxonis tool
model_name = "yolov5s"
model_dir = Path("yolov5s")
model_config_path = model_dir / (model_name + ".json")
with open(model_config_path) as fp:
    config = json.load(fp)
model_blob_path = model_dir / (model_name + ".blob")

model_config = config["nn_config"]
labels = config["mappings"]["labels"]
metadata = model_config["NN_specific_metadata"]
coordinate_size = metadata["coordinates"]
anchors = metadata["anchors"]
anchor_masks = metadata["anchor_masks"]
iou_threshold = metadata["iou_threshold"]
confidence_threshold = metadata["confidence_threshold"]

# Build the pipeline: host frame -> YOLO detection network -> host
pipeline = dai.Pipeline()

detection = pipeline.create(dai.node.YoloDetectionNetwork)
detection.setBlobPath(str(model_blob_path))
detection.setAnchors(anchors)
detection.setAnchorMasks(anchor_masks)
detection.setConfidenceThreshold(confidence_threshold)
detection.setNumClasses(len(labels))
detection.setCoordinateSize(coordinate_size)
detection.setIouThreshold(iou_threshold)
detection.setNumInferenceThreads(2)
detection.input.setBlocking(False)
detection.input.setQueueSize(1)

xin = pipeline.create(dai.node.XLinkIn)
xin.setStreamName("frameIn")
xin.out.link(detection.input)

detection_out = pipeline.create(dai.node.XLinkOut)
detection_out.setStreamName("detectionOut")
detection.out.link(detection_out.input)

device = dai.Device(pipeline)
qIn = device.getInputQueue("frameIn")
qOut = device.getOutputQueue("detectionOut", maxSize=10, blocking=False)

# Create the ImgFrame message: planar BGR (HWC -> CHW), flattened to 1-D
imsize = 448
image = cv2.imread("bus2.jpg")
image = cv2.resize(image, (imsize, imsize))  # no change if already 448x448
img = dai.ImgFrame()
img.setType(dai.ImgFrame.Type.BGR888p)
img.setData(image.transpose(2, 0, 1).flatten())
img.setWidth(imsize)
img.setHeight(imsize)
qIn.send(img)

frame_out = qOut.get()

# Draw the detections (normalized coordinates) on the image, converted to RGB
fig, ax = plt.subplots()
ax.imshow(image[:, :, [2, 1, 0]])
for det in frame_out.detections:  # 'det' avoids shadowing the 'detection' node
    xpos = np.array([det.xmin, det.xmax, det.xmax, det.xmin, det.xmin]) * imsize
    ypos = np.array([det.ymax, det.ymax, det.ymin, det.ymin, det.ymax]) * imsize
    print(det.confidence)
    ax.plot(xpos, ypos)
plt.show()
```

This gives me roughly the same bounding boxes as the PyTorch implementation, but not exactly the same. The detection confidences are also slightly off (compare the values below with those in the image):

```
0.887598991394043
0.8628273010253906
0.8618507385253906
0.7907819747924805
0.4408433437347412
```
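To put a number on "roughly the same", one option is to pair each PyTorch detection with the same-class OAK-D detection that overlaps it most (by IoU) and look at the confidence difference per pair. Below is a minimal greedy-matching sketch, assuming both sides are given as normalized `(xmin, ymin, xmax, ymax, confidence, class_id)` tuples. The function names and the 0.5 IoU cut-off are illustrative choices, not part of yolov5 or DepthAI, and the test data is hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax, ...) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_detections(reference, device, min_iou=0.5):
    """Greedily pair reference (PyTorch) and device (OAK-D) detections.

    Each detection is (xmin, ymin, xmax, ymax, confidence, class_id).
    Returns a list of (ref_index, dev_index, iou, device_conf - reference_conf)
    plus the indices of unmatched reference and device detections.
    """
    candidates = []
    for i, r in enumerate(reference):
        for j, d in enumerate(device):
            if r[5] == d[5]:  # only compare detections of the same class
                overlap = iou(r, d)
                if overlap >= min_iou:
                    candidates.append((overlap, i, j))
    candidates.sort(reverse=True)  # best-overlapping pairs first
    used_ref, used_dev, pairs = set(), set(), []
    for overlap, i, j in candidates:
        if i in used_ref or j in used_dev:
            continue
        used_ref.add(i)
        used_dev.add(j)
        pairs.append((i, j, overlap, device[j][4] - reference[i][4]))
    unmatched_ref = [i for i in range(len(reference)) if i not in used_ref]
    unmatched_dev = [j for j in range(len(device)) if j not in used_dev]
    return pairs, unmatched_ref, unmatched_dev
```

On the OAK-D side the tuples can be built from `frame_out.detections` as `(det.xmin, det.ymin, det.xmax, det.ymax, det.confidence, det.label)`. Unmatched detections on either side are often more telling than small confidence shifts.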

Unfortunately, the results are significantly off when I follow the same steps with my own network on one of my own images. The fact that there is still a difference even with the stock yolov5s model makes me believe that something in the conversion from .pt to .blob is degrading the results.
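Before attributing the gap to the conversion itself, it may help to know how much difference precision alone can cause: blobs for the OAK-D's Myriad X VPU are typically compiled to run in FP16, while detect.py runs in FP32 unless `--half` is passed. Below is a minimal standard-library sketch that rounds values to half precision (via `struct`'s `'e'` format) and a generic helper for comparing two flattened output tensors, for example the PyTorch output and the raw output of the exported model on the identical preprocessed input. The helper names are hypothetical, and obtaining the raw outputs from each runtime is not shown.

```python
import math
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def compare_outputs(reference, candidate):
    """Compare two equally long flat sequences of floats.

    Returns (maximum absolute difference, cosine similarity).
    """
    if len(reference) != len(candidate):
        raise ValueError("outputs must have the same number of elements")
    max_abs = max(abs(r - c) for r, c in zip(reference, candidate))
    dot = sum(r * c for r, c in zip(reference, candidate))
    norm = math.sqrt(sum(r * r for r in reference)) * math.sqrt(sum(c * c for c in candidate))
    cosine = dot / norm if norm > 0 else float("nan")
    return max_abs, cosine


# Baseline: how much does rounding confidence-like values in (0, 1) to FP16 change them?
confidences = [i / 1000 for i in range(1, 1000)]
rounded = [to_fp16(c) for c in confidences]
max_err, cos = compare_outputs(confidences, rounded)
print(f"max abs error from FP16 rounding: {max_err:.2e}, cosine similarity: {cos:.6f}")
```

This only measures rounding of the final values; rounding errors accumulated through the network's layers in FP16 can be larger, so treat it as a lower bound. If the stages agree closely up to the ONNX export but diverge after compilation to .blob, that narrows down where to look.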

How would you suggest I debug this further? Is it reasonable to believe that something is happening in the conversion from .pt to .blob, and could I counteract it? If you want, I can also send you my own trained model and an example image, but I'd rather not share them publicly.