
Hi DiproChakraborty, I used YOLOv5s.


Hi,

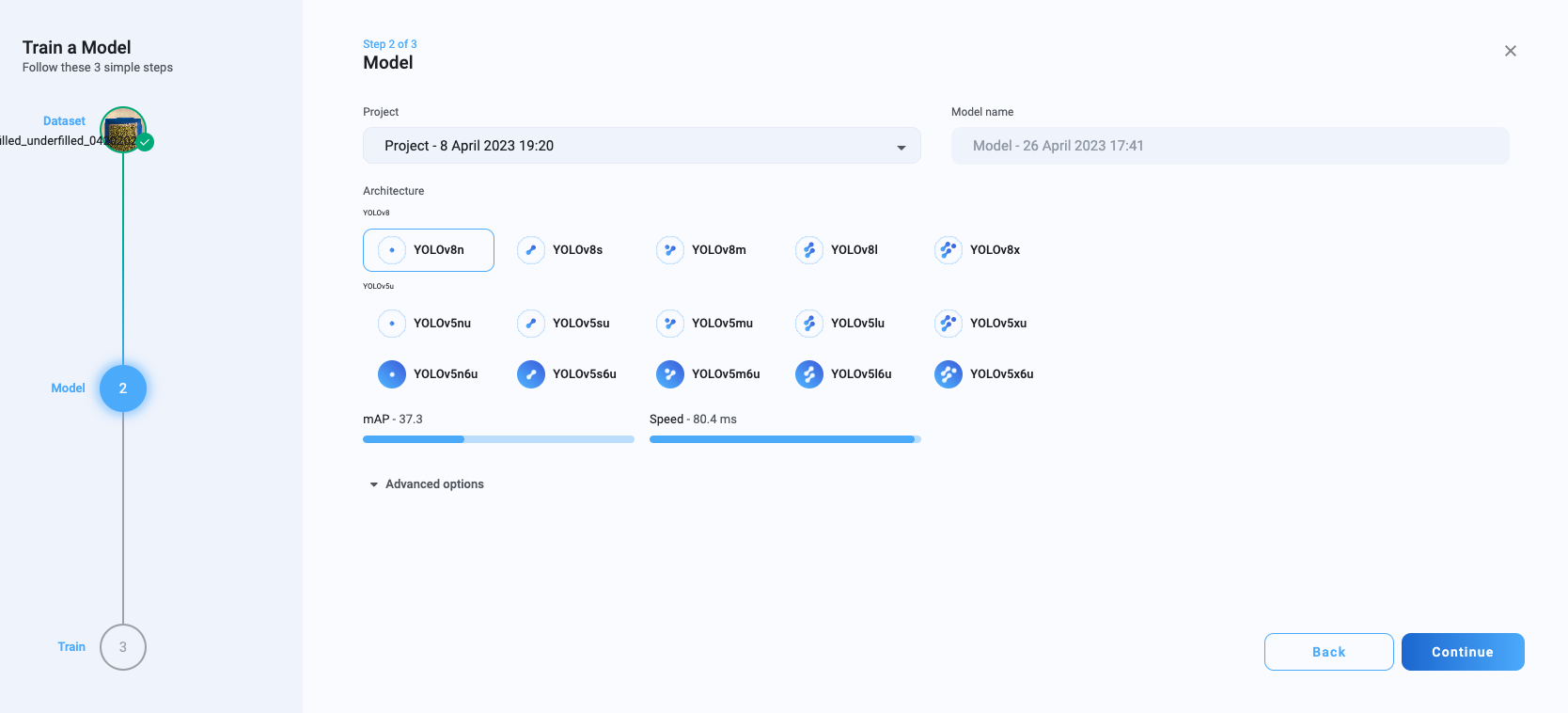

How did you choose or find YOLOv5s in this set? When I add a custom dataset, it only lets me choose between the previously attached models for training on a custom dataset. Is it still possible for you to use YOLOv5s on a custom dataset? I'm wondering if something changed on Ultralytics Hub or if there are specific permissions for you to access YOLOv5s for custom dataset training? Are you able to see the other models as well?

As you can see below, I don't see an option to choose YOLOv5s?

Hi DiproChakraborty, it looks like they updated the Ultralytics Hub. Previously I was able to choose that model, but not anymore. Perhaps you could try one of the other models.

Hi,

Correct, the Ultralytics Hub seems to have been updated. None of the YOLOv5 models can be converted with the DepthAI Tool now. The only model that the DepthAI Tool appears to convert is "YOLOv8n". However, when you tried it earlier, that .pt file appeared to be in the wrong format: the converter could not find a reader for model format xml and failed to read the model. So currently, it seems that no YOLO model from Ultralytics Hub can make it all the way to a deployable OAK-D model. Is there any way this can be corrected?

Is there any way to adjust the blob converter or OpenVINO converter en route to the MyriadX blob converter? I can see that these models work in PyTorch, but they cannot be converted to be deployed to the OAK-D currently.

Alternatively, is there a way to correct the converter or allow the YOLOv8 model to work on the OAK-D?

As another option, is there a way to allow any of the Ultralytics Hub models YOLOv5 (or v8) to convert with the DepthAI Tool?

Hi DiproChakraborty ,

Yes, you can also go with the "manual path" - using OpenVINO tools. Docs here: https://docs.luxonis.com/en/latest/pages/model_conversion/

I hope this helps.

Hi @erik ,

I tried using the online Blobconverter app to convert the Ultralytics HUB model 'YOLOv5su'. It was able to return a YOLOv5su blob. Does this blob work for you for spatial detection?

YOLOv5su Blob: https://drive.google.com/drive/folders/1tU07tntcYj6dhEAo6f78LZZWbT-GvXUQ?usp=share_link

DiproChakraborty Could you follow the tutorial here? https://github.com/luxonis/depthai-experiments/tree/master/gen2-yolo/device-decoding#yolo-with-depthai-sdk-tutorial

Hi @erik ,

The tutorial seems to ask for multiple files in addition to the .blob file. When I submit an ONNX model to BlobConverter, I only get the .blob file back. As a reminder, I used Ultralytics Hub to train a YOLOv5su model, then downloaded the ONNX weights from Ultralytics Hub. I then converted the ONNX weights to a blob using http://blobconverter.luxonis.com/ . I could not use DepthAI Tools to convert the trained PyTorch model, because it does not convert YOLOv5su models correctly and returns an error.

When I try following the tutorial and running the model, I get an error as follows:

python main_api.py -m dome_handle_yv5su_try2.blob

returns the following error:

{'classes': 1, 'coordinates': 4, 'anchors': [1], 'anchor_masks': {}, 'iou_threshold': 0.5, 'confidence_threshold': 0.5}

[18443010E157E40F00] [20.4] [2.005] [DetectionNetwork(1)] [error] Mask is not defined for output layer with width '3549'. Define at pipeline build time using: 'setAnchorMasks' for 'side3549'.

Although I have the PyTorch/ONNX model, I'm not sure whether there is a way to convert it into a usable OAK-D model. Is there something further I need to modify in the model or the JSON to allow it to run? I do not need any anchors for this YOLO model.
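One possible reading of the `3549` in that error, offered as an assumption rather than something confirmed in this thread: if the model was exported with a 416x416 input and the usual YOLO strides of 8, 16 and 32, the three detection grids are 52x52, 26x26 and 13x13, and their cell counts add up to exactly 3549. That would suggest the ONNX file has a single flattened output, which the on-device decoder then treats as one grid side. A minimal sketch of the arithmetic (the function name is hypothetical):

```python
def flattened_predictions(input_size, strides=(8, 16, 32)):
    """Total number of grid cells across all detection scales (square input)."""
    return sum((input_size // s) ** 2 for s in strides)

# Assumed 416x416 input: 52*52 + 26*26 + 13*13
print(flattened_predictions(416))  # 3549
```

If the number matches for the input size actually used at export time, the blob probably came from a plain ONNX export rather than from an export prepared for on-device decoding.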

Hi DiproChakraborty ,

For YOLO models you need to supply anchors and masks, and since you didn't supply these, it throws an error. Using tools.luxonis.com will generate the correct anchors/masks from the .pt model.

Thanks, Erik
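To illustrate what "supplying masks" means, here is a rough sketch that checks a metadata dictionary shaped like the one printed in the error report. It assumes a 416x416 input with strides 8, 16 and 32, and it uses the default YOLOv5 COCO anchors purely as an example of an anchor-based configuration; the helper name is hypothetical and the values are not taken from the model discussed here.

```python
def missing_mask_sides(input_size, metadata, strides=(8, 16, 32)):
    """Return the 'sideN' keys the config is expected to define but does not."""
    expected = [f"side{input_size // s}" for s in strides]
    masks = metadata.get("anchor_masks", {})
    return [side for side in expected if side not in masks]

# Metadata as printed in the error report: no masks defined.
broken = {"classes": 1, "coordinates": 4, "anchors": [1], "anchor_masks": {},
          "iou_threshold": 0.5, "confidence_threshold": 0.5}

# Illustrative anchor-based variant (default YOLOv5 COCO anchors, assumed).
fixed = dict(
    broken,
    anchors=[10, 13, 16, 30, 33, 23, 30, 61, 62, 45, 59, 119,
             116, 90, 156, 198, 373, 326],
    anchor_masks={"side52": [0, 1, 2], "side26": [3, 4, 5], "side13": [6, 7, 8]},
)

print(missing_mask_sides(416, broken))  # ['side52', 'side26', 'side13']
print(missing_mask_sides(416, fixed))   # []
```

In practice the JSON generated by tools.luxonis.com should be preferred over hand-written values, since it is produced together with the blob.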

Hi @DiproChakraborty ,

After checking, I was able to get YOLOv8n running on the device. Steps:

--config arg

After some testing, with the YOLOv8n we got:

- 640x352: 19 FPS
- 512x288: 27 FPS

I chose these input sizes to be as close to a 16:9 aspect ratio (like the camera) as possible.

I hope this helps.
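As a quick check of the two input sizes quoted above, the sketch below compares each one with a 16:9 camera frame and verifies that both dimensions are multiples of 32. The stride of 32 is an assumption (it is the usual largest stride for YOLO models, not something stated in this thread), and the helper name is hypothetical.

```python
def describe(width, height, stride=32):
    """Aspect ratio of an input size and whether it fits the assumed stride."""
    return {
        "ratio": round(width / height, 3),
        "multiple_of_stride": width % stride == 0 and height % stride == 0,
    }

print("16:9 =", round(16 / 9, 3))
for w, h in [(640, 352), (512, 288)]:
    print(f"{w}x{h}", describe(w, h))
```

512x288 is exactly 16:9, while 640x352 is slightly wider because 640x360 would not be a multiple of 32.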

Hi @erik ,

Yes, I am also able to get YOLOv8n running on the device! I was also able to configure the spatial detection YOLO script to return X Y Z values of the YOLOv8n object detection objects as well.

Thank you for all your help!


I am running into some issues again running this pipeline. I tried training a new YOLOv8 model using Ultralytics Hub, exporting the PyTorch model, and trying to use the DepthAI YOLO Export Tool. Unfortunately, now I am getting an Error at this stage and not being allowed to export. However, previously, I was able to get the model to convert and return a MyriadX blob. I can share the new YOLOv8 PyTorch model here to debug further -- I suspect something changed on the Ultralytics Hub / Luxonis DepthAI Tools converter process that is breaking the conversion pipeline downstream now. When I train a new YOLOv8n model on the old dataset that previously produced a working YOLOv8n model, this new model also returns a DepthAI Tools error.

Hopefully this can be resolved quickly, as this is breaking a key part of the pipeline. Please see the attached model in case it provides insight. If there is a way to use Ultralytics Hub and still end up with a working YOLO model, that would be appreciated.

DiproChakraborty Unfortunately this is a known issue, and we are working on a fix: luxonis/tools#56