Hi @jesse31,

The main reason was eye safety, and making sure that is done correctly. Without lasers, it's faster to get products out safely.

We are working on a variant with IR-capable cameras and IR dot projection, see here:

https://github.com/luxonis/depthai-hardware/issues/20

Backing up a bit though, the main purpose of DepthAI is not to be yet another depth camera, but to provide spatial AI.

As far as we understand, we make the only camera in the world that does spatial sensing and AI on the same device.

The number 1 confusion about DepthAI is that it is yet another depth-sensing camera... that is not its purpose.

More information on that is here:

https://discuss.luxonis.com/d/48-summary-of-stereo-mapping-cameras

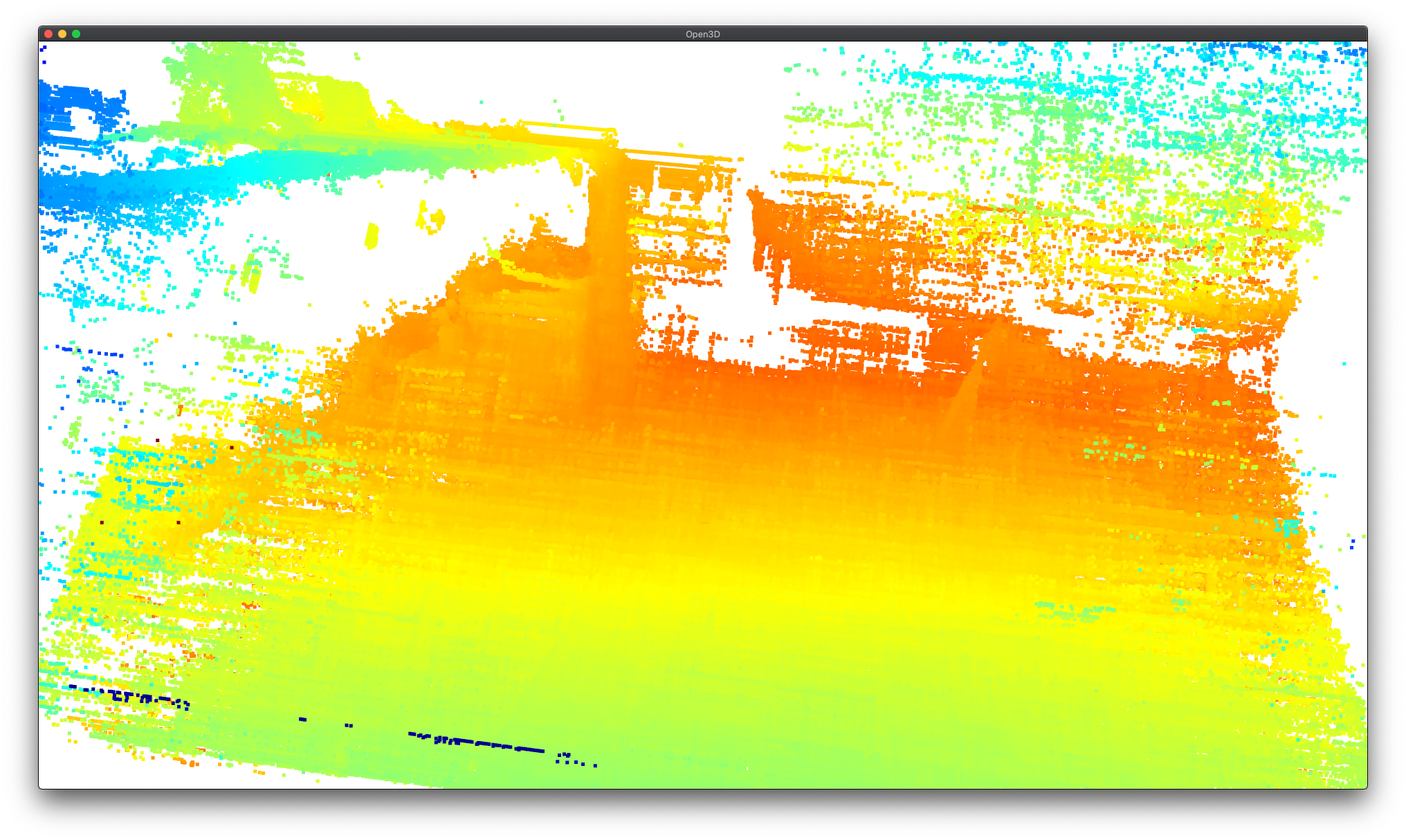

That said, for a system that uses no active illumination or laser projection, the depth quality is not bad:

This is with no filtering whatsoever (with Median fully disabled, for example). The reference code to produce these is here.

Thoughts?

Thanks,

Brandon