Hi,

I’m running a YOLOv6 object-detection model, trained on custom data, on an OAK-D PoE camera (12 MP) via the DepthAI API.

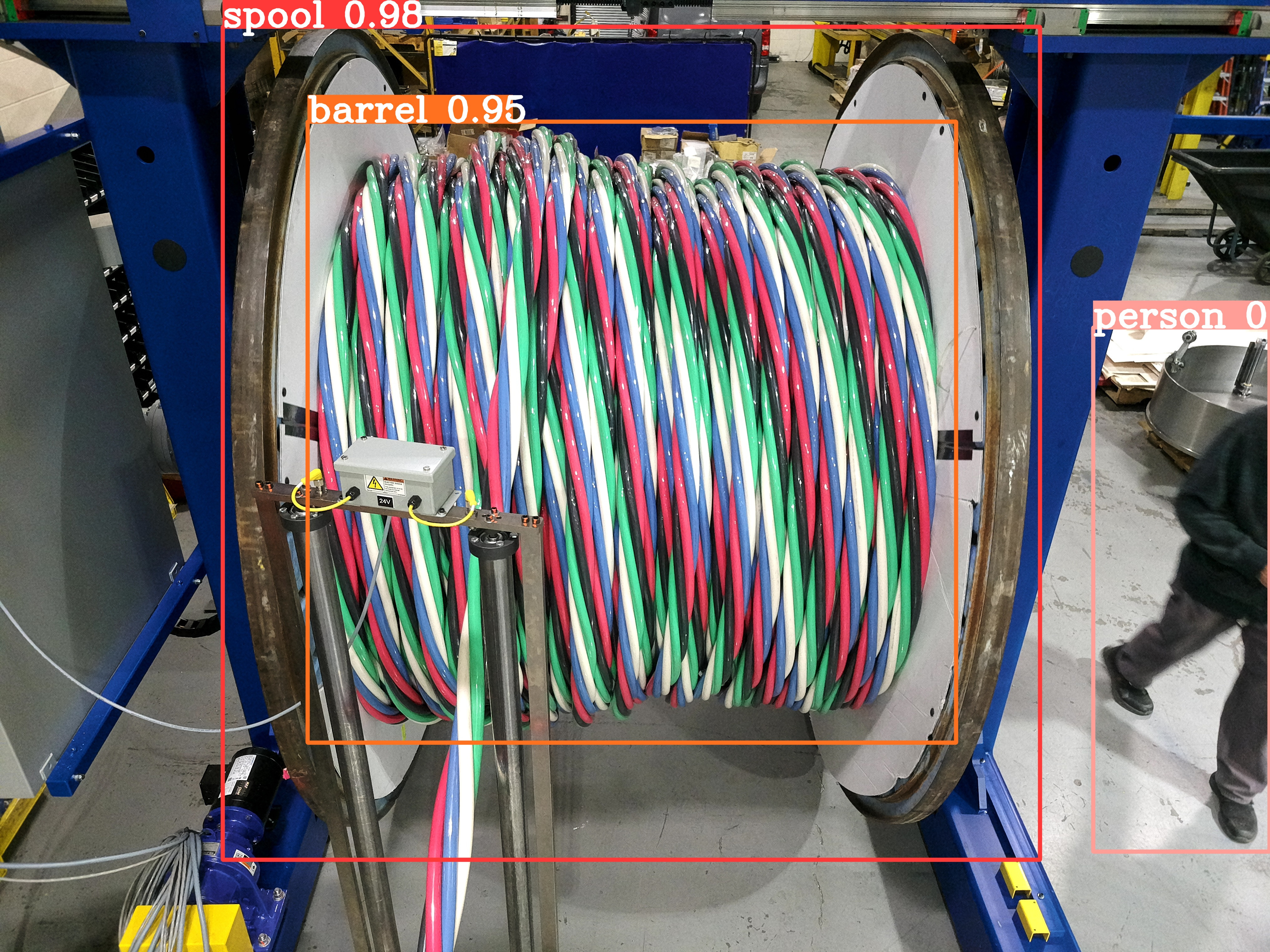

The model detects three classes: spool, barrel, and person.

Inference works perfectly on PC (ONNX / PyTorch); all objects are detected with high confidence, as shown below:

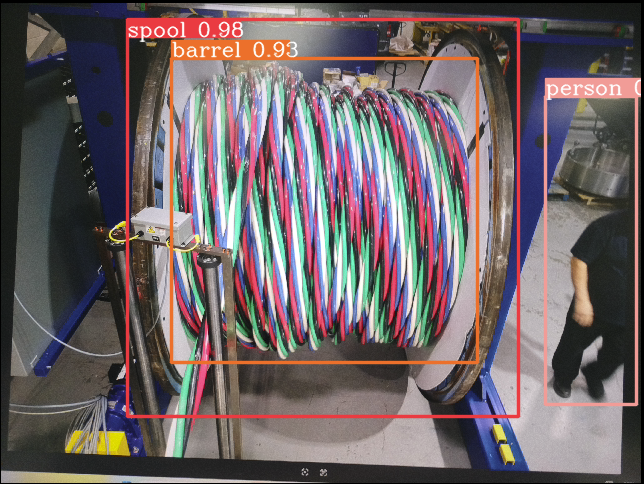

However, on the OAK device, “barrel” detections are often missing, while “spool” and “person” still appear normally. I also saved the passthrough images from the device and ran inference on them on my PC with the same PyTorch model, and the objects are still detected correctly, as shown below:

It only happens with the kind of spool shown in the image; with other spools, detection on the camera works well. I have even fine-tuned the model further with almost 2k new images of this spool, but I still see the same behavior.
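Because the device drops everything below `setConfidenceThreshold(0.4)` in the script, one way to tell whether barrels are scored just under the threshold on the OAK (rather than not at all) is to lower that threshold temporarily and log the best score per class. A minimal sketch, assuming each detection exposes `.label` and `.confidence` as `dai.ImgDetection` does; `Det`, `best_confidence_per_class` and `frames_with_class` are hypothetical helpers, and the label order is assumed:

```python
from collections import namedtuple

# Hypothetical stand-in for dai.ImgDetection; only .label and .confidence are used.
Det = namedtuple("Det", ["label", "confidence"])


def best_confidence_per_class(detections, labels):
    """Return {class name: highest confidence} for one frame's detections."""
    best = {}
    for d in detections:
        # Fall back to the numeric id if the label list is shorter than expected.
        name = labels[d.label] if 0 <= d.label < len(labels) else str(d.label)
        best[name] = max(best.get(name, 0.0), d.confidence)
    return best


def frames_with_class(frames, labels):
    """Count, per class, how many frames contain at least one detection of it."""
    counts = {name: 0 for name in labels}
    for detections in frames:
        for name in best_confidence_per_class(detections, labels):
            counts[name] = counts.get(name, 0) + 1
    return counts


if __name__ == "__main__":
    labels = ["spool", "barrel", "person"]  # assumed class order
    frames = [
        [Det(0, 0.91), Det(1, 0.22), Det(1, 0.35)],
        [Det(2, 0.80)],
        [],
    ]
    print(best_confidence_per_class(frames[0], labels))  # {'spool': 0.91, 'barrel': 0.35}
    print(frames_with_class(frames, labels))  # {'spool': 1, 'barrel': 1, 'person': 1}
```

On the device, the same helpers could be called on `sync_detections` for each synced group; running the PC model on the saved passthrough frames and comparing the two summaries would show whether barrel scores drop on the OAK or disappear entirely.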

For reference, here is my DepthAI code for the OAK:

```python
from pathlib import Path
import sys
import cv2
import depthai as dai
import numpy as np
import time
import argparse
import json
import blobconverter
from datetime import timedelta


def getDisparityFrame(frame, cvColorMap):
    maxDisp = stereo.initialConfig.getMaxDisparity()
    disp = (frame * (255.0 / maxDisp)).astype(np.uint8)
    disp = cv2.applyColorMap(disp, cvColorMap)
    return disp


# parse arguments
parser = argparse.ArgumentParser()
parser.add_argument("-m", "--model", help="Provide model name or model path for inference",
                    default="C:/Users/ymalhotra/Downloads/result (7)/best_ckpt_openvino_2022.1_3shave.blob", type=str)
parser.add_argument("-c", "--config", help="Provide config path for inference",
                    default="C:/Users/ymalhotra/Downloads/result (7)/best_ckpt.json", type=str)
args = parser.parse_args()

# parse config
configPath = Path(args.config)
if not configPath.exists():
    raise ValueError("Path {} does not exist!".format(configPath))

with configPath.open() as f:
    config = json.load(f)
nnConfig = config.get("nn_config", {})

# parse input shape
if "input_size" in nnConfig:
    W, H = tuple(map(int, nnConfig.get("input_size").split('x')))

# extract metadata
metadata = nnConfig.get("NN_specific_metadata", {})
classes = metadata.get("classes", {})
coordinates = metadata.get("coordinates", {})
anchors = metadata.get("anchors", {})
anchorMasks = metadata.get("anchor_masks", {})
iouThreshold = 0.45  # metadata.get("iou_threshold", {})
confidenceThreshold = 0.4  # metadata.get("confidence_threshold", {})
# print(metadata)

# parse labels
nnMappings = config.get("mappings", {})
labels = nnMappings.get("labels", {})
# print("Labels: ", labels)

# get model path
nnPath = args.model
if not Path(nnPath).exists():
    print("No blob found at {}. Looking into DepthAI model zoo.".format(nnPath))
    nnPath = str(blobconverter.from_zoo(args.model, shaves=6, zoo_type="depthai", use_cache=True))

# sync outputs
syncNN = True

# Depth and Disparity
outRectified = False  # Output and display rectified streams
lrcheck = True  # Better handling for occlusions
extended = False  # Closer-in minimum depth, disparity range is doubled
subpixel = True  # Better accuracy for longer distance, fractional disparity 32-levels
depth = True  # Display depth frames
swap_left_right = False  # Swap left right frames
alpha = None
resolution = dai.MonoCameraProperties.SensorResolution.THE_800_P
median = dai.StereoDepthProperties.MedianFilter.KERNEL_7x7  # 7x7

# Create pipeline
pipeline = dai.Pipeline()

# Define sources and outputs
camRgb = pipeline.create(dai.node.ColorCamera)
detectionNetwork = pipeline.create(dai.node.YoloDetectionNetwork)
# By Yishu
manip = pipeline.create(dai.node.ImageManip)

# Depth and Disparity
camLeft = pipeline.create(dai.node.MonoCamera)
camRight = pipeline.create(dai.node.MonoCamera)
stereo = pipeline.create(dai.node.StereoDepth)
xoutLeft = pipeline.create(dai.node.XLinkOut)
xoutRight = pipeline.create(dai.node.XLinkOut)
xoutLeft.setStreamName("left")
xoutRight.setStreamName("right")

# Sync node
sync = pipeline.create(dai.node.Sync)
xoutGrp = pipeline.create(dai.node.XLinkOut)
xoutGrp.setStreamName("xout")

# Sync node properties
sync.setSyncThreshold(timedelta(milliseconds=100))

# Properties
camRgb.setPreviewSize(W, H)
camRgb.setResolution(dai.ColorCameraProperties.SensorResolution.THE_12_MP)  # THE_1080_P
camRgb.setInterleaved(False)
camRgb.setColorOrder(dai.ColorCameraProperties.ColorOrder.RGB)
camRgb.setFps(4)  # 40

# By Yishu
# manip.initialConfig.setKeepAspectRatio(False)  # True
# manip.initialConfig.setResize(W, H)
manip.initialConfig.setResizeThumbnail(W, H)
manip.initialConfig.setKeepAspectRatio(True)
# Feed the NN planar BGR instead of RGB - Yishu
manip.initialConfig.setFrameType(dai.ImgFrame.Type.BGR888p)  # dai.ImgFrame.Type.RGB888p
# setMaxOutputFrameSize to avoid the "image bigger than max frame size" error - Yishu
manip.setMaxOutputFrameSize(1228800)

# By Yishu
detectionNetwork.input.setQueueSize(10)

# Network specific settings
detectionNetwork.setConfidenceThreshold(confidenceThreshold)
detectionNetwork.setNumClasses(classes)
detectionNetwork.setCoordinateSize(coordinates)
detectionNetwork.setAnchors(anchors)
detectionNetwork.setAnchorMasks(anchorMasks)
detectionNetwork.setIouThreshold(iouThreshold)
detectionNetwork.setBlobPath(nnPath)
detectionNetwork.setNumInferenceThreads(2)
detectionNetwork.input.setBlocking(False)

# Depth and Disparity Properties
if swap_left_right:
    camLeft.setCamera("right")
    camRight.setCamera("left")
else:
    camLeft.setCamera("left")
    camRight.setCamera("right")

for monoCam in (camLeft, camRight):  # Common config
    monoCam.setResolution(resolution)
    monoCam.setFps(2)

stereo.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.HIGH_DENSITY)
stereo.initialConfig.setMedianFilter(median)  # KERNEL_7x7 default
stereo.setRectifyEdgeFillColor(0)  # Black, to better see the cutout
stereo.setLeftRightCheck(lrcheck)
stereo.setExtendedDisparity(extended)
stereo.setSubpixel(subpixel)
if alpha is not None:
    stereo.setAlphaScaling(alpha)

config = stereo.initialConfig.get()
config.postProcessing.brightnessFilter.minBrightness = 0
stereo.initialConfig.set(config)

# Alignment with RGB - Ahmad
stereo.setDepthAlign(dai.CameraBoardSocket.RGB)
# 4056x3040
stereo.setOutputSize(1248, 936)

# Linking
camRgb.isp.link(manip.inputImage)
manip.out.link(detectionNetwork.input)
stereo.syncedLeft.link(xoutLeft.input)
stereo.syncedRight.link(xoutRight.input)

# Depth and Disparity Linking
camLeft.out.link(stereo.left)
camRight.out.link(stereo.right)

# Syncing NN, ISP, Disparity, Depth and Passthrough
detectionNetwork.out.link(sync.inputs["NN_Sync"])
camRgb.isp.link(sync.inputs["ISP_Sync"])
stereo.disparity.link(sync.inputs["DISPARITY_Sync"])
stereo.depth.link(sync.inputs["DEPTH_Sync"])
detectionNetwork.passthrough.link(sync.inputs["Passthrough"])
sync.inputs["NN_Sync"].setBlocking(False)
sync.inputs["ISP_Sync"].setBlocking(False)
sync.inputs["DISPARITY_Sync"].setBlocking(False)
sync.inputs["DEPTH_Sync"].setBlocking(False)
sync.inputs["Passthrough"].setBlocking(False)
sync.out.link(xoutGrp.input)
xoutGrp.input.setBlocking(False)

streams = ["left", "right", "ISP", "nn"]  # Change by ahmad
streams.append("disparity")
if depth:
    streams.append("depth")

cvColorMap = cv2.applyColorMap(np.arange(256, dtype=np.uint8), cv2.COLORMAP_JET)
cvColorMap[0] = [0, 0, 0]

# PayOff Camera Static IP address
device_info = dai.DeviceInfo("192.168.220.10")

try:
    # Connect to device and start pipeline
    with dai.Device(pipeline, device_info) as device:
        # Output queues will be used to get the rgb frames and nn data from the outputs defined above
        qSync = device.getOutputQueue("xout", 4, False)
        msgGrp = None
        syncframe = None
        sync_detections = None
        sync_disparity = None
        sync_depth = None
        qLeft = device.getOutputQueue("left", 1, blocking=False)
        qRight = device.getOutputQueue("right", 1, blocking=False)
        frame = None
        passthrough = None
        Disparityframe = None
        Depthframe = None
        LeftFrame = None
        RightFrame = None
        detections = []
        startTime = time.monotonic()
        counter = 0
        color2 = (255, 255, 255)

        # nn data, being the bounding box locations, are in <0..1> range - they need to be normalized with frame width/height
        def frameNorm(frame, bbox):
            normVals = np.full(len(bbox), frame.shape[0])
            normVals[::2] = frame.shape[1]
            return (np.clip(np.array(bbox), 0, 1) * normVals).astype(int)

        def displayFrame(name, frame, detections, i):
            color_spool = (255, 0, 0)
            color_person = (0, 255, 0)
            color_barrel = (255, 255, 0)
            color = ''
            color_blank_img = (255, 255, 255)
            blank_image = np.zeros((3040, 4056, 1), np.uint8)
            text_output_path = "D:\\Cameras_Live\\PayOff\\" + "Label{}".format(j) + ".txt"
            print("Detections: ", [[d.label, d.confidence * 100, d.xmin, d.ymin, d.xmax, d.ymax] for d in detections])
            # text_file = open(text_output_path, 'w')
            for detection in detections:
                bbox = frameNorm(frame, (detection.xmin, detection.ymin, detection.xmax, detection.ymax))
                if labels[detection.label] == 'Person':
                    color = color_person
                elif labels[detection.label] == 'Barrel':
                    color = color_barrel
                else:
                    color = color_spool
                cv2.putText(frame, labels[detection.label], (bbox[0] + 10, bbox[1] + 20), cv2.FONT_HERSHEY_TRIPLEX, 0.5, (255, 255, 255))
                cv2.putText(frame, f"{int(detection.confidence * 100)}%", (bbox[0] + 10, bbox[1] + 40), cv2.FONT_HERSHEY_TRIPLEX, 0.5, (255, 255, 255))
                cv2.rectangle(frame, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color, 2)
                cv2.rectangle(blank_image, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color_blank_img, 50)
                # Write detections to a text file
                # text_file.write(f'{labels[detection.label]} {bbox[0]} {bbox[1]} {bbox[2]} {bbox[3]} {detection.confidence}\n')  # Add a separator between entries
            # text_file.close()

            # Show the frame
            # cv2.namedWindow("Model Inference", cv2.WND_PROP_FULLSCREEN)
            cv2.namedWindow(name, cv2.WINDOW_NORMAL)
            # cv2.setWindowProperty(name, cv2.WND_PROP_FULLSCREEN, cv2.WINDOW_FULLSCREEN)
            cv2.imshow(name, frame)
            # cv2.imwrite("D:\\Cameras_Live\\PayOff\\Mask{}.bmp".format(i), blank_image)
            cv2.imwrite("C:\\Cameras_Live\\PayOff\\Labeled_Image{}.bmp".format(i), frame)

        j = 1
        # Create the folder that will contain the captures
        folder_name = "C:\\Cameras_Live\\PayOff"
        path = Path(folder_name)

        while True:
            # Create the folder that will contain the captures
            path.mkdir(parents=True, exist_ok=True)
            # Delay to match DA reading fps
            # time.sleep(4)

            # By Yishu
            msgGrp = qSync.get()
            inLeft = qLeft.tryGet()
            inRight = qRight.tryGet()

            if msgGrp is not None:
                # print('msgGrp: ', msgGrp)
                for name, msg in msgGrp:
                    if name == "ISP_Sync":
                        if msg is not None:
                            print("Exposure: ", msg.getExposureTime().total_seconds())
                            print("Sensitivity: ", msg.getSensitivity())
                            syncframe = msg.getCvFrame()
                            # cv2.imwrite("D:\\Cameras_Live\\PayOff\\Image{}.bmp".format(j), syncframe)
                            nn_fps = counter / (time.monotonic() - startTime)
                            print("nn_SYNC_fps: ", nn_fps)
                            # print("syncframe: ")
                    if name == "Passthrough":
                        if msg is not None:
                            passthrough = msg.getCvFrame()
                            cv2.imwrite("C:\\Cameras_Live\\PayOff\\Passthrough{}.bmp".format(j), passthrough)
                    if name == "NN_Sync":
                        if msg is not None:
                            # print("Detections not None")
                            sync_detections = msg.detections
                            counter += 1
                            # print("sync_detections: ")
                    if name == "DISPARITY_Sync":
                        if msg is not None:
                            sync_disparity = msg.getCvFrame()
                            sync_disparity = getDisparityFrame(sync_disparity, cvColorMap)
                            # cv2.imwrite("D:\\Cameras_Live\\PayOff\\Disparity{}.bmp".format(j), sync_disparity)
                            # print("sync_disparity: ")
                    if name == "DEPTH_Sync":
                        if msg is not None:
                            sync_depth = msg.getCvFrame()
                            sync_depth = sync_depth.astype(np.uint16)
                            # cv2.imwrite("D:\\Cameras_Live\\PayOff\\Depth{}.png".format(j), sync_depth)
                            # print("sync_depth: ")

                if syncframe is not None and sync_detections is not None:
                    displayFrame("ISP_Sync", syncframe, sync_detections, j)

            if inLeft is not None:
                LeftFrame = inLeft.getCvFrame()
                # print('LEFT')
                # cv2.imwrite("D:\\Cameras_Live\\PayOff\\Left{}.bmp".format(j), LeftFrame)

            if inRight is not None:
                # print('RIGHT')
                RightFrame = inRight.getCvFrame()
                # cv2.imwrite("D:\\Cameras_Live\\PayOff\\Right{}.bmp".format(j), RightFrame)

            if cv2.waitKey(1) == ord('q'):
                break

            j = j + 1
            if j == 100:
                j = 1

except Exception as e:
    print(f"An error occurred: {e}")
    sys.exit(0)
```
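In this script the NN input is produced by `manip.initialConfig.setResizeThumbnail(W, H)`, which fits the 4056×3040 ISP frame into the W×H input while keeping the aspect ratio and padding the remainder, whereas `frameNorm` scales the normalized boxes by the full ISP frame size. A minimal sketch of that geometry, assuming the scaled image is centered in the padded input (equal bars on both sides) and W = H = 640 for the example values (the real size comes from `input_size` in the JSON); `thumbnail_geometry` and `nn_box_to_isp` are hypothetical helpers, not DepthAI functions:

```python
def thumbnail_geometry(src_w, src_h, dst_w, dst_h):
    """Scale and padding for an aspect-preserving fit of src into dst.

    Assumes the scaled image is centered, i.e. the padding is split equally
    between both sides (an assumption about ImageManip's thumbnail mode).
    """
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_x = (dst_w - new_w) / 2
    pad_y = (dst_h - new_h) / 2
    return scale, pad_x, pad_y


def nn_box_to_isp(box, src_w, src_h, dst_w, dst_h):
    """Map a normalized (xmin, ymin, xmax, ymax) box on the NN input
    back to pixel coordinates on the full-resolution ISP frame."""
    scale, pad_x, pad_y = thumbnail_geometry(src_w, src_h, dst_w, dst_h)
    xmin, ymin, xmax, ymax = box

    def to_src(v, dst_size, pad, src_size):
        # normalized -> NN pixels -> remove padding -> undo scaling -> clamp to frame
        return round(min(max((v * dst_size - pad) / scale, 0), src_size))

    return (
        to_src(xmin, dst_w, pad_x, src_w),
        to_src(ymin, dst_h, pad_y, src_h),
        to_src(xmax, dst_w, pad_x, src_w),
        to_src(ymax, dst_h, pad_y, src_h),
    )


if __name__ == "__main__":
    # 12 MP ISP frame into an assumed 640x640 NN input
    print(thumbnail_geometry(4056, 3040, 640, 640)[1:])  # (0.0, 80.0)
    print(nn_box_to_isp((0.25, 0.125, 0.75, 0.5), 4056, 3040, 640, 640))  # (1014, 0, 3042, 1521)
```

For comparison, scaling the same box directly by 4056×3040, as `frameNorm` does, gives (1014, 380, 3042, 1520). This only affects where boxes are drawn on the ISP frame; it does not by itself explain detections that are missing altogether.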

Thanks,

Yishu